You are wrong. The physics for the radars you describe and those for automotive radars are the same, but the implementations for automotive use are not as you imagine. For proof, see the following illustration from here

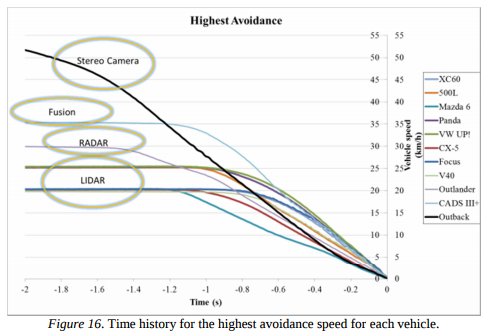

http://cdn.euroncap.com/media/1384/...13-0-a837f165-3a9c-4a94-aefb-1171fbf21213.pdf . These vehicles all responded to a stopped object ahead, including those with radar. Also, my own experience since the 1990s with automotive radar is that no automotive radar system ignores stationary objects in the expected path. To reduce false alarms, many of them wait after first detection for more confidence the object is not overhead or outside the lane, but even those react before collision. That's what defines today's "collision imminent braking" which very often uses radar. Also, here in the U.S., the Insurance Institute for Highway Safety tests vehicles with automatic emergency braking, including for braking for a stopped vehicle ahead, and many of those systems are radar-based -- and their tests show they work.

Word.

The 'aircraft pilot' above notes notch filtering, but for some reason his knowledge doesn't extend to point clouds. (At the very least he should have seen the Black Mirror episode "Metalhead".)

With version 8, Tesla added the updated Bosch drivers, which greatly enhance the radar's capabilities. It sees things in a 3D representation, and it also compares successive images, so if a beer can's concave bottom reflects strongly in one image, the reflection is far weaker in the next.
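For anyone who wants the "compare successive images" idea in concrete terms, here is a rough sketch. To be clear, this is not Tesla's or Bosch's actual algorithm, which I have no access to. The function name, threshold, and window sizes are all made up for illustration. It only shows the general principle: a return has to stay strong across several frames before it counts.

```python
from collections import deque


def persistent_detection(returns, threshold=0.5, window=5, min_hits=4):
    """Return one flag per frame: True once the return strength has exceeded
    `threshold` in at least `min_hits` of the last `window` frames.

    All parameter values are hypothetical, chosen only for illustration.
    """
    recent = deque(maxlen=window)  # rolling record of strong/weak frames
    flags = []
    for strength in returns:
        recent.append(strength > threshold)
        flags.append(sum(recent) >= min_hits)
    return flags


# A one-frame glint (say, a can bottom) versus a steady return (a stopped car).
glint = [0.1, 0.9, 0.1, 0.1, 0.1, 0.1]
steady = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9]

print(any(persistent_detection(glint)))   # False: one strong frame is not enough
print(any(persistent_detection(steady)))  # True: confirmed on the fourth frame
```

The trade-off is the usual one: a longer window rejects more glints but delays the reaction to a real obstacle.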

Sure, the radar has to ignore the world rushing toward it. But it also sees what is directly in front of it, and that's the main thing that matters. What is directly in front of you. It is beyond me why AEB isn't better. Some say that it can't know if we are about to swerve, but if we do (or brake), it immediately disengages automatic control. Jason's accident wasn't preventable with version 7, but these recent accidents should have been with v8.

And as to the poster above who referenced "CID" -- that is the Tegra processor VCM daughtercard, which handles all in-car functions and mediates between the Mobileye (AP1) and CAN buses. It's what we 'root'.

Don't make excuses for not having better AEB. Other car makers manage to do it. This is something we need to have.

If AEB causes the car behind to hit you, I'm sorry that life isn't a bucket of roses. The law makes that their fault.

Passive optical sensors (including cameras) don't directly measure speed or distance. Spaced sensors (e.g. stereo cameras) can compare results and estimate distance if not too far away, like our eyes allow our brains to do. They deduce speed by observing how quickly the size of an object appears to be growing or shrinking.

Right again. Tesla AI looks for things like the tail lights in front of you growing farther apart (indicating, of course, that you're getting closer, not that the car in front of you is growing to gigantic size). We humans don't remember learning this a long time ago, but we did.
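The tail-light trick can be put into numbers. Below is a minimal sketch of the standard "time to contact" estimate: apparent size divided by its rate of growth. It assumes a simple pinhole camera and a constant closing speed. The function name and the example numbers (1.5 m light spacing, 1000 px focal length) are my own, not anything from Tesla. The nice part is that the real width and the focal length cancel out, so the camera never needs to know the actual distance.

```python
def time_to_contact(sep_prev, sep_curr, dt):
    """Estimate seconds until contact from two tail-light separations
    (in pixels) measured `dt` seconds apart.

    Uses tau = separation / (rate of growth of separation). Returns
    infinity if the separation is not growing (we are not closing in).
    """
    growth_rate = (sep_curr - sep_prev) / dt  # pixels per second
    if growth_rate <= 0:
        return float("inf")
    return sep_curr / growth_rate


# Hypothetical scene: lights 1.5 m apart, focal length 1000 px,
# range shrinking from 30 m to 29 m in 0.1 s (closing at 10 m/s).
focal_px, width_m = 1000.0, 1.5
sep_prev = focal_px * width_m / 30.0  # 50.0 px
sep_curr = focal_px * width_m / 29.0  # about 51.7 px

ttc = time_to_contact(sep_prev, sep_curr, dt=0.1)
print(round(ttc, 2))  # about 3.0 s; the true value is 29 m / 10 m/s = 2.9 s
```

The small error comes from differencing two frames instead of taking a true derivative, and in practice pixel noise makes the estimate much rougher at long range, where the separation changes by only a fraction of a pixel per frame.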

BTW, there is no doppler notch. There is the aircraft notch maneuver, which is a whole nother pile of bananas. Now, notch filtering refers to allowing a particular signal to the exclusion of all others.