This will be a somewhat lengthy and technical update on what I found in the snapshot images; it reads more like a blog post. I’m posting it partly because it shows some information about the cameras, and partly because it corrects some things I said or showed earlier. I hope it won’t bore you too much (and sorry for my English; I hope you won’t struggle reading it as much as I did writing it).

I looked into the “sunset” sets previously, but the images I got from those light conditions didn’t look right, and it bothered me. I tried to find helpful information in the camera specification, assuming that the sensor is an Aptina AR0132AT (as mentioned earlier in this thread; what can be seen in the data seems to confirm that). At first, however, the documentation did not fully add up with what I saw, so while looking for hints I ended up decoding the camera chip register data sent as the first two lines of each image (they are mangled a bit because of how the camera transmits pixel data and how it is then stored in the file). I couldn’t find a register reference for the AR0132AT, so I used the documentation for the AR0130 (its register descriptions seem to match the values in the images, so I think the two are close enough). I think I finally understand what is going on in those images.

First, those sensors are HDR cameras with built-in hardware support for multi-exposure HDR capture, which Autopilot uses. The cameras are set up to capture three images for each frame, stepping the exposure time between them by a factor of 16. The camera automatically combines those images into a single frame.

Second, among the camera registers are:

- green1_gain

- blue_gain

- red_gain

- green2_gain

Those are digital gain values for the separate color channels in a Bayer pattern. This chip is most likely designed for several different purposes at once, including full-color cameras with a Bayer mosaic. Grayscale sensors and gray-red sensors differ only in the color filter coating on them, so in our case the red gain affects the red channel and the rest affect the grayscale pixels. Anyway, those registers are all set to the same value, meaning that no color balancing is set up, at least at the sensor level. Why is this important to me? The red color filter coating prevents some portion of the light from reaching the pixel sensors, which means that the grayscale pixels (without coating) are much more sensitive than the red ones (this is one of many reasons to use a grayscale camera whenever applicable). When restoring color in the previous images I had to multiply the red values by a factor of about 2. The fact that this is not done at the sensor makes me think that Tesla is not yet using color information, at least for Enhanced Autopilot, but I might be wrong.

If those cameras were capturing a single frame with linear output, all I would have to do to restore the color is multiply the red channel so that it matches the others. But when multiple frames are combined in HDR mode, all with the red channel out of balance, then the color relation is a bit more complicated.
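For the simple single-exposure, linear case, the red correction can be sketched as below. This is only an illustration: the gain of 2 is the rough factor mentioned above, and the position of the red pixel inside each 2x2 tile (`red_pos`) is a hypothetical parameter, since the actual mosaic layout is not stated here.

```python
def balance_red(mosaic, red_gain=2.0, red_pos=(0, 0)):
    """Scale the red-filtered pixels of a gray-red mosaic.

    mosaic   : list of rows of linear pixel values
    red_gain : assumed multiplier (roughly 2 according to the post)
    red_pos  : (row, col) of the red pixel inside each 2x2 tile;
               hypothetical, the real layout has to be checked
    """
    red_row, red_col = red_pos
    out = []
    for y, row in enumerate(mosaic):
        new_row = []
        for x, value in enumerate(row):
            is_red = (y % 2 == red_row) and (x % 2 == red_col)
            new_row.append(value * red_gain if is_red else value)
        out.append(new_row)
    return out


# A flat gray scene: red-filtered pixels read about half of the clear ones.
flat_gray = [[50, 100, 50, 100],
             [100, 100, 100, 100]]
balanced = balance_red(flat_gray, red_pos=(0, 0))
```

This only works when pixel values are proportional to light; it is not enough once the exposures have been merged and compressed.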

This is why I previously thought that this relation might not be linear. In the images I converted earlier, I accidentally found the portion of brightness from the middle exposure (the most useful for their light conditions). This is also why I thought the side cameras had a different response. All that was wrong with them was an incorrectly set exposure time (this is the only meaningful difference in the register state), making them overexposed. In their case I accidentally found the portion from the shortest exposure, which was the most useful for their conditions.

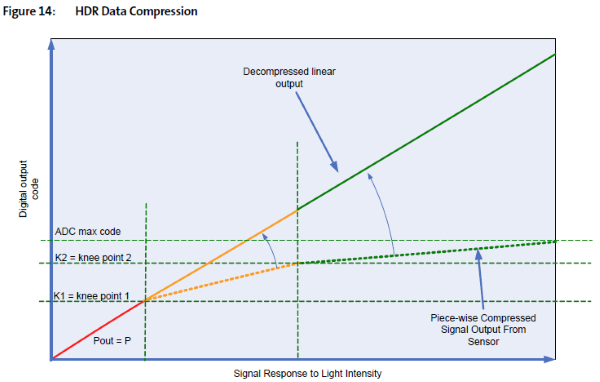

This is not the end yet. When working in HDR mode, the camera combines the images into the resulting frame. According to the specification it has a 12-bit sensor. When combining three images with x16, x16 exposure ratios it ends up with a 20-bit linear brightness value (E1 + 16*E2 + 16*16*E3). It can then transmit this 20-bit value, or compress the brightness to 14 bits (without data loss, fitting the sum of the 12-bit values into it), or to 12 bits (with some data loss), using a compression function given in the specification.
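Setting the compression function aside, the 20-bit figure can be checked with a little arithmetic. The sketch below only looks at the largest scaled term (a full-scale 12-bit sample multiplied by 16*16); the constant names are mine, and the values are the ones quoted in this post.

```python
SENSOR_BITS = 12      # per-exposure sample width, as quoted from the specification
EXPOSURE_RATIO = 16   # step between consecutive exposures

max_sample = (1 << SENSOR_BITS) - 1                        # largest 12-bit value
largest_term = EXPOSURE_RATIO * EXPOSURE_RATIO * max_sample
bits_needed = largest_term.bit_length()                    # bits to hold that term

print(max_sample, largest_term, bits_needed)
```

Each x16 step adds 4 bits on top of the 12-bit sample, which is where 12 + 4 + 4 = 20 comes from.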

When looking at the image data I was sure that the camera was working in 14-bit mode, because I was seeing values up to 15 999 (and the 14-bit range is 2^14 = 16 384). This is where the image data did not match the camera specification for 14-bit mode. After looking into the registers it turned out that the camera is actually working in 12-bit mode. Pixel values in the files are just shifted by two bits (multiplied by 4). Why it is set up like that, I don’t know. Maybe they prepared the vision algorithms for 14-bit data and then decided to switch the cameras to 12-bit mode, although I doubt it. Moreover, those two extra bits seem to be occasionally filled with random values, as I saw in the register encoding lines, which is weird and still unexplained to me. Anyway, when taking into account the 12-bit mode of the camera and the 2-bit shift afterwards, things start to make sense. Characteristic points on the image histogram finally lined up with the values in the specification. Processing images according to this specification finally works universally for all the images. The colors are still quite off for different images, but this time there are no sharp spots of opposite colors in the same image, which previously suggested a nonlinear relation.
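Undoing the shift is a one-liner. A minimal sketch, assuming the 12-bit sample sits in the upper bits of each stored value and the two low bits are the "extra" ones (`unpack_sample` is just an illustrative name):

```python
def unpack_sample(raw):
    """Split a stored pixel value into its 12-bit sample and the 2 low bits."""
    sample = raw >> 2       # undo the multiply-by-4
    low_bits = raw & 0b11   # expected to be 0; stray values were seen occasionally
    return sample, low_bits


MAX_STORED = 4095 << 2      # largest stored value for a full-scale 12-bit sample

print(unpack_sample(15999), MAX_STORED)
```

Keeping the low bits around instead of discarding them makes it easy to count how often they are non-zero.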

Basically I have to reverse what the camera does when combining the multi-exposure images and compressing the brightness values: split the brightness range back into three exposure ranges (although it may not be possible to do that perfectly) and amplify the red channel for each range separately. Even when not computing the color, the grayscale image still has to be processed to decompress the brightness range.
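The decompression step could look roughly like the sketch below, which inverts a piecewise-linear compression curve by interpolating between knee points. The knee values in `KNEES` are HYPOTHETICAL placeholders chosen only so the example runs (slopes of 1, 16 and 256); the real ones have to come from the sensor specification and register settings.

```python
from bisect import bisect_right

# HYPOTHETICAL knee points as (compressed, linear) pairs, NOT from the datasheet.
KNEES = [(0, 0), (1024, 1024), (2048, 17408), (4095, 541440)]


def decompress(value, knees=KNEES):
    """Map a compressed 12-bit value back to a linear value.

    Returns (linear_value, segment), where segment 0..2 tells which
    of the three brightness ranges the pixel fell into.
    """
    xs = [k[0] for k in knees]
    # Segment containing `value`, clamped so the last knee stays in range.
    seg = min(max(bisect_right(xs, value) - 1, 0), len(knees) - 2)
    (x0, y0), (x1, y1) = knees[seg], knees[seg + 1]
    linear = y0 + (value - x0) * (y1 - y0) // (x1 - x0)
    return linear, seg


print(decompress(512), decompress(1536), decompress(4095))
```

The returned segment index is what a per-range red gain would key on, under the assumption that each segment corresponds to one exposure.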

For example, I previously uploaded an image with traffic light warning signs that was a bit overexposed. It turns out that in reality it is not as bad:

But getting back to the sunset snapshots. Images from these light conditions have a very wide usable range of brightness, and it is quite hard to visualize them in a way that does not lie about what Autopilot can actually see. I don’t know much about HDR post-processing or tone mapping, and methods from a quick online search are not producing satisfying results, so I will try to present it another way. Here are images made out of almost the whole captured brightness range (notice that the traffic lights are not overexposed):

The dark parts of those images of course contain much more information. If I crop the brightness to those dark ranges, this is what can be seen:

In some parts you can see what I think is ghosting from HDR multi-exposure in motion.

Below is a combined video of the main camera replays from all the sunset snapshots. This time the quality is really crappy. This is because the h265 compression was applied on top of the brightness range compression, so when decompressing the brightness I’m basically amplifying the h265 compression artifacts through the roof. The replay video is also stored as 10 bit/px, so some quality is lost here too.

sunset main_replay - Streamable

Some other random info that can be found in register settings:

When in HDR mode, the sensor can perform 2D motion detection and compensation, but this is not in use (it is turned off in its register).

The sensor provides automatic exposure, analog gain and digital gain management, but it is not used; all of those parameters are controlled manually by the Autopilot ECU. It can be seen in the snapshots that Autopilot changes the exposure time and gain depending on the lighting conditions. In the video at about 0:31 you can see the result of increasing the gain in the sensor.

If I understand correctly how to calculate the chip temperature from the register values, then the main camera sensor in the sunset snapshot is at about 50°C, the side camera sensor is at about 44°C, the main camera in the intersection snapshot is at about 40°C, and at the end of the “drivingaround1_day” set the main sensor reached over 60°C. A bit warm, but the maximum allowed ambient temperature for the sensor is 105°C, so the allowed die core temperature should be even higher. I’m bringing this up because some people were checking sensor temperatures using thermal cameras and weren’t sure whether the raised temperature was caused by the sensor running or by the heating element working.