Jigglypuff

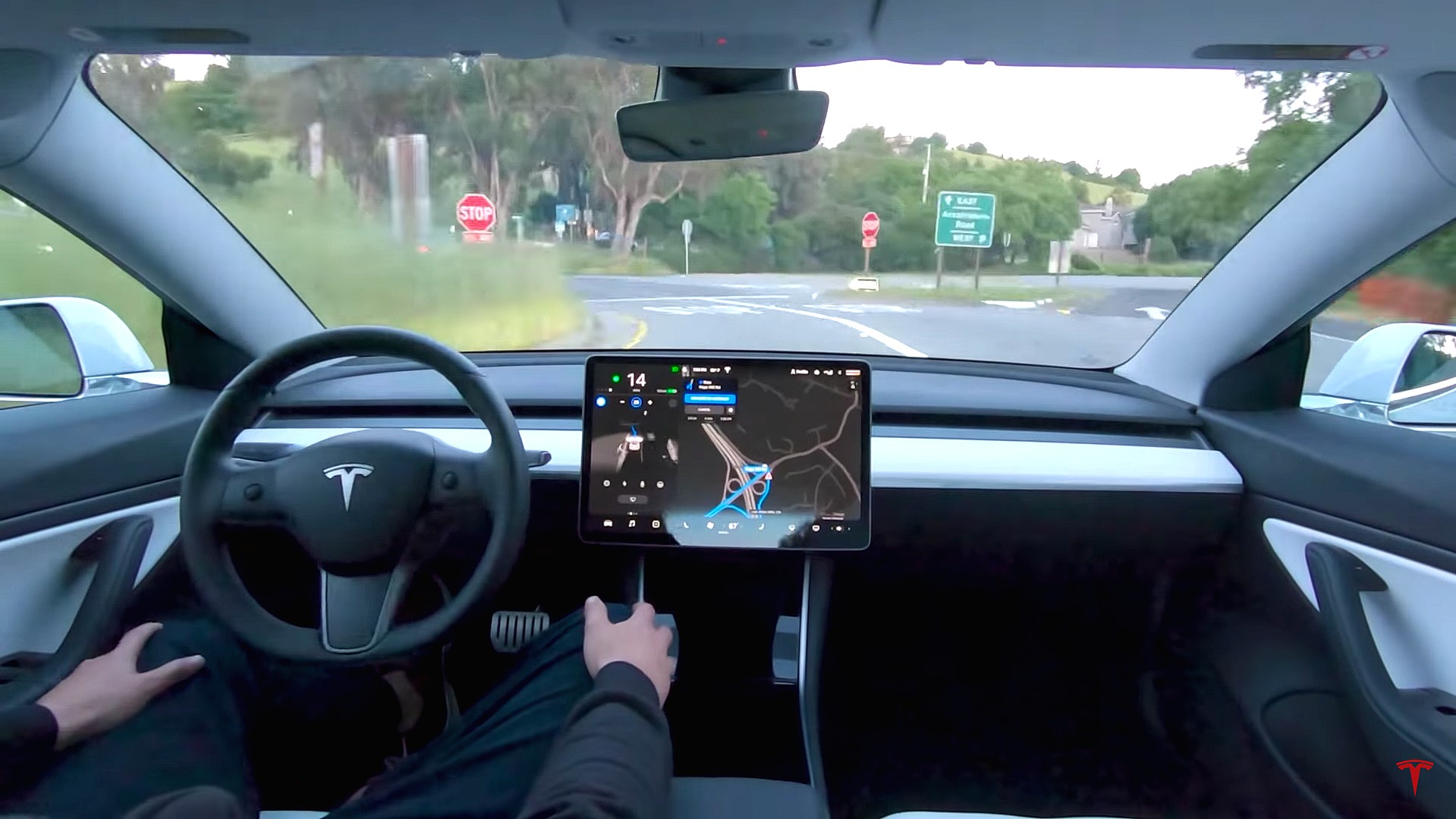

Autopilot is essentially garbage. I only use it in slow, heavy traffic on a divided highway, where it's a decent but not good cruise control with lane keeping. But if the traffic starts to speed up and slow down too much, turning into quick go, quick stop, I turn that crap off. Its predictive powers are garbage because it doesn't see well, if at all, past the car right in front of me. It speeds up too quickly and slams on the brakes too hard. It cannot handle a country road; it takes me toward the proverbial ditch. It cannot stay centered in a lane except at slow speeds. Even with it off, I get sudden steering corrections for no reason at all. Autopilot is awful. FSD is a lie. Musk promises and promises, but no, he doesn't deliver. Despite all the updates the software has received, it's just as bad as ever.

However, Autopilot is excellent, just excellent, at spotting orange cones. No software has ever been as good at cone spotting.

However, autopilot is excellent, just excellent, at spotting orange cones. No software has even been as good at cone spotting.