Don't be a tease - which criterion/a do you meet? As for the dirty camera problem - I assume they've thought of that. What the solution is - dunno. I will say WRT glare that autopilot seems to be able to see lane markings in night rain situations that defeat my own eyes.

I meet one of your expert criteria but do not feel I am qualified to give an expert opinion. Your criteria are too easy.

If FSD is vision based, what happens if the vision is obscured with glare, snow, rain, mud, etc?

As humans, we squint our eyes or turn our heads. The cameras are in fixed locations without any ability to clean or shade themselves.

I'd be curious what the car will do if it is temporarily blinded.

So if the consensus here by experts is that the suite isn't enough for L5, what do you think is Tesla's reasoning behind marketing and selling FSD, and Musk's most recent claim at TED that we're about 2 years away from sleeping in our cars?

Trying my best to stay away from non-expert speculation on this thread - but - since you asked - one could speculate that, as one of the experts here suggested, AP2 will get to L3/4. 2 years from now will be right around the time we are definitely due for an upgraded sensor suite. AP3, anyone?

whitex

Well-Known Member

Stereo vision and sufficient software can achieve Level 5 full autonomy. You (Calisnow) are living proof of this! (assuming you drive)

RT

B.S. Computer Science (19XX)

Uhm, duct tape a helmet to your headrest, then tape over the visor making 2 holes, one in front of each eye. This will simulate fixed cameras. Then put on headphones with white noise to remove the hearing. Not much we can do about disabling you from sensing acceleration, but I don't think you'll have to drive far before you're in an accident disproving your point.

EVie'sDad

Member

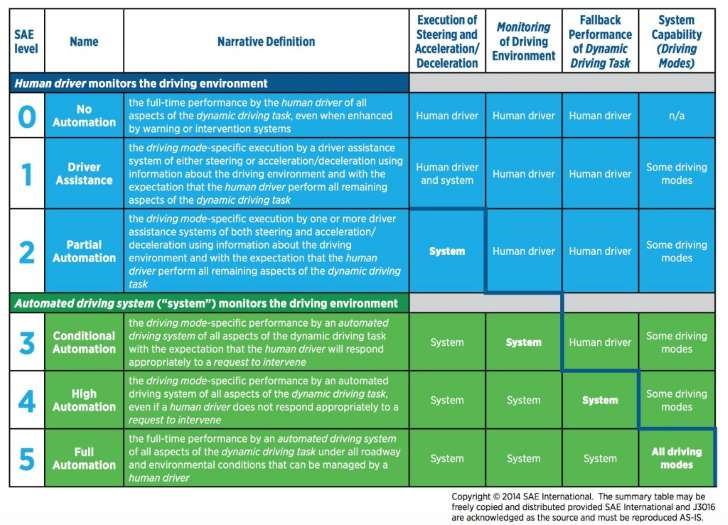

We should worry about getting to level 3. Eight cameras, 12 ultrasonic sensors and radar may be enough, but one camera and radar and upgrading sensors, no... never. AP2 is only using two of those eight cameras right now. I hope someday my AP1 can achieve level 3 but I am not holding my breath. After 18 months I understand the next gen of AP3 will be closer to level 4 but I doubt level 5 will happen in the next three or four years.

whitex

Well-Known Member

We have a number of threads polluted with the opinions/rants/speculations of laymen (myself included). How about a thread inviting comment for *only* experts? If you are/have:

- A grad student or researcher in AI/computer vision/computer science

- A BS in computer science

- A degree in computational or theoretical physics or math

- A software engineer actually employed working with AI/neural networks/artificial vision/autonomous driving

- An electrical engineer

- Someone with domain expertise working with radar

Please comment and give us your thoughts.

If you are *NOT* a software engineer/researcher, please be quiet. For example, if you are a philosophy major like me working in finance who once took Pascal as a 12-year-old and has watched some YouTube videos with the CEO of Mobileye - SHUT THE HELL UP ON THIS THREAD. If you are a plastic surgeon who double majored in neuroscience - SHUT THE HELL UP ON THIS THREAD. If you took a 10-week coding bootcamp to learn to use Ruby to get a job building web apps 'cause your creative writing AA wasn't working hard enough for your financial future - SHUT THE HELL UP ON THIS THREAD.

Okay, experts please comment away.

Boy, have you come to the wrong place, an open Internet forum, for this kind of advice. Anyone on the Internet can claim to be whatever they want to be. Actual experts either don't frequent TMC or are not going to answer your questions due to potential conflicts of interest, or fear of divulging confidential information. The way your post was worded was hostile and unprofessional, which will further alienate any actual experts and invite others to blow off some steam. Maybe that was your goal?

Sir Guacamolaf

The good kind of fat

Does the addition of lidar and/or more radar sensors reduce the computation required?

It shifts the computation requirement - hard to say if it 'reduces' it - probably not. While there is less AI needed, since you have the data and no need to intelligently guess, you have a LOT more data to process in real time. I would think in today's computational terms, think of the latest graphics cards, a few of those in parallel could do it. I don't know for sure without actually trying it, but you could easily burn a few kW of power just running the computers to process lidar inputs. But at least the algorithms would be much simpler and humanly achievable with our current level of on-the-street expertise.

However, here I have to agree with Tesla's approach. Cameras, not Lidars, and clever image processing software is far better suited to autopilot and general human-replacement-automation, than lidars. Also with clever image processing you could bring the computational requirements to meet what Tesla has put in the AP2 cars. However writing such software is not easy, as Tesla is finding out. Plus it needs to be done in real time and be very very very tight, an extra unpredictable millisecond can be life or death. And those wiggly lines on AP2 tell me, Tesla is far from perfecting this so far.

Now the real question is, what will it take for Tesla to fix their f'''''''' service centers! Grr. (Sorry just pissed at my SC right now).

S4WRXTTCS

Well-Known Member

I don't think the right question is being asked.

I think the right question is: what combination of technologies will ultimately achieve Level 5 driving that is approved by a significant number of regulatory agencies? Agencies that will require a higher level of safety than what's achievable with a vision + front radar system.

I say this because we're already starting to see rollouts of technology like car-to-car communication, and cheaper/improved lidar systems. We're seeing these things before EAP has been released. Before we even know how well the cameras in an HW2 Model S/X work in various weather conditions (a requirement for Level 5).

So my belief is by the time we have Level 5 driving what's in it is going to far surpass what's in HW2. Where HW2 won't have the level of safety that is offered by these new systems.

I also think Level 5 is asking for a lot. If I had a HW2 car I'd be pretty happy and content with Level 3, and really pleased if it just barely met Level 4. From what I've read most experts in autonomous cars feel like HW2 at best will barely meet Level 4. It likely won't matter much to most people because the roads themselves will likely have to get white listed. There are lots of roads that are hard for humans to even deal with. So there is also an infrastructure problem.

So far the "experts" have disagreed, with some saying "yes", others saying "no", and others saying you are asking the wrong question.

Gahhhh! Nobody can follow instructions. :sob: :shakes fist at non-existent God:

So in other words, the usual at TMC.

FlatSix911

Porsche 918 Hybrid

Elon Musk Predicts Fully Driverless Cars Are Less Than Two Years Away

Last week when Elon Musk sat down to give his TED Talk, the Tesla CEO spoke about more than just Tesla's entry into the semi truck market and The Boring Company; he also talked about the entry into full vehicle automation. This news comes less than a week after Audi readies its first level-3 autonomous vehicle for production with the launch of its A8. Tesla, however, aims to advance at a much quicker pace for its autonomy and promises full automation much sooner than expected.

Currently, Tesla vehicles equipped with the Autopilot hardware suite ship with eight cameras, one radar, and a series of ultrasonic sensors - all of which provide feedback to a central processing computer to control the driving. The thought is that some existing vehicles, as well as all second-generation vehicles would be equipped with E technology to enable level-5 automation without needing any upgraded hardware.

stopcrazypp

Well-Known Member

I qualify as "expert" under your CS major criteria, but like others, I don't feel this necessarily helps things very much.

I should point out experts in a given field may also have their own biases. For example, in energy storage, if you are a chemistry major, you may focus on batteries or fuel cells, and depending on your focus, you may be heavily biased toward one solution. Similarly an expert working on vision, radar, or lidar, may have their own biases.

On the question of computation power, I don't think it is that relevant. The growth of power efficiency of SoCs is so rapid that talk based on current technology is almost immediately outdated. For example, Nvidia PX2 used 250 watts to get 24 DL TOPs (deep learning trillions of Operations Per Second; Nvidia's own metric), but the upcoming Xavier SoC uses just 20 watts for 20 TOPs. That's 10x the efficiency.
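The arithmetic behind that "10x" can be checked in a few lines. This is a minimal sketch that uses only the figures quoted in the post above (they are the poster's numbers and are not independently verified here); the function name is made up for illustration.

```python
# Figures as quoted in the post above; treat them as the poster's numbers,
# not as verified specifications.
PX2_TOPS, PX2_WATTS = 24, 250
XAVIER_TOPS, XAVIER_WATTS = 20, 20


def tops_per_watt(tops: float, watts: float) -> float:
    """Deep-learning throughput per watt of power draw."""
    return tops / watts


px2_eff = tops_per_watt(PX2_TOPS, PX2_WATTS)
xavier_eff = tops_per_watt(XAVIER_TOPS, XAVIER_WATTS)
ratio = xavier_eff / px2_eff

print(f"PX2:    {px2_eff:.3f} TOPS/W")
print(f"Xavier: {xavier_eff:.3f} TOPS/W")
print(f"Xavier is about {ratio:.1f}x as efficient")
```

With the quoted figures the ratio comes out a little over 10, so the "10x" in the post is a fair rounding.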

As for whether vision and forward radar alone (and I presume you are including the ultrasonic sensors too) is enough for level 5 there will largely be two camps:

1) Humans can do it with vision alone (plus hearing and a bit of acceleration sensing), so it's sufficient.

2) Current camera systems do not match human vision and it is unknown if AI can match human intelligence in processing visual data, so it's not sufficient.

(I suppose there is a third camp that I perhaps fall into that say we don't know enough to say either way).

I would say it's not that simple though. Current camera systems are inferior to human vision in some ways (lower resolution, fixed position and field of view), but superior in other ways (cameras can be viewed/processed simultaneously, have larger FOV or range, mounted in positions other than the driver's view, optimized for night vision, etc).

The premise may not even be correct: cameras may not necessarily need to match human eyes to be better for driving. Remember, we aren't talking about cameras handling all possible visual tasks a human may encounter, just the ones relevant to driving.

dknisely

Member

Aim for Mars to get a colony on the moon. Aim for L5 to get L4. Pretty straightforward to me. And that is NOT a criticism of Elon.

People probably think I'm being critical, but striving for "FSD" or L5 or whatever is just a way to get a really awesome set of driver assistance features that make driving a Tesla 10x safer than a normal car. Will there be a Tesla FSD fleet capability with AP2? I would bet good odds against. But, will there be an amazing set of autonomy features for highway merge-to-exit (L4), yeah. I think they will accomplish that by YE2018 (but only if M3 has the same AP2 sensor suite). M3 is Tesla's business to a first approximation; we AP2 MS and MX customers are just a hobby.

I would think in today's computational terms, think of the latest graphics cards, a few of those in parallel could do it

This is an area I know something about. As a motion graphics artist I have a system with 7 GTX Titan X Pascal cards for GPU 3D rendering. They are currently the fastest consumer GPU cards, and I think Elon mistakenly said at one point that they were what was powering AP2. The machine eats up about 1400 watts under full load. This gives you an idea of the power needed for a few GPU cards running in parallel.

Sir Guacamolaf

The good kind of fat

@boofagle sounds about right. Very tough to say what kind of CPU crunching we need, and therefore what kind of parallelization, and therefore what kind of power requirements. But my original estimate of a few kW... I'd say somewhere between 1-5 kW is my guesstimate. I'd think your range would take some hit.

Of course this sort of analysis is not what Tesla aims to do with AP2. AP2 is merely a matter of image recognition + a reactive algorithm that is tight and fast so it can run in real time - that's basically it. And that can run on their current hardware at negligible power consumption, once they manage to write it.
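To put a rough number on "your range would take some hit", here is a back-of-the-envelope sketch. Every constant in it is an assumption picked for illustration (pack size, consumption, cruising speed); only the 1-5 kW guesstimate and the 7-card, ~1400 W rig come from the posts above. It is not a Tesla figure or a measurement.

```python
# All constants below are assumptions for illustration, not Tesla figures.
PACK_KWH = 75.0           # assumed usable battery energy
DRIVE_WH_PER_MILE = 300   # assumed consumption of driving alone
SPEED_MPH = 65.0          # assumed steady cruising speed
# Whole-machine draw of the 7-card rig described above, divided per card.
WATTS_PER_GPU = 1400 / 7


def range_miles(compute_kw: float) -> float:
    """Range at steady speed when compute draws `compute_kw` continuously."""
    drive_kw = DRIVE_WH_PER_MILE / 1000 * SPEED_MPH  # power used for driving
    hours = PACK_KWH / (drive_kw + compute_kw)       # time until pack is empty
    return SPEED_MPH * hours


baseline = range_miles(0.0)
for kw in (1.0, 5.0):
    loss = 1 - range_miles(kw) / baseline
    gpus = kw * 1000 / WATTS_PER_GPU
    print(f"{kw:.0f} kW (~{gpus:.0f} such GPUs): "
          f"{range_miles(kw):.0f} mi, {loss:.0%} less range")
```

Under these assumed numbers, a constant 1 to 5 kW compute load costs roughly 5% to 20% of highway range. The hit would be proportionally larger in slow traffic, where driving itself draws less power.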

AnxietyRanger

Well-Known Member

However, here I have to agree with Tesla's approach. Cameras, not Lidars, and clever image processing software is far better suited to autopilot and general human-replacement-automation, than lidars. Also with clever image processing you could bring the computational requirements to meet what Tesla has put in the AP2 cars. However writing such software is not easy, as Tesla is finding out. Plus it needs to be done in real time and be very very very tight, an extra unpredictable millisecond can be life or death. And those wiggly lines on AP2 tell me, Tesla is far from perfecting this so far.

To clarify, though, Tesla's approach does not only differ in its lack of LIDAR. It also differs in its lack of 360 radar, which everyone else seems to be doing.

The autobahn car approaching super fast from the adjacent lane is certainly an area where rear radars can be useful. The Germans have thus had them for years in their cars. But they also provide a blanket of redundancy around the car that ultrasonics, due to their limited nature, mostly cannot.

LIDAR may or may not be the future, but I do expect an AP3 to include more radar coverage. Or alternatively more redundant cameras. I cannot see any way around the problem of single, fixed cameras being obstructed quite easily.

What it all means I'll leave to the experts, just wanted to clarify the different implementations in cars at this time.

feslatan

Member

Oliver Cameron is undeniably one of the foremost experts you're looking for. Someone asked him this question back in February and he said yes.

Oliver is an autonomous vehicle engineer who is now CEO of a company building out an autonomous taxi service, so he should meet your 'expert' criteria.

whitex

Well-Known Member

Yes, but did he say by when, and whether you can get to FSD sooner with more sensors, then gradually step back as the system learns to drive with fewer sensors?

I have a question less about hardware and more about AI learning.

Most cars sit for hours at a time after the owner is done with work or running a few errands, because we obviously have to sleep. During our sleep we replay the day (multiple times faster) over and over again to strengthen our memories (see "Slumber Reruns: As We Sleep, Our Brains Rehash the Day"). I'm wondering if the same approach can be taken with Tesla's AI for driving. Imagine putting an SSD in the car beside the Nvidia card and recording an entire day's worth of driving from all angles. At night the car can enter "learning mode" and replay the videos over and over again, constantly learning and tweaking the algorithm. In the morning, when the car gets put in drive, the videos are deleted and a new set is created. Google does something similar with the info they collect (see "Google's self-driving cars rack up 3 million simulated miles every day"), but at a data center.

So my questions are, would there be any real benefit to doing this? Would the AI learn more from replaying the day's driving over and over again vs just doing it once in real-time?

It's less about driving between the lines and more about reacting to other cars around you, so taking the same route every day will always bring new challenges.

If so, why haven't they done something like this? I would imagine it'd be a privacy issue that you could disable if you didn't like the idea of recording the entire day. There are thousands of Teslas with lots of computational power spending a majority of their day doing nothing. Harnessing that power, to my non-expert mind, would be a huge benefit to speeding up the learning process.

So I have some of the required expertise (BS Comp Science) and have had some involvement in the area, although more conceptual than technical.

I agree with Musk that it is a software problem, and think that a heavy focus on hardware and sensor fusion is misplaced. There seems to be an accepted wisdom that the more sensors you throw at the problem, the better the outcome. I don't believe this is entirely true.

Going back to the "human" example: a regular human with a reasonable IQ and regular senses (2 eyes, 2 ears, and the ability to extend the visual field by moving the head around) can drive safely in most situations, and is able to adapt to new situations pretty quickly with minimal instruction.

But it is possible for a human to drive a car perfectly well with much less than that. Just one eye will do. And that one eye could even be colour-blind without a significant reduction in the driver's ability to drive and adapt to new situations.

The trillion-dollar question is: how do humans do it?

Well, we know that there are a few skills that are employed, and a few tricks in the way that we deploy them. There is a good article on Visual Expert (link) describing what is going on when a driver reaction is needed, but in summary:

Sensation - there is a shape in the road

Perception/recognition - the shape ahead is a dog

Situational awareness - the dog is jaywalking and has not seen me coming

Response selection & programming - slam on the brakes

Each of these skills is a collection of abilities, and it is some (not necessarily all) of these abilities that need to be replicated in software in order to have fully autonomous L5 vehicles.

What we see the most of at the moment, is Perception. The ability to recognise objects is a mature software solution that is fairly straightforward to implement with minimal hardware. Face detection on a smartphone app, for example.

The AP display in front of the driver is a simplistic view of the current state of this "skill". We know that Tesla have internal builds that are far more perceptive, having the ability to recognise vehicle types and road signs, for example.

What is much more of a challenge are the Situational awareness and Response selection pieces. As humans, we subconsciously apply the following abilities in a fraction of a second:

Threat determination - will I hit them?

Intuition/experience - they will not see/hear me until it is too late for them to jump out of the way

Path prediction/Intent of others - is the oncoming car on the other side of the road going to stop / is the car behind too close to stop / where will the dog be if they don't change speed or direction before I arrive in that part of the road

Determination of vehicle capability - can the car even stop in time or should I try to avoid

Self-preservation & social prejudice - hit the lamp post, the oncoming car, or the dog

Response - brake hard, sound horn

At the moment, support for these skills in AP is very simplistic, and I even doubt whether a NN is involved at all with TACC or AEB after the Perception stage. Perhaps this is actually OK - building these abilities in a NN will be a tremendous challenge to get to human-equivalence, and there is no rule that says that NN alone is the right approach.
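To make the "no NN after Perception" idea concrete, here is a minimal rule-based sketch of three of the abilities listed above: path prediction, determination of vehicle capability, and response. It is purely illustrative and says nothing about how AP, TACC or AEB are actually implemented; the class, function names, reaction delay, deceleration and lane half-width are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float         # distance ahead along our path
    lateral_m: float          # offset from our lane center (0 = centered)
    lateral_speed_mps: float  # sideways speed; sign gives direction


def stopping_distance_m(speed_mps, reaction_s=0.2, decel_mps2=7.0):
    """Distance covered during the reaction delay plus braking to a stop."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def in_path_on_arrival(ob, speed_mps, half_width_m=1.5):
    """Path prediction: where is the obstacle when we reach its position?"""
    t = ob.distance_m / speed_mps  # assumes we hold our current speed
    future_lateral = ob.lateral_m + ob.lateral_speed_mps * t
    return abs(future_lateral) <= half_width_m


def choose_response(ob, speed_mps):
    """Pick a response from simple physics, with no learning involved."""
    if speed_mps <= 0 or not in_path_on_arrival(ob, speed_mps):
        return "continue"
    if stopping_distance_m(speed_mps) <= ob.distance_m:
        return "brake"
    return "brake_and_steer"


# A dog 40 m ahead, 3 m to the left, walking toward our lane at 1.5 m/s.
dog = Obstacle(distance_m=40.0, lateral_m=-3.0, lateral_speed_mps=1.5)
print(choose_response(dog, speed_mps=20.0))
```

At 20 m/s the dog is predicted to be in the lane center on arrival and the car can stop within 40 m, so this sketch picks "brake". The constant-velocity assumption is exactly the part that "intuition/experience" and "intent of others" would have to improve on.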

TL/DR;

Yes, the hardware is capable, but I think this is the wrong question. The question that should be asked is: can the software ever be capable enough to safely control the vehicle in any given traffic scenario?

MarcusMaximus

Active Member

I have a question less about hardware and more about AI learning.

Most cars sit for hours at a time after the owner is done with work or running a few errands, because we obviously have to sleep. During our sleep we replay the day (multiple times faster) over and over again to strengthen our memories (see "Slumber Reruns: As We Sleep, Our Brains Rehash the Day"). I'm wondering if the same approach can be taken with Tesla's AI for driving. Imagine putting an SSD in the car beside the Nvidia card and recording an entire day's worth of driving from all angles. At night the car can enter "learning mode" and replay the videos over and over again, constantly learning and tweaking the algorithm. In the morning, when the car gets put in drive, the videos are deleted and a new set is created. Google does something similar with the info they collect (see "Google's self-driving cars rack up 3 million simulated miles every day"), but at a data center.

So my questions are, would there be any real benefit to doing this? Would the AI learn more from replaying the day's driving over and over again vs just doing it once in real-time?

It's less about driving between the lines and more about reacting to other cars around you, so taking the same route every day will always bring new challenges.

If so, why haven't they done something like this? I would imagine it'd be a privacy issue that you could disable if you didn't like the idea of recording the entire day. There are thousands of Teslas with lots of computational power spending a majority of their day doing nothing. Harnessing that power, to my non-expert mind, would be a huge benefit to speeding up the learning process.

The problem here is that current learning techniques generally don't allow for this kind of refinement after the fact (for reasons that are a bit too complicated to go into). You can get around some of that by calculating certain parameters based on what the inference network has seen, but that's much more limited than what you're suggesting. The human brain manages this through its plasticity, actually changing its structure in response to new information, but we haven't really mastered doing that in AI.

So the only real option for doing this would actually be retraining the networks from scratch, with your data added in. But it's unlikely you'll have anywhere near the required storage for all that data in your car.

And, even more reason: the amount of data you collect should be insignificant next to how much Tesla has and, worse, focusing on your data could make the model "overfit", where it becomes very good at the particular type of driving you usually do, but unable to generalize to new situations.
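The "overfit" risk can be shown with a deliberately tiny toy, sketched below. It is not how any real driving network is trained: the "model" is a single number, and both datasets are made-up values standing in for some driving-related target. The point is only that repeatedly replaying one car's narrow data pulls the model toward that data and makes it worse on everything else.

```python
def mse(w, targets):
    """Mean squared error of a one-number model `w` on a set of targets."""
    return sum((w - y) ** 2 for y in targets) / len(targets)


def fine_tune(w, targets, lr=0.1, epochs=50):
    """Replay the same small dataset over and over with plain SGD."""
    for _ in range(epochs):
        for y in targets:
            w -= lr * 2 * (w - y)  # gradient step on (w - y)^2
    return w


# Made-up data: the fleet covers a wide spread, one commuter a narrow one.
fleet = [10.0, 20.0, 30.0, 40.0, 50.0]
mine = [12.0, 13.0, 11.0, 12.0]

w_fleet = sum(fleet) / len(fleet)   # best single value for the fleet data
w_tuned = fine_tune(w_fleet, mine)  # "nightly replay" on one car's data

print(f"fleet model: error on mine={mse(w_fleet, mine):.1f}, "
      f"on fleet={mse(w_fleet, fleet):.1f}")
print(f"tuned model: error on mine={mse(w_tuned, mine):.1f}, "
      f"on fleet={mse(w_tuned, fleet):.1f}")
```

The tuned model ends up much better on the commuter's own data and clearly worse on the fleet data, which is the trade-off described above.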
