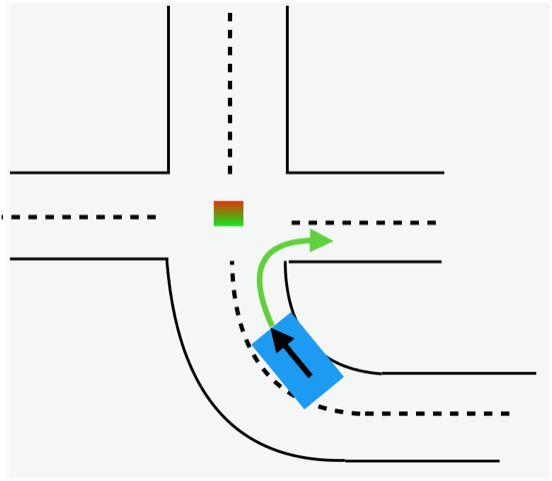

I’ve found that FSD Beta (version 2022.44.30.5) cannot negotiate the particular intersection geometry shown in the image above. The vehicle just stops before the intersection and will not proceed.

Here’s the setup: the vehicle is in blue, with its direction of motion shown by a black arrow. The planned path is shown in green. The intersection has traffic lights that I believe the vehicle sees. The vehicle does not pull up to the stop line; instead, it stops in the middle of the curve and will not proceed. I’ve encountered two instances of this intersection geometry in my area, and the vehicle behaves the same way at both. I have to manually drive the vehicle through the right turn, then re-engage FSD.

I’ve also noticed that FSD Beta doesn’t handle double left-turn lanes at intersections very well. It fails to anticipate which of the two left-turn lanes it should use for its upcoming planned path, and it slowly drifts back and forth between the two lanes even when there are no other vehicles around. I have to take over and reposition the vehicle in the correct lane for the path ahead.

Yet another problem: FSD Beta will not take a particular freeway exit even though it is on the planned route. The exit is at the I-5/I-405 interchange south of Seattle, in the Tukwila area: it is the transition ramp from “southbound” I-405 to southbound I-5, a 270-degree loop between the two freeways. The vehicle, in the right lane, consistently drives right past the exit onto SR-518 (beyond the end of I-405), then slows way down and impedes the traffic behind it. I have to drop out of FSD and speed up while Nav finds an alternate route. At other times, the vehicle changes into the left lane because of traffic before the interchange and then fails to get back into the right lane in time to make the exit.