mikes_fsd

Banned

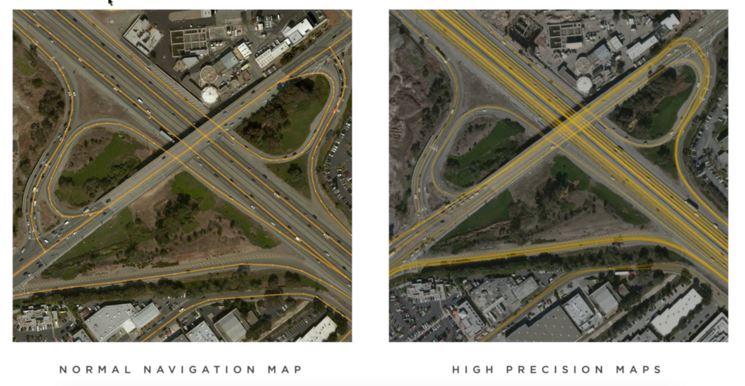

Exactly!so HD maps are bad but high precision maps are good? did I get it right?

That was exhausting!

Context is your friend!

You can install our site as a web app on your iOS device by utilizing the Add to Home Screen feature in Safari. Please see this thread for more details on this.

Note: This feature may not be available in some browsers.

Exactly!so HD maps are bad but high precision maps are good? did I get it right?

I propose we change the definition of a HD map to: "A Map that has been created prior to an autonomous vehicle can drive the area"

This is a bad definition because this makes conventional nav maps hd maps (obviously you must have nav maps to navigate in an area)

Where it does very poorly so far is where the lane lines are faded or incorrectly moves into other lanes etc. :

Maybe this is the best way to delineate.Tesla does not create a full 3-D reconstruction of the environment offline for comparison.

As it should be and as Tesla has stated that they use maps.

How is navigation supposed to be done in a FSD car if the car does not have maps?

The argument is not on whether maps are used, the argument is that it is not HD Maps (not highly detailed cm level accuracy maps)

In 2015 a full year before Tesla revealed the Autopilot 2.0 hardware.. there was this article Tesla Building Next Gen Maps through its Autopilot Drivers

How Tesla was getting fleet data to improve the accuracy of maps. At that time not many providers had lane level info for navigation (Google maps had just introduced the lane level info in their turn-by-turn navigations)

This does not give you lane marking drawn on tiles.

It gets you info along the lines of: 4 lanes in one direction off-ramp starts at this geo-code, etc

Oh I know that.Just remember that this mapping effort was one of the things that delayed FSD, Elon said that that got them to "local maximums" and that they had to abandon the high precision mapping and go back to relying on vision to make further progress.

Also, by definition anything that requires pre-existing maps, of any resolution, can not ever be L5. Because L5 has to be able to drive on a brand new freshly paved, or dirt/gravel, road. And we have seen examples of the Tesla beta FSD driving on roads that aren't even in Google Maps yet.

At least looking at OpenStreetMap data, intersections with traffic lights and stop signs often have a single node (e.g., the point where two road lines cross) with an attribution tag indicating there is a given road feature. If we just assume most intersections are of 2 lanes in each direction with roughly 5 meters width for each lane, the sign or signal most likely is along the edge or corner of the intersection (and not in the exact middle of the road), so the accuracy is probably around 5-10 meters for a small intersection. And this is based on some human overlaying a line on top of an ideally somewhat reasonably GPS oriented satellite image.so what accuracy is there on the tesla maps for traffic control devices, crosswalks, stop lines and other such objects?

If maps require any pre-rendered tiles of surroundings to be fed into the NN, they are HD maps

Hight Precision Maps are not pre-rendered maps

they do, but it's not clear if everybody got the explicit permission to post their stuff or only some of the participants.Are they rolling out FSD Beta to more people?

Vector tiles - Wikipediacan maps be not-prerendered?

Vector tiles, tiled vectors or vectiles are packets of geographic data, packaged into pre-defined roughly-square shaped "tiles" for transfer over the web. This is an emerging method for delivering styled web maps, combining certain benefits of pre-rendered raster map tiles with vector map data. As with the widely used raster tiled web maps, map data is requested by a client as a set of "tiles" corresponding to square areas of land of a pre-defined size and location. Unlike raster tiled web maps, however, the server returns vector map data, which has been clipped to the boundaries of each tile, instead of a pre-rendered map image.

how can maps be not-prerendered? Maps is a prior knowledge you have of the area before you actually enter the area.

You might want to rethink your definition a bit.

how can maps be not-prerendered? Maps is a prior knowledge you have of the area before you actually enter the area.

You might want to rethink your definition a bit.

The easiest thing for them to use would be a vector tile. After all that computers just doing a bunch of math.My guess is they are taking some simplistic information about the intersection, and predicting what it should look like based on learnings of intersections in general

The easiest thing for them to use would be a vector tile. After all that computers just doing a bunch of math.

Yeah, I was talking about the input.My guess is the output is from a neural net. No idea what form the input is.

yes, that's what Tesla does. They use valhalla/mapbox container with tiles.

They don't. We did not really discuss anything about 3D in prior discussion, why bring in 3D in all of a sudden?They are not creating a 3D environment offline.

Yes, this is what they do. Most importantly, if something is not in that "simplistic" information set as you call it (like say there's no side street marked in there) - the system does not see it until it's right next to it and even then it's not very realistic (see the SC road link I posted for examples) to have actual high confidence visual. When something IS marked in the "simplistic information" set - the system "predicts" it based on a lot lower confidence visuals.My guess is they are taking some simplistic information about the intersection, and predicting what it should look like based on learnings of intersections in general. Thus the output looks quite realistic, though it is actually mostly a prediction

huh? what's contrarian about it? I am just trying to get a non-contradictory definition.I’ve been following along, and at this point, you’re coming off as purely a contrarian with comments like this.

So, you are going to claim the vector tiles - something that Google has used on all they maps since 2013 is the HD Maps that you are referring to when you say Tesla uses HD Maps?yes, that's what Tesla does. They use valhalla/mapbox container with tiles.

Millimeter precision HD Vector Maps (the title of that page is fun btw)