sleepydoc

Well-Known Member

> We all need to make informed decisions so we don't get swindled by bad actors.

There's a difference between making an informed decision and assuming that reading some articles gives you qualifications you don't have.

> There's a difference between making an informed decision and assuming that reading some articles gives you qualifications you don't have.

That is a strawman you have created because I don't see anyone claiming reading articles gave them qualifications.

That said, 1.2MP sensors are not adequate for L4 or L5 ADS. You don't need a PhD in electrical engineering or machine learning to come to that conclusion. A close read of the technological advancement in this field and some critical thinking should tell anyone that. For example, Elon Musk said AP1 hardware was capable of L5 autonomous driving, and they are on revision 3.0 now, which does not look like it will be enough either. Tesla is possibly upgrading to 5MP sensors in Hardware 4.0 according to some reports.

> I am not a engineer. But even I...a regular joe can tell you. The cameras on Teslas right now? Will NOT be enough to achieve Robotaxi level FSD capabilities.
>
> Mark my words here. Now

Consider them marked .....

> Consider them marked .....

Notice that NO ONE is disagreeing with me?

> Sleepydoc...you are NOT getting paid by Elon/Tesla to cover up their shortcomings, misleading statements, horrific communications, multiple failures at meeting established timelines, etc. Look around..read...the chorus of voices of frustration with F

Since you’re nothing more than a troll I rarely respond to your posts but I simply suggest you take a look at my posts. You’ll see I’m no Fanboy. I’m also not a hater. I’m simply honest.

> Sleepydoc...you are NOT getting paid by Elon/Tesla to cover up their shortcomings, misleading statements, horrific communications, multiple failures at meeting established timelines, etc. Look around..read...the chorus of voices of frustration with F

Nobody disagrees with you because then you won’t leave. You are the only one entertained by your input which is very little.

> Notice that NO ONE is disagreeing with me?

So what? Doesn't mean they agree, just means most people are bored with the continual "I know xxx" claims again and again and again ..

> So what? Doesn't mean they agree, just means most people are bored with the continual "I know xxx" claims again and again and again ..

That’s still not a disagreement.

Go look through the old posts before FSD beta was out. People were "proving" that the car would not be able to see approaching cars at all, and would drive out into traffic all the time, and who knows what else. Where are those "proofs" now?

It's possible, of course, that the car would benefit from additional cameras (headlight side ones have been suggested), but that doesn't mean the car cannot be made a competent driver without them. Right now the car in many ways has better vision than human drivers.

> That’s still not a disagreement.

I didn't say it was .. as you well know.

> I did not misunderstand. You said;

Not sure how it’s not clear that consumer cars today do not have the same hardware as existing Waymo and GM robotaxis (e.g. - in SF). Yes, I’m very familiar with announced LiDAR equipped vehicles, but you are comparing future models (which actually aren’t yet being advertised as L4 city robotaxi capable) to present day. Present day existing consumer vehicles, we don’t have L4 robotaxis. But the only issue with the argument being made is that Tesla (available for purchase) is being compared with Waymo/GM robotaxi (not available for purchase).

And that is wrong. Consumer vehicles do use the same hardware as GM and Waymo, just in different configurations. Robo-taxis and consumer vehicles do not have to use the same sensor configuration. L4 on city streets in consumer vehicles is not ready and will not be ready for years, but for things like the robust D2D L2 and L3 systems we will see in the next couple of years, there are different types of lidar for consumer vehicles:

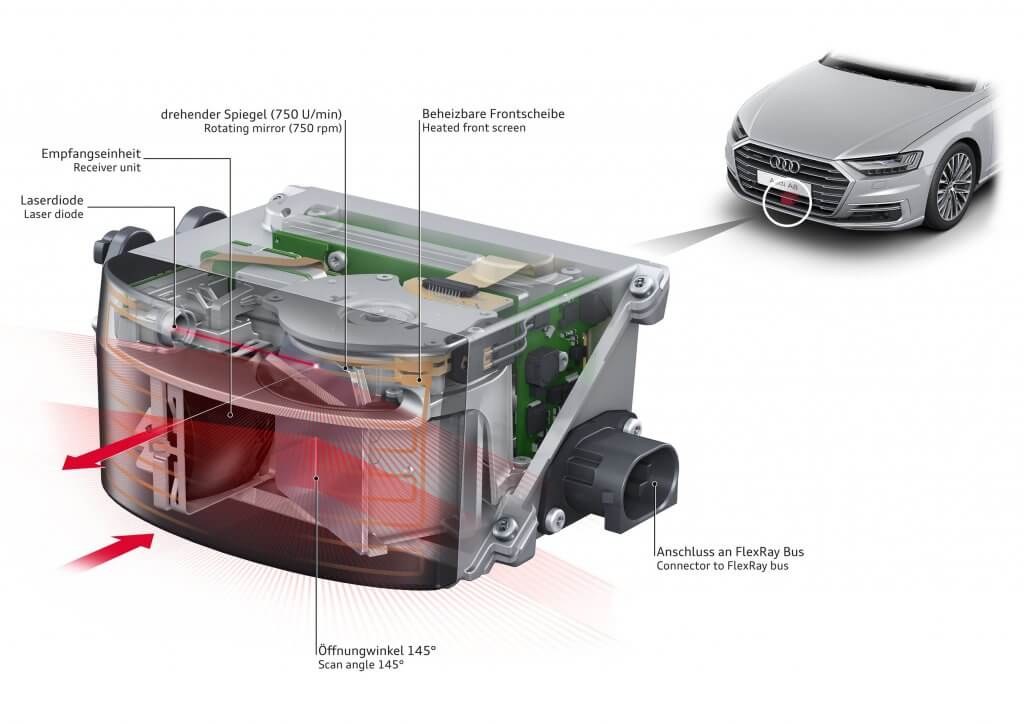

Audi has been using the Valeo SCALA lidar, which spins but is placed in the front grille. It is lower resolution compared to something Waymo would use but is estimated to cost $600. Mercedes will be using the 2nd generation of the Valeo unit in their L3 systems, mounted in the front grille.

NIO will use the Innovusion Falcon, which comes standard in their ET7, which costs no more than ~$80K.

Volvo will use Luminar LiDAR in their sensor configuration for driver assist and ADS. Consumer cars will use solid-state or flash lidars and not the top-mounted spinning lidar that robo-taxis use. It does not really matter what a robo-taxi looks like, and sensor configuration and cost are less of an issue there.

They are talking about the cost of systems that are not being produced at scale yet. Mercedes Drive Pilot, which comes with 1 front-facing lidar, costs $5300 on the S-Class and $7900 on the EQS. Consumer cars will not have the same sensor configuration as a robo-taxi fleet operated by a company like Cruise or Waymo.

> Not sure how it’s not clear that consumer cars today do not have the same hardware as existing Waymo and GM robotaxis (e.g. - in SF). Yes, I’m very familiar with announced LiDAR equipped vehicles, but you are comparing future models (which actually aren’t yet being advertised as L4 city robotaxi capable) to present day. Present day existing consumer vehicles, we don’t have L4 robotaxis. But the only issue with the argument being made is that Tesla (available for purchase) is being compared with Waymo/GM robotaxi (not available for purchase).

There are several cars available today with lidars, not just announced. NIO ET7, ET5, ES5, Huawei Arcfox HI, Xpeng P5, Lucid Air, etc. I could keep going.

> I'm not convinced that the MP of a camera is the "main" problem.

Who said MP was the "main" problem? I said 1.2MP is not adequate for an L4 or L5 ADS. Currently, using only cameras is not adequate for a safe, deployable L4 ADS. The current state of the art requires multimodal sensor fusion from various complementary sensors. Just look at all the deployed L4 ADS and what they all have in common.

> You can always throw more hardware at a problem

You are not throwing more hardware at the problem; you are using better hardware that is necessary to achieve the task. Bigger and bigger NNs require more and better compute. We know from greentheonly that Tesla currently saturates the compute onboard AP3 hardware with little room for redundancy.

> but from what I've heard Elon and team talk about is refining the input (pixels) where they take the raw values of each pixel without running it through filters us humans need to view them. It sounds like they are finding ways to get the most out of every pixel.

Everyone is finding ways to get the most out of every pixel. Tesla's NN architecture references research papers published by Facebook, Google, Waymo etc. Tesla is not doing anything that anyone else isn't in the field. Others are just using much better sensors as well.

> Will it work? Who knows...

Tesla knows, which is why AP4 is in the works. They are reported to have signed a deal with Samsung to provide 5MP sensors, and there is a slight possibility that 4D imaging radar will make an appearance.

> Although you don't need a PhD in electrical engineering to come to a conclusion, some kind of proof or data helps. I'm seriously interested in seeing a published paper explaining why 1.2mp sensors are not adequate for L4 or L5. Then seeing if Tesla (in their published videos/documents) has taken steps to remedy any shortcomings (like the raw pixel stuff they already talked about).

Because MP relates to visibility and being able to track and identify people and objects from greater distances? Tesla's current limit is a lack of bandwidth and compute to process higher-resolution data output, which is something the AP4 architecture should be able to do. They also sold 1.2MP and AP2 hardware with promises of L5 autonomous driving.
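For anyone who wants rough numbers on the resolution-versus-distance point, here is a minimal back-of-the-envelope sketch. It is not anything Tesla or anyone else in this thread has published: it assumes an ideal pinhole camera with no lens distortion, a hypothetical 50 degree horizontal field of view, a 0.5 m wide pedestrian, and typical sensor widths of 1280 px for a 1.2MP sensor and 2592 px for a 5MP sensor. All of those values are assumptions chosen for illustration.

```python
import math


def pixels_on_target(h_pixels: int, hfov_deg: float,
                     target_width_m: float, distance_m: float) -> float:
    """Approximate horizontal pixels covering a target (ideal pinhole model)."""
    # Focal length expressed in pixels, from image width and horizontal FOV.
    focal_px = (h_pixels / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Small-target approximation: projected width scales as width / distance.
    return focal_px * target_width_m / distance_m


# Hypothetical values for illustration only, not real camera specs.
HFOV_DEG = 50.0
PEDESTRIAN_WIDTH_M = 0.5
SENSORS = (("1.2MP (1280 px wide)", 1280), ("5MP (2592 px wide)", 2592))

for label, width_px in SENSORS:
    for distance_m in (50, 100, 200):
        px = pixels_on_target(width_px, HFOV_DEG, PEDESTRIAN_WIDTH_M, distance_m)
        print(f"{label}: pedestrian at {distance_m} m covers about {px:.1f} px")
```

Under these assumptions a pedestrian at 100 m covers roughly 7 pixels across on the 1.2MP sensor and roughly 14 on the 5MP one. That shows why resolution matters for long-range detection with the same lens, but it says nothing by itself about what pixel count is actually adequate for L4 or L5.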

> Not sure how it’s not clear that consumer cars today do not have the same hardware as existing Waymo and GM robotaxis (e.g. - in SF).

My literal first example was a car from 2017 and cars being produced right now. My point is not about what level of autonomy these cars have; I am disputing the idea that consumers can't afford cars with the type of sensors on Waymo and GM vehicles. Those same sensors have been and are in consumer cars right now: lidar, radar, high-resolution cameras, ultrasonics, etc.

> Yes, I’m very familiar with announced LiDAR equipped vehicles, but you are comparing future models (which actually aren’t yet being advertised as L4 city robotaxi capable) to present day. Present day existing consumer vehicles, we don’t have L4 robotaxis. But the only issue with the argument being made is that Tesla (available for purchase) is being compared with Waymo/GM robotaxi (not available for purchase).

My literal first example was a car from 2017 and cars being produced right now.

> Who said MP was the "main" problem? I said 1.2MP is not adequate for an L4 or L5 ADS. Currently, using only cameras is not adequate for a safe, deployable L4 ADS. The current state of the art requires multimodal sensor fusion from various complementary sensors. Just look at all the deployed L4 ADS and what they all have in common.

Well, that's a lot of claims .. care to explain the reasoning and/or quote your sources here?

> Well, that's a lot of claims .. care to explain the reasoning and/or quote your sources here?

How can you prove that something doesn't exist?