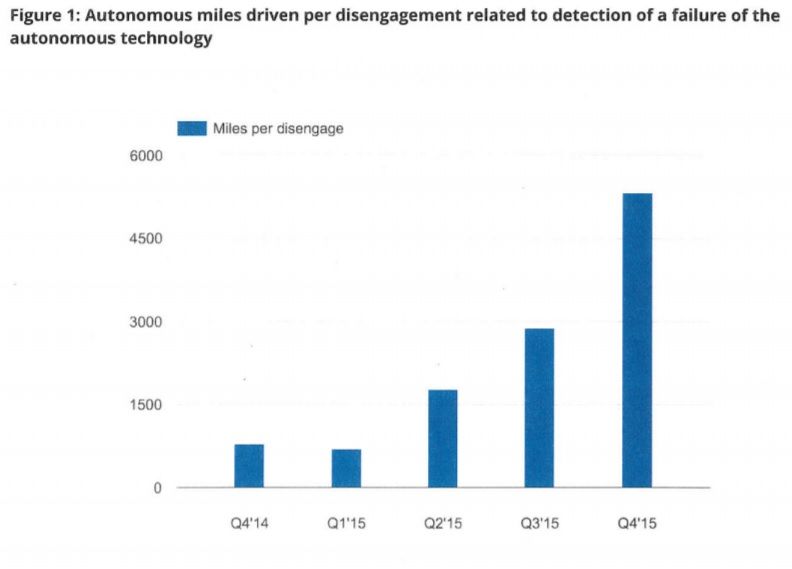

The point I am making is that one driver intervention on average every 5000 miles means that Google's cars are not autonomous, and really should not be described as such.

I have every respect for, and applaud, the work Google is doing, but the fact that with all the effort they are putting in they can still only achieve 1:5000 intervention:miles just goes to show how damn hard full autonomy is.

I also feel that Tesla are likely to run into difficulties with their claims of full autonomous driving, to the point that I doubt it will be achieved other than on interstates, and even then in a limited way. It is worth observing, however, that not for the first time Tesla have been a bit cute with words: "hardware capable of fully autonomous driving" is clearly not the same as full autonomy implemented everywhere.

imho autonomy cannot happen until systems can anticipate the driving scene in the same way a human does: kids playing on the sidewalk with a ball, a dog chasing a cat, partygoers spilling out of a nightclub, an incident on the other side of the median ... all that sort of stuff.

Sure, you can have autonomy without handling some of these edge cases, but then you get 1:5000, and that just isn't going to be acceptable when an autonomous car hits a young kid who ran into the road without warning after their ball. A human driver can hit a kid in exactly the same way, and there is a legal process to establish the events, but society accepts that this happens, however unfortunate for the individuals concerned. Society imho is a long way from accepting that an autonomous car killed or injured a kid in the same circumstances.

On top of which, the instant a car becomes autonomous, the manufacturer becomes responsible, as the driver by definition is no longer in control and is relying entirely on the vehicle's systems. That manufacturer is going to need one heck of an insurance policy.

The only route to manufacturers absolving themselves of responsibility is by standards body accreditation/testing.

This I see as the route forward, but yet again attitudes have a long way to come.

All good fun though, let's enjoy the ride