Why does the size of Tesla’s training fleet matter? It’s well over 1 million cars, with the fleets of all competitors worldwide amounting to a combined size of well under 10,000. But is data really that important? Can you really get much more with 1 million+ cars than with 100 or 1,000? Yes.

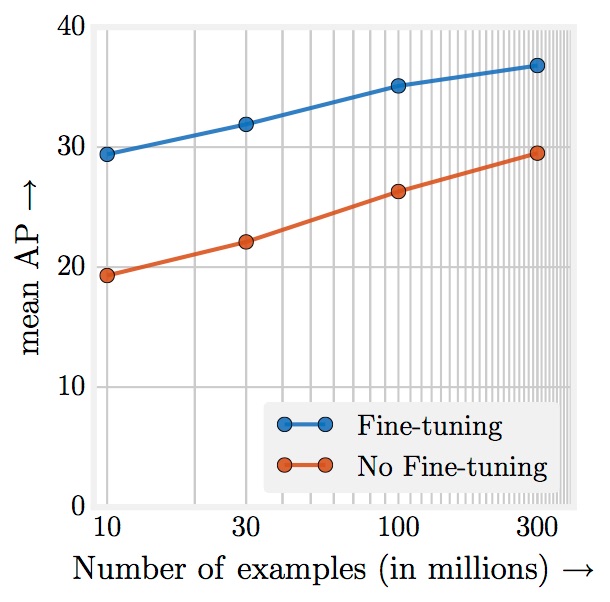

A paper and accompanying blog post by Google AI emphasize that neural network performance keeps improving roughly logarithmically with the size of a noisily labelled training dataset (that is, by a roughly constant amount each time the dataset grows by a constant factor), up to at least the scale of 300 million examples, as long as the neural network has enough capacity (in terms of size/depth) to absorb the training signal:

From the blog post:

“Our first observation is that large-scale data helps in representation learning which in-turn improves the performance on each vision task we study. Our findings suggest that a collective effort to build a large-scale dataset for visual pretraining is important. It also suggests a bright future for unsupervised and semi-supervised representation learning approaches. It seems the scale of data continues to overpower noise in the label space.”

Another important excerpt: “It is important to highlight that the training regime, learning schedules and parameters we used are based on our understanding of training ConvNets with 1M images from ImageNet. Since we do not search for the optimal set of hyper-parameters in this work (which would have required considerable computational effort), it is highly likely that these results are not the best ones you can obtain when using this scale of data. Therefore, we consider the quantitative performance reported to be an underestimate of the actual impact of data for all reported image volumes.”
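To make the "logarithmic improvement" claim concrete, here is a minimal Python sketch of what log-linear scaling implies. The function name and both coefficients are made up purely for illustration; they are not taken from the Google paper. The fleet comparison at the end additionally assumes that usable training data grows in proportion to fleet size, which is my assumption rather than an established fact.

```python
import math


def metric_under_log_scaling(num_examples: float,
                             intercept: float,
                             gain_per_decade: float) -> float:
    """Hypothetical log-linear model: metric = intercept + gain * log10(N)."""
    return intercept + gain_per_decade * math.log10(num_examples)


# Made-up coefficients, chosen only to show the shape of the curve.
INTERCEPT = 10.0
GAIN_PER_DECADE = 3.0

sizes = [10**6, 10**7, 10**8, 3 * 10**8]
scores = [metric_under_log_scaling(n, INTERCEPT, GAIN_PER_DECADE) for n in sizes]

for n, s in zip(sizes, scores):
    print(f"{n:>11,d} examples -> metric {s:.2f}")

# Under this model every 10x increase in data adds the same fixed amount.
gain_1m_to_10m = scores[1] - scores[0]
gain_10m_to_100m = scores[2] - scores[1]

# Fleet sizes from the post: over 1 million cars vs. under 10,000 combined.
# Treated here as a lower bound on the gap, in orders of magnitude.
fleet_decades = math.log10(1_000_000 / 10_000)
implied_gain = fleet_decades * GAIN_PER_DECADE
print(f"Fleet gap: {fleet_decades:.1f} decades -> at least {implied_gain:.1f} metric points")
```

The point of the sketch is only the shape: gains per tenfold increase stay constant instead of vanishing, so a fleet two orders of magnitude larger keeps paying off as long as the relationship holds and the network has the capacity to use the data.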

Facebook AI later took this even further, using a dataset of 1 billion images.

In certain applications of deep learning — and my contention is that autonomous driving is one of them — the ceiling on neural network performance is imposed by the quantity of available data.

What about labelling? As many papers have shown, various techniques such as weak/noisy labelling, self-supervised learning, and automatic curation through active learning can leverage very large quantities of data for better neural network performance without an increase in hand annotation.

In the autonomous driving subdomain of planning, imitation learning and reinforcement learning can (at least in theory, and somewhat in practice already) leverage real-world data to train neural networks without any human annotators in the loop, besides the drivers.

As a general principle of deep learning, it’s not controversial to say performance scales with data and neural network size, with no known limit as of now. It has become less controversial over the last few years that techniques like imitation learning, reinforcement learning, and self-supervised learning are highly promising, and virtually all autonomous vehicle companies, as far as I’m aware, have started to at least experiment with one or more of them, if not deploy them to their fleets.

If we apply this general principle in the specific case of Tesla, the inference is clear: Tesla is working under a much higher ceiling than everyone else. Everyone else combined, in fact.

It is my strong belief that this advantage will become plain to see over the next few years, perhaps starting as early as this year. I wouldn’t be surprised if, in a few years from now, people said it was always obvious this would happen.
