This is my attempt to explain the technical information that was presented at Tesla AI Day.

Part 1: Overview

Tesla Autopilot needs to be understood as a general-purpose driving system that can, in theory, handle any driving situation in any location. This contrasts with many other self-driving systems from Ford, GM, Mercedes, etc. that rely on pre-built high-definition maps and geographically restricted self-driving areas.

The Tesla system perceives the driving environment in real time through its eight cameras. Its vision and car control system combines backpropagation-trained neural networks with complex hand-coded C++ algorithms.

A "backpropagation-trained neural network" is the same kind of neural network used throughout the AI industry today. It is what powers voice assistants like Alexa and Siri, Netflix recommendations, and Apple's face recognition technology.

These neural networks (NNs) have some similarities to, and some differences from, the way our brains work.

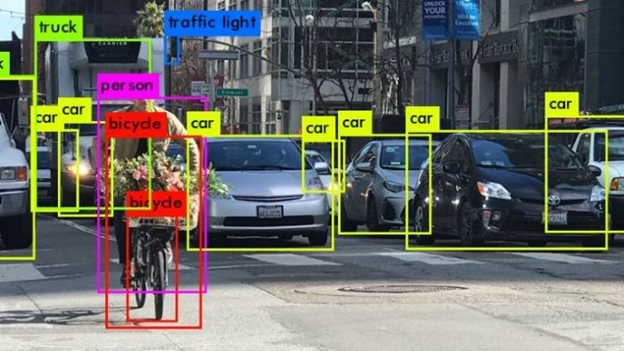

Production systems train such a NN using millions of examples that explicitly tell the NN what it is supposed to learn. For instance, a vision NN will be shown millions of images, each carrying one or more labels identifying what is in the image. To train a NN for complex scene analysis, a person draws polygons around objects and labels what is inside each one.

For example, in the image below, labels are assigned to each object you want the system to know about.

Once you have millions of labeled images, you can train a NN on this data. Training is very compute-intensive and is normally done on huge GPU clusters; it isn't unusual for a large data set to take several days to train, even on a very large cluster.

After training, you have a NN with millions of internal parameters learned from the data. You can then download this NN onto a typically much smaller "inference processor" and run it to do scene analysis or whatever else you are trying to do. With a trained NN, an inference processor typically analyzes a scene in a fraction of a second per image. Tesla, of course, built its own AI inference chip, and each car has two of them.
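To make "backpropagation-trained" and "inference" a little more concrete, here is a minimal sketch in plain Python with no ML library assumed. A toy two-layer network is trained by backpropagation on a handful of hypothetical labeled points; inference is then just the forward pass with the learned parameters frozen. The data, layer sizes, and learning rate are illustrative assumptions; production networks are vastly larger and are trained with dedicated frameworks on GPU clusters.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Random starting weights for a tiny 2-layer network (hypothetical sizes)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return w1, b1, w2, 0.0


def forward(params, x):
    """Inference: a tanh hidden layer, then a sigmoid output in (0, 1)."""
    w1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    return h, sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)


def loss(params, data):
    """Mean binary cross-entropy over the labeled examples."""
    total = 0.0
    for x, label in data:
        _, y = forward(params, x)
        y = min(max(y, 1e-12), 1 - 1e-12)
        total -= label * math.log(y) + (1 - label) * math.log(1 - y)
    return total / len(data)


def train_step(params, data, lr):
    """One full-batch gradient step; the gradients come from backpropagation."""
    w1, b1, w2, b2 = params
    gw1 = [[0.0] * len(row) for row in w1]
    gb1 = [0.0] * len(b1)
    gw2 = [0.0] * len(w2)
    gb2 = 0.0
    for x, label in data:
        h, y = forward(params, x)
        dz2 = y - label  # d(loss)/d(output pre-activation) for sigmoid + cross-entropy
        gb2 += dz2
        for j in range(len(w2)):
            gw2[j] += dz2 * h[j]
            dz1 = dz2 * w2[j] * (1 - h[j] ** 2)  # chain rule back through tanh
            gb1[j] += dz1
            for i, xi in enumerate(x):
                gw1[j][i] += dz1 * xi
    n = len(data)
    w1 = [[w - lr * g / n for w, g in zip(row, grow)] for row, grow in zip(w1, gw1)]
    b1 = [b - lr * g / n for b, g in zip(b1, gb1)]
    w2 = [w - lr * g / n for w, g in zip(w2, gw2)]
    return w1, b1, w2, b2 - lr * gb2 / n


# Hypothetical labeled examples: label 1 when the two features sum to more than 1.
DATA = [([0.0, 0.0], 0), ([0.2, 0.1], 0), ([0.1, 0.7], 0),
        ([1.0, 1.0], 1), ([0.9, 0.8], 1), ([0.8, 0.9], 1)]

params = init_params(n_in=2, n_hidden=4)
initial_loss = loss(params, DATA)
for _ in range(1000):
    params = train_step(params, DATA, lr=0.1)
final_loss = loss(params, DATA)

# "Deploying" the trained network just means shipping `params` and calling forward().
_, p = forward(params, [0.95, 0.9])
```

All the expensive work happens in the training loop; the deployed part is only `forward`, which is why a much smaller inference chip can run a network that took a big cluster to train.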

Now, when Elon says that everyone using Autopilot is helping to train the NN, this is only partially true. First, your particular car isn't learning anything; it can only run the downloaded NN to understand visual scenes. What it can do, though, is upload roughly 10-second video clips when Tesla requests them.

For example, when Tesla wanted to improve detection of "cut-ins" (cars merging into your lane on the freeway), they wrote a query that ran on every car in the Tesla fleet. A car's computer would trigger whenever it saw another car moving into its lane and then upload a clip of roughly 10 seconds to the Tesla datacenter. After a few days, Tesla would have, say, 10,000 video clips of this happening. These clips are effectively "auto labeled", since each one is known to contain a car cutting in. Tesla then trains its NN on these labeled clips. The NN picks out and learns cues from the video by itself, like a blinker turning on, a car starting to drift over, or its angle subtly changing. That's the fundamental power of a NN: given labeled data, it can learn to recognize patterns automatically, without (much) human programming.
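The fleet "query" can be imagined as a small trigger function running on each car. The sketch below is hypothetical, since Tesla has not published its trigger code: it watches a tracked car's lateral offset from the center of our lane, fires when that car crosses from outside the lane to inside it, and returns a clip window around that moment. The lane half-width and the 5-second margins are assumed values.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

LANE_HALF_WIDTH_M = 1.8  # assumed half-width of our lane, in meters


@dataclass
class TrackPoint:
    t: float                 # timestamp, seconds
    lateral_offset_m: float  # other car's offset from our lane center, meters


def find_cut_in(track: List[TrackPoint]) -> Optional[float]:
    """Return the time the tracked car first moves into our lane, or None."""
    for prev, cur in zip(track, track[1:]):
        was_outside = abs(prev.lateral_offset_m) > LANE_HALF_WIDTH_M
        now_inside = abs(cur.lateral_offset_m) <= LANE_HALF_WIDTH_M
        if was_outside and now_inside:
            return cur.t
    return None


def clip_window(event_t: float, before_s: float = 5.0, after_s: float = 5.0) -> Tuple[float, float]:
    """Roughly 10 seconds of video around the event; the event itself serves as the label."""
    return (event_t - before_s, event_t + after_s)


# A neighboring car drifting toward our lane, one sample per second.
track = [TrackPoint(float(t), off) for t, off in enumerate([3.5, 3.0, 2.2, 1.5, 0.5])]
event = find_cut_in(track)
window = clip_window(event) if event is not None else None
```

Because the trigger only fires when a cut-in actually happened, every uploaded clip arrives with its label already attached, which is what makes this kind of collection cheap.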

After retraining, Tesla deploys the new NN into the fleet in shadow mode (running, but not controlling the car) and asks the fleet to report when its cut-in predictions are wrong. Cars running in shadow mode flag both kinds of error: failing to predict a cut-in that happens, and predicting a cut-in that never happens. These exceptions are uploaded to the datacenter, and a new NN is trained on this exception data. The newer NN is then deployed and run in shadow mode again. This cycle repeats as many times as it takes for the network to get really good at prediction, at which point it is finally deployed to the fleet as a working update.
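Shadow-mode bookkeeping boils down to comparing each prediction with what actually happened. In this minimal sketch the record format is a hypothetical stand-in; the two error types described above are collected separately so they can be uploaded as new training examples.

```python
from typing import Iterable, List, Tuple

# Hypothetical record: (clip_id, predicted_cut_in, actual_cut_in)
Record = Tuple[str, bool, bool]


def shadow_mode_exceptions(records: Iterable[Record]) -> Tuple[List[str], List[str]]:
    """Split shadow-mode mistakes into missed cut-ins and false alarms."""
    missed, false_alarms = [], []
    for clip_id, predicted, actual in records:
        if actual and not predicted:
            missed.append(clip_id)        # failed to predict a real cut-in
        elif predicted and not actual:
            false_alarms.append(clip_id)  # predicted a cut-in that never happened
    return missed, false_alarms


records = [("a", True, True), ("b", False, True), ("c", True, False), ("d", False, False)]
missed, false_alarms = shadow_mode_exceptions(records)
```

Correct predictions ("a" and "d") produce nothing to upload, so the data sent back is concentrated on exactly the cases the network still gets wrong.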

When Tesla says its fleet is its secret weapon versus everyone else, it isn't boasting. Tesla effectively has 1M+ mobile Tesla AI chips at its disposal to support this kind of training. Thought of in these terms, Tesla has by far the biggest AI supercomputer on the planet.

OK, with that as background, let's get to how the Tesla NN actually works.

Part 2: Vision Architecture

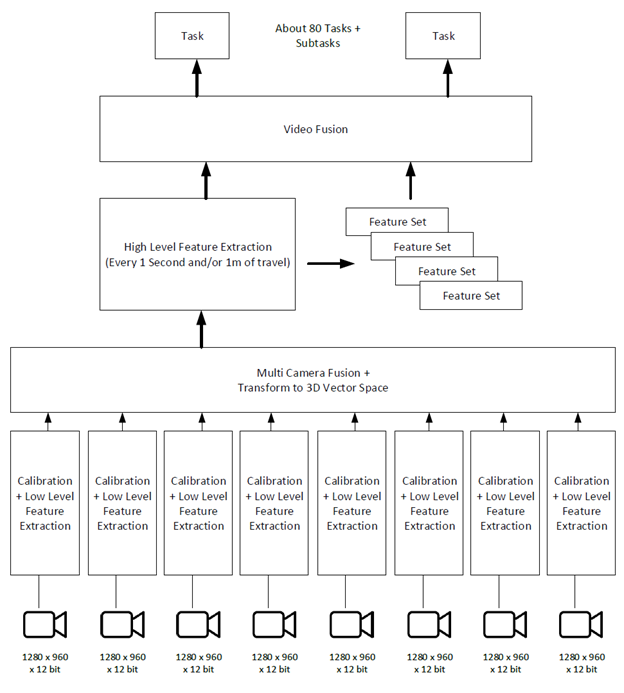

This figure shows the overall architecture of the vision system. This is only for perception, meaning understanding what you are seeing. Not shown is the driving and control system which will be discussed later.

This is very high level – each of these boxes will typically contain a few to a dozen neural nets each connected in various complex ways.

The images from all 8 cameras first pass through a calibration neural net that warps each one into a "standard" image that should look the same across the entire fleet. When you first buy the car, Autopilot goes through a several-day calibration exercise in which it trains an in-car neural network to learn how far each camera is off baseline. A camera could be slightly twisted, or pointed too far skyward, or whatever, and the car needs to know these deviations so that the image presented to the Autopilot neural network looks the same across all cars. This is the only time a neural network is actually trained in the car itself.
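Whatever estimates the deviations, the warp itself can be expressed with standard camera geometry. As an illustrative sketch, not necessarily Tesla's method: if a camera is only rotated relative to its nominal mounting, the homography H = K_std * R * K_cam^-1 maps its pixels onto a virtual "standard" camera. The focal length, principal point, and yaw error below are made-up values.

```python
import math


def matmul3(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def intrinsics(f, cx, cy):
    """Pinhole camera matrix K (square pixels and no skew assumed)."""
    return [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]


def intrinsics_inv(f, cx, cy):
    """Closed-form inverse of the K above."""
    return [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]


def yaw_rotation(theta):
    """Rotation about the camera's vertical (y) axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rectifying_homography(cam, std, r_cam_to_std):
    """H = K_std * R * K_cam^-1 for two cameras that differ only by a rotation."""
    return matmul3(intrinsics(*std), matmul3(r_cam_to_std, intrinsics_inv(*cam)))


def warp_pixel(h, u, v):
    """Apply H to pixel (u, v) in homogeneous coordinates."""
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    return x / w, y / w


# Hypothetical camera: f = 1000 px, principal point (640, 360), mounted 0.01 rad off in yaw.
cam = std = (1000.0, 640.0, 360.0)
h = rectifying_homography(cam, std, yaw_rotation(0.01))
u, v = warp_pixel(h, 640.0, 360.0)
```

A yaw error of just 0.01 rad moves the image center by about 10 pixels (1000 * tan 0.01), which shows why uncorrected mounting differences would make the same scene look different to the network from car to car.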

Once past this calibration (warping) stage, standard low-level features are extracted from the images to compress the huge amount of image data into something more manageable without losing important information.

The next step is to merge all eight cameras into one view while at the same time building a 3D vector space representation of the world.

Creating a 3D vector space representation from a flat 2D image is something our visual cortex does so well, and so automatically, that you probably don't even realize you are doing it.

When you look at an image like this:

You just know that the red sign is farther away than those three cars, that the American flag is at about the same distance as the car with the headlights, and that this car is well behind the white van. The Autopilot NN has to figure this out too, and its output is a three-dimensional model of the world with key features located in that 3D space.

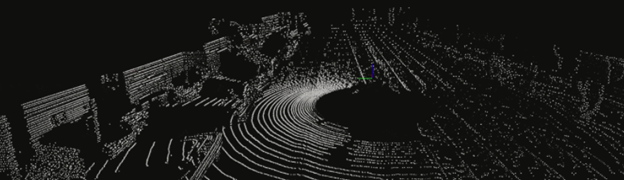

Tesla's 3D vector space model is a fairly dense raster in which each point carries its distance from the car. It is similar to the point cloud a LIDAR system would generate:

Tesla's 3D vector space goes further: it attaches velocities to moving objects, locates curbs and drivable areas, is in color, and has a higher resolution. It is also quite a bit more sophisticated than a LIDAR plot because it includes occluded objects, for instance a pedestrian who is currently behind a car and can't be seen in the current video frame. The network tracks these occluded objects using a memory-based video module, described below.
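To see why a per-pixel distance raster resembles a LIDAR point cloud, here is a minimal sketch that back-projects a depth image into 3D points with a pinhole camera model. It assumes each raster value is depth along the camera's optical axis (an assumption; the text only says "distance from the car") and skips pixels with no estimate.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[Optional[float]]], f: float, cx: float, cy: float) -> List[Point]:
    """Back-project each pixel (u, v) with depth z into camera coordinates (x, y, z)."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # no valid depth for this pixel
            x = (u - cx) * z / f  # to the right of the optical axis
            y = (v - cy) * z / f  # below the optical axis (image v grows downward)
            points.append((x, y, z))
    return points


# A tiny 2x2 raster with two valid depths, using toy intrinsics.
cloud = depth_to_points([[2.0, None], [4.0, 0.0]], f=1.0, cx=0.0, cy=0.0)
```

A LIDAR produces (x, y, z) points like these directly; the vector space described above also carries velocities, curbs, and occluded objects, which this sketch ignores.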

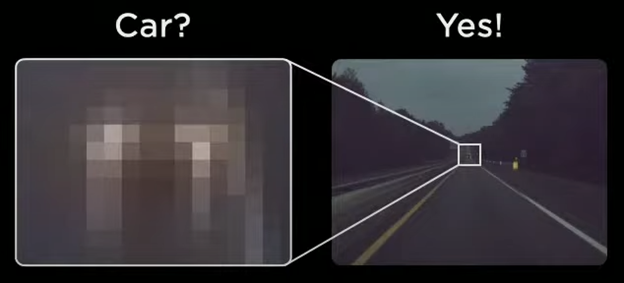

Note that Tesla's low-level NN vision system is very sophisticated. Much like the human cortex, these networks pass information from the top of the hierarchy down to the lowest levels. Here's an example where you are trying to figure out what a 10x10-pixel patch is (on the left).

Because the NN knows that these blurry 10x10 pixels sit at the vanishing point of the image, it can infer that they are a car's headlights.

Once camera fusion has produced the 3D vector space, Tesla adds time- and location-based memory. The example they gave: as you drive toward an intersection, you might roll over lane markings indicating that the leftmost lane is a turn lane.

By the time you reach the intersection, a couple of seconds have passed since you rolled over the left-turn lane marker, so the system needs some kind of memory of these features once they are out of view. The vision engine snapshots the extracted features it is seeing at a short time interval or every 1 m of travel, building a list of recently seen visual cues. Again, this is very similar to the way humans drive: we may not consciously remember rolling over a left-turn marker, but our own neural network does and uses that information to guide our driving.
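A running memory like this can be sketched as bounded queues of feature snapshots. This hypothetical version keeps two of them, one pushed by elapsed time and one by distance traveled. Keeping them separate is a design choice assumed here: while the car sits at a red light, the time queue keeps refreshing, but the distance queue still remembers the lane arrow you rolled over. The 1 m spacing follows the text; the time interval and the tiny queue lengths are made-up demo values.

```python
from collections import deque


class SnapshotQueue:
    """Bounded memory of feature snapshots, pushed every `interval` units of a
    monotonically increasing quantity (seconds for time, meters for odometer)."""

    def __init__(self, interval: float, maxlen: int):
        self.interval = interval
        self.snapshots = deque(maxlen=maxlen)  # oldest snapshots fall off the front
        self._last = None

    def maybe_push(self, position: float, features) -> bool:
        if self._last is None or position - self._last >= self.interval:
            self.snapshots.append(features)
            self._last = position
            return True
        return False


time_queue = SnapshotQueue(interval=0.05, maxlen=3)  # assumed: every 50 ms
space_queue = SnapshotQueue(interval=1.0, maxlen=3)  # every 1 m of travel

# Drive over a left-turn arrow, then stop at the light (odometer stays at 3.0 m).
for t, odo, feats in [(0.00, 0.0, "lane"), (0.10, 1.0, "left-turn arrow"),
                      (0.20, 2.0, "lane"), (0.30, 3.0, "stop line"),
                      (0.40, 3.0, "stopped"), (0.50, 3.0, "stopped")]:
    time_queue.maybe_push(t, feats)
    space_queue.maybe_push(odo, feats)
```

After the loop, the time queue has forgotten the arrow while the distance queue still holds it, which is the information the car needs when it reaches the intersection.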

This "video module", which adds a running memory of recent features, is very powerful, and I'm not aware of any other self-driving system that does anything like it.

Finally, the output of this video network, which combines the current visual scene with recent visual cues projected into the 3D vector space, is consumed by around 80 other neural networks that Tesla calls "tasks". Each task does one very specific thing: locating and understanding traffic lights, perceiving traffic cones, seeing stop signs, identifying cars and their velocities, and so on. The output of these tasks is high-level semantic information like "30 m ahead there is a red traffic light" or "the car 30 m in front of me is likely parked".
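Structurally, this is one expensive shared representation feeding many cheap, specialized heads. Here is a minimal sketch of that fan-out, with hand-written rules standing in for what are actually neural networks; the feature format and task names are hypothetical.

```python
from typing import Callable, Dict, List

Feature = dict  # hypothetical: one object located in the shared vector space


def traffic_light_task(features: List[Feature]) -> List[str]:
    return [f"{f['distance_m']:.0f} m ahead there is a {f['state']} traffic light"
            for f in features if f["kind"] == "traffic_light"]


def parked_car_task(features: List[Feature]) -> List[str]:
    return [f"the car {f['distance_m']:.0f} m in front is likely parked"
            for f in features
            if f["kind"] == "car" and f["speed_mps"] < 0.1 and f.get("at_curb", False)]


TASKS: Dict[str, Callable[[List[Feature]], List[str]]] = {
    "traffic_lights": traffic_light_task,
    "parked_cars": parked_car_task,
}


def run_tasks(shared: List[Feature]) -> Dict[str, List[str]]:
    """Every task reads the same shared output; none of them recomputes it."""
    return {name: task(shared) for name, task in TASKS.items()}


shared = [
    {"kind": "traffic_light", "distance_m": 30.0, "state": "red"},
    {"kind": "car", "distance_m": 30.0, "speed_mps": 0.0, "at_curb": True},
]
outputs = run_tasks(shared)
```

Adding a new task means adding one entry to `TASKS` without touching the shared stage, which is what makes dozens of tasks affordable on one chip.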

Part 3: Planning and Control

Now that the NN understands where all the objects are, what they are, how fast and in what direction they are moving, what is road surface, where the curbs are, what the signal environment (traffic lights, road signs) is, and what recently happened, Autopilot must do three things.

First, it must predict what all the other moving objects are going to do in the near future. Second, it must plan what it is going to do, based on an overall plan (like following a GPS route). Third, it has to tell the car what to do.

This can get quite complicated. Take a simple example: the car is in the rightmost lane of a three-lane road and needs to make a left turn up ahead, while there are cars in the middle lane. To do this properly, the car must merge between two cars in the center lane, then merge into the far left lane, and do all of this without going too fast or too slow, or accelerating or decelerating too hard.

Currently, Tesla uses a search algorithm coded in C++ to figure this out. It essentially simulates a couple of thousand different scenarios in which the car and the other cars on the road interact. It does this at a fairly coarse-grained level; the figure they gave was 2,500 scenarios simulated and searched in 1.5 ms. All these simulations use physics-based models, meaning idealized representations of cars in a simple road model of lanes, dividers, and so on.
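Simulating many candidate scenarios and keeping the best one can be shown in miniature. This hypothetical sketch is not Tesla's planner. It tries every combination of a gap in the target lane and a constant acceleration, rolls each one forward with idealized constant-acceleration physics, discards combinations where our car would not fit in the gap, and scores the rest with a made-up cost. Cars in the target lane are assumed to hold a constant speed.

```python
import itertools
from typing import List, Optional, Tuple

Gap = Tuple[float, float, float]  # (rear car x, front car x, lane speed) in meters and m/s


def position_after(x0: float, v0: float, accel: float, t: float) -> float:
    """Idealized constant-acceleration kinematics along the road."""
    return x0 + v0 * t + 0.5 * accel * t * t


def plan_merge(ego_x: float, ego_v: float, gaps: List[Gap], accels: List[float],
               merge_time_s: float, margin_m: float = 8.0) -> Optional[Tuple[float, float, Gap]]:
    """Return (cost, accel, gap) for the cheapest feasible scenario, or None."""
    best = None
    for gap, accel in itertools.product(gaps, accels):
        rear_x, front_x, lane_v = gap
        ego_end = position_after(ego_x, ego_v, accel, merge_time_s)
        rear_end = position_after(rear_x, lane_v, 0.0, merge_time_s)
        front_end = position_after(front_x, lane_v, 0.0, merge_time_s)
        if not (rear_end + margin_m <= ego_end <= front_end - margin_m):
            continue  # scenario rejected: we would not fit in this gap
        speed_mismatch = abs(ego_v + accel * merge_time_s - lane_v)
        cost = abs(accel) + 0.1 * speed_mismatch  # hypothetical comfort + speed-matching cost
        if best is None or cost < best[0]:
            best = (cost, accel, gap)
    return best


gaps = [(-20.0, 20.0, 25.0), (40.0, 70.0, 25.0)]
best = plan_merge(ego_x=0.0, ego_v=25.0, gaps=gaps, accels=[-2.0, -1.0, 0.0, 1.0, 2.0],
                  merge_time_s=3.0)
```

Here only 10 scenarios are evaluated; the point of a fast coarse search is that the same loop can afford thousands of them within one planning cycle.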

At the end of this exercise, the path planner has a rough plan for the next 15 seconds of travel. It then creates a very detailed plan that maps out the exact turning radius, travel path, and speed changes required to make the driving as smooth as possible. This too is done with a C++ search algorithm, but a different technique: it takes into account lateral acceleration, lateral jerk, collision risk, and traversal time, merges them into a single cost function, and minimizes that "total cost" for the refined route. In the example they showed, planning a smooth path for 10 seconds of driving took about 100 iterations of this search.
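The "total cost" idea can be written as a weighted sum of the terms listed above. In this minimal sketch the weights are hypothetical and the collision-risk score is simply passed in. Lateral acceleration and jerk are estimated from sampled lateral positions by finite differences, and the lowest-cost candidate wins. The real optimizer iterates on a path rather than picking from a fixed list, so treat this only as an illustration of the cost terms.

```python
from typing import Dict, List


def diff(values: List[float], dt: float) -> List[float]:
    """Finite-difference derivative of evenly spaced samples."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def trajectory_cost(lateral_m: List[float], dt: float, collision_risk: float,
                    w: Dict[str, float]) -> float:
    """Weighted sum of lateral acceleration, lateral jerk, collision risk, and traversal time."""
    accel = diff(diff(lateral_m, dt), dt)
    jerk = diff(accel, dt)
    traversal_time = dt * (len(lateral_m) - 1)
    return (w["accel"] * sum(a * a for a in accel) * dt
            + w["jerk"] * sum(j * j for j in jerk) * dt
            + w["collision"] * collision_risk
            + w["time"] * traversal_time)


def lane_change(n: int, width_m: float = 3.5) -> List[float]:
    """Smooth lateral profile from 0 to width_m using smoothstep 3s^2 - 2s^3."""
    return [width_m * (3 * s * s - 2 * s ** 3) for s in (i / (n - 1) for i in range(n))]


weights = {"accel": 1.0, "jerk": 0.1, "collision": 100.0, "time": 1.0}  # hypothetical
dt = 0.1
candidates = {
    "smooth": lane_change(31),             # 3-second lane change
    "abrupt": [0.0] * 15 + [3.5] * 16,     # same lane change as a sudden jump
}
best = min(candidates, key=lambda k: trajectory_cost(candidates[k], dt, 0.0, weights))
```

Squaring acceleration and jerk penalizes sudden lateral moves far more than gentle ones, which is why the smooth profile wins even though both take the same time.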

This planning function, along with the entire visual perception system before it, runs every 27 ms (roughly 36 times a second). So Autopilot is sensing and re-planning 36 times a second to ensure that nothing catches it unprepared for whatever weird crap happens around the car. Autopilot isn't omniscient and can't tell that the idiot driver you are merging in front of is rage-texting a Twitter reply, but it can notice, more quickly than you can, that said driver's car is on a collision course, and react accordingly.

Tesla is working on a new planning algorithm that relies mostly on a planning NN instead of the hand-coded C++ used today. The main benefit of a NN planner will probably be speed, but it might produce better plans as well.

Oddly enough, one of the toughest things to plan is navigating a parking lot. Tesla showed how the NN planner under development handles this task in much less time than their current planner.
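Parking lots are hard partly because the search space is large and full of obstacles. To illustrate the kind of classical search a learned planner aims to speed up (an illustration, not Tesla's code), here is a standard A* grid search that also counts node expansions, a common measure of how much work a search did. The grid and the Manhattan-distance heuristic are assumptions.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Tuple[Optional[int], int]:
    """Shortest 4-connected path length on a grid (1 = blocked) and the number of expansions."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])  # Manhattan distance

    best_cost = {start: 0}
    frontier = [(heuristic(start), 0, start)]
    expansions = 0
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale queue entry; a cheaper route to this cell was already found
        expansions += 1
        if cell == goal:
            return cost, expansions
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    heapq.heappush(frontier, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None, expansions


lot = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],  # a row of parked cars with one opening on the right
    [0, 0, 0, 0],
]
length, expanded = astar(lot, start=(0, 0), goal=(2, 0))
```

The goal is two cells away as the crow flies, but the search has to discover the 8-step detour through the opening; in a real lot with many rows, the number of expansions grows quickly, which is where a faster planner pays off.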

Part 4: Training

OK, I've talked about how the various Tesla NNs perceive the world and, eventually, plan how to drive around it. But how do you teach the NN?

As I described in part 1, you need to give a NN millions of labeled examples for it to learn what you are trying to teach it.

Tesla started off the same way the rest of the industry does, with humans drawing polygons around features in images. This does not scale. Tesla soon created tools for labeling directly in their vector space, the 3D representation of the world stitched together from all 8 cameras. Label a curb once in this enhanced view, and it is automatically labeled in the corresponding images from the cameras that see it, maybe three of them.
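Labeling once in 3D and propagating the label to the cameras is, at its core, a projection. In this minimal sketch, the pinhole cameras and their rotations and translations are hypothetical values. The labeled 3D point is transformed into each camera's frame, and it becomes a 2D label only in cameras where it lands in front of the lens and inside the image.

```python
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Matrix3 = List[List[float]]


def to_camera(point: Vec3, rotation: Matrix3, translation: Vec3) -> Vec3:
    """Vector-space coordinates -> camera coordinates: R * p + t."""
    return tuple(sum(rotation[i][k] * point[k] for k in range(3)) + translation[i]
                 for i in range(3))


def project(point_cam: Vec3, f: float, cx: float, cy: float,
            width: int, height: int) -> Optional[Tuple[float, float]]:
    x, y, z = point_cam
    if z <= 0:
        return None  # behind this camera
    u, v = f * x / z + cx, f * y / z + cy
    return (u, v) if 0 <= u < width and 0 <= v < height else None


def propagate_label(point: Vec3, cameras: Dict[str, dict]) -> Dict[str, Tuple[float, float]]:
    """Pixel location of one 3D label in every camera that can see it."""
    labels = {}
    for name, cam in cameras.items():
        pixel = project(to_camera(point, cam["R"], cam["t"]), cam["f"], cam["cx"], cam["cy"],
                        cam["width"], cam["height"])
        if pixel is not None:
            labels[name] = pixel
    return labels


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
turned_around = [[-1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]  # faces backward
common = {"f": 1000.0, "cx": 640.0, "cy": 360.0, "width": 1280, "height": 720}
cameras = {
    "front": {"R": identity, "t": (0.0, 0.0, 0.0), **common},
    "rear": {"R": turned_around, "t": (0.0, 0.0, 0.0), **common},
}
curb_point = (0.0, 0.0, 10.0)  # hypothetical labeled point 10 m ahead
labels = propagate_label(curb_point, cameras)
```

One human click in 3D yields a label in the front camera, and the rear camera is correctly skipped because the point is behind it.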

They then realized that their fleet drives through the same intersection thousands of times. By tagging an intersection with GPS coordinates, a single human labeling effort in vector space could auto label thousands of images taken in all sorts of weather, at different times of day, and from different viewing angles. So once a stoplight is labeled as a stoplight in 3D vector space, thousands of future drives through the same intersection, from different directions and in different weather and lighting, can label that stoplight automatically with no further human input.
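Reusing one human label across many drives amounts to keying labels by location. Here is a deliberately naive, hypothetical sketch: coordinates are snapped to a fixed grid cell (roughly 11 m of latitude), and any later clip recorded in the same cell inherits the labels stored there. A real system would have to deal with GPS error and cell boundaries, which this ignores.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

CELL_DEG = 1e-4  # assumed cell size: roughly 11 m of latitude


def location_key(lat: float, lon: float) -> Tuple[int, int]:
    """Snap a GPS coordinate to a grid cell."""
    return (round(lat / CELL_DEG), round(lon / CELL_DEG))


class LocationLabels:
    def __init__(self) -> None:
        self._labels: Dict[Tuple[int, int], List[str]] = defaultdict(list)

    def add(self, lat: float, lon: float, label: str) -> None:
        """Store one human-made label at a location."""
        self._labels[location_key(lat, lon)].append(label)

    def labels_for_clip(self, lat: float, lon: float) -> List[str]:
        """Labels that a new clip recorded at this location inherits automatically."""
        return list(self._labels.get(location_key(lat, lon), []))


store = LocationLabels()
store.add(37.4000, -122.1000, "stoplight")
later_drive = store.labels_for_clip(37.40001, -122.10002)
elsewhere = store.labels_for_clip(37.4100, -122.1000)
```

Every later drive through that cell gets the "stoplight" label for free, whatever the weather or time of day.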

Tesla then added fully automatic labeling. A car's AI chip can only recognize so much, but a 1,000-GPU cluster is much more powerful and doesn't have millisecond time constraints, so such a cluster can be used to automatically label novel images.

Tesla used this auto labeling capability to remove radar in about 3 months of effort in early 2021. Part of the problem they faced when removing radar was degraded video: for example, when a passing snowplow dumps tons of snow onto the car, occluding, well, everything.

Tesla realized they needed to auto label poor-visibility situations, so they asked the fleet for examples of moments when the car's NN temporarily had zero visibility. They auto labeled 10,000 poor-visibility videos in a week, work Tesla said would have taken several months with humans.

After retraining the vision NN on this batch of labeled poor-visibility video, the system was able to remember and predict where everything was in the scene while visibility was temporarily poor, similar to how humans handle such situations.
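One reason an offline labeler can do better here (an assumption; the text above only says the cluster has no millisecond time constraints) is hindsight: offline, it can look at frames after the occlusion as well as before it. This minimal sketch fills a tracked object's missing positions by linear interpolation between the last sighting before a gap and the first sighting after it. The car cannot do this in real time because it does not have the future frames.

```python
from typing import List, Optional


def fill_gaps_with_hindsight(track: List[Optional[float]]) -> List[Optional[float]]:
    """Linearly interpolate None entries that have known positions on both sides.
    Gaps at the very start or end of the clip lack one anchor and stay None."""
    filled = list(track)
    i, n = 0, len(filled)
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        j = i
        while j < n and filled[j] is None:
            j += 1  # j is the first visible frame after the gap (or n)
        if i > 0 and j < n:
            before, after = filled[i - 1], filled[j]
            span = j - (i - 1)
            for k in range(i, j):
                filled[k] = before + (after - before) * (k - (i - 1)) / span
        i = j
    return filled


# Object position (m) per frame; snow blocks the view for two frames.
recovered = fill_gaps_with_hindsight([0.0, None, None, 3.0, 4.0])
```

Labels produced this way teach the on-car network what "still there, just hidden" looks like, even though the car itself only ever sees the past.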

Using Simulations

Simulation, meaning building a 3D world as you would for a detailed video game, is something Tesla is starting to use, mostly for edge cases. They showed examples of people or dogs running on a freeway, a scene with hundreds of pedestrians, and a new type of truck that no one has seen before (the Cybertruck!).

Even the auto labeler has a hard time with hundreds of pedestrians as you might have in Hong Kong or NYC, so creating a simulation where the system knows exactly where everyone is and how fast they are walking is a better solution to train with.

They work really hard to make accurate simulations. They have to mimic what the camera will see in real world conditions. Lighting and ray tracing must be very accurate. Noise in road surfaces must be introduced and they even have a special NN used just to add noise and texture to generated images.

Tesla now has thousands of unique vehicles, pedestrians, animals, and props in their simulation engine, each of which moves and looks realistic. They also have 2,000 miles of very diverse and unique roads.

And when they want to create a scene, the scene is most often created procedurally via computer algorithms as opposed to having an artist create the scene. They can ask for nighttime, daytime, raining, snowing, etc.

Finally, because they can automatically build whatever kind of visual world they want, they can now use adversarial machine learning techniques: one system tries to create scenes that break the car's visual perception system, those failures are used to train the vision system further, and the improved system goes back into a closed training loop.
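Procedural generation plus a failure-seeking loop can be sketched in a few lines. In this hypothetical example, scenes are sampled from parameter ranges with a seeded random generator. A stand-in "perception check" (a made-up rule, not a real model) decides which scenes the system gets wrong, and the failing scenes are collected as new training material. Real adversarial methods steer scene parameters toward failures rather than sampling blindly; random search is used here only as the simplest version of that idea.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scene:
    time_of_day: str
    weather: str
    pedestrians: int


def generate_scene(rng: random.Random) -> Scene:
    """Procedurally sample scene parameters instead of having an artist build the scene."""
    return Scene(
        time_of_day=rng.choice(["day", "dusk", "night"]),
        weather=rng.choice(["clear", "rain", "snow", "fog"]),
        pedestrians=rng.randint(0, 300),
    )


def perception_fails(scene: Scene) -> bool:
    """Hypothetical stand-in for running the vision system against simulator ground truth."""
    return scene.weather in ("fog", "snow") and scene.pedestrians > 150


def find_failures(n_trials: int, seed: int) -> List[Scene]:
    """Sample scenes and keep only the ones that break the (stand-in) perception check."""
    rng = random.Random(seed)
    return [s for s in (generate_scene(rng) for _ in range(n_trials)) if perception_fails(s)]


hard_cases = find_failures(n_trials=1000, seed=42)
# Next step in the loop (not shown): render hard_cases, retrain, and search again.
```

Seeding the generator makes each batch of hard cases reproducible, which matters when the same scenes have to be re-rendered after the network is retrained.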

To say that Tesla's learning pipeline is next level is an understatement. It is now extremely sophisticated and is arguably the most powerful in the world.

Dojo

Dojo is Tesla's next-generation training data center. Right now, they "make do" with thousands of state-of-the-art Nvidia A100 GPUs clustered together. Tesla thought they could do better, so they custom-designed their own AI training chip (not to be confused with the AI inference chip inside every Tesla car). The training chip is far more powerful and has been designed to work as one piece of a huge supercomputer cluster.

I won't say much about Dojo, except that it is world class in many ways: packaging, cooling, power integration, and huge communications bandwidth (easily 10x current capabilities). Dojo is still being built; I'd guess it is 4-6 months away from being used in production. When it does start getting used, Tesla's productivity will increase: they'll basically be able to do more, faster.

Part 5: Future Work & Conclusions

From watching this and other Tesla AI videos from the last two years, you can tell that Tesla has been racing to get to a working FSD vision system. When Tesla releases its v10 FSD Beta (only weeks away), it will be a great system, but there are a lot of optimizations Tesla is working on that will roll out over the next year or so. Here are a few they talked about.

You might have noticed that Tesla's vision system merges all eight cameras quite late in processing. Fusing them earlier in the pipeline could potentially save a lot of in-car processing time.

The vision system produces a fairly dense 3D raster of the world, which the rest of the processing system (like those 80+ tasks) must then interpret. Tesla is exploring ways to produce a simpler, object-based representation of the world that would be much easier for the recognition tasks to process. By the way, this is one of those problems that sounds easy but is actually mind-bendingly challenging.

As mentioned, Tesla is working on a neural network planner which would reduce path planning compute time significantly.

Their core in-car NNs use high-precision floating point, but there is an opportunity to use lower-precision floating point and, in certain cases, maybe go all the way down to 8-bit integer computation.
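Going down to 8-bit integers usually means storing each value as a small integer plus a shared scale factor. Below is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several (which scheme Tesla uses isn't stated). Values are divided by a scale chosen so that the largest magnitude maps to 127, rounded, and multiplied back by the scale when needed; the round-trip error per value is at most half a scale step.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map [-max|v|, max|v|] onto the integers [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [qi * scale for qi in q]


weights = [0.5, -1.0, 0.25, 0.003]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each weight now needs one byte instead of four, and integer multiply-accumulate hardware can do the heavy arithmetic; the trade-off is the small rounding error, which is why it only works "in certain cases" without hurting accuracy.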

And these are only some broad areas that are being worked on. No doubt each individual NN and piece of code could use some optimization care and love.

Conclusions

All these words, and I've only given you a top-level summary of what was presented at Tesla AI Day. Tesla really opened their kimono and gave way, way more detail than I've written here, at a fairly deep technical level. Indeed, you really had to be an AI researcher or programmer to understand everything that was said.

Companies simply do not give deep technical roadmaps like this to the public. Obviously Tesla was trying to recruit AI researchers, but even so, exposing so much of their architecture was a real OG move. The subtext is that Tesla doesn't care if you try to copy them, because by the time you re-create everything, they will have advanced that much further ahead.

The bottom line is that, in my opinion, Tesla can indeed deliver Full Self Driving with this architecture. "When" is always a tough thing to predict, but it appears that all the pieces are in place, and certainly we've seen some pretty impressive FSD beta videos.

Part 1: Overview

Tesla autopilot needs to be understood as a general purpose driving system that can in theory handle any driving situation in any location. This is opposed to many other self driving systems from Ford, GM, Mercedes, etc. that rely on pre-defined high definition maps and geographically locked self driving areas.

The Tesla system perceives the driving environment in real time through its eight cameras. The Tesla vision and car control system uses backpropagation trained neural networks in combination with complex C++ coded algorithms.

A " backpropagation trained neural network" is the same kind of neural network that is currently used throughout the AI industry today. It is what powers voice assistants like Alexa and Siri, Netflix recommendations, and Apple's face recognition technology.

These neural networks (NN) have some differences and some similarities with the way our brains work.

Production systems will train such a NN using millions of examples that explicitly tells the NN what it is supposed to be learning from these examples. For instance, a visual NN will be shown millions of images with each image also carrying one or more labels identifying what is in the image. To train a NN for complex scene analysis, a person would draw lines around objects, identifying what is in each drawn polygon.

For example, in the image below, labels are assigned to each object you want the system to know about.

Once you have millions of labeled images, you can train a NN on this data. Training is very compute intensive and is normally done on huge GPU clusters. It isn't unusual to take several days to train a large data set on a very large GPU cluster.

After training, you now have a NN with millions of internal parameters that were generated during training. You can then download this NN onto a typically much smaller "inference processor" and run the NN to do your scene analysis or whatever you are trying to do. Running a scene analysis will typically take a fraction of a second per image on an inference processor with a trained NN. Tesla, of course, built their own AI inference chip and each car has two of these.

Now, when Elon says each person using Autopilot is helping to train the NN, this is only partially true. First, your particular car isn't learning anything. It can only run the downloaded NN to understand visual scenes. What it can do though is upload 10 second long video snapshots when requested by Tesla.

For example, when Tesla wanted to improve detection of "cut-ins" or cars merging into your lane on the freeway, they wrote a query that ran in each car in the Tesla fleet. The Tesla car computers would trigger whenever it saw a car moving into your lane and then upload these 10 second or so clips to the Tesla datacenter. After a few days, Tesla would have, say, 10,000 video clips of this happening. These clips are "auto labelled" since they each contain video of the car being cut off on the freeway. Tesla then trains their NN on these labelled video clips. The NN picks out and learns clues from the video by itself, like when a blinker is on, or a car starts to drift over, or its angle subtly changes. That's the fundamental power of a NN – with labelled data, it can automatically without (much) human programming, learn how to recognize patterns.

So after retraining the NN, Tesla will deploy the new NN in shadow mode into the Tesla fleet and ask the fleet to observe when cut-in predictions are incorrect. Either failing to predict that a car will cut-in, or predicting that a car will cut in but it doesn't are flagged by the cars running in shadow mode. These exceptions are then uploaded again to the datacenter and a new NN is trained on this exception data. A newer NN is then deployed, and run again in shadow mode. This cycle repeats for however many times it takes for the network to get really good at prediction, at which time it is finally deployed to the fleet as a working update.

When Tesla says its fleet is its secret weapon versus everyone else, it isn't boasting. Tesla effectively has 1M+ mobile Tesla AI chips available at its disposal to do this kind of training. Thinking about it in these terms, Tesla has by far the biggest AI supercomputer on the planet.

OK, with that as background, let's get to how the Tesla NN actually works.

Part 2: Vision Architecture

This figure shows the overall architecture of the vision system. This is only for perception, meaning understanding what you are seeing. Not shown is the driving and control system which will be discussed later.

This is very high level – each of these boxes will typically contain a few to a dozen neural nets each connected in various complex ways.

All 8 cameras first undergo a calibration neural net which warps each camera into a "standard" image that should be the same across the entire fleet. When you first purchase the car, Autopilot goes through a several day calibration exercise where it trains an in-car neural network to understand how each camera in the car is off baseline. Each camera could be slightly twisted, or pointed too high skyward, or whatever, and the car needs to understand what these deviations from baseline are so that the image that is presented to the Autopilot neural network looks the same to that neural network across all cars. This is the only time a neural network is actually trained in the car itself.

Once you get past this calibration (warping) stage, standard low level features are extracted from the images to reduce or compress the huge amount of image data into something more manageable without losing any important information.

The next step is to merge all eight cameras into one view while at the same time building a 3D vector space representation of the world.

Creating a 3D vector space representation of a 2D flat image is something our human visual cortex does so well automatically that you probably don't even realize you are doing it.

When you look at an image like this:

You just know that the red sign is further away than those three cars, and the American flag is at about the same distance away as the car with the headlights and that car is well back of the white van. The Autopilot NN has to figure this out as well and the resulting output is a three dimensional model of the world with key features located in that 3D space.

Tesla's 3D vector space model is a fairly dense raster with each point having a distance measurement away from the car. It is similar to the point cloud a LIDAR system would generate:

Tesla's 3D vector space additionally adds velocity to moving objects, locates curbs, driveable areas, is in color and has a higher resolution. It is also quite a bit more sophisticated than a LIDAR plot since it makes a 3D image that includes occluded objects – for instance, a pedestrian that is currently behind a car and can't be seen in the current video frame. The network tracks these occluded objects using a memory based video module described below.

Note that Tesla's low level NN vision system is very sophisticated. Just like the human cortex, these networks share information from the top level of the hierarchy to the lowest. Here's an example where you are trying to figure out what a 10x10 pixel image is (on the left).

The NN knows that the 10x10 blurry pixels are at the vanishing point of the image, and thus it can infer they are a car's headlights.

Once the camera fusion and 3-D vector space is created, Tesla adds in time and location based memory. The example they gave is when you drive towards an intersection, you might roll over lane markings indicating the leftmost lane is a turn lane.

By the time you reach the intersection, it's been a couple of seconds when you passed over the left hand turn lane marker, so there needs to be some kind of memory of these features as you pass them by. The vision engine will snapshot the extracted features it is seeing every short time internal or 1m of travel, creating a list of recently seen visual clues. Again, this is very similar to the way humans drive – we may not consciously remember that we rolled over a left hand turn marker, but our own neural network does and uses that information to guide our driving.

This "video module" where Tesla adds in a running memory of recent features is very powerful and I'm not aware of any other self driving system that does anything like this.

Finally, the output of this video network that is a combination of the current visual scene and recent visual clues projected onto a 3D vector space is then consumed by around 80 other neural networks that Tesla calls "tasks". Each task does a very specific thing like locate and understand traffic lights, or perceive traffic cones, or see stop signs, or identify cars and their velocities, etc. The output of these tasks is high level semantic information like: in 30m, there is a red traffic light, or this car 30m in front of me is likely parked.

Part 3: Planning and Control

Now that the NN understands locations of all objects, what they are, how fast and in what direction they are moving, what is road surface, where the curbs are, what the signal environment (traffic, road signs) is, and what recently happened, now the autopilot must do three things.

First, it must predict what all the other moving objects are going to do in the next short while. Second, it must plan out what it is going to based on an overall plan (like following a GPS route), and third, it has to tell the car what to do.

This can get quite complicated. Let's explain using a simple example where the car is in the rightmost lane of a three lane road and needs to make a left turn up ahead while there are cars in the middle lane. To do this properly, the car must merge in between the two cars in the center lane and then merge into the far left lane, and do this without going too fast or too slow or with too much acceleration or deacceleration.

Currently, Tesla uses a search algorithm coded in C++ to figure this out. It essentially simulates a couple of thousand different scenarios in which the car and the other cars on the road all interact. It does this at a fairly coarse grained level and the numbers they gave is that the simulator/search system could simulate/search 2,500 different scenarios in 1.5 ms. All these simulations use physics based models meaning they are idealized representations of cars on roads in a simple road representation of lanes and dividers, etc.

At the end of this exercise, the path planner has a rough plan for what the next 15 seconds of travel will be and at that point, it creates a very detailed plan that exactly maps out the specific turning radius, travel path and speed changes required to make the driving as smooth as possible. This too is done using a C++ search algorithm, but uses a different technique that takes into account lateral acceleration, lateral jerk, collision risk, traversal time and merges all this into a total cost function to minimize the "total cost" for the refined planned route. The example they showed to plan out the smooth path for 10 seconds of driving shows about 100 iterations through this search function.

This planning function, along with all the visual perception system before it, happens every 27 ms (36 times a second). So autopilot is sensing and recreating plans 36 times a second to ensure that nothing is going to catch it unprepared for whatever weird crap happens around the car. Autopilot isn't omniscient and can't tell that the idiot driver you are merging in front of is rage texting a twitter response, but it can notice, more quickly that you can, that said driver's car is on a collision course and autopilot will thus react accordingly.

Tesla is working on a new planning algorithm that mostly uses a planning NN instead of hand coded C++ as is currently done. The benefit of a NN algorithm will probably be speed, but it might provide some better plans as well.

Oddly enough, one of the toughest things to plan is navigating around a parking lot. Tesla showed how their NN planner in development would handle this task in much less time than their current planner.
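Parking lots are hard because a classical planner has to search a large space of possible moves. The sketch below is my own illustration, and the grid, the `LOT` layout and the `plan_route` function are hypothetical. It runs A* on a toy parking-lot grid twice. The first run uses no heuristic, which reduces A* to Dijkstra's algorithm. The second run uses a Manhattan-distance heuristic. Both runs find the same shortest route, but the guided search expands fewer cells. As I understand it, the point of Tesla's NN planner is to supply much better guidance than a hand-written heuristic, so the search explores far less of the space.

```python
import heapq

# '#' = parked car, '.' = drivable aisle (hypothetical layout)
LOT = [
    "..........",
    ".##.##.##.",
    ".##.##.##.",
    "..........",
    ".##.##.##.",
    ".##.##.##.",
    "..........",
]


def plan_route(grid, start, goal, use_heuristic=True):
    """A* on a 4-connected grid. Returns (path_length, cells_expanded)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates here; 0 turns A* into Dijkstra.
        if not use_heuristic:
            return 0
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Heap entries are (f, -g, cell): ties on f go to the deeper node.
    frontier = [(h(start), 0, start)]
    best_g = {start: 0}
    closed = set()
    expanded = 0
    while frontier:
        _, neg_g, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale heap entry for an already-expanded cell
        closed.add(cell)
        expanded += 1
        g = -neg_g
        if cell == goal:
            return g, expanded
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                if g + 1 < best_g.get(nxt, float("inf")):
                    best_g[nxt] = g + 1
                    heapq.heappush(frontier, (g + 1 + h(nxt), -(g + 1), nxt))
    return None, expanded


print(plan_route(LOT, (0, 0), (6, 9), use_heuristic=False))  # unguided
print(plan_route(LOT, (0, 0), (6, 9), use_heuristic=True))   # guided
```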

Part 4: Training

OK, I've talked about how the various Tesla NNs perceive the world and, eventually, plan how to drive through it, but how do you teach a NN?

As I described in Part 1, you need to give a NN millions of labeled examples for it to learn what you are trying to teach it.

Tesla started off doing this the same way the rest of the industry does, by having humans draw polygons around features in images. This does not scale. Tesla soon created tools to label directly in vector space, the 3D representation of the world stitched together from all eight cameras. Label a curb once in this view, and it is automatically labeled in the corresponding images from every camera that sees it, maybe three of them.
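A single vector-space label can fan out into several camera images because of ordinary camera geometry: a labeled 3D point can be projected into every camera that sees it. Here is a minimal pinhole-camera sketch. The camera names, yaw angles and intrinsics are all hypothetical. Tesla's eight cameras have different lenses, fields of view and mounting offsets. Here every camera is placed at the car's origin to keep the math short.

```python
import math

WIDTH, HEIGHT = 1280, 960       # image size in pixels (assumed)
FOCAL = 640.0                   # focal length in pixels: a 90 degree horizontal FOV
CX, CY = WIDTH / 2, HEIGHT / 2  # principal point at the image centre

# Hypothetical cameras: name -> yaw in degrees (0 = straight ahead, + = left)
CAMERAS = {"front": 0, "front_left": 45, "left": 90,
           "rear": 180, "right": -90, "front_right": -45}


def project(point, yaw_deg):
    """Project a car-frame point (x forward, y left, z up) into one camera.

    Returns pixel (u, v), or None if the point is behind the camera or
    outside the image.
    """
    x, y, z = point
    yaw = math.radians(yaw_deg)
    # Rotate the point into the camera frame (the camera looks along its +x).
    xc = math.cos(yaw) * x + math.sin(yaw) * y
    yc = -math.sin(yaw) * x + math.cos(yaw) * y
    if xc <= 0:
        return None
    u = CX - FOCAL * yc / xc  # image u grows to the right, i.e. toward -y
    v = CY - FOCAL * z / xc   # image v grows downward, i.e. toward -z
    if 0 <= u < WIDTH and 0 <= v < HEIGHT:
        return (u, v)
    return None


def auto_label(point):
    """Return {camera: pixel} for every camera that sees the labeled point."""
    hits = {name: project(point, yaw) for name, yaw in CAMERAS.items()}
    return {name: px for name, px in hits.items() if px is not None}


curb_point = (10.0, 5.0, -1.0)  # 10 m ahead, 5 m left, 1 m below the cameras
print(sorted(auto_label(curb_point)))  # ['front', 'front_left']
```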

They then realized that their fleet drives through the same intersection thousands of times. By tagging an intersection with GPS coordinates, a single human labelling effort in vector space could automatically label thousands of images of it. Once a stoplight is labeled as a stoplight in 3D vector space, thousands of later drives through the same intersection can label that stoplight automatically, with no further human input. This holds across different directions, times of day, weather and lighting.
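One simple way to picture the GPS trick is as a lookup. Store each human-made label with the coordinates of the intersection it belongs to. Then attach it to any later clip recorded within a few metres. The sketch below is my own simplification. The `GeoLabel` type, the 30 m radius and the coordinates are hypothetical, and Tesla's system reconstructs the intersection in 3D rather than matching on raw GPS alone.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0


@dataclass
class GeoLabel:
    name: str    # e.g. "stoplight"
    lat: float   # degrees
    lon: float   # degrees


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS fixes, in metres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def labels_for_clip(labels, clip_lat, clip_lon, radius_m=30.0):
    """Reuse every stored label that lies within radius_m of the clip."""
    return [lab for lab in labels
            if haversine_m(lab.lat, lab.lon, clip_lat, clip_lon) <= radius_m]


# One human labelling effort...
store = [GeoLabel("stoplight", 37.77490, -122.41940)]
# ...reused by a later drive about 11 m away, but not by one about 1 km away.
print([lab.name for lab in labels_for_clip(store, 37.77500, -122.41940)])
print(labels_for_clip(store, 37.78490, -122.41940))
```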

Tesla then added fully automatic labelling. A car's AI chip can only recognize so much. A 1,000 GPU cluster is far more powerful and isn't bound by millisecond time constraints, so it can be used to label novel images automatically.

Tesla used this auto labelling capability to remove radar with about three months of effort in early 2021. Part of the problem they faced when removing radar was degraded video. For example, a passing snowplow can dump tons of snow onto the car, occluding, well, everything.

Tesla realized they needed to auto label poor-visibility situations, so they asked the fleet for clips in which the car's NN temporarily had zero visibility. They auto labeled 10,000 poor-visibility videos in a week, something Tesla said would have taken several months with humans.

After retraining the visual NN with this batch of labelled poor visibility video, the system was able to remember and predict where everything was in the scene as you go through temporary poor visibility, similar to how humans handle such situations.
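Tesla's network learns this kind of object permanence from labelled video. A hand-written stand-in makes the idea easy to see. The sketch below is a simple constant-velocity tracker, not Tesla's method. It keeps predicting an object's position through frames where the camera sees nothing (`None`) and snaps back to real measurements when they return. Positions are 1D, and the frame time is assumed to be 1.

```python
from typing import List, Optional


def track_through_occlusion(observations: List[Optional[float]],
                            dt: float = 1.0) -> List[Optional[float]]:
    """Estimate an object's position in every frame, coasting through gaps."""
    estimates: List[Optional[float]] = []
    position: Optional[float] = None
    velocity = 0.0
    for obs in observations:
        if obs is None:
            if position is not None:
                # Occluded: predict with the last known velocity.
                position += velocity * dt
        else:
            if position is not None:
                velocity = (obs - position) / dt
            position = obs
        estimates.append(position)
    return estimates


# Frames 3 and 4 are blanked out, e.g. by snow from a passing snowplow.
print(track_through_occlusion([0.0, 1.0, 2.0, None, None, 5.0]))
# [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
```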

Using Simulations

Simulation, that is, creating a 3D world the way you would in a detailed video game, is something Tesla is starting to use, mostly for edge cases. They showed examples of people or dogs running on a freeway, a scene with hundreds of pedestrians, and a new type of truck that no one has seen before (the Cybertruck!).

Even the auto labeler has a hard time with hundreds of pedestrians, as you might see in Hong Kong or NYC. A simulation in which the system knows exactly where everyone is and how fast they are walking is a better source of training data.
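A simulator helps here because it moves every pedestrian itself. The exact position and speed of each one is therefore available as a free, perfect label for every frame. The sketch below is purely illustrative. The crowd size, area, speed range and label fields are assumptions, and a real simulator would also render the camera images that these labels go with.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Pedestrian:
    ped_id: int
    x: float   # metres ahead of the car
    y: float   # metres to the left of the car
    vx: float  # metres per second
    vy: float


def spawn_crowd(n, seed=0):
    """Place n pedestrians at random with walking-pace velocities."""
    rng = random.Random(seed)
    return [Pedestrian(i, rng.uniform(0, 50), rng.uniform(-10, 10),
                       rng.uniform(-1.5, 1.5), rng.uniform(-1.5, 1.5))
            for i in range(n)]


def step_and_label(crowd, dt):
    """Advance the simulation by dt seconds and emit exact ground-truth labels."""
    labels = []
    for p in crowd:
        p.x += p.vx * dt
        p.y += p.vy * dt
        labels.append({"id": p.ped_id, "x": p.x, "y": p.y,
                       "speed": math.hypot(p.vx, p.vy)})
    return labels


crowd = spawn_crowd(300)                      # a Hong Kong style crowd
frame_labels = step_and_label(crowd, 1 / 36)  # one camera frame at 36 fps
print(len(frame_labels))                      # 300: one exact label per pedestrian
```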

They work really hard to make accurate simulations. They have to mimic what the camera will see in real world conditions. Lighting and ray tracing must be very accurate. Noise in road surfaces must be introduced and they even have a special NN used just to add noise and texture to generated images.

Tesla now has thousands of unique vehicles, pedestrians, animals and props in their simulation engine. Each one moves and looks real. They also have 2,000 miles of very diverse and unique roads.

And when they want to create a scene, it is most often generated procedurally by computer algorithms rather than built by an artist. They can ask for nighttime, daytime, rain, snow, etc.
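Procedural generation means a scene is produced by code from a handful of parameters and a random seed rather than placed by hand. Below is a minimal sketch in which every name, option and range is hypothetical. The same seed always yields the same layout. Time of day or weather can be overridden without disturbing that layout, which makes it cheap to render one scene under many conditions.

```python
import random

TIMES_OF_DAY = ("day", "dusk", "night")
WEATHER = ("clear", "rain", "snow", "fog")
VEHICLE_KINDS = ("car", "truck", "bus")


def generate_scene(seed, time_of_day=None, weather=None, lanes=4):
    """Build a scene description procedurally from a seed and optional overrides."""
    rng = random.Random(seed)
    # Draw every random value first so overrides never change the layout.
    drawn_time = rng.choice(TIMES_OF_DAY)
    drawn_weather = rng.choice(WEATHER)
    vehicles = [
        {"kind": rng.choice(VEHICLE_KINDS),
         "lane": rng.randrange(lanes),
         "distance_m": round(rng.uniform(0.0, 200.0), 1)}
        for _ in range(rng.randint(5, 40))
    ]
    return {"time_of_day": time_of_day or drawn_time,
            "weather": weather or drawn_weather,
            "vehicles": vehicles}


snowy = generate_scene(42, time_of_day="night", weather="snow")
rainy = generate_scene(42, time_of_day="night", weather="rain")
print(snowy["vehicles"] == rainy["vehicles"])  # True: same layout, new weather
```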

Finally, because they can build whatever kind of visual world they want, they can now use adversarial machine learning techniques. One computer system tries to create scenes that will break the car's visual perception. Each such failure is used to train the vision system better, and the improved system goes back into this closed training loop.
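The adversarial half of that loop can be sketched as a search over scene parameters that tries to drive the perception model's confidence down. In the toy version below, `toy_confidence` is a hypothetical stand-in for the vision NN. Simple random hill climbing stands in for whatever adversarial technique Tesla actually uses. The scenes it finds would then be rendered, labeled and added to the training set, a step omitted here.

```python
import random


def toy_confidence(scene):
    """Hypothetical stand-in for the vision NN: confidence that it sees a pedestrian."""
    return max(0.0, 1.0 - 0.8 * scene["fog"] - scene["distance_m"] / 100.0)


def adversarial_search(confidence, steps=200, seed=0):
    """Hill-climb over scene parameters to find a scene the model struggles with."""
    rng = random.Random(seed)
    scene = {"fog": rng.uniform(0.0, 0.3), "distance_m": rng.uniform(5.0, 20.0)}
    worst = confidence(scene)
    for _ in range(steps):
        # Perturb the scene, keeping parameters inside their allowed ranges.
        candidate = {
            "fog": min(1.0, max(0.0, scene["fog"] + rng.gauss(0.0, 0.05))),
            "distance_m": min(80.0, max(5.0, scene["distance_m"] + rng.gauss(0.0, 2.0))),
        }
        c = confidence(candidate)
        if c < worst:  # keep any change that makes the model less sure
            scene, worst = candidate, c
    return scene, worst


hard_scene, conf = adversarial_search(toy_confidence)
print(hard_scene, conf)
```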

To say that Tesla's learning pipeline is next level is an understatement. It is now extremely sophisticated and is arguably the most powerful in the world.

Dojo

Dojo is Tesla's next-generation training data center. Right now, they "make do" with thousands of Nvidia A100 GPUs, the current state of the art, clustered together. Tesla thought they could do better, so they custom designed their own AI training chip (not to be confused with the AI inference chip inside every Tesla car). The training chip is far more powerful and has been designed to work as one piece of a huge supercomputer cluster.

I won't say much about Dojo, but suffice it to say that it is world class in many ways, from packaging, cooling and power integration to huge communications bandwidth (easily 10x current capabilities). Dojo is still being built; I'd guess it is 4-6 months away from being used in production. When it does come online, Tesla's productivity will increase: they'll basically be able to do more, faster.

Part 5: Future Work & Conclusions

From watching this and other Tesla AI videos from the last two years, you can tell that Tesla has been racing to get to a working FSD vision system. When Tesla releases their v10 FSD Beta (only weeks away

You might have noticed that Tesla's vision system merges all eight cameras quite late in processing. You could do this earlier in the pipeline and potentially save a lot of in-car processing time.

The vision system produces a fairly dense 3D raster of the world. The rest of the processing system (like those 80+ tasks) must then interpret this raster. Tesla is exploring ways of producing a simpler, object-based representation of the world, which would be much easier for the recognition tasks to process. By the way, this is one of those problems that sounds easy but is actually mind-bendingly challenging.
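To see the difference between the two representations, here is the simplest possible version of turning a dense grid into a list of objects: connected-component labelling with a breadth-first flood fill. It is a classical technique chosen for illustration, not what Tesla described. It sidesteps exactly the hard parts that make the real problem so difficult, such as touching objects, partial occlusion and consistent identities over time.

```python
from collections import deque


def raster_to_objects(grid):
    """Group occupied cells (1s) into 4-connected objects with bounding boxes."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if not grid[r][c] or seen[r][c]:
                continue
            # Flood-fill one object starting from this unvisited occupied cell.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and grid[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            rs = [p[0] for p in cells]
            cs = [p[1] for p in cells]
            objects.append({"cells": len(cells),
                            "bbox": (min(rs), min(cs), max(rs), max(cs))})
    return objects


occupancy = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 0],
]
print(raster_to_objects(occupancy))
# [{'cells': 4, 'bbox': (0, 1, 1, 2)}, {'cells': 2, 'bbox': (2, 4, 3, 4)}]
```

The raster here has 24 numbers, while the object list has two short records. That compactness is what makes an object-based representation easier for downstream tasks to consume.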

As mentioned, Tesla is working on a neural network planner which would reduce path planning compute time significantly.

Their core in-car NNs use high-precision floating point, but there is an opportunity to use lower-precision floating point and perhaps even go all the way down to 8-bit integer computation in certain cases.
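For readers unfamiliar with the idea, here is generic symmetric int8 quantization. Each float is divided by a scale chosen so the largest magnitude maps to 127, then rounded. This is a textbook scheme used for illustration, not necessarily how Tesla would quantize its networks. Real deployments usually pick scales per layer or per channel from calibration data.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floats."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
```

Each weight now fits in one byte instead of four, and the reconstruction error of each value is at most half the scale. That trade of a little accuracy for much less memory and cheaper integer arithmetic is what makes lower precision attractive on an inference chip.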

And these are only some broad areas that are being worked on. No doubt each individual NN and piece of code could use some optimization care and love.

Conclusions

All these words, and I've only given you a top-level summary of what was presented at Tesla AI Day. Tesla really opened their kimono and gave way, way more detail than I've written here, at a fairly deep technical level. Indeed, you really had to be an AI researcher or programmer to understand everything that was said.

Companies simply do not give deep technical roadmaps like this to the public. Obviously Tesla was trying to recruit AI researchers, but even so, exposing so much of their architecture was a real OG move. The subtext is that Tesla doesn't care if you try to copy them, because by the time you re-create everything, they will have advanced that much further ahead.

The bottom line is that, in my opinion, Tesla can indeed deliver Full Self Driving with this architecture. "When" is always a tough thing to predict, but it appears that all the pieces are in place, and certainly we've seen some pretty impressive FSD beta videos.