Thanks for the reply.

In the sense that "quantum" means the minimum amount? Absolutely!

"quantum leap" has been defined for you on the very first page of this thread.

Some of this "So far though it's less of a total re-write than I anticipated" and "this is not the rewrite I expected" BS is getting out of hand.

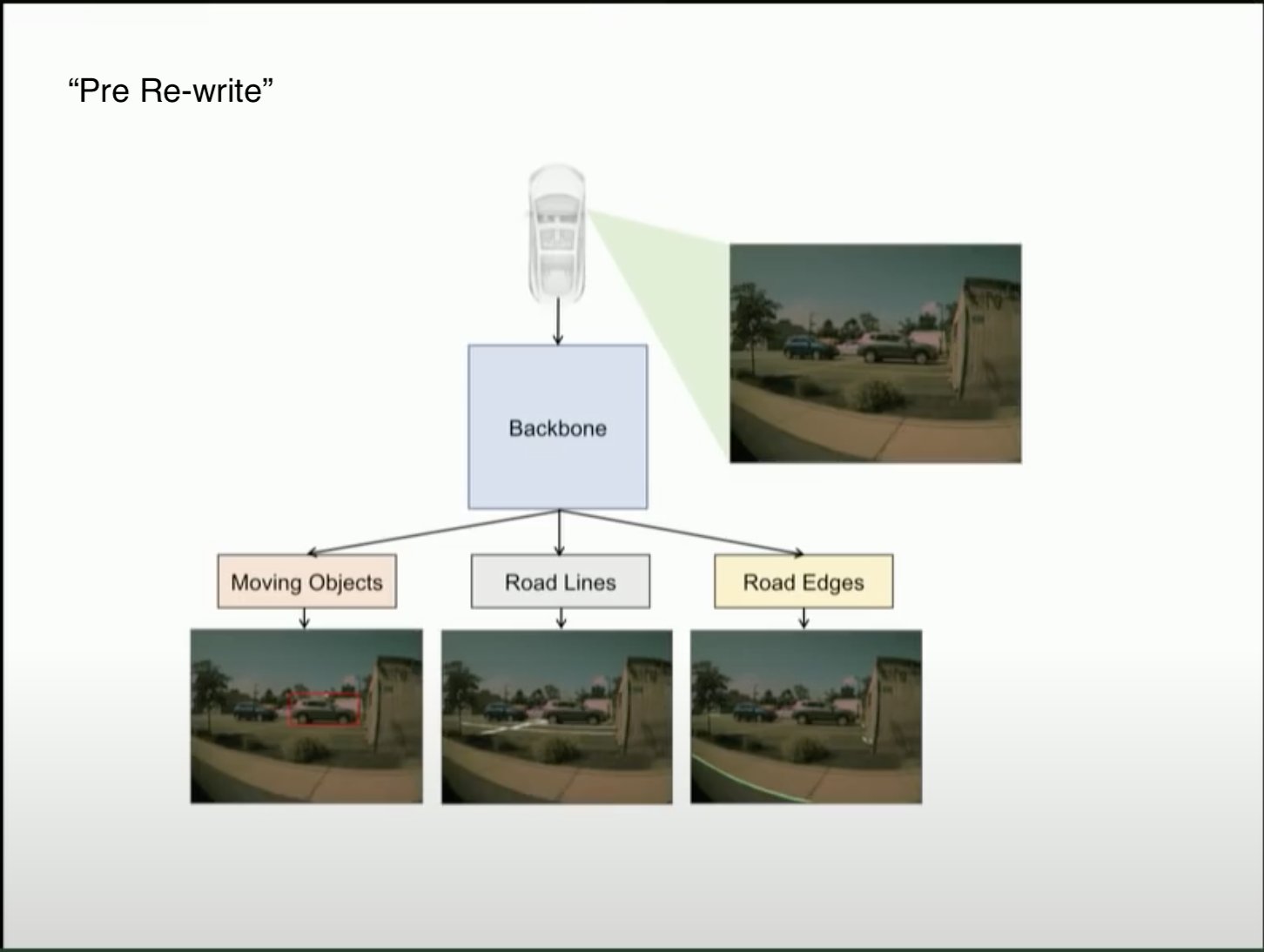

In June 2020 - Karpathy gave a presentation on how they are solving FSD and the challenge of scaling at Tesla.

the old hydranet heads fed into intermediate NNs to then tie them together

Notice that this is HydraNet, the one that existed before PlaidNet (which, coincidentally, no longer exists in the firmware). But HydraNet lives on!

Karpathy is presenting HydraNet as the current solution being used at Tesla for FSD, not a past solution.

When Karpathy shows this slide, he says the following about the 1000 distinct predictions coming out of the 48 NNs:

"none of these predictions can ever regress and all of them must improve over time, and this takes 70,000 GPU hours to train the neural nets"

Now, you posted this next image on another thread... it is useful

The only explanation I can come up with for "PlaidNet" is that it was a new set of NNs that failed the test laid out by Karpathy: "none of these predictions can ever regress and all of them must improve over time, and this takes 70,000 GPU hours to train the neural nets"

That is my guess as to why we do not see PlaidNet any longer.
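To make the test Karpathy describes concrete, here is a minimal sketch of a "nothing may regress" gate. It is only an illustration of the idea, not Tesla's actual tooling: the function name, the score dictionaries, and the prediction names are all made up, and I am assuming each prediction has a single score where higher is better.

```python
def passes_no_regression_gate(baseline, candidate, tolerance=0.0):
    """Return (ok, regressed) for per-prediction scores where higher is better.

    A candidate passes only if every baseline prediction is still present
    and none of them scores lower than the baseline (minus a tolerance).
    """
    missing = set(baseline) - set(candidate)
    worse = {
        name
        for name in baseline
        if name in candidate and candidate[name] < baseline[name] - tolerance
    }
    regressed = sorted(missing | worse)
    return (not regressed, regressed)


# Hypothetical scores: the candidate improves two predictions but regresses one.
baseline = {"stop_sign": 0.91, "lane_line": 0.88, "cut_in": 0.75}
candidate = {"stop_sign": 0.93, "lane_line": 0.86, "cut_in": 0.80}

ok, regressed = passes_no_regression_gate(baseline, candidate)
print(ok, regressed)
```

Under a rule like this, one regressed prediction out of 1000 is enough to reject a whole new set of nets, which is consistent with my guess about PlaidNet.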

But back to the image you posted.

Notice how they have "Shadow Mode" and then the arrow loops back around: data is recorded and the training process is restarted.

I think PlaidNet was put out to the fleet in Shadow Mode to see how it would perform. Maybe PlaidNet's performance on the actual fleet is what killed it off; Karpathy knows for sure.
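For anyone unsure what that loop implies, here is a rough sketch of the Shadow Mode idea as I understand it from the diagram: the shadow network runs silently next to the active one, only the active one drives anything, and disagreements are recorded to feed the next round of training. Everything here (function names, the toy "models", the frames) is hypothetical and only shows the data flow.

```python
def shadow_mode_step(frame, active_model, shadow_model, disagreement_log):
    """Act on the active model's output; silently compare the shadow model."""
    active_out = active_model(frame)
    shadow_out = shadow_model(frame)
    if shadow_out != active_out:
        # Recorded data like this is what would loop back into training.
        disagreement_log.append((frame, active_out, shadow_out))
    return active_out  # only the active model's output is ever used


# Toy stand-ins: each "model" maps a number to a yes/no prediction.
active = lambda frame: frame > 5
shadow = lambda frame: frame > 3

log = []
outputs = [shadow_mode_step(f, active, shadow, log) for f in [1, 4, 6]]
print(outputs)
print(log)
```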

In June Karpathy was discussing this rewrite at Tesla as the upcoming big milestone for deliverables.

Since he only presents HydraNet, and then later how those HydraNet outputs feed the BEV net, I am going to go with the most straightforward and direct answer of them all: these are all part of the FSD solution. When they started on them, they thought they could go the normal route of training these. This is where the "rewrite" came in: how to properly label and train the NNs, especially the BEV net.

HydraNet and BEV Net are the backbone of the 4D perception infrastructure, and the fundamental rewrite that Musk brought up in January on the Third Row podcast was about how certain pieces of the stack would be trained. (see video transcript below)

The Temporal Module in the diagram above is the 4th dimension: time. (This is so your FSD-enabled Tesla does not have amnesia and can track objects across time.)

In case people are not sure, BEV stands for Bird's Eye View. BEV net is the NN that takes the processed output of each camera feed (processed through HydraNet) and stitches it all together into 3D.
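A toy sketch of that data flow, for illustration only: one shared backbone per camera frame, several heads reading the same features, then a fusion step across cameras. This is not the real architecture. The "backbone", the two heads, the thresholds, and the fusion (a plain merge, with no geometry at all) are placeholders I made up to show the shape of the pipeline.

```python
def backbone(image):
    # Stand-in for the shared feature extractor: computed once per camera frame.
    return [sum(image) / len(image), max(image)]


# Stand-ins for the task heads; each one reads the same shared features.
HEADS = {
    "objects": lambda feats: feats[1] > 0.5,
    "lanes": lambda feats: feats[0] > 0.2,
}


def hydranet(image):
    feats = backbone(image)  # shared work, reused by every head
    return {name: head(feats) for name, head in HEADS.items()}


def bev_fuse(per_camera_outputs):
    # Stand-in for the BEV net: combine per-camera results into one scene summary.
    return {
        task: [cam for cam, out in per_camera_outputs.items() if out[task]]
        for task in HEADS
    }


# Hypothetical "images": three cameras, three pixel values each.
frames = {"front": [0.1, 0.9, 0.3], "left": [0.0, 0.1, 0.2], "rear": [0.4, 0.4, 0.4]}
per_camera = {cam: hydranet(img) for cam, img in frames.items()}
scene = bev_fuse(per_camera)
print(scene)
```

The point of the shared backbone is that the expensive part runs once per frame no matter how many heads hang off it.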

This is a transcript (copied from the Third Row Tesla podcast with Musk, starting at 2:20:04).

The word "rewrite" comes up only ONCE in the entire transcript.

Code:

140:04 significant foundational rewrite in the

140:07 telepath system that's almost complete

140:10 really yeah and what it what part of the

140:13 system like perception like planning or

140:16 just like it's it's instead of having

140:21 planning perception image recognition

140:24 will be separate they're they're being

140:27 combined so yeah I don't even understand

140:35 what if actually like the you're the

140:40 sort of neural net is absorbing more

140:43 more of the problem right beyond simply

140:46 the is this is if you see if an image is

140:50 this a car or not oh no it's it's kind

140:54 of what where does it where you do from

140:56 that

140:58 3d labeling is the next big thing where

141:02 the car can go through a scene with

141:05 eight cameras and and and kind of paint

141:08 a a would paint a path and then you can

141:12 label the path in 3d this is probably

141:16 two or three order of magnitude

141:17 improvement in labeling efficiency and

141:19 labeling accuracy you know you have to

141:23 do two throwers be proven in labeling

141:24 efficiency and significant improvement

141:27 in labeling accuracy as opposed to

141:30 having to label individual frames from

141:31 eight cameras at 36 frames a second you

141:36 just drive through the scene rebuild

141:40 that scene as a 3d thing with it's like

141:46 there might be a thousand frames that

141:47 were used to create that scene and then

141:49 you can label it all at once is that

141:53 related to the dojo thing you mentioned

141:54 a thought on Amida no doges for learning

141:57 for training the neural net that's like

142:00 when you're trying to build the neural

142:01 net that you ship into the car dojo

142:03 speeds that up by Hardware accelerating

Disclaimer: YouTube auto-generated transcripts do not delineate who is speaking, so the transcript has people talking over each other in some spots. It is just a time-stamped helper so that you do not have to sift through 3 hours of video to find relevant info.
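A quick back-of-the-envelope check on the labeling numbers in that transcript, using only the figures Musk mentions (eight cameras, 36 frames a second, roughly a thousand frames per scene). The assumption that is mine: per-frame labeling costs one labeling pass per frame, while 3D labeling costs one pass per rebuilt scene.

```python
import math

CAMERAS = 8              # from the transcript
FPS = 36                 # from the transcript
FRAMES_PER_SCENE = 1000  # "there might be a thousand frames", from the transcript

frames_per_second = CAMERAS * FPS                        # frames produced per second of driving
seconds_of_driving = FRAMES_PER_SCENE / frames_per_second

passes_per_frame_labeling = FRAMES_PER_SCENE             # assumption: one pass per frame
passes_3d_labeling = 1                                   # assumption: one pass per scene
orders_of_magnitude = math.log10(passes_per_frame_labeling / passes_3d_labeling)

print(frames_per_second, round(seconds_of_driving, 2), orders_of_magnitude)
```

So a thousand-frame scene is only about three and a half seconds of driving, and labeling it once instead of frame by frame lands at the top of the "two or three order of magnitude" range he quotes.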

Again, Karpathy's CVPR presentation video is from 6 months after Musk "broke" the news of a rewrite.

All the pieces seem to jell well with both what Karpathy said and what Musk said.

But on here, "it is not what I expected"! Maybe it's time to check your expectations with actual facts of what has been presented so far from multiple sources.

PlaidNet is dead - it failed the test, that is why it was dumped.

Long live PlaidNet!

Can we please stick to actual sources that work on the stuff and maybe attempt to reconcile 3rd party sources against them?

Oh, one last little gem from the same video (re another "expert" opinion):

Note how Karpathy specifically delineates "No Lidar" and "No HD maps" ....

So, the argument that by "No HD maps" Karpathy only means "no lidar-generated maps" is silly.

Lastly, this video is immensely relevant when discussing FSD and Tesla.

Please take 28 minutes and watch it: