jimmy_d

Deep Learning Dork

Car manufacturers usually buy competing cars, take them apart, compare every detail, and see what they can learn from them. This is very standard. In this case they will learn the sensor suite Tesla is using, the ethernet network layout, the compute hardware, the neural network input, the neural network model and the weights. So they could probably spend a year rebuilding the same thing in their own car using the same weights, but that will be tricky as the weights are very tuned to the specific system. They could also try to duplicate the data Tesla has and train their own weights using the same model, but that will take a lot of time. It is very hard to be a copycat: you will always be behind, and the engineers will not feel very creative and look for options. Customers are also willing to pay less for a copy. And there might be some legal action Tesla can take to defend itself against this.

Likely what they will do is learn what Tesla has done, then bring it into their own design discussions. They don't have access to the same data gathering capabilities that Tesla has, so they will likely have to find a different approach and maybe a network architecture tailored to their data. And the resistance to learning from what Tesla is doing can be heavy among the old companies. I say this from experience, having given a presentation to a car manufacturer about what I thought Tesla's strategy was. Maybe the Chinese and the Silicon Valley companies are more open to learning from Tesla's solutions.

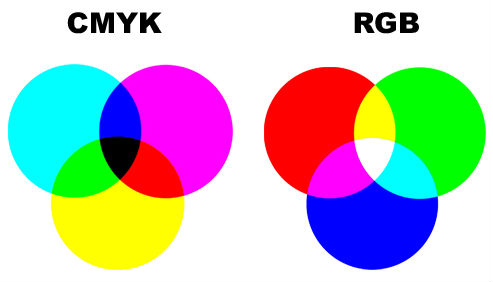

Fwiw, the network is not camera agnostic. It is maybe somewhat calibration agnostic, but is likely very much RCCC dependent and probably dependent on many other things also.

Interesting - it sounds like you're saying part of the calibration process is possibly color calibration. Is that right? I'm not familiar with how much color variation affects NN performance or how much color variation there is between commercial cameras. Because NNs need to be relatively immune to variations in lighting (cloudy day, sunset, street lamps), I would have thought that absolute color calibration wasn't very important. But of course if commercial camera variation is really large, then maybe that immunity gets swamped.

Do you have any info in that area?