Welcome to Tesla Motors Club


Tesla Autopilot wrongful death suit in Japan

- Thread starter alstoralset

Knightshade

Well-Known Member

That's exactly what a robot pretending not to be one would say!

Also from the link

The driver of the Tesla had dozed off shortly before the crash

So...no.

"The driver of the Tesla had dozed off shortly before the crash"

So...no.

AP's driver attention system allows one to "fall asleep with their hands on the wheel". One could make a case...

Knightshade

Well-Known Member

"Advice for Robots" - Cameras should be used for driver attention. Not steering wheel torque.

Cameras probably are better- (though still not perfect- Caddy has had a number of issues with the current system they're using)

But no Tesla ever built has the hardware to do this and they're never gonna retrofit a million cars (or even the 500k+ on AP2+ HW).... yeah the 3 (and Y I imagine) does have A camera inside- but it's the wrong type and location to do this task.

Really it's the result of Tesla expecting to get beyond L2 much more quickly than they actually have been able to (which is- not only years behind schedule, but so far, not at all).

AP's driver attention system allows one to "fall asleep with their hands on the wheel". One could make a case...

Not really.

Having no system at all ALSO allows that.

But no Tesla ever built has the hardware to do this and they're never gonna retrofit a million cars

Design flaw?

Having no system at all ALSO allows that.

Maybe AP encourages it?

Knightshade

Well-Known Member

Design flaw?

Given the accident rate is vastly lower than the average vehicle, and the average vehicle doesn't even have a driver attention check?

Probably be tough to convince anybody of that.

Maybe AP encourages it?

AP encourages falling asleep? As compared to most cars that don't beep at you at all if they don't detect hands on wheel every few seconds?

S4WRXTTCS

Well-Known Member

I wish the article would include information on what happened to the driver of the Model X.

Back when it happened the Japanese media seemed to treat it like any other accident where the driver fell asleep. They simply reported that, and the fact that the driver was arrested.

I'm of the position that Tesla isn't even partially responsible for this accident, but that Tesla should definitely switch from using the torque sensor to using more advanced driver monitor systems. The more advanced driver monitoring systems can detect when a person is falling asleep. Even Subaru is using it now on some models.

mikes_fsd

Banned

But no Tesla ever built has the hardware to do this and they're never gonna retrofit a million cars (or even the 500k+ on AP2+ HW).... yeah the 3 (and Y I imagine) does have A camera inside- but it's the wrong type and location to do this task.

Really it's the result of Tesla expecting to get beyond L2 much more quickly than they actually have been able to (which is- not only years behind schedule, but so far, not at all).

I want to "Like" and "Dislike" the same post, how can I accomplish that on TMC? /s

I think Tesla has presented a unified front, with technical presentations from Karpathy and team and the "salesy" side from Elon.

The data they are getting from the fleet is the holy grail and Tesla has it!

We are collecting data from over 1 million intersections every month at this point. This number will grow exponentially as more people get the update and as more people start driving again. Soon, we will be collecting data from over 1 billion intersections per month. All of those confirmations are training on neural net, essentially, the driver when driving and taking action is effectively labeling -- the labeling reality as they drive, and making the neural net better and better. I think this is an advantage that no one else has, and we're quite literally orders of magnitude more than everyone else combined. I think, this is difficult to fully appreciate.

Knightshade

Well-Known Member

I want to "Like" and "Dislike" the same post, how can I accomplish that on TMC? /s

I think Tesla has presented a unified front, with technical presentations from Karpathy and team and the "salesy" side from Elon.

The data they are getting from the fleet is the holy grail and Tesla has it!

I agree they have a lot more real world data than anyone else.

That doesn't mean they have the solution though.

It's entirely possible that L4/L5 is simply not possible with 1 front radar, 12 surround sonars, and 8 720p cameras of the type and location Tesla is using, even given infinite data sets.

Or to offer a really terrible analogy-

The American Cancer Society has far more data on cancer than I do. They've been no more successful in eradicating cancer than I have though.

mikes_fsd

Banned

I agree they have a lot more real world data than anyone else.

...

It's entirely possible that L4/L5 is simply not possible with 1 front radar, 12 surround sonars, and 8 720p cameras of the type and location Tesla is using, even given infinite data sets.

It's not just that they have "raw data"; they know what to do with it.

They have clearly shown that they can perform campaigns/triggers for sub-NN validation in the wild.

But unlike cancer - they get real humans to validate the "correct" path via confirmation to proceed on current deployment.

And they get to specifically test against the scenarios that fail in real life (crashes, aborts, overrides).

Knightshade

Well-Known Member

It's not just that they have "raw data" they know what to do with it.

They have clearly shown that they can perform campaigns/triggers for sub-NN validation in the wild.

But unlike cancer - they get real humans to validate the "correct" path via confirmation to proceed on current deployment.

And they get to specifically test against the scenarios that fail in real life (crashes, aborts, overrides).

Sure.

And they're still years behind original stated targets, with little evidence they'll ever reach them (and some that they won't- the multiple-times dialing back the listed promises of FSD for example).

As I say- if the sensor suite turns out incapable of handling the job, no amount of data can fix that.

(and to be fair- cancer research gets real humans to validate various experimental treatments all the time too- to varying degrees of results)

mikes_fsd

Banned

As I say- if the sensor suite turns out incapable of handling the job, no amount of data can fix that.

You keep bringing that up as if that is somehow going to change the fact that Tesla has almost a million cars with a similar sensor suite and the next million has no indication of changes.

- They would have realized that in the past 2 years.

- We would have seen changes go into Model Y to the sensor suite.

- just like we see a heater on the radar in the Model Y.

Knightshade

Well-Known Member

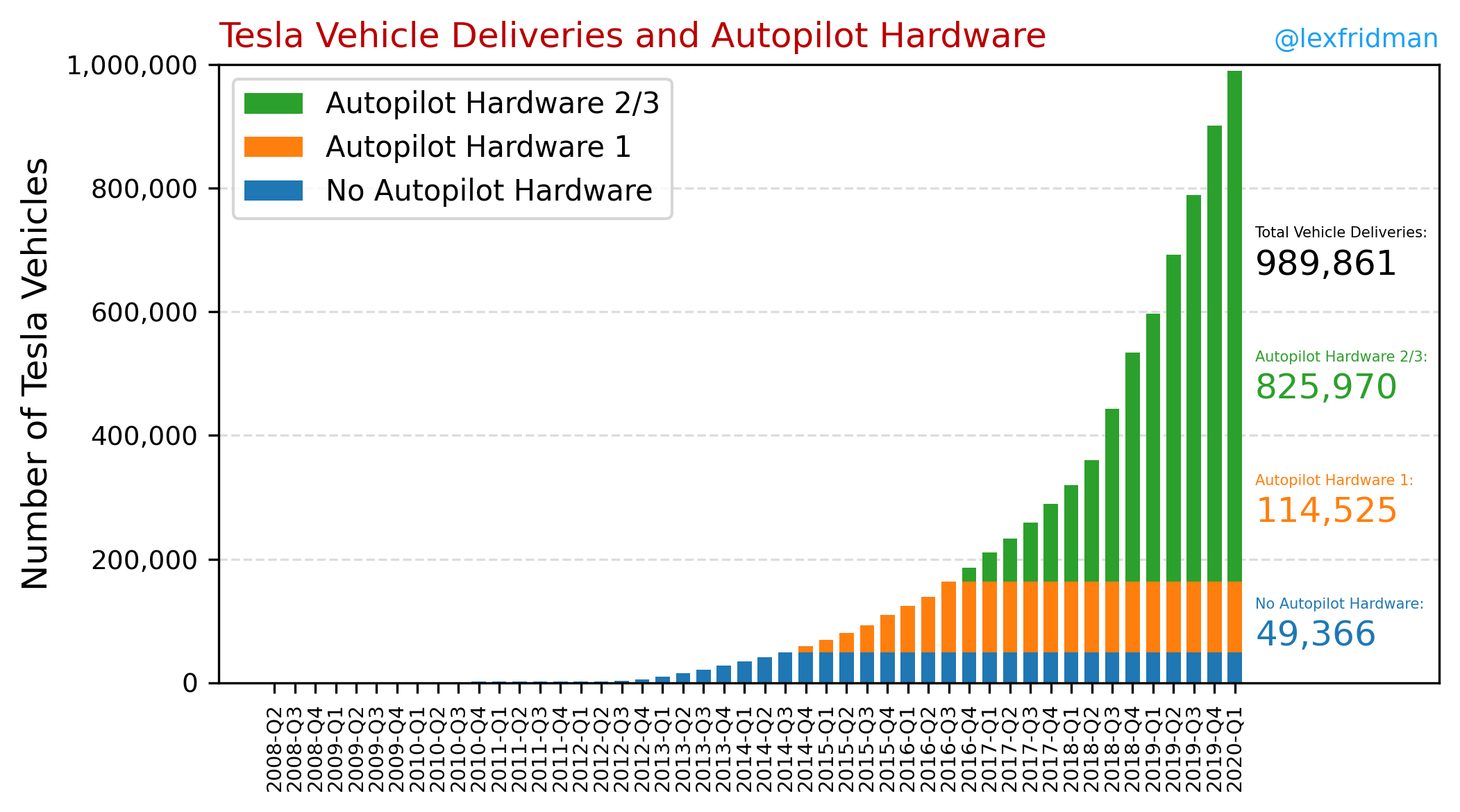

You keep bringing that up as if that is somehow going to change the fact that Tesla has almost a million cars with a similar sensor suite and the next million has no indication of changes.

Uh... that's not true at all.

They only broke 1 million total cars THIS quarter.

A couple hundred thousand are AP1 or no AP at all.

Another big chunk are AP2.0 (different sensor suite)

Some more are AP2.5 (different computer from what's now "needed")

Every time it changed they assured everyone NOW the car has ALL the HW it needs for FSD!

And they've been wrong multiple times so far.

They would have realized that in the past 2 years.

- We would have seen changes go into Model Y to the sensor suite.

- just like we see a heater on the radar in the Model Y.

Because that seems a great example of finding out that most of those (not really a million) cars' setups weren't good enough

I mean- they made a ton of HW2 cars back in the day... and turned out they eventually realized that was insufficient.

HW2.5 came along- different sensors (camera filters are different, front radar is different, computer and wiring different, etc)

Then THAT computer was not good enough either- HW3 computer (and extra work to get it to operate correctly with HW2 sensors- still unknown if there'll be functional differences there)

Then it turns out the fundamental design of AP just was not going to work... so they're currently rewriting it from the ground up

(done soon allegedly)

So they've repeatedly found the HW wasn't good enough- but it took a while between each thing.

Now they've found the SW core isn't good enough and they're redoing that.

Unknown if they then find more sensor shortcomings after they get data into the new SW.

And remember these are the folks who originally promised a cross-country FSD drive by "next year" since 2016.

It still can't drive across town that way though in 2020.

But IFF the sensor suite is insufficient, then you are correct, no amount of data from the sensors would be enough.

Right- so insisting they'll get FSD as originally promised working on this HW because "DATA" is assuming facts not in evidence.

If for example it turned out you really DO need LIDAR for L5, some other company with much less DATA, but all of it using LIDAR, will get there first.

(FWIW I don't actually think you DO need lidar- but I DO think you need more than the current AP2.x sensor suite- at least a few more cameras, ideally better ones, and I wouldn't object to rear and maybe corner radars though with enough of the right cameras could probably live without em)

mikes_fsd

Banned

Uh... that's not true at all.

They only broke 1 million total cars THIS quarter.

A couple hundred thousand are AP1 or no AP at all.

Another big chunk are AP2.0 (different sensor suite)

Some more are AP2.5 (different computer from what's now "needed")

Total no-AP or AP1 are ~164k

AP2 and AP3 sensors are "similar" hence why I used the word "similar" not "same".

Between AP2 and AP3 the main difference is the computer. You will see slight changes in sensor suite as we've seen to date going forward, that is to be expected and encouraged.

As for LiDAR....

mikes_fsd

Banned

Right- so insisting they'll get FSD as originally promised working on this HW because "DATA" is assuming facts not in evidence.

My point in even including that sentence was that they -- Tesla -- would have changed the sensor suite dramatically within the past 2 years.

ESPECIALLY with the Model Y.

More directly, the sensor suite is sufficient! Processing power is still to be determined.

mikes_fsd

Banned

In June it will be three (3) years since Karpathy joined Tesla. (For a year before that he worked with Musk at OpenAI - so there is prior work experience and knowledge of how Elon operates)

Let's say he needed a few months to get his vision/approach set up and communicated internally.

For 2.5 years he could have talked directly to Elon to request changes to the sensor suite, but all we got is incremental updates to sensors and massive leaps to processing.


Knightshade

Well-Known Member

In June it will be three (3) years since Karpathy joined Tesla.

Let's say he needed a few months to get his vision/approach set up and communicated internally.

For 2.5 years he could have talked directly to Elon to request changes to the sensor suite, but all we got is incremental updates to sensors and massive leaps to processing.

Apparently he was too busy spending the first 2 years working on AP code that wouldn't end up working out and needing to spend much of the third year doing a fundamental re-write of the code.

Maybe that re-write was because he figured out the sensor suite wasn't sufficient with the original code design and he HOPES this new one WILL be sufficient, because Elon told him no on adding a bunch of hardware?

(We know Elon rejected things like cameras for driver attention when other engineers suggested it for example)

mikes_fsd

Banned

Apparently he was too busy spending the first 2 years working on AP code that wouldn't end up working out and needing to spend much of the third year doing a fundamental re-write of the code.

The point was initially to port the AP1 capabilities (which is just hard coded logic) onto AP2 hardware stack -- that is exactly what they initially did.

We know Elon rejected things like cameras for driver attention when other engineers suggested it for example

Elon did not allow them to give themselves crutches to keep leaning on... since the vision was always full self driving.

But, since you know all the internal workings of Tesla and especially Autopilot team, I will let you hang on to your "facts" without my participation. Enjoy!
