Tesla has a newly published patent called "Systems and Methods for Training Machine Models with Augmented Data":

Excerpts from patent:

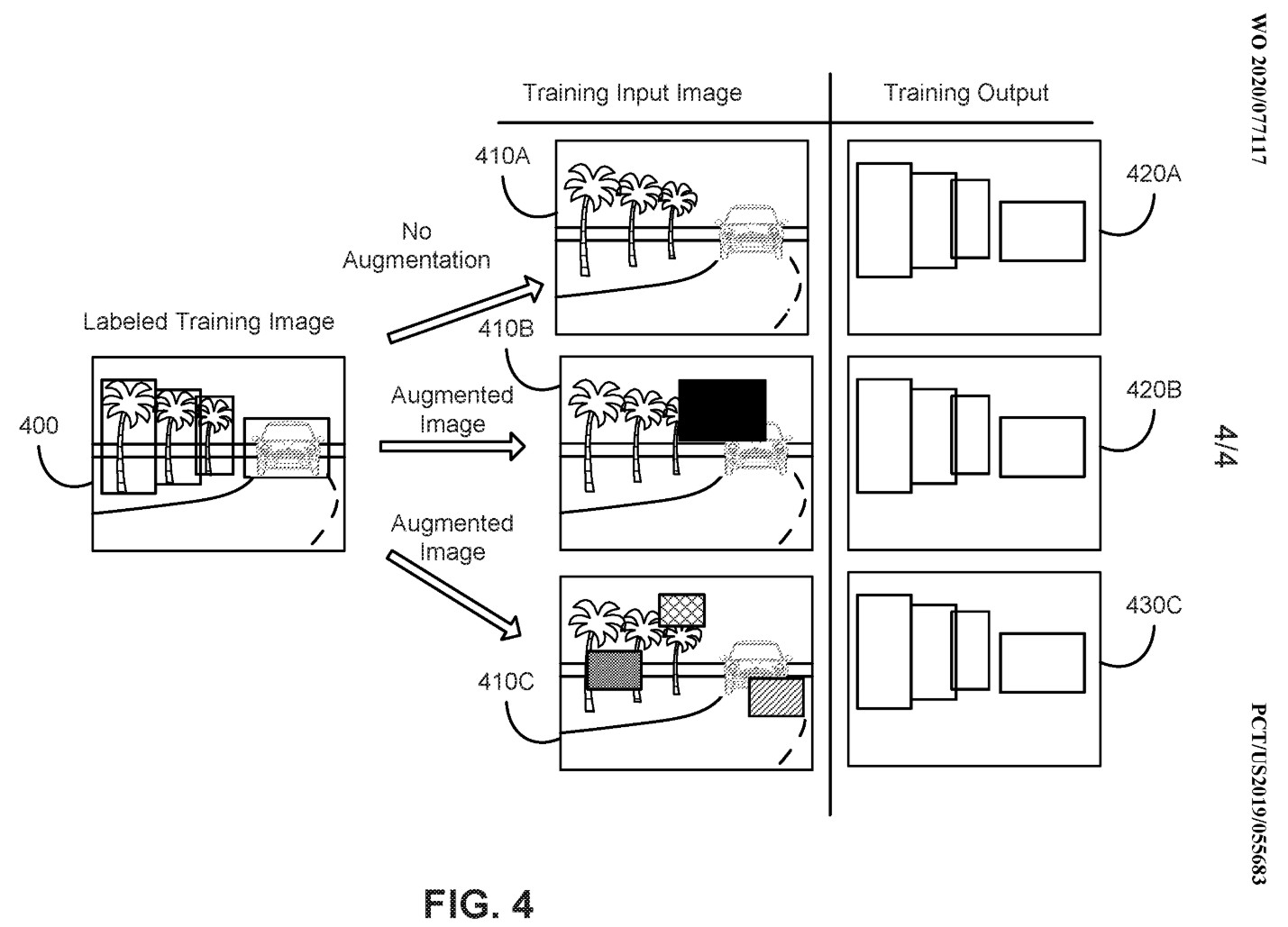

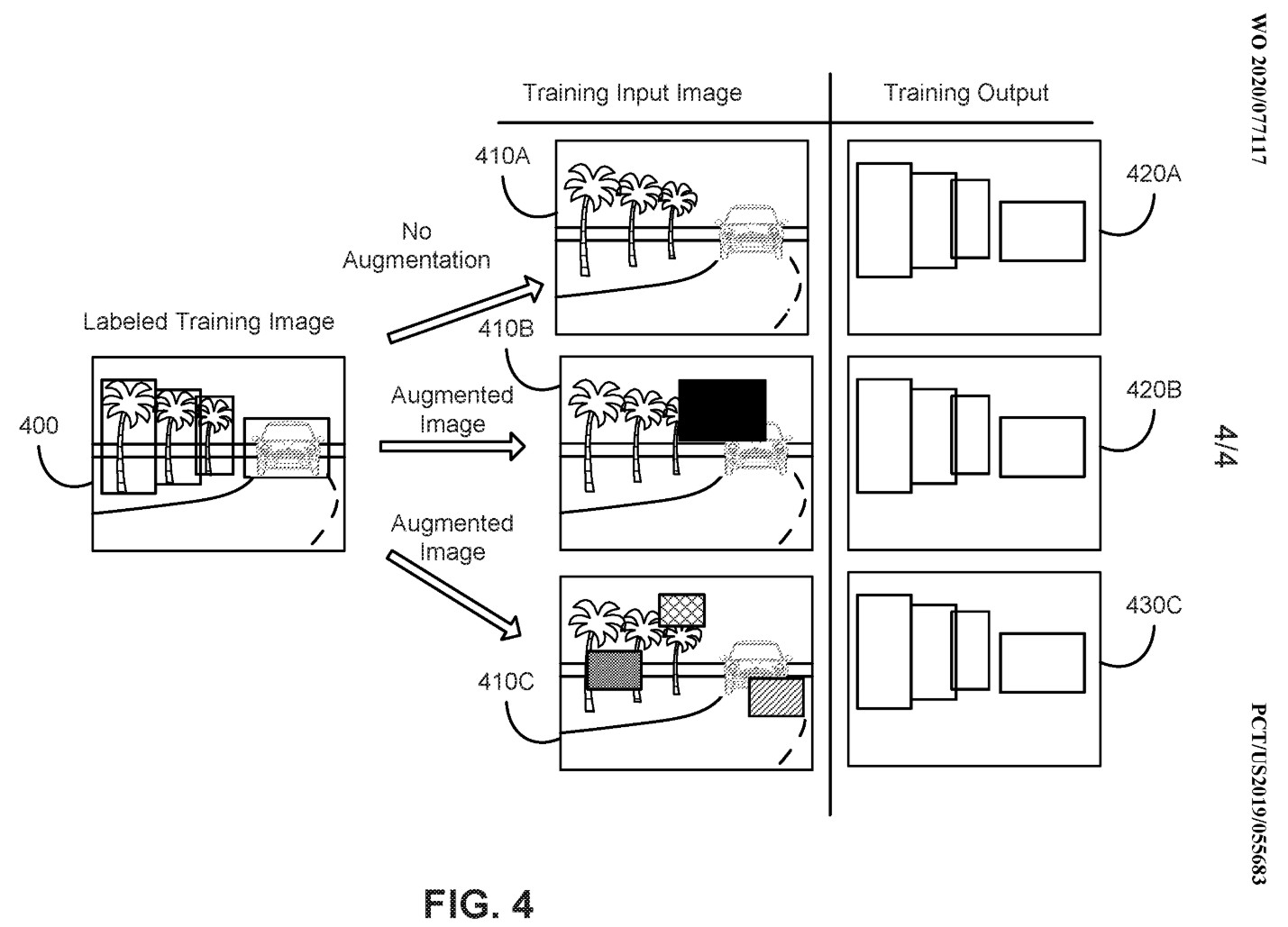

"Augmentation may provide generalization and greater robustness to the model prediction, particularly when images are clouded, occluded, or otherwise do not provide clear views of the detectable objects. These approaches may be particularly useful for object detection and in autonomous vehicles. This approach may also be beneficial for other situations in which the same camera configurations may be deployed to many devices. Since these devices may have a consistent set of sensors in a consistent orientation, the training data may be collected with a given configuration, a model may be trained with augmented data from the collected training data, and the trained model may be deployed to devices having the same configuration."

“As a further example, the images may be augmented with a “cutout” function that removes a portion of the original image. The removed portion of the image may then be replaced with other image content, such as a specified color, blur, noise, or from another image. The number, size, region, and replacement content for cutouts may be varied and may be based on the label of the image (e.g., the region of interest in the image, or a bounding box for an object).”
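To make the "cutout" idea concrete, here is a minimal NumPy sketch of what such an augmentation could look like. It is only an illustration based on the excerpt above, not Tesla's implementation: the function names `cutout` and `random_box_within`, the `(top, left, height, width)` box format, and the assumption of 8-bit RGB images are all mine. Blur is left out to keep it short.

```python
import numpy as np


def random_box_within(bbox, frac, rng):
    """Pick a random sub-box covering `frac` of each side of `bbox`.

    Boxes are (top, left, height, width) in pixels (a hypothetical format).
    """
    top, left, h, w = bbox
    ch, cw = max(1, int(h * frac)), max(1, int(w * frac))
    ctop = top + int(rng.integers(0, h - ch + 1))
    cleft = left + int(rng.integers(0, w - cw + 1))
    return ctop, cleft, ch, cw


def cutout(image, box, fill="color", color=(128, 128, 128), donor=None, rng=None):
    """Return a copy of `image` with the pixels inside `box` replaced.

    Assumes `image` is a uint8 array of shape (H, W, 3).
    fill="color" uses a flat color, "noise" uses uniform random pixels,
    "image" copies the same region from `donor` (same shape as `image`).
    """
    rng = np.random.default_rng() if rng is None else rng
    out = image.copy()
    top, left, h, w = box
    region = out[top:top + h, left:left + w]  # a view into `out`
    if fill == "color":
        region[...] = np.asarray(color, dtype=image.dtype)
    elif fill == "noise":
        region[...] = rng.integers(0, 256, size=region.shape, dtype=np.uint8)
    elif fill == "image":
        if donor is None:
            raise ValueError("fill='image' needs a donor image")
        region[...] = donor[top:top + h, left:left + w]
    else:
        raise ValueError(f"unknown fill: {fill}")
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = np.zeros((120, 160, 3), dtype=np.uint8)  # stand-in camera frame
    car_bbox = (40, 60, 40, 50)                      # made-up label for illustration
    box = random_box_within(car_bbox, frac=0.5, rng=rng)
    augmented = cutout(frame, box, fill="noise", rng=rng)
    print(box, augmented.shape)
```

Placing the cutout inside the labeled bounding box partially hides the object, which is one way to read the patent's remark that cutouts "may be based on the label of the image". The label itself stays the same, so the network has to learn to detect the object from a degraded view.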

Tesla is patenting a clever way to train Autopilot with augmented camera images

If I understand this patent correctly, it basically uses "Photoshop"-style edits to alter the camera images so that they are more useful for training the neural network. Is that right?

Any thoughts on this?
