The following takes excerpts from @Cosmacelf's summary of Tesla's HotChips presentations on Dojo and FSD and presents them in article form.

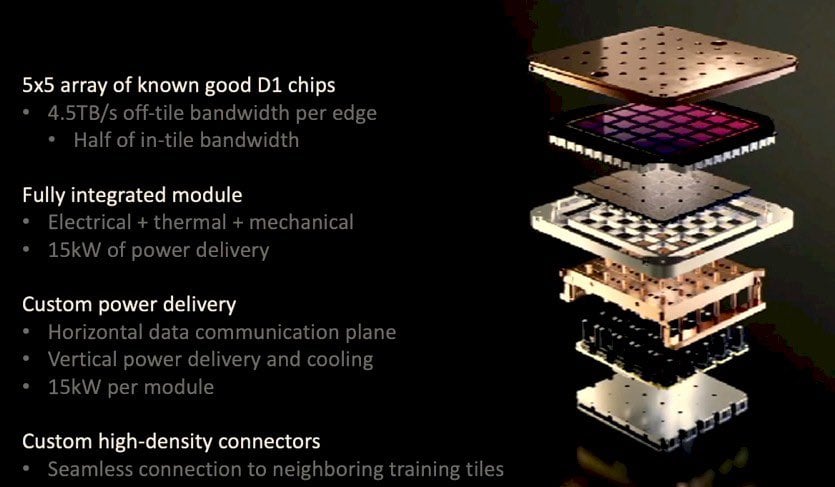

Tesla is more than an automotive company – it's also a tech company. So it made sense for Tesla to present more details about its Dojo supercomputer, first showcased at last year's AI Day, at the HotChips 34 conference, alongside presentations from companies such as Intel and NVIDIA. Cosmacelf, a well-known TMC member, wrote a summary thread discussing Tesla's talks:

Cosmacelf said: As we all know, FSD is chronically behind, and Tesla has been relying on their own GPU cluster (the world's 6th or 7th largest supercomputer GPU cluster) to bang out iteration after iteration of the FSD inference network. GPUs aren't the ideal hardware (they obviously were designed for something else), so Tesla decided to build a better training supercomputer.

The DOJO processing node is described as a high-performance, general-purpose CPU with a custom instruction set tailored to ML. Each node has 1.25 MB of high-speed SRAM shared by instructions and data; there is no cache. Each node has a proprietary off-node network interface that connects it to its 4 adjacent nodes (east/west/north/south). There is no virtual memory, memory protection is very limited, and resources are shared programmatically. Each node runs 4 threads; typically 1-2 are used for compute and 1-2 for communication at any one time.
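To make the nearest-neighbor layout concrete, here is a minimal Python sketch of a 2D grid in which each node links only to its east, west, north, and south neighbors. The grid size, the `(row, col)` coordinates, and the function names are hypothetical illustrations, not Tesla's design. The hop count is the Manhattan distance, which is the minimum number of neighbor-to-neighbor links a message must cross on such a mesh, whatever routing scheme is actually used.

```python
from typing import List, Tuple

Node = Tuple[int, int]  # (row, col) position in a hypothetical rows x cols mesh


def neighbors(node: Node, rows: int, cols: int) -> List[Node]:
    """Return the east/west/north/south neighbors of a node, skipping mesh edges."""
    r, c = node
    candidates = [(r, c + 1), (r, c - 1), (r - 1, c), (r + 1, c)]  # E, W, N, S
    return [(nr, nc) for nr, nc in candidates if 0 <= nr < rows and 0 <= nc < cols]


def min_hops(src: Node, dst: Node) -> int:
    """Minimum number of links between two nodes on a 2D mesh (Manhattan distance)."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


if __name__ == "__main__":
    rows, cols = 4, 4  # hypothetical small mesh for illustration
    print(neighbors((0, 0), rows, cols))  # corner node: only 2 neighbors
    print(neighbors((1, 1), rows, cols))  # interior node: all 4 neighbors
    print(min_hops((0, 0), (3, 3)))       # opposite corners of the 4x4 mesh
```

Because each node only talks to its immediate neighbors, traffic between distant nodes has to be relayed hop by hop, which is one reason a node might dedicate some of its threads to communication.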

Tesla's Dojo supercomputer has been designed to massively outperform the company's GPU cluster, and a single "ExaPOD" of the system is built from over 1 million CPU nodes, all specialized for machine learning training. The system's training tiles are designed to connect to one another with 4.5 TB/s of off-tile bandwidth per connection, allowing them to be joined seamlessly into an even larger supercomputer.
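The "over 1 million nodes" figure for one ExaPOD can be reproduced with simple arithmetic. This sketch assumes two figures Tesla gave at AI Day 2021 that this article does not state, 354 processing nodes per chip and 120 training tiles per ExaPOD; the 25 chips per tile come from the 5x5 tile grid Cosmacelf describes.

```python
# Assumed figures (from Tesla's AI Day 2021 presentation, not stated in this article):
NODES_PER_CHIP = 354    # processing nodes per Dojo chip (assumption)
TILES_PER_EXAPOD = 120  # training tiles per ExaPOD (assumption)

# From the article: each tile integrates a 5x5 grid of chips.
CHIPS_PER_TILE = 5 * 5


def exapod_nodes(tiles: int = TILES_PER_EXAPOD) -> int:
    """Total processing nodes in a system built from `tiles` training tiles."""
    return tiles * CHIPS_PER_TILE * NODES_PER_CHIP


if __name__ == "__main__":
    chips = TILES_PER_EXAPOD * CHIPS_PER_TILE
    print(f"{chips} chips, {exapod_nodes():,} nodes")
```

Under these assumptions the total comes to 3,000 chips and 1,062,000 nodes, consistent with the "over 1 million" figure.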

Dojo's chips are built by TSMC on its 7nm process, and each chip has "the raw performance of 362 TFLOPS using BF16 arithmetic, or 22 TFLOPS using FP32 running at 2 GHz."
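Dividing the quoted throughput by the 2 GHz clock gives the work each chip completes per cycle, and comparing the two figures shows how strongly the design favors BF16 over FP32. This is back-of-the-envelope arithmetic on the quoted peak numbers only; real training throughput depends on utilization, which the summary does not give.

```python
CLOCK_HZ = 2e9       # 2 GHz, as quoted
BF16_FLOPS = 362e12  # 362 TFLOPS peak BF16 per chip, as quoted
FP32_FLOPS = 22e12   # 22 TFLOPS peak FP32 per chip, as quoted


def flops_per_cycle(peak_flops: float, clock_hz: float = CLOCK_HZ) -> float:
    """Peak floating-point operations completed per clock cycle."""
    return peak_flops / clock_hz


if __name__ == "__main__":
    print(f"BF16: {flops_per_cycle(BF16_FLOPS):,.0f} FLOPs/cycle")
    print(f"FP32: {flops_per_cycle(FP32_FLOPS):,.0f} FLOPs/cycle")
    print(f"BF16/FP32 ratio: {BF16_FLOPS / FP32_FLOPS:.1f}x")
```

That works out to about 181,000 BF16 operations versus 11,000 FP32 operations per cycle, roughly a 16.5x advantage for the lower-precision format commonly used in ML training.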

DOJO uses a custom communication protocol on top of their intra-node/intra-chip interconnects and Ethernet to shuttle packets around. This protocol, called TTP (Tesla Transport Protocol), is efficient enough to get 50 GB/s of bandwidth when going over Ethernet (yeah, that's 400 Gbps Ethernet, which is apparently a thing).

CREDIT: Tesla
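The two bandwidth figures in the TTP quote describe the same link in different units: Ethernet rates are quoted in bits per second and the TTP figure in bytes per second, so 400 Gbps divided by 8 bits per byte is 50 GB/s, meaning TTP is described as running at essentially full line rate. The sketch below performs that conversion and estimates an idealized transfer time; the 1 GB payload is a hypothetical example, and protocol overhead and latency are ignored.

```python
BITS_PER_BYTE = 8


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbps / BITS_PER_BYTE


def transfer_seconds(payload_bytes: float, gbytes_per_s: float) -> float:
    """Idealized transfer time at a fixed rate, ignoring protocol overhead and latency."""
    return payload_bytes / (gbytes_per_s * 1e9)


if __name__ == "__main__":
    rate = gbps_to_gbytes_per_s(400)  # 400 Gbps Ethernet
    print(f"{rate:.0f} GB/s")         # matches the quoted 50 GB/s
    payload = 1e9                     # hypothetical 1 GB buffer
    print(f"{transfer_seconds(payload, rate) * 1e3:.0f} ms for 1 GB")
```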

Communication speed between processing nodes is a top priority for machine learning chips. Making very large chips is one way to improve it, and one company opted to make 30cm chips. However, the larger a chip is, the more likely it is that at least one of its nodes will not work. Such designs therefore give up 100% functionality in exchange for faster communication between the nodes that do work.
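A common first-order way to see why big chips are risky is the Poisson yield model, in which the probability that a die has zero defects is Y = exp(-D * A) for defect density D and die area A. This is a textbook approximation, not something from Tesla's presentation, and the defect density below is a hypothetical value chosen only for illustration. The 2.5 cm figure is treated as the edge of a square die and the 30 cm part as a circle of 30 cm diameter.

```python
import math

DEFECTS_PER_CM2 = 0.1  # hypothetical defect density, for illustration only


def poisson_yield(area_cm2: float, defects_per_cm2: float = DEFECTS_PER_CM2) -> float:
    """Probability that a die of the given area has no defects (Poisson model)."""
    return math.exp(-defects_per_cm2 * area_cm2)


if __name__ == "__main__":
    small_area = 2.5 * 2.5                # 2.5 cm square die -> 6.25 cm^2
    wafer_area = math.pi * (30 / 2) ** 2  # 30 cm diameter -> about 707 cm^2
    print(f"2.5 cm die:  {poisson_yield(small_area):.1%} defect-free")
    print(f"30 cm wafer: {poisson_yield(wafer_area):.1e} chance of being defect-free")
```

Under this model a small die comes out flawless about half the time, while a wafer-scale part essentially never does, so the large design must route around dead nodes whereas small dies can simply be tested and the bad ones discarded.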

Tesla instead "opted to just make 2.5cm chips (big, but not impossibly so). They then test these chips to find the ones that work 100%. They then re-integrate these working chips back onto a wafer with interconnect logic on the wafer in a 5x5 grid. That's the core of the tile. I don't know if Tesla came up with this chip to wafer integration or whether they licensed this technology, but whatever, it is a great idea. This allows them to have blazingly fast interchip communications speeds on that tile."
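The test-then-integrate flow Cosmacelf describes (test every chip, keep only the fully working ones, place 25 of them on a tile) can be sketched as below. The random pass/fail test and the pass rate are hypothetical stand-ins for real chip testing; only the 5x5 layout and the per-chip 362 TFLOPS BF16 figure come from the article.

```python
import random
from typing import List

GRID = 5                # 5x5 chips per tile, per the article
CHIP_BF16_TFLOPS = 362  # per-chip BF16 peak, per the article
PASS_RATE = 0.6         # hypothetical fraction of chips that fully pass testing


def chip_passes_test(rng: random.Random) -> bool:
    """Hypothetical stand-in for a real chip test: True if every node on the chip works."""
    return rng.random() < PASS_RATE


def build_tile(rng: random.Random) -> List[List[int]]:
    """Test chips one at a time and place only known-good chips into a 5x5 grid.

    Returns the grid of chip serial numbers; chips that fail testing are discarded.
    """
    good: List[int] = []
    serial = 0
    while len(good) < GRID * GRID:
        if chip_passes_test(rng):
            good.append(serial)
        serial += 1
    return [good[r * GRID:(r + 1) * GRID] for r in range(GRID)]


if __name__ == "__main__":
    tile = build_tile(random.Random(0))
    chips = sum(len(row) for row in tile)
    print(f"{chips} known-good chips, ~{chips * CHIP_BF16_TFLOPS / 1000:.2f} PFLOPS BF16 peak")
```

Every tile therefore starts from 25 fully working chips, for a peak of about 9.05 PFLOPS BF16 per tile, at the cost of discarding the chips that fail testing.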

Cosmacelf went on to emphasize that:

This is Elon's ethos. He doesn't actually invent any new science. He takes existing technology and engineers the crap out of it to extract the best performance possible out of that core technology. Consider all the things he has done this with:

Rockets - liquid fueled rocket engines are an old proven technology. Elon engineered the F1, F9 and now Starship to go way past what others have achieved, but at the end of the day, they are still liquid fueled rockets.

Batteries - instead of waiting around for solid state batteries or exotic chemistries, he picked the best of what was available while also choosing a form factor (cylindrical) which would enable fast manufacturing. This choice seemed ridiculous at the time: why would you choose such a tiny form factor and stuff your car with 8,000 cells? Because you could manufacture it at scale, something competitors are still learning.

AI - rather than wait for better paradigms, Elon just went with the current state of the art and threw tons of engineering at it to create a huge feedback system (each Tesla car helps the learning system) and a huge training computer, etc. Again, no real new AI paradigm was invented (yet).

In designing what it intends to be the most efficient machine learning computer, Tesla has taken existing technology and engineered it to a pinnacle, the same approach that, as Cosmacelf and many others point out, Elon Musk's companies have applied to rockets, batteries, and AI. Dojo shows once again why Tesla is more than an auto manufacturer, and we hope to see comparative performance numbers, which are expected at Tesla's AI Day on September 30th, 2022.

Cosmacelf's full summary of Tesla's presentations is linked below; feel free to check it out and give it a like:

Heads up: Dojo details given at HotChips conference

This Tuesday, there are three Tesla presentations, two on DOJO, one on their AI architecture being given here: https://hc34.hotchips.org/program/