Tesla announced today that they are transitioning away from ultrasonic sensors and replacing them with Tesla Vision:

www.tesla.com

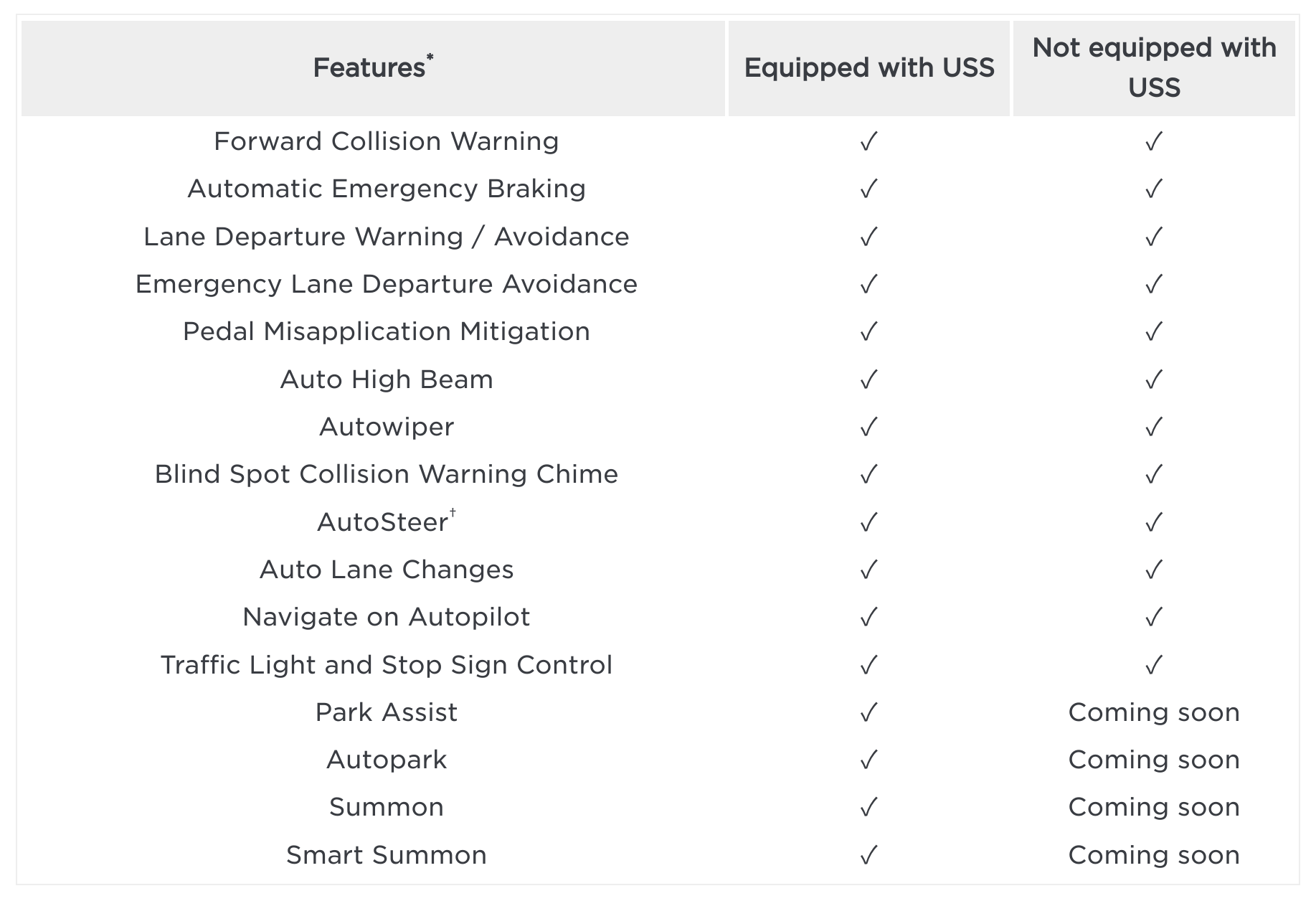

Today, we are taking the next step in Tesla Vision by removing ultrasonic sensors (USS) from Model 3 and Model Y. We will continue this rollout with Model 3 and Model Y, globally, over the next few months, followed by Model S and Model X in 2023.

Along with the removal of USS, we have simultaneously launched our vision-based occupancy network – currently used in Full Self-Driving (FSD) Beta – to replace the inputs generated by USS. With today’s software, this approach gives Autopilot high-definition spatial positioning, longer-range visibility and the ability to identify and differentiate between objects. As with many Tesla features, our occupancy network will continue to improve rapidly over time.

For a short period of time during this transition, Tesla Vision vehicles that are not equipped with USS will be delivered with some features temporarily limited or inactive, including:

- Park Assist: alerts you to surrounding objects when the vehicle is traveling at less than 5 mph.

- Autopark: automatically maneuvers into parallel or perpendicular parking spaces.

- Summon: manually moves your vehicle forward or in reverse via the Tesla app.

- Smart Summon: navigates your vehicle to your location or to a location of your choice via the Tesla app.

In the near future, once these features achieve performance parity with today’s vehicles, they will be restored via a series of over-the-air software updates. All other available Autopilot, Enhanced Autopilot and Full Self-Driving capability features will be active at delivery, depending on order configuration.

Tesla Vision Update: Replacing Ultrasonic Sensors with Tesla Vision | Tesla Support

Safety is at the core of our design and engineering decisions. In 2021, we began our transition to Tesla Vision by removing radar from Model 3 and Model Y, followed by Model S and Model X in 2022. Today, in most regions around the globe, these vehicles now rely on Tesla Vision, our camera-based...