Hello, I'm new to the forums.

I was hoping to have a discussion about Tesla autopilot hardware.

Specifically, it seems as though Tesla is going in an entirely different direction when it comes to self-driving technology.

From listening to investor conference calls, it seems as though Tesla's final self-driving system will rely on very fine maps created by GPS (SpaceX satellite cluster perhaps), and not a LIDAR system creating a 360-degree map of the vehicle's surroundings. Essentially, Tesla vehicles seem to be just following lines created by GPS, combined with self-learning from people actually driving a Tesla.

Why are all other automakers installing LIDAR on their vehicles, while Tesla is alone with a simple sensor suite combined with GPS and a front-facing camera?

From what I can tell:

Sensor Suite Advantages: Can see through snow/rain/dust, cheaper, probably more standard off the shelf.

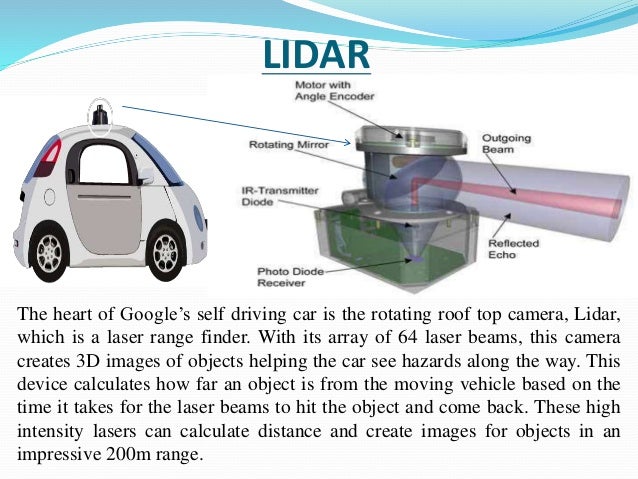

LIDAR Advantages: ?Laser beamz?...am I missing something?

Perhaps this is not the forum for an unbiased assessment, but does anyone know the advantage of LIDAR over the Tesla approach? Any information that you may have would be great.

Thanks very much in advance.

Mark
