Tesla, TSLA & the Investment World: the Perpetual Investors' Roundtable

- Thread starter AudubonB

Tslynk67

Well-Known Member

> Is this an inside joke based on his 6 month old videos?

Several years back, @TrendTrader007 used to post here regularly. A typical message would be "Based on the chart, $TSLA poised for huge break out to $XXX". That is OK, but he'd post these every other week. Of course it never happened, and he ended up getting laughed at so much that he left, returned, left, returned, then finally left, until he returns again...

He couldn't handle people criticising his perpetual proclamations and started to be abusive, with stuff like "screw you amateurs, I've more money than all of you combined, go f yourselves", or words to that effect.

We never knew how much $TSLA he had, but he implied it was >100k shares pre-split. He was forever flipping in and out of stock and calls. In theory he should be a billionaire by now, who knows...

I follow him on Twitter, he's somewhat schizophrenic, one day he's loading on $TSLA, the next he claims the apocalypse is coming and he has sold everything and moved into cash

EnergyMax

Member

> So you watched the entire thing before it was taken down? How about throw us a bone and do a TLDR?

The Twitter user was able to upload clips from his browser cache.

TLDW: vision is the future, lidar is a losing proposition. Their current training dataset is 1.5 PB, for which they have a 1.5 exaflop NVIDIA-based cluster, which would rank as the #5 fastest supercomputer in the world. He mentioned the word Dojo, but was unwilling to provide details.

RobStark

Well-Known Member

EV share jumps to 2.3% as registrations surge

Their registrations have risen nearly three times faster than overall vehicle registrations in the U.S. through April, according to data from Experian.

Great thread on the Karpathy talk about Tesla FSD. A must watch. The YouTube video was taken down, temporarily I hope.

Direct YouTube link to entire talk:

Edit: This is a must watch. If you only have limited time skip to 3:30, but the rest is very information rich.

> Andrej Karpathy Tesla Autonomous Driving Talk CVPR June 20 2021

CVPR, great, no confidential information then, but it would be too technical for Wall Street to understand.

Another advantage provided to us retail investors, cheers!

Btw: Andrej sounds like 2x speed playback on YouTube, not just the speed, the voice compression artifacts too… are we sure he is not an AGI secretly released by OpenAI?

Mike Smith

Active Member

Sorry, I just realized the Karpathy video I posted is missing the last 10 minutes so I deleted it. @MikeAtkinson's video two posts above is complete.

StealthP3D

Well-Known Member

> Direct YouTube link to entire talk:

Very informative!

But I must say, I was not too impressed with the page titled "Triggers" (at 16 min.) that referred to the act of braking four times, and every single time it was spelled "break".

What is going on these days? Why is it so common to misspell "brake" as "break"? I mean, everyone makes occasional mistakes and has "brain farts" but the 21st century has had an epidemic of misspellings of the word "brake" compared to the 20th century.

> The plan has been to start with imported batteries from Kato since forever. If they can stick to this, it looks like they'll be producing cars at around the same time as Austin. Not bad even with three months headstart, considering all the permit delays and Austin using five times the personnel for construction.

OK, I thought those were only rumours (or educated guesses: Elon said they would do structural battery packs, but as there was no cell production in the first iteration of the planning documents, Kato would have been the only possible source). Judging from the likes your post got, that is not the case.

Also, if they do both 2170 and 4680 as @avoigt implied, I would expect them to start with 2170 and not jump to the structural battery pack in the beginning.

> Don’t think I’ve seen this before? Tesla app under Upgrades>Manage Upgrades I’m seeing this:
>
> View attachment 675648
>
> (I already have AP and FSD).
>
> Sorry if this isn’t new.
>
> edit: typo

As expected, not there for me (Europe).

Wicket

Member

> Direct YouTube link to entire talk:
>
> Edit: This is a must watch. If you only have limited time skip to 3:30, but the rest is very information rich.

Does anyone else have the problem where you try to hit the maximize button on the video and you are so excited you instead hit the far right of the itty bitty timeline and then you have to figure out where the heck you were before and then you realize it still isn’t maximized, and so then you think to yourself ‘next time I do this I’ll maximize first and *then* go back and find my place’? THAT is how Tesla is solving FSD. -Joe Rogan (ed: not really him)

> Direct YouTube link to entire talk:
>
> Edit: This is a must watch. If you only have limited time skip to 3:30, but the rest is very information rich.

Agreed, must watch, not very technical actually, so don’t be intimidated just because it’s for the CVPR conference.

My notes:

- Briefly touched on vision vs lidar and mentioned that lidar is not a generalizable solution.

- Vision is now working well enough that they can start to remove other sensors.

- Mainly focused on using pure vision to remove radar from AEB (automatic emergency braking) to illustrate how Tesla AI works.

- Starts with data acquisition: they have 221 triggers running that record a 10 sec video and send it back for events like “driver brakes hard on freeway” or “detected lead car slowing down but driver didn’t brake”, etc.

- Offline auto labeling to get accurate depth/velocity. With the benefit of hindsight and more compute, auto labeling can actually produce very good quality labels.

- Train the net and deploy it to the fleet in shadow mode.

- Then collect more disagreements and retrain.

- 7 rounds of shadow mode deployment before final release.

- In total 1M clips collected, 6 billion labels, 1.5 PB dataset size (some tweets interpreted this as the total size of the FSD dataset; my interpretation is that 1.5 PB is for the AEB feature alone).

- Compared with the legacy radar solution, the new pure vision system avoids phantom braking, detects a stopped truck earlier and works more reliably when the lead car brakes hard (in that case the radar lost tracking of the lead car and re-acquired it a few times in one second, which produced a lot of noise).

- QA process includes 10k simulation scenarios, 10 years of QA driving and 1000 years of shadow mode driving. (Next time Waymo brags about simulation we can say Tesla does that too, on top of millions of real world video clips, hand picked from billions of miles of real world drives.)

- Tesla's training cluster has 5760 NVIDIA A100 GPUs, the 5th largest supercomputer in the world. And this is only 1 of the 3 clusters they are building. Next step is Dojo, but he is not ready to talk about that yet.

- Was asked whether adding other sensors would be helpful, for example an infrared camera. Answered that there is an infinite number of sensors you can choose from, but they still think cameras in the visible spectrum are the best choice: they have all the information needed for driving, basically necessary and sufficient for FSD.
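To make the trigger idea from these notes a bit more concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Frame` fields, the thresholds, the clip window placement and the two trigger functions are illustrative stand-ins for the two example events in the notes, not Tesla's actual implementation. Only the 10 second clip length comes from the notes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Frame:
    """One telemetry sample. All fields are hypothetical."""
    t: float                # seconds since the start of the drive
    speed_mps: float        # ego speed
    ego_decel_mps2: float   # positive when the ego car is slowing down
    lead_decel_mps2: float  # positive when the lead car is slowing down
    brake_pressed: bool
    on_freeway: bool


# A trigger here is a predicate over a single frame (a simplification).
Trigger = Callable[[Frame], bool]


def hard_brake_on_freeway(f: Frame) -> bool:
    # The 6 m/s^2 threshold is an assumed value.
    return f.on_freeway and f.brake_pressed and f.ego_decel_mps2 > 6.0


def lead_slows_driver_does_not_brake(f: Frame) -> bool:
    # The 3 m/s^2 threshold is an assumed value.
    return f.lead_decel_mps2 > 3.0 and not f.brake_pressed


TRIGGERS: Dict[str, Trigger] = {
    "hard_brake_on_freeway": hard_brake_on_freeway,
    "lead_slows_driver_does_not_brake": lead_slows_driver_does_not_brake,
}

CLIP_SECONDS = 10.0  # clip length mentioned in the notes


def find_clips(frames: List[Frame]) -> List[dict]:
    """Return one clip request per trigger firing.

    Firings that fall inside a clip already requested for the same
    trigger are skipped. Centering the clip on the firing time is an
    assumption made for this sketch.
    """
    clips: List[dict] = []
    last_end: Dict[str, float] = {}
    for f in frames:
        for name, trigger in TRIGGERS.items():
            if trigger(f) and f.t >= last_end.get(name, float("-inf")):
                start = max(0.0, f.t - CLIP_SECONDS / 2)
                end = start + CLIP_SECONDS
                clips.append({"trigger": name, "start": start, "end": end})
                last_end[name] = end
    return clips
```

In this sketch each new event type is just one more entry in `TRIGGERS`, which is one plausible way a fleet could end up running hundreds of them side by side.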

Demonstrated a lot of speculated Tesla AI moats, data collection triggers, auto labeling, shadow mode, training clusters, etc.
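The loop from the notes (train, deploy in shadow mode, collect disagreements, retrain) can be sketched as a toy example. This is only an illustration under heavy assumptions: the "model" is a single hypothetical time-to-collision threshold, `train`, `shadow_mode` and `data_engine` are made-up names, and the labels come straight from what the driver did, whereas the talk describes offline auto labeling for that step.

```python
from typing import List, Sequence, Tuple

# (hypothetical feature: time-to-collision in seconds, did the driver brake?)
Sample = Tuple[float, bool]


def predict(threshold: float, ttc: float) -> bool:
    """Toy model: brake when time-to-collision is below the threshold."""
    return ttc < threshold


def train(dataset: Sequence[Sample]) -> float:
    """Toy 'training': pick the candidate threshold with the fewest errors."""
    candidates = sorted({ttc for ttc, _ in dataset})

    def errors(threshold: float) -> int:
        return sum(predict(threshold, ttc) != braked for ttc, braked in dataset)

    return min(candidates, key=errors)


def shadow_mode(threshold: float, drives: Sequence[Sample]) -> List[Sample]:
    """Run the model silently next to the driver and keep the disagreements."""
    return [(ttc, braked) for ttc, braked in drives
            if predict(threshold, ttc) != braked]


def data_engine(seed: Sequence[Sample],
                fleet_rounds: Sequence[Sequence[Sample]]):
    """One shadow-mode deployment per entry in fleet_rounds, then retrain."""
    dataset = list(seed)
    model = train(dataset)
    history = []  # number of disagreements found in each round
    for drives in fleet_rounds:
        disagreements = shadow_mode(model, drives)
        history.append(len(disagreements))
        dataset.extend(disagreements)
        model = train(dataset)
    return model, history


if __name__ == "__main__":
    seed = [(0.5, True), (5.0, False)]
    drives = [(1.0, True), (3.0, False), (4.0, False)]
    # The notes mention 7 rounds before final release.
    model, history = data_engine(seed, [drives] * 7)
    print("threshold:", model, "disagreements per round:", history)
```

The point of the sketch is the shape of the loop: the number of disagreements per round should shrink as the hard cases are folded back into the training set.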

Also shows great progress on pure vision using the example of vision AEB.

Bullish AF!
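For a feel of the dataset numbers in the notes, here is a quick back-of-the-envelope calculation. It assumes decimal petabytes and that every clip is a 10 second recording as described for the triggers. Both are assumptions on top of the notes, not figures from the talk.

```python
CLIPS = 1_000_000        # "1M clips" from the notes
LABELS = 6_000_000_000   # "6 billion labels" from the notes
DATASET_BYTES = 1.5e15   # "1.5 PB", assuming decimal petabytes
CLIP_SECONDS = 10        # assumed: every clip is a 10 s trigger recording

bytes_per_clip = DATASET_BYTES / CLIPS
labels_per_clip = LABELS / CLIPS
total_clip_days = CLIPS * CLIP_SECONDS / 86_400  # seconds per day

print(f"{bytes_per_clip / 1e9:.1f} GB per clip")
print(f"{labels_per_clip:.0f} labels per clip")
print(f"{total_clip_days:.0f} days of clip time")
```

Under those assumptions this works out to 1.5 GB and 6000 labels per clip, and about 116 days of clip time in total.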

> Ok, thought that were only rumours (or educated guesses - Elon said they would do structural battery packs but as in the first iteration of the planning documents there was no cell production, Kato would have been the only possible source). Judging from the likes your post got that is not the case. Does someone have a source?
>
> Also, if they do both 2170 and 4680 as @avoigt implied, I would expect they would start with 2170 and not jump to structural battery pack in the beginning.

The article from @avoigt suggested via a leak that there would be two production lines at Giga Berlin. However, I also recall this Business Insider article from earlier in the month that claims that Elon scrapped the second production line during his most recent Berlin visit: "Tesla: Elon Musk overturned construction plans during his most recent visit to Grünheide".

"Also, instead of having two production lines on site, Musk has requested a single one. According to information from Business Insider, the Tesla boss is said to have clashed with manager Marcel Jost because of his ideas - one of the reasons for the Tesla manager's recent departure."

I wouldn't normally trust BI reporting on Tesla but I'm still curious which is the more accurate and up to date with Tesla's current plans? I could understand Elon scrapping the second 2170 line if he had high confidence the 4680 cells would be available. But the contingency of a second line is also logical and understandable.

UkNorthampton

TSLA - 12+ startups in 1

> A friend of mine might be getting a tesla. I was looking on their CPO site and was amazed at the prices of the CPO cars. They are as much as a new one. There also were not many cars available either.

Tesla related: UK second hand prices for Model 3 less than 2 years old (introduced 2019) are down about 8% (Autotrader UK asking prices plus other sources*). Only exotics fare better (and you might have to be VERY special for the OEM to even consider you as a customer). Parkers is saying lower: the lowest private sale being around 78%, highest dealer price 91% after 2 years (Free valuation | Parkers).

That makes a huge difference to Total Cost of Ownership and is extra encouragement to fleet buyers (company car, hire car, taxi).

* https://www.youtube.com/c/JonathanPorterfield/videos Eco Cars Orkney was on Fully Charged (I think) saying used/new Tesla Model 3 difference only around £400

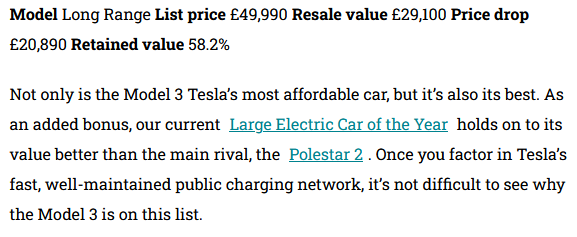

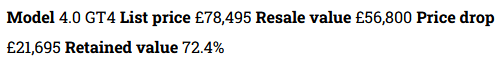

TM3 comes in at no 9 for least depreciating vehicles (58.2%) - but numbers are rubbish/estimated. Estimation is over 3 years - so not based on real life as TM3 not sold long enough in UK. The best is Porsche Cayman at 72.4% retained after 3 years. I think Tesla Model 3 will be beating this! Plus resale for ICE in a few years will be poor.
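The retained-value percentages quoted here cover different periods (roughly 2 years for the asking prices, 3 years for the What Car estimates), so one rough way to compare them is to convert each into an equivalent yearly loss. The sketch below assumes a constant compounding rate, which real depreciation curves do not follow, and treats "down about 8%" as 92% retained after 2 years. The function name is made up for illustration.

```python
def annual_depreciation(retained_fraction: float, years: float) -> float:
    """Constant yearly loss rate that compounds to the retained fraction."""
    return 1.0 - retained_fraction ** (1.0 / years)


# Figures as quoted in this post; the 2-year horizon for the first is assumed.
examples = {
    "Model 3, UK asking prices (92% after 2 years)": (0.92, 2),
    "Model 3, What Car estimate (58.2% after 3 years)": (0.582, 3),
    "Porsche Cayman, What Car estimate (72.4% after 3 years)": (0.724, 3),
}

for label, (retained, years) in examples.items():
    rate = annual_depreciation(retained, years)
    print(f"{label}: {rate:.1%} per year")
```

On a per-year basis the observed UK asking prices imply a much slower loss than the estimate in the What Car table.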

The 10 slowest-depreciating cars in 2023

Want your next car to hold on to as much of its value as possible? You'll need to buy one of these

www.whatcar.com

> Agreed, must watch, not very technical actually, so don’t be intimidated just because it’s for CVPR conference.

Good summary!

One thing I'm confused by is on the slide at 29:20

Release: 15M miles, 1.7M on Autopilot (0 crashes)

That must be a very recent release to have so few Autopilot miles, but there does not seem to be a recent release according to TeslaFI (last was 2021.4.18.3 on 10 June).

> That must be a very recent release to have so few Autopilot miles, but there does not seem to be a recent release according to TeslaFI (last was 2021.4.18.3 on 10 June).

Or only HW3 cars.

Or massively reduced travel due to corona in many parts of the world.

Or only a rollout in US.

There can be MANY reasons for these numbers.

> Agreed, must watch, not very technical actually, so don’t be intimidated just because it’s for CVPR conference.

I watched his talk. It was a lot like I was speculating half a year ago.

They have an entire stack for generating labels, using a lot of compute to do it. They are probably using a lot of pseudo-lidar techniques to generate the labels, and have cute 4D visualizers to support the labelling team when they clean up the dataset and analyze potential edge cases.

7 iterations of the data engine already for Tesla Vision; hopefully that has improved performance enough that they can finally release it “in about two weeks”.

Tesla Vision will not fail like the legacy radar system with phantom braking. Often these were radar detections near the lead vehicle making the car think for a few ms that the car in front was braking.

Tesla are now using transformers. This will likely improve performance as it will have better recall. Expect some interesting “online learning” and “hd-maps” comments from youtubers and tweeters, as it will be able to “see” more than the cameras can see and maybe “remember” a block or two.

I wonder if their new reliance on transformers forced a redesign of HW4.

Again, this is mainly an ad for recruiting top talent to Tesla. Every elite ML-ninja will watch it and want to work for Tesla…

The talk made me very bullish. And it should scare the competition.

JohnnyEnglish

Member

> Agreed, must watch, not very technical actually, so don’t be intimidated just because it’s for CVPR conference.

This is another brilliant presentation by Andrej.

The Dojo precursor is incredibly impressive. The actual performance of supercomputers addressing this type of problem is always constrained by data flow between the computational nodes, not the compute speed of the nodes themselves, so it is very interesting to see Andrej quoting the (incredible) switching capacity of 640Tbps. Unsurprisingly this looks to be following the 'graph' computer approach discussed by Jim Keller in his last interview with Lex Fridman.

Presumably the custom silicon which Zach mentioned in the Q1 ER will be for the first true Dojo implementation.
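As a rough sanity check on the cluster figures in this thread, the arithmetic below combines the 5760 A100 GPUs from the talk notes with the 640 Tbps switching capacity. The per-GPU number of 312 TFLOPS is an assumption taken from NVIDIA's published dense FP16 tensor-core peak for the A100, and both results are theoretical peaks, not sustained performance. Dividing total switching capacity by GPU count is also a simplification, since it says nothing about the actual network topology.

```python
GPUS = 5760              # from the talk notes earlier in the thread
A100_FP16_TFLOPS = 312   # assumption: NVIDIA's published dense FP16 tensor peak
SWITCHING_TBPS = 640     # total switching capacity quoted above

# 1 EFLOPS = 1e6 TFLOPS
peak_eflops = GPUS * A100_FP16_TFLOPS / 1e6

# 1 Tbps = 1000 Gbps
gbps_per_gpu = SWITCHING_TBPS * 1000 / GPUS

print(f"theoretical peak: {peak_eflops:.2f} EFLOPS (FP16)")
print(f"switching capacity per GPU: {gbps_per_gpu:.0f} Gbps")
```

Under these assumptions the peak comes out at about 1.8 EFLOPS, the same order as the exaflop-scale figure quoted earlier in the thread, with roughly 111 Gbps of switching capacity per GPU.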

> Several years back, @TrendTrader007 used to post here regularly. Typically would be a message like "Based on the chart, $TSLA poised for huge break out to $XXX" - this is OK, but he'd post these every other week, of course it never happened and he ended up getting laughed at so much that he left, returned, left, returned, then finally left, until he returns again...
>
> He couldn't handle people criticising his perpetual proclamations and started to be abusive, with stuff like "screw you amateurs, I've more money than all of you combined, go f yourselves", or words to that effect
>
> We never knew how much $TSLA he had, but he implied it was >100k shares pre-split. He was forever flipping in and out of stock and calls. In theory he should be a billionaire by now, who knows...
>
> I follow him on Twitter, he's somewhat schizophrenic, one day he's loading on $TSLA, the next he claims the apocalypse is coming and he has sold everything and moved into cash

First video of his I'd seen. I'd like better work.

His analysis at the start might beat many of the so-called TA people in the TA forum, but it was way too brief. Really chill approach, clearly not looking for followers or else he easily could have thrown some screen grabs in. He had a nice written down list of rules on a recent video, said no options! He seemed really respectful of his lady, interested in what she had to say, but it came across as a lot of rambling, akin to a bar or break room conversation.

Most of my money came through buy and hold. I really dislike the grandiosity of some over the years here. I certainly dislike when I exhibit it. I crave attention yet hate wanting it.

If he has been schizo recently, moving in and out, would make sense, it has been a getting your carrot chopped kind of market.

I'm happy he is bullish this week, I'm still in the camp that this takes some time to sort out. I like his QQQ/TSLA comments, find them true, just not confident as to when. Me thinks greater chance of it after next couple months. End of year should be good. Beware bold bullish moves during holiday lull!!! What moves will maximize EOY bonuses?

Arguing with people just to prove one is right is counterproductive. Arguing to sharpen one's opinions, gain new insights, understand competitors, or help those that want to learn, those are all worthy things. Many here just want to be right and think in black and white rather than gray. I wonder if mods somewhat reinforce some of this also, indirectly. Posts get banned when positions or viewpoints get criticized. Swords and knives are sharpened against stones; similarly, the metal that some bring to the table here is pretty dull and requires stones to sharpen it. The end result is that what passes for research, insight, knowledge, advice, TA, or guidance is often lacking, incomplete, or insufficient.

I really hope TSLA has the battery manufacturing down, that is key. If they get all the current capacity built and then have a superior battery with all the cost reductions it is game over for new entrants, especially if TSLA keeps using current suppliers for all they can make. I suspect that CT and Semi need not come out until Y demand slows down. If Y is profitable, no need to expand further, just keep other stuff on the shelf. That is why the mini china car or 25K car needs not have design party yet.

In other news, keep up with the free test rides for everyone folks. I let a guest drive our car and he will likely order one next few months.

> Direct YouTube link to entire talk:
>
> Edit: This is a must watch. If you only have limited time skip to 3:30, but the rest is very information rich.

Tell Gary Black the TSLA PR team is alive and well.
