Maybe Alec should take his own advice and just do acting.

I never cared much for Alec Baldwin, but I do find this film with its stellar cast recommendable:


Yes, it is.

This logic makes the flawed assumption that driving a car safely is an "unknowable" task, that there's an almost infinitely long tail of edge cases.

This is not so.

You don't have to be safer than the average human, you have to be safer than the best human.

Firstly, the bar of "driving 10x-100x safer than a human" is in fact very low:

We should also consider that while the road to the "perfect" NN with correct handling of all corner cases might be a long one, another path to full autopilot is to simply avoid the problematic cases.

If you can't handle the 'bike on a car' situation, fall back to a safe distance and continue the journey at a slower pace, reroute or wait for another (human driven) car to lead you through the situation. That's still full self driving.

And while it might not get you many friends, slowing down or stopping (situation permitting) and simply waiting for the situation to resolve itself is also a possible way around unknown issues.

It's worth noting that Musk is now absolutely confident on autopilot, so he has likely decided to encourage leasing on a larger scale than in the past, so that Tesla will have a large taxi fleet coming off lease in 3 years.

I'd look for a stronger word. If there's one sentence to remember from Musk on the Autonomy Day conference, it's:

[Autopilot] is basically our entire expense structure

Oh my dear God! If this is true, the new raise plus this extends Tesla's runway to nearly 5 years even if it continues to lose money at this rate, by which time the semi truck will definitely make a killing with limited FSD on highway.

I'm not kidding. Here's a trusted source: Mark B. Spiegel on Twitter

I'd look for a stronger word. If there's one sentence to remember from Musk on the Autonomy Day conference, it's:

I think Semi will be bigger than Model 3. I don’t know why this is rarely discussed.

The Semi can make a killing even if it doesn't have any FSD at all. The economics of electric trucks are overwhelming vs. diesel.

Furthermore: Even if you have to pay a human driver with a CDL to sit in every truck, you can probably pay less if the truck mostly drives itself, since it's a less stressful job.

This post shows the first example I have seen of the diagnostics reporting an issue before it causes a problem and having the part automatically shipped to your service center.

Hopefully they can do more predictive failure detection like this and that it actually streamlines service.

This is probably a good approach. It won't make for a popular taxi ride, but it might work for getting the taxi to the pickup point.

It's worth noting that Musk is now absolutely confident on autopilot, so he has likely decided to encourage leasing on a larger scale than in the past, so that Tesla will have a large taxi fleet coming off lease in 3 years.

None of this is true. Absolutely none of it.

Wheee! I am blocked on his twitter....he must not have liked the power of my 22 followers!

I'm not kidding. Here's a trusted source: Mark B. Spiegel on Twitter

I think we can now get a rough idea of the Tesla/Fiat credit deal with your model and the new info from FCA:

So assuming continued credit purchases of €280m per year from Tesla in the US, this is €840m over the next 3 years. This leaves €1bn of credit purchases in the EU. We can estimate that this €1bn EU credit purchase offsets the €390m 2019 fine, €2.5bn * 80% = €2bn of the 2020 fine and €2.5bn * 15% = €375m of the 2021 fine, or a total of €2,765m. This makes the EU Tesla payment 36% of the estimated EU fine, so this is roughly 3x more cost efficient for FCA. This number also roughly aligns with the €120m credit purchase for a €390m fine reduction in 2019. I think 80% EU compliance would need about 170k EVs in 2020, so this looks like c.€4.2k per car in the EU ($4.7k or likely +c.10% to Tesla's EU Model 3 gross margin).

- FCA says in 2018 global credit purchases and non-compliance fines cost €600m. Tesla sold $316m (€280m) of US GHG credits in 2018, presumably all to FCA. I would guess there was no EU fine last year? If so, they had €280m US GHG purchases and a €320m US fine.

- They expect 2019 to be moderately up from €600m.

- FCA expected a €390m fine this year (well done @generalenthu with your €430m estimate!). This sounds like they are referring to EU, but not completely clear. With the new credit deal they now expect to be close to compliance in 2019.

- EU compliance costs are now expected at around €120m this year. I think we can presume this is all credit purchases from Tesla. It also aligns with the $140m deferred reg credit revenue in Tesla's Q1 report.

- We also know the total FCA cost of EU and NAFTA credit purchases is €1,800m. As far as we know this is all from Tesla.

- From your model FCA's EU fine would be €2.5bn in 2020 and 2021.

- The transcript isn't clear if they are talking 2020 or 2019, or global/EU, but it sounds like FCA expects 80% of its EU fine will be reduced by credits in 2020 (with 20% from other tech) and 15% in 2021 (with 40% NEV tech & 45% conventional tech).

If this is correct, Tesla should get c.€120m from FCA for the EU and c.€280m from FCA for the US in 2019 (total $450m). In 2020 they should get c.€720m for the EU and €280m in the US (total $1.1bn). Tesla should be able to record this as straight profit.
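For anyone who wants to check the arithmetic, here is a rough Python sketch of the estimate above. All inputs are the figures quoted in this post; the variable names are made up for illustration, and rounding the EU portion up to €1bn follows the post rather than anything FCA or Tesla has stated.

```python
# All amounts in millions of euros; every input is an estimate from the post.
total_deal = 1800                    # total FCA credit purchases (EU + NAFTA)
us_per_year = 280                    # assumed continued US purchases per year
us_total = us_per_year * 3           # three years of US purchases
eu_exact = total_deal - us_total     # what is left for the EU
eu_total = 1000                      # rounded to EUR 1bn, as in the post

# Fines assumed to be offset by credits in each year.
fines_offset = {
    2019: 390,
    2020: 2500 * 80 / 100,           # 80% of the modelled 2020 fine
    2021: 2500 * 15 / 100,           # 15% of the modelled 2021 fine
}
total_offset = sum(fines_offset.values())

# Payment to Tesla as a share of the fine avoided.
ratio = eu_total / total_offset

# Implied 2020 EU payment and payment per EV (170k EVs is a guess from the post).
eu_2020 = ratio * fines_offset[2020]
per_car_eur = eu_2020 * 1_000_000 / 170_000

print(f"US total: {us_total}m, EU remainder: {eu_exact}m")
print(f"Fines offset: {total_offset:.0f}m, payment/fine ratio: {ratio:.0%}")
print(f"2020 EU payment: {eu_2020:.0f}m, per car: {per_car_eur:.0f} EUR")
```

The per-car figure moves a little depending on whether you use the rounded €720m or the unrounded 2020 payment, so treat it as a ballpark.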

Anyone agree/disagree with these assumptions?

If this is being implemented, it'll help a lot. (No evidence of implementation yet.) I know people who, facing weird situations, have simply decided "time to pull over and call the cops for a rescue". If this is implemented, they could get to pseudo-full self-driving much quicker. (Again, no signs that they're doing this yet.)

Indeed, quantifying uncertainty and responding with appropriate caution is really important. For example, if the car can't easily classify the object that it is following, or if that object is behaving in atypical ways, then the car should not follow closely.

Human drivers do this all the time. The car ahead is doing something strange, not driving smoothly down the center of the lane, so you give it a wide berth. You don't need to know if the driver is drunk, having a heart attack, or the car has blown a tire. There are many edge case reasons why the object is not smoothly conducting itself down the road. But it does not matter whether you can accurately diagnose the root cause. You simply know enough to back off.

Yep. I look forward to the announcement where they say they're doing that. They didn't mention it at autonomy day, therefore they're not doing it yet.

So a NN can definitely develop expectations about typical behavior or objects typically seen on the roads. When something is observed to depart substantially from these expectations, this should raise the level of uncertainty. In simple statistical modeling, we watch the residuals to see when the model is fitting poorly. There are many ways to measure departures from expectations. So it is possible to measure uncertainty in real time. The real-time response should be to back off and increase various margins of safety.
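To make the residual idea concrete, here is a toy Python sketch. It is purely illustrative: the lane-offset feature, the function names, the 3-sigma threshold and the gap values are all assumptions of mine, not anything Tesla has described.

```python
from statistics import mean, pstdev

def surprise_score(history, observed):
    """Standardized residual: how many standard deviations `observed`
    lies from the mean of the recently observed `history`."""
    mu = mean(history)
    sigma = pstdev(history) or 1e-9   # guard against a perfectly flat history
    return abs(observed - mu) / sigma

def following_gap_seconds(score, base_gap=2.0, threshold=3.0, max_gap=6.0):
    """Keep the normal gap while behavior looks typical; widen it in
    proportion to the surprise once the threshold is exceeded."""
    if score <= threshold:
        return base_gap
    return min(max_gap, base_gap * score / threshold)

# Hypothetical lane-center offsets (meters) of the vehicle ahead.
recent_offsets = [0.0, 0.1, -0.1, 0.05, -0.05]

typical = following_gap_seconds(surprise_score(recent_offsets, 0.05))
erratic = following_gap_seconds(surprise_score(recent_offsets, 0.8))
print(typical, erratic)
```

Note that the car never has to decide why the vehicle ahead is weaving. A large residual is enough to trigger a wider margin.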

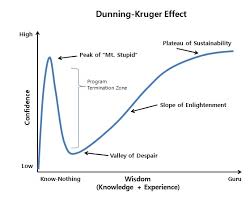

So where is Tesla FSD on the Dunning-Kruger curve? Is it at the peak of Mt Stupid? Has it finished descending to the Valley of Despair? Or is it now climbing the Slope of Enlightenment?

Oooooo! You’re a tricky dicky. Noted.

I think I have more $TSLAQ people that block me than I have actual followers.

Wheee! I am blocked on his twitter....he must not have liked the power of my 22 followers!

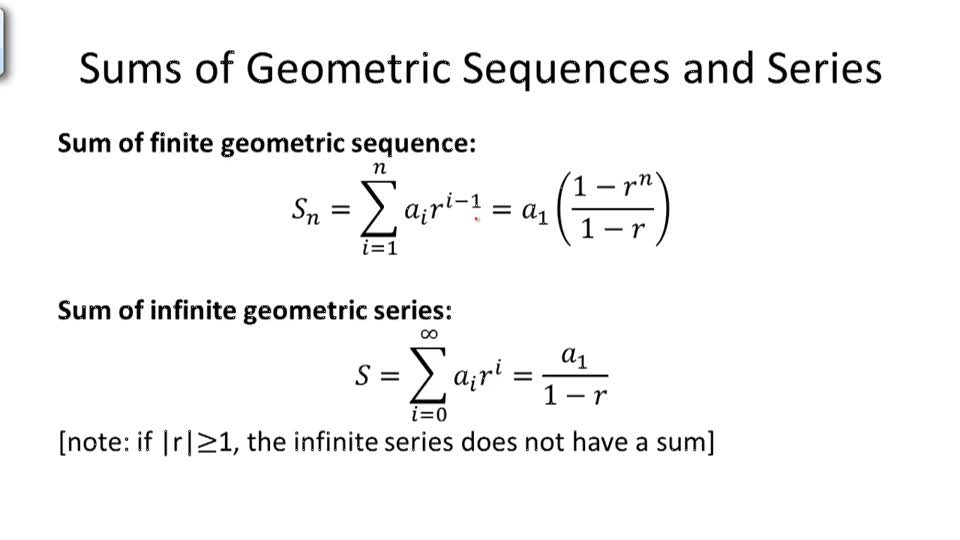

Let's assume the probability distribution of edge cases causing crashes is geometric. We know the sum total of all these probabilities is 1.

No, they can't. As you work out the far end of the curve, it's just more and more edge cases of lower and lower frequency.

Nope

Maybe I heard this wrong, but from the audio recap someone posted a link to regarding the (illegal according to neroden) investor meeting it sounded like Elon said that in fact they don't plan on using this money they just raised. Did I hear that wrong?
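Here is a small Python sketch of what the geometric assumption implies. The ratio `r` between successive edge-case frequencies is a made-up parameter, since nobody in the thread has a real number for it. If the k-th most common edge case accounts for a share (1 - r) * r**(k - 1) of crashes, then handling the n most common ones covers 1 - r**n of the total.

```python
import math

def coverage(n, r):
    """Share of crash probability covered by handling the n most
    common edge cases, with geometric weights (1 - r) * r**(k - 1)."""
    return 1.0 - r ** n

def cases_needed(target, r):
    """Smallest n such that coverage(n, r) >= target."""
    return math.ceil(math.log(1.0 - target) / math.log(r))

r = 0.5                      # hypothetical: each case is half as frequent as the last
n = cases_needed(0.99, r)
print(n, coverage(n, r))
```

Under this assumption the tail shrinks fast, so a finite number of cases gets you most of the way. Whether the real distribution looks anything like geometric is exactly what is being argued here.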

Yes. They had this stupid conspiracy theory called #CRCL - Can’t Raise; Can’t Leave - which was stoked and encouraged by reporters like Charley Grant and Russ Mitchell.

According to this theory, the SEC had sent a Wells Notice to Tesla, which they would have to disclose if they were to go for a cap raise. I don’t know about the Can’t Leave part. I can’t be bothered anyway. All these theories hit a solid wall when Elon raised cash like a Boss (or an Absolute Unit).

This is going to be the final nail in the coffin.

Subscribe to read | Financial Times

As Elon tweeted on Feb 25th, the day the FCA deal was signed - “Fate loves irony” - very apt

Link doesn't work. Instead, cut and paste this into search to get the article: "Fiat Chrysler Automobiles has said it will pay electric carmaker Tesla close to €2bn to help it meet tough new emissions targets"