Tesla AI Day August 19th: Updated and New Predictions based upon Tesla's hint published by Rob Maurer

Tesla Begins Sending Out AI Day Invitations

My 1st prediction that the FSD 4.0 chip architecture and board will be announced was correct.

Prediction #2: it's being installed in all new S/3/X on 8/19 to avoid the Osborne effect.

Tesla is working on robotics with the "Leonardo da Vinci of Robots" [1]. It should be obvious what the "secret project" is when you check out UCLA Samueli School of Engineering [*]

Professor Dennis Hong's lab webpage:

RoMeLa | The Robotics & Mechanisms Laboratory at UCLA

The lab studies: "humanoid robots and novel mobile robot locomotion strategies", including autonomous robots.

Prediction #3: One of the lab's robots is running Tesla's code on FSD 4.0 to navigate the real world!! It is funny that one of the lab's robots is called "Darwin" (after the scientist, of course, not the award winners).

Prediction #4: Attendees on 8/19 will witness at least one of the RoMeLa robots from Prof. Hong's UCLA team navigate the real world running Tesla code on FSD 4.0.

If my predictions are accurate, this will be huge!! For example, Tesla Robots will be able to do chores around the house to help seniors live independently with dignity in their homes longer without having to hire outside help. Just like our Tesla vehicles, firmware downloads will enable new features over time. A brand new Tesla product category!!

This is why Elon said during the 2021 Q2 conference call:

"long term, people will think of Tesla as much as an AI robotics company as we are a car company, or an energy company. I think we are developing one of the strongest hardware and software AI teams in the world... So a long story but I think, yeah, probably others will want to use it too and we will make it available."

For insight into what Tesla is doing with the DOJO server technology, one only has to look at what Elon tweeted on 2020-09-20:

View attachment 691907

See what Google did with their generations of TPUs (Tensor Processing Units):

View attachment 691904

Google has announced their TPUv4 but it isn't generally available to Google Cloud customers yet:

Google claims its new TPUs are 2.7 times faster than the previous generation

"This year’s MLPerf results suggest Google’s fourth-generation TPUs are nothing to scoff at. On an image classification task that involved training an algorithm (ResNet-50 v1.5) to at least 75.90% accuracy with the ImageNet data set, 256 fourth-gen TPUs finished in 1.82 minutes. That’s nearly as fast as 768 Nvidia A100 graphics cards combined with 192 AMD Epyc 7742 CPU cores (1.06 minutes) and 512 of Huawei’s AI-optimized Ascend910 chips paired with 128 Intel Xeon Platinum 8168 cores (1.56 minutes). Third-gen TPUs had the fourth-gen beat at 0.48 minutes of training, but perhaps only because 4,096 third-gen TPUs were used in tandem." [VentureBeat]

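To put the quoted MLPerf numbers on a rough per-chip footing, here is a quick back-of-the-envelope sketch. It multiplies accelerator count by training time to get "chip-minutes". That metric is my own crude assumption, not something MLPerf reports, and it ignores the host CPUs and the fact that scaling is not linear.

```python
# (accelerator count, minutes to train ResNet-50), as quoted from VentureBeat above
results = {
    "TPU v4": (256, 1.82),
    "Nvidia A100": (768, 1.06),
    "Huawei Ascend910": (512, 1.56),
    "TPU v3": (4096, 0.48),
}


def chip_minutes(chips, minutes):
    # Crude cost proxy (an assumption for illustration): chips x wall-clock minutes
    return chips * minutes


# Print systems from fewest to most chip-minutes
for name, (chips, minutes) in sorted(
    results.items(), key=lambda kv: chip_minutes(*kv[1])
):
    total = chip_minutes(chips, minutes)
    print(f"{name:18s} {chips:5d} chips x {minutes:.2f} min = {total:8.1f} chip-minutes")
```

By this rough measure the 256 fourth-gen TPUs used the fewest chip-minutes of the four entries, even though they did not post the shortest wall-clock time.
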
Prediction #5: Tesla did in fact develop the current fastest DNN (deep neural network) training supercomputer in the world, as Elon predicted a year ago. A product called something like "Tesla AI Cloud" will be announced on August 19th or some time thereafter.

If true, this is also huge for investors!! For example, $AMZN makes most of its profits from AWS [2].

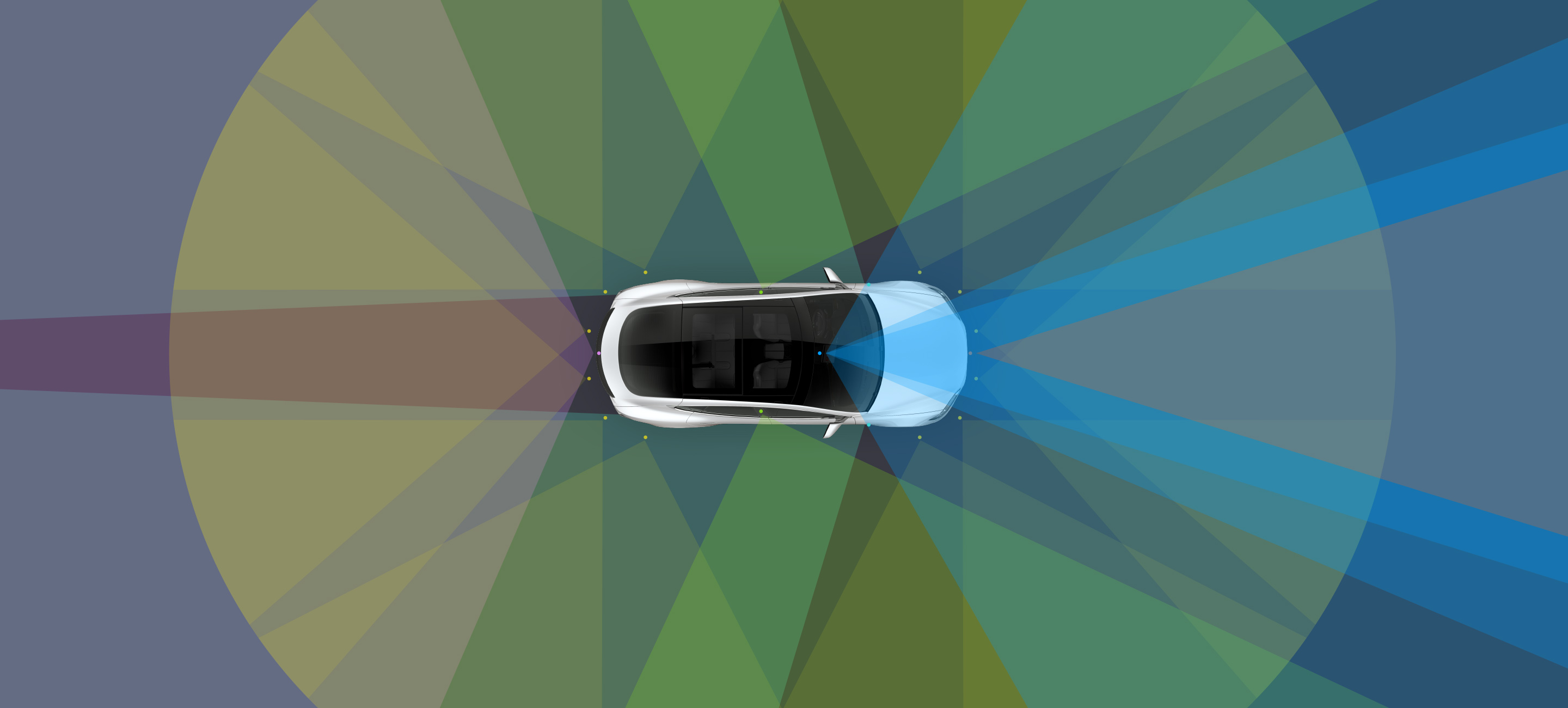

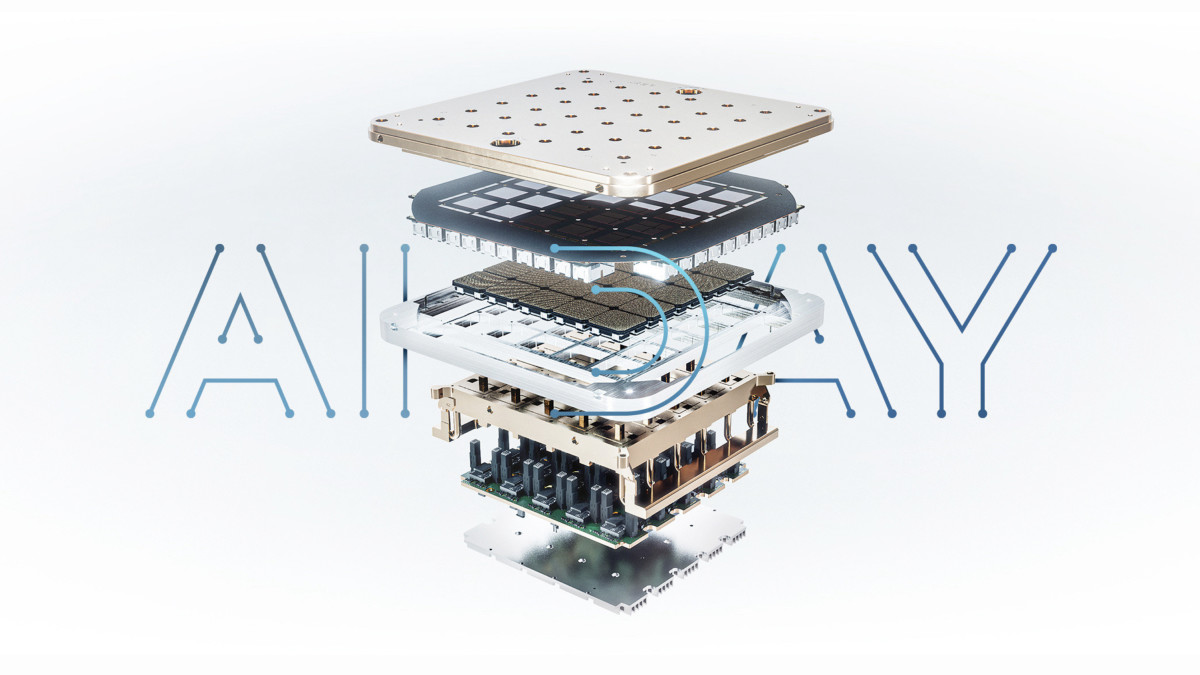

I believe $TSLA will be the first company to solve real-world, real-time visual machine navigation (auto, robot, whatever) using their DOJO supercomputers to train their DNNs (deep neural networks). The photo shows 25 chiplets per assembly. I expect the chiplets within an assembly to have the fastest interconnect bandwidth, and I expect these supercomputers to contain 1,024+ of these assemblies interconnected by some kind of super-fast bus topology. This is DNN training silicon. It is distinct from FSD 4.0, which is used for inference only, i.e. to infer what the video feeds are "seeing" in the real world.

Tesla Dojo supercomputers can be used for general-purpose machine learning (ML) training. ML training basically involves billions+ of matrix multiplications [3] during forward and backward propagation to calculate what are called "parameters" (the weights) inside a multi-layer model. The word "deep" in deep learning refers to the many layers in these models, often a hundred or more.
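
To make the "matrix multiplications with forward and backward propagation" part concrete, here is a minimal NumPy sketch of training a tiny two-layer network. Everything in it (the toy data, layer sizes, learning rate, variable names) is made up for illustration and has nothing to do with Tesla's actual code or the Dojo hardware.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumed for illustration): 64 samples, 4 features, 1 target
X = rng.normal(size=(64, 4))
y = X @ np.array([[1.0], [-2.0], [0.5], [3.0]])  # shape (64, 1)

# The "parameters" are just weight matrices, one per layer
W1 = rng.normal(scale=0.5, size=(4, 8))
W2 = rng.normal(scale=0.5, size=(8, 1))
lr = 0.01  # learning rate, chosen small so the toy run stays stable
losses = []

for step in range(300):
    # Forward propagation: two matrix multiplications with a ReLU in between
    h_pre = X @ W1
    h = np.maximum(h_pre, 0.0)
    pred = h @ W2
    err = pred - y
    losses.append(float(np.mean(err ** 2)))  # mean squared error

    # Backward propagation: chain rule, which is more matrix multiplications
    grad_pred = 2.0 * err / len(X)
    grad_W2 = h.T @ grad_pred
    grad_h = grad_pred @ W2.T
    grad_h_pre = grad_h * (h_pre > 0)  # ReLU passes gradient only where active
    grad_W1 = X.T @ grad_h_pre

    # Gradient descent update of the parameters
    W1 -= lr * grad_W1
    W2 -= lr * grad_W2

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

A real DNN does the same kind of thing with many more layers, far bigger matrices and far more data, which is why dedicated training silicon matters.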

Sophisticated Wall $treet investors have already figured this stuff out. That is what started moving $TSLA higher and broke us back up through $700.

[1]

Dr. Dennis Hong on Achieving the Impossible With Robotic Inventions

[2]

How Amazon Makes Money

[3]

A Complete Beginners Guide to Matrix Multiplication for Data Science with Python Numpy

[*] My son is an MSCS student at UCLA's Samueli School of Engineering but I don't think he's taken any classes with Prof. Dennis Hong:

View attachment 691908
