This always has me thinking. I get it that LIDAR can augment and help fill in some gaps, but how can there be a place where vision doesn't work? That would mean humans can't drive there.

Let us know when you invent an artificial human visual cortex. It's not as easy as you think.

Welcome to Tesla Motors Club

Waymo

- Thread starter Daniel in SD

Dewg

Active Member

Let us know when you invent an artificial human visual cortex. It's not as easy as you think.

Am I missing something? I was asking a serious question. Are there places where vision is impossible, therefore humans cannot drive there?

This always has me thinking. I get it that LIDAR can augment and help fill in some gaps, but how can there be a place where vision doesn't work? That would mean humans can't drive there.

I started to write up this answer and it got pretty long, sorry, but I'm posting it anyway! In the following, I am not advocating for Lidar nor for Vision only. I'm just trying to answer your question in a balanced way.

Yes, Lidar operates near (though not usually within) the human visual spectrum, so it has no significant perceptual benefit over camera vision. And in fact, since almost all modern Lidar systems are monochromatic, the Lidar really doesn't see colors or even a grayscale representation of colors. Within limits, it can see differences in IR reflection and may be able to distinguish stripes on a road or even some signs, for example, in the right conditions, but this isn't the main point of the sensor. Think of everything in the image being wrapped in a neutral flat gray shrink wrap, on a heavily overcast day. That's all you can expect, though sometimes you can get more than that. In other words, in a lot of ways it's much more limited than camera vision.

However, I think there are two very significant reasons that AV engineers are interested in it:

1. Lidar provides a three-dimensional map of rather definite and precise voxel-occupancy information. This is because it's fundamentally designed to do rangefinding. It doesn't just see there's something out there intersecting the beam, it measures the distance with good resolution and confidence. The 3D map produced requires no AI techniques to obtain its fundamental output, though I do think AI interpretation is increasingly being applied in conjunction with more conventional noise-reduction and signal conditioning to help clean up and then interpret features detected in the coordinate space.

Monocular* camera vision doesn't inherently have this rangefinding capability**. It sees a much more intricate palette of color and texture, but this requires interpretation to assign three-dimensional coordinates. This interpretation module, these days, will almost certainly be heavy on evolving AI techniques and training. Without some sort of visual interpretive intelligence based on training experience, depth information is very hard to extract. There are ways of categorizing image elements into edges and surfaces, and attempting to apply transforms and other kinds of math to infer perspective that can help with ranging, but it's very complicated and far from reliable. Others here are probably better at explaining various emerging approaches for "Vidar", but I believe this has only become practical because of very modern AI/ML/NN techniques. Again, Lidar provides this information directly without needing any fancy interpretation software.

* On and off, you see people discuss binocular stereoscopic or multiscopic techniques that one might think could be extracted from the multiple cameras with their (somewhat) overlapping fields of view. This often goes along with the assumption that normal human depth perception must be a key element of driver vision. Actually this is not really true: human depth perception at the distances important in high speed driving is extremely limited because of the relatively short triangulation baselength of the distance between our eyes. Humans get their depth understanding at greater distances much more from their intelligent knowledge of the scene and its contents, and from the changing perspective cues as they and/or other cars are in motion. This doesn't mean that absolutely no beneficial information can be extracted from overlapping multiple camera views, but I think it just isn't a very significant first or second order benefit in machine vision for driving.

** Another sometimes-discussed aspect is that people know something about the technology of autofocus for digital still cameras and video cameras, and so one might naturally assume that some of those associated rangefinding techniques would be applicable to the video cameras on the car. However, again this does not really translate well to the AV situation. The cameras on Teslas, and I believe most AV cars, don't have any focus-modulation hardware, not needing it because the inherent depth of field is quite large for the small-sensor and limited-resolution video cameras on the car. Both of the much-debated "contrast detect" and "phase detect" autofocus methods provide very poor selectivity when the depth of field is large and so everything in the scene is more or less in focus already - especially anything that is many car lengths away. Another way of looking at this is that the triangulation baselength of an image-sensor-based camera autofocus system is no greater than the diameter of the lens's aperture - a tiny and fairly useless dimension for the cameras we're talking about.
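To put rough numbers on the baselength point in the two footnotes above, here is a minimal sketch using the standard two-view triangulation relation Z = f * B / d and its first-order error dZ ~= Z^2 * dd / (f * B). The focal length, the matching error and the three baselines are assumed, illustrative values, not measurements from any real eye or car camera.

```python
def stereo_depth_error(distance_m, baseline_m, focal_px, disparity_err_px):
    """Approximate depth uncertainty for a two-view triangulation.

    From Z = f * B / d, a small disparity error dd gives
    dZ ~= Z**2 * dd / (f * B) (first-order approximation).
    """
    return distance_m ** 2 * disparity_err_px / (focal_px * baseline_m)


# Illustrative, assumed numbers (not taken from any real system):
FOCAL_PX = 1000.0         # focal length expressed in pixels
DISPARITY_ERR_PX = 0.25   # matching error between the two views

BASELINES_M = {
    "lens aperture (~5 mm)": 0.005,
    "human eyes (~65 mm)": 0.065,
    "two cameras 1 m apart": 1.0,
}

for label, baseline in BASELINES_M.items():
    for distance in (5.0, 50.0, 100.0):
        err = stereo_depth_error(distance, baseline, FOCAL_PX, DISPARITY_ERR_PX)
        print(f"{label:24s} at {distance:5.0f} m: +/- {err:8.2f} m")
```

The error grows with the square of the distance and shrinks in proportion to the baseline, which is why a short baseline can be fine at parking distances and close to useless many car lengths away. When the computed error is comparable to the distance itself, the first-order formula is no longer accurate and simply signals "no usable range".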

2. Lidar is an active-emitting, i.e. self-illuminating, system. It can see at night as well as, or even better than, in the day. It is only looking for the return of its very specific laser wavelength and can largely reject everything else coming back. However, direct sun or extremely strong sun reflections are still somewhat of a problem, and there are other possible sources of interference if and when many other cars become Lidar equipped. I wouldn't focus too much on these points because there are various ways to mitigate them and the technology continues to evolve and improve.

What may not improve too much, however, is that Lidar imaging is quite vulnerable to poor-weather interference. Although the wavelength of the laser is at or just beyond the long end of the visual spectrum, it's still nowhere near as long as conventional or new HD Radar. So it does a much poorer job of seeing through raindrops, snow, dust etc. I don't know the latest signal processing advances, but my impression is that wide-spectrum camera vision has a better chance in a rainstorm than does Lidar. (Neither one is too good if you throw mud on the sensor and don't clean it off.)

So I'll try to make a TLDR summary:

- Lidar provides a 3D there/not-there coordinate map of space occupancy that doesn't fundamentally depend on any evolving AI smarts. This is fundamentally a better capability than processed camera-vision image capture. For an engineer just starting to work on the self-driving problem, as well as for engineers who are tasked with providing the highest possible safety factors, this reliable coordinate map seems, at least initially, an almost irresistible benefit.

- But camera-vision engineers are having good success creating similar "Vidar" 3D maps by applying advanced computation including AI techniques to camera vision.

- Lidar provides its own illumination and is relatively good at rejecting interference from external illumination sources. Night vision is not an issue for lidar.

- Foul weather is a problem for Lidar, perhaps even more so than for camera vision.
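As a rough illustration of the first summary point, here is a minimal sketch of how raw Lidar returns could become a there/not-there occupancy map with nothing more than geometry. It assumes an idealized time-of-flight sensor that reports a round-trip time plus two beam angles per return; the function names, the voxel size and the sample returns are all made up for the example, and real units add calibration, noise rejection and motion compensation on top.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(seconds):
    """Time of flight: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * seconds / 2.0


def to_xyz(range_m, azimuth_rad, elevation_rad):
    """Convert one return from spherical beam angles to sensor-frame x, y, z."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


def occupied_voxels(returns, voxel_size_m=0.5):
    """Map (round_trip_s, azimuth, elevation) returns to a set of voxel indices."""
    voxels = set()
    for round_trip_s, azimuth, elevation in returns:
        x, y, z = to_xyz(range_from_round_trip(round_trip_s), azimuth, elevation)
        voxels.add((math.floor(x / voxel_size_m),
                    math.floor(y / voxel_size_m),
                    math.floor(z / voxel_size_m)))
    return voxels


# Two made-up returns: one straight ahead, one 90 degrees to the left.
returns = [(2.0e-7, 0.0, 0.0), (1.0e-7, math.pi / 2, 0.0)]
print(sorted(occupied_voxels(returns)))
```

Nothing in this pipeline is learned or trained, which is the sense in which the occupancy map doesn't depend on AI smarts. Deciding what the occupied voxels actually are is a separate step.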

Am I missing something? I was asking a serious question. Are there places where vision is impossible, therefore humans cannot drive there?

I thought I gave a serious answer. Nobody has figured out how to make a machine vision system that can match the performance of a human.

I can’t think of a situation where human vision is truly impossible to use. You can always creep along at 1mph. A car with synthetic aperture radar could probably safely drive at higher speeds than a human in dense fog or smoke. I doubt that is something that AV companies are working on though, they’re still trying to get something that’s safe enough to work in normal environments.

Am I missing something? I was asking a serious question. Are there places where vision is impossible, therefore humans cannot drive there?

As I've written before, I think it's a myth that a car with better-than-human vision in foul weather can actually drive in foul weather. As long as there are other cars and drivers around who are not so equipped, it makes relatively little difference that the car can see. It simply cannot mingle with other traffic that cannot see.

In a future with only self-driving cars, that all have weatherproof vision, this would change. Then we'd have to start talking about whether the car can prevent itself from being picked up in a tornado or whether it can withstand a hurricane driving a 2x4 into the windshield! The challenges never end.

Dan D.

Desperately Seeking Sapience

Am I missing something? I was asking a serious question. Are there places where vision is impossible, therefore humans cannot drive there?

Didn't you like any of my suggestions @Dewg? I thought you might be looking for places where vision is impossible but radar/LIDAR may be possible.

https://teslamotorsclub.com/tmc/posts/6990013/

If you're just looking for places & situations where vision is impossible*: a blinding white-out. In the middle of a thicket of bushes. Major rain, mud, hail. Someone throws a tarp over the car. In the middle of a color-run, smoke/forest-fire/road fire. Hemmed in by a herd of bison, mass riot. Dirty cameras.

*unsafe at least

Ozdw

Member

I'm trying to think of places where vision systems might not work - or might not work well enough by themselves to be "safe". Bear with me.

Super amounts of glare blinding the car from the side/rear. Can still see ahead for traffic lights, but no safe view of approaching traffic from the side/rear.

I'm not convinced that non-radar, non-LIDAR cars can safely reverse down darkened areas. Sometimes even we get out of the car and look around first, maybe with a flashlight, to get out of that really dark parking lot, dark cluttered alley, or narrow & overgrown country lane. Vision cars have no provided light on the sides and very little at the rear; I presume we're not talking about night-vision cars.

How about detecting the potholes at night, determining where the walls are in an unlighted street tunnel, or seeing the wire strung across the road from a downed power pole? Not sure if vision/radar/LIDAR would detect any of these, but there's a chance one of them would.

Also, maybe it does mean humans can't drive in a particular location because it's vision-challenging, but there's no reason a good autonomous car can't. Might be a good use-case for it: pitch-black road and the headlights failed/turned off. Could be some rescue or military applications too.

Maybe there are better examples. Has anyone found a place where FSD fails to see?

I don't know if the Tesla cameras filter all infrared light, but if not, that would certainly help night vision. Not a panacea, though.

diplomat33

Average guy who loves autonomous vehicles

This always has me thinking. I get it that LIDAR can augment and help fill in some gaps, but how can there be a place where vision doesn't work? That would mean humans can't drive there.

Camera vision has some limitations. Specifically, camera vision is less reliable in darkness, bright light, blindness, same color background and measuring distances:

However, cameras have a number of limitations. These include performance in the dark and in very bright light, and the transition between the two (such as when a vehicle emerges from a dark tunnel on a bright sunny day); their performance when confronted with reflections; their inability to detect objects of a particular color against the same colored background; and crucially, they cannot measure depth directly and instead must infer it, often with much poorer range accuracy than lidar or radar.

Source: What Is Lidar? 10 Things You Need to Know About Laser Sensor Technology

The other issue is that camera vision does not have 100% recall which is a problem if you want very high reliability. Currently, the only sensor with 100% recall is lidar:

I think Elon's view is that they can overcome these limitations with better machine learning and AI. Or at least, he believes vision-only can be "good enough" with enough data and machine learning. Mobileye takes the view that vision-only is good enough for L2 and they will add radar and lidar redundancy to do L4. AV companies like Waymo who are focused on L4, combine camera vision with radar and lidar in order to achieve a combined reliability higher than vision-only.
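For what "combined reliability" could mean in numbers, here is a minimal sketch. It assumes each sensor misses an object independently of the others, which is an optimistic assumption (fog or a mud-covered housing can degrade several sensors at once), and the per-sensor recall figures are hypothetical values picked only for illustration.

```python
from math import prod


def combined_recall(recalls):
    """Recall of 'at least one sensor detects it', assuming independent misses."""
    miss_all = prod(1.0 - r for r in recalls)
    return 1.0 - miss_all


# Hypothetical per-sensor recall values, chosen only for illustration.
sensors = {"camera": 0.99, "radar": 0.95, "lidar": 0.999}

print(f"camera only:        {combined_recall([sensors['camera']]):.6f}")
print(f"camera+radar:       {combined_recall([sensors['camera'], sensors['radar']]):.6f}")
print(f"camera+radar+lidar: {combined_recall(sensors.values()):.8f}")
```

Under the independence assumption the miss rates multiply, so every extra modality cuts the combined miss rate by a large factor. With correlated failures the real gain is smaller, so treat the output as a best case.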

Thanks for asking the question. I hope this helps.

Dewg

Active Member

Thanks guys. I'm learning more about how these sensors work. How can LIDAR know exactly what everything is without a database, where vision systems require a database? If lidar sees a mailbox, it just knows it's a mailbox? Or does it just know there is a solid object in some shape and therefore should avoid it?

willow_hiller

Well-Known Member

How can LIDAR know exactly what everything is without a database, where vision systems require a database?

I think it's a strawman argument to say that vision-based systems rely on recognizing an object that has previously been seen by the training data. Yes, when you're doing basic 2D image segmentation, CV won't be able to recognize novel objects in the scene, but Tesla isn't using 2D image segmentation for obstacle detection anymore.

Here's Ashok Elluswamy's latest presentation from CVPR, timestamped to when he discusses the voxel occupancy network trained on surround video:

And at 16:44 he explicitly covers the topic of the occupancy network detecting obstacles when it doesn't know what they are:

Obviously if you can get "Vidar" to be as good as lidar then lidar is useless. The only question is if that will happen soon.


Please stop. Everything you wrote in that sentence is wrong. Lidar does work without HD maps. Lidar is an active sensor that detects objects and features around the car. It does not need HD maps. Tesla never used lidar with vision. If you use lidar, you don't drop vision. At a minimum, you still need vision to detect traffic light colors. And if you use vision, lidar is not redundant since lidar works in some conditions where vision does not. AVs use both lidar and vision. One does not make the other redundant.

You said everything I wrote was wrong then proceeded to repeat exactly what I said.

diplomat33

Average guy who loves autonomous vehicles

You said everything I wrote was wrong then proceeded to repeat exactly what I said.

No. I am not repeating everything you wrote. Let me break it down for you.

You wrote:

Lidar doesn't work without HD maps because it cannot recognize objects or read signs.

This is wrong. Lidar uses lasers to detect objects. It does not need HD maps to work. And lidar does recognize objects. Back in the DARPA challenge days, they could navigate an obstacle course with just lidar, no HD maps.

Tesla used lidar with vision

This is wrong. Tesla used radar at one point. Tesla never used lidar.

but vision makes lidar redundant.

This is wrong. Vision has some limitations that lidar helps with. Vision is less reliable than lidar at measuring distances. So lidar is not redundant.

If you're going to use lidar just drop vision.

Now you are saying that you don't need vision if you have lidar. This is also wrong. You cannot drop vision. You always need vision.

Bottom line is that AV companies use both vision and lidar together. They don't use just one or the other.

diplomat33

Average guy who loves autonomous vehicles

Waymo starting public road testing of their autonomous trucks:

Thanks guys. I'm learning more about how these sensors work. How can LIDAR know exactly what everything is without a database, where vision systems require a database? If lidar sees a mailbox, it just knows it's a mailbox? Or does it just know there is a solid object in some shape and therefore should avoid it?

Maybe the confusion here is what it means to "know exactly what everything is". As a sensor, Lidar knows nothing about what anything is; it knows only where there is something occupying space, and only because that something reflects a bit of the laser beam to the Lidar unit.

And if you take a step back and think about the Vision camera, even though it "sees" the world more like we do, it also doesn't know anything about what it's looking at. As a sensor, it only produces a dumb image capture.

The camera produces a 2D array of colorful pixels.

The Lidar produces a 3D array of colorless voxels.

In both cases, it's entirely up to a downstream processing computer to assign any kind of intelligent meaning to these raw 2D or 3D arrays, in your words to turn it into a database of recognized objects. Such intelligent interpretation is the crux of the AI technology advancements that are making it possible to contemplate successful self-driving, or general purpose robots, or nefarious Big-Brother government surveillance.

However - and this is a key point - between the two, it's only the Lidar that can produce an obviously useful output without such advanced AI interpretation processing. It doesn't necessarily need to know what is there, only that something you probably don't want to drive into is there. Because the raw output of Lidar tells you, directly.

Whereas the camera output, being just a 2D image capture with no 3D depth info, requires a quite sophisticated interpretation engine to build the kind of database you're talking about. It has to do that, to know how to associate each pixel to an object existing at some determined distance from the camera, to then know whether said object is in your drivable space or not, and if so exactly where and how big it is.
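To make the "tells you, directly" point concrete, here is a minimal sketch of an obstacle check that works on raw 3D points and never asks what the object is. The corridor dimensions, the height band and the sample points are assumed values for illustration; a real planner would use the vehicle's predicted path rather than a straight box.

```python
def obstacle_in_corridor(points, half_width_m=1.2, max_range_m=40.0,
                         min_height_m=0.2, max_height_m=2.0):
    """Return the nearest forward distance to any point inside the corridor, or None.

    Points are (x, y, z) in metres in a vehicle frame: x forward, y left,
    z up from the road surface. No classification is attempted.
    """
    nearest = None
    for x, y, z in points:
        in_corridor = (0.0 < x <= max_range_m
                       and abs(y) <= half_width_m
                       and min_height_m <= z <= max_height_m)
        if in_corridor and (nearest is None or x < nearest):
            nearest = x
    return nearest


cloud = [
    (12.0, 0.3, 0.8),    # something unidentified in our lane
    (25.0, 4.0, 1.0),    # off to the side, outside the corridor
    (8.0, 0.0, 0.02),    # road surface return, below the height band
]
print(obstacle_in_corridor(cloud))
```

The same check cannot be run on a camera frame as-is, because a pixel carries no x, y, z. Something upstream has to estimate depth first, and that is the interpretation step described above.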

So in summary, to be used for correct driving decisions, the camera vision approach is completely dependent on relatively recent, complicated and still evolving AI technology. One can argue that the AI is already there, or really really close already and will be there very soon - personally I think it is getting close - but we can't forget that it does depend on the AI Machine Vision tech.

Whereas the Lidar, in conjunction with detailed maps (and then some relatively non-intelligent video for traffic light state etc) could arguably be the backbone of a self-driving car even if the last 10 years of AI developments had never happened. I'm not saying Waymo and others aren't top-notch and using AI very extensively, I'm just saying the Lidar gives them this easy and reliable "ground truth" capability even without the AI, that camera vision does not.

Doggydogworld

Active Member

Waymo starting public road testing of their autonomous trucks:

I'm 99% certain these have a safety driver, just like the trucks they've been running between Dallas and Houston the past two years. The 'big news' is these trucks have super-redundant control systems which Waymo feels is required so they can eventually pull the safety drivers out.

diplomat33

Average guy who loves autonomous vehicles

I'm 99% certain these have a safety driver, just like the trucks they've been running between Dallas and Houston the past two years. The 'big news' is these trucks have super-redundant control systems which Waymo feels is required so they can eventually pull the safety drivers out.

That is correct. Waymo always starts with safety drivers before removing them.

@diplomat33 has explained sufficiently but I have a different take.

Sensors are just raw output of data, it does not "detect" or read things. Camera sensors do not detect or read thing as it is a passive sensor. Lidar does detect things because it is an active sensor and it bounces laser off things, and it detects the return. So, it truly detects where things are in 3d space. It cannot tell you what that thing is, but you know with 100% certainty that it is there. A camera sensor cannot tell you where anything is.

To make sense of these raw sensor output, you employ sophisticated algorithms to filter out the useful information you need. That is where classical computer vison and machine learning comes in play, this applies to Radar, Lidar and Camera output. I will use Waymo for example.

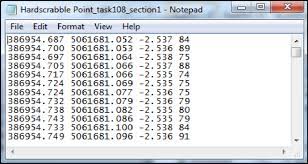

This is a semi raw lidar output from Waymo. You can clearly see the environment and how detailed the lidar return is. You can even see lane lines because lane lines use reflective paint so you can be able to see it when driving at night with your eyes.

If you apply a sophisticated algorithm to this lidar data, you can be able to filter out objects you don't need like buildings and the ground plane. You can track and segment bicyclists, cars, pedestrians, etc, in 3d space with perfect recall. That is something a camera sensor cannot provide without applying an even more compute heavy algorithm.

You can even do things like track when a car door is open, and yes you can do that with a camera and imaging radar as well but again a Lidar provides perfect recall compared to camera. With sensor fusion you have multiple sensors feeding you complimentary information by which you can navigate "safely".

You are "right" in a way; you cannot use Lidar alone to drive our complex road systems "effectively", but you can use Lidar alone to navigate just like you can use camera alone to navigate. But then again nobody is trying to use Lidar "alone". I put alone in quote because Mobileye intends their system to be true redundant system whereby the car should be able to still navigate safely if all the vision and radar systems fail at the same time leaving only Lidar. But that is a truly catastrophic failure point, and the car needs to pull over safely using just Lidar.Lidar doesn't work without HD maps because it cannot recognize objects or read signs.
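As a toy version of the "filter out the ground plane, then segment objects" step mentioned above, here is a minimal sketch. It assumes a flat road at a known height and groups the remaining points by touching grid cells; production stacks use far more robust methods (plane fitting, learned segmentation), and nothing here reflects Waymo's actual pipeline. The thresholds and sample points are made up.

```python
from collections import deque


def remove_ground(points, ground_z_m=0.15):
    """Drop returns at or below an assumed flat road height."""
    return [p for p in points if p[2] > ground_z_m]


def cluster_by_grid(points, cell_m=0.5):
    """Group points whose occupied grid cells touch (8-neighbourhood in x, y)."""
    cells = {}
    for p in points:
        key = (int(p[0] // cell_m), int(p[1] // cell_m))
        cells.setdefault(key, []).append(p)

    clusters, seen = [], set()
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:
            cx, cy = queue.popleft()
            members.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbour = (cx + dx, cy + dy)
                    if neighbour in cells and neighbour not in seen:
                        seen.add(neighbour)
                        queue.append(neighbour)
        clusters.append(members)
    return clusters


cloud = [
    (10.0, 0.0, 0.02), (10.5, 0.2, 0.03),    # road surface
    (12.0, 1.0, 0.5), (12.2, 1.1, 1.0),      # one object
    (20.0, -3.0, 0.8), (20.3, -3.2, 1.2),    # another object
]
objects = cluster_by_grid(remove_ground(cloud))
print(len(objects), "clusters")
```

Each cluster is just "a separate thing at this location". Labelling it as a cyclist or a car door is a further classification step on top.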

Tesla used lidar with vision but vision makes lidar redundant.

Tesla has never used Lidar on its production cars. They use Lidar to train and validate their vision network, and use "Vidar", which is Pseudo-Lidar. You see the irony? They use false Lidar because a point cloud is just better for 3D world representation, but they are just too cheap to use actual lidar. Everyone is doing that too; well, I can't say everyone, as not everyone is as open with their internal research. But Mobileye and Waymo use Vidar as well. Everyone is working towards reducing the number of sensors needed to achieve safe, reliable autonomous driving, but some recognize that to do so now you require multimodal sensors to complement each other.

If you're going to use lidar just drop vision.

Why? The argument has never been Camera vs Lidar; it has always been Camera, Lidar, Radar vs camera only. There is no argument that has been made other than that Lidar is too expensive and machine learning can hopefully someday solve the problem with camera sensors. But in the meantime, the only truly autonomous vehicles on the road use multiple sensors to achieve it safely.

Great post by everyone, to round it off.

This is what a computer sees from camera (for example: RGB, etc).

Lidar is similar (XYZ, IR, etc)

All are tossed into big ML models for classification.

I think it's a strawman argument to say that vision-based systems rely on recognizing an object that has previously been seen by the training data. Yes, when you're doing basic 2D image segmentation, CV won't be able to recognize novel objects in the scene, but Tesla isn't using 2D image segmentation for obstacle detection anymore.

Here's Ashok Elluswamy's latest presentation from CVPR, timestamped to when he discusses the voxel occupancy network trained on surround video:

And at 16:44 he explicitly covers the topic of the occupancy network detecting obstacles when it doesn't know what they are:

That's actually not true. The voxel occupancy network, like all networks, still has to have a similar representation of what it sees in its database to detect it. That's why it still doesn't have a 100% recall rate. Also, a lot of the cons he listed for drivable space detection, segs and the Depth Network, about them being in UV space rather than, say, World Space, are due to the fact that they don't localize all the time to an HD map.

If you localize to an HD map, you know the world space location of all your detections.
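A minimal sketch of what localizing buys you: once the vehicle's pose in the map frame is known, a detection expressed relative to the car can be moved into world coordinates with one rotation and one translation. The pose and detection values are made up, and this 2D version assumes the detection already has a metric position in the vehicle frame (getting from image UV coordinates to that point still needs depth).

```python
import math


def vehicle_to_world(detection_xy, vehicle_pose):
    """Rotate and translate a vehicle-frame point into the map (world) frame.

    vehicle_pose is (x, y, heading_rad) of the vehicle in the map frame,
    with heading measured counter-clockwise from the map's x axis.
    """
    dx, dy = detection_xy
    px, py, heading = vehicle_pose
    wx = px + dx * math.cos(heading) - dy * math.sin(heading)
    wy = py + dx * math.sin(heading) + dy * math.cos(heading)
    return wx, wy


# Made-up pose: vehicle at (100, 50) in the map, facing along +y (90 degrees).
pose = (100.0, 50.0, math.pi / 2)
# Detection 10 m ahead and 2 m to the left in the vehicle frame.
print(vehicle_to_world((10.0, 2.0), pose))
```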

willow_hiller

Well-Known Member

The voxel occupancy network, like all networks, still has to have a similar representation of what it sees in its database to detect it.

You're assuming the occupancy network is trained like 2D segmentation, when we know that's not the case. It's trained on multiple, overlapping views with a defined time dimension. If what the network is ultimately detecting is 3D volume, it doesn't need to have a similar representation of an object in the training data to know that a 3D volume is perceived in a particular way via bi/trinocular views over multiple successive frames.

E.g. a garbage bag blowing across the street will be perceived by the occupancy network as a 3D volume because of the parallax in the multiple camera views telling it that those pixels are closer to the vehicle than the background, and how that parallax changes as the bag itself moves and the vehicle camera views move toward it.
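A back-of-envelope sketch of the parallax cue in the garbage bag example, using the textbook relation disparity = f * B / Z. The focal length, the spacing between the two views and the distances are assumed numbers, and this is only the geometric signal available to such a network, not a description of how Tesla's occupancy network is built.

```python
def disparity_px(depth_m, baseline_m, focal_px):
    """Pixel shift of a point between two views separated by baseline_m."""
    return focal_px * baseline_m / depth_m


FOCAL_PX = 1000.0    # assumed focal length in pixels
BASELINE_M = 0.3     # assumed spacing between two overlapping views

bag = disparity_px(8.0, BASELINE_M, FOCAL_PX)          # object 8 m away
background = disparity_px(60.0, BASELINE_M, FOCAL_PX)  # wall 60 m away
print(f"bag: {bag:.1f} px, background: {background:.1f} px, "
      f"difference: {bag - background:.1f} px")
```

The nearby object shifts far more between views than the background does, whatever the object happens to be. Successive frames from a moving car act like additional views, so the same cue is available over time as well.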
