FSD can't become even more expensive if there is any hope of it addressing its real intent, which is to reduce traffic accidents and fatalities.

I've seen many people bring up the doomsday statistics in support of FSD: 1.3 million traffic-related deaths globally, so we need to take driving out of the hands of humans to solve this!

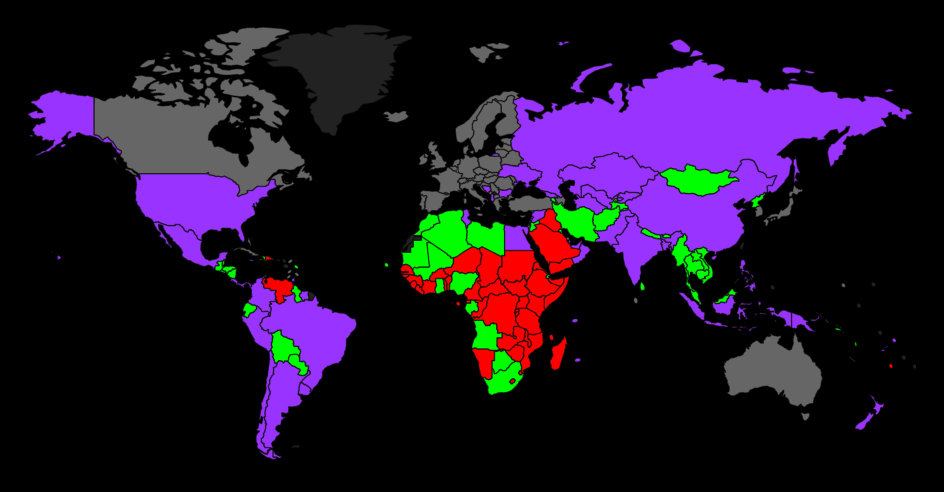

The reality is that the vast majority of those fatalities are happening in countries that are far less wealthy and less developed. The stats are publicly available:

Interactive Charts and Maps that Rank Road traffic accidents as a Cause of Death for every country in the World.

www.worldlifeexpectancy.com

The average vehicle on the road in these places might already be worth less than the $10k FSD price tag or the cumulative cost of the subscription. No, we don't alleviate global traffic fatalities by putting this further out of reach of the people in the places where most of the accidents are happening. Either this remains ultra-niche technology available only to the wealthy, and thus has negligible impact on global traffic fatalities, or autonomous vehicle tech needs to become much, much cheaper.