I am a firm believer that Tesla will need to add more sensors at some point, including lidar, in order to achieve safe autonomous driving where driver supervision can be removed. But Tesla does seem to change hardware incrementally and only on a strictly as-needed basis.

So, I suspect that Tesla will wait to see how AP3 is working once "feature complete" is released to the public. Tesla will study what areas are still lacking in the hardware or software and make the bare minimum of changes to address the issues.

I could see the Model Y having "AP3.5" that just has better resolution cameras or something minor, not a fundamental change to the sensor layout. But I think 2-3 years from now, we will probably see "AP4" with the new AP4 chip and (I hope) additional sensors like lidar.

From my observation, I think that Tesla will need to add some cameras at the very front of the car, maybe integrated into the high beam location, so the car would be able to see perpendicularly to the front right and the front left for incoming pedestrians or cars at a 'T' intersection, or when exiting a garage and trying to merge with the traffic.

Currently FSD relies on the cameras located at the top of the windshield, which is about where the eyes of a driver would be when leaning the head a little bit forward over the steering wheel to look left and right.

But even so, it is often difficult to see the incoming traffic when I try to exit from my driveway, because the side view is obstructed when there is a car parked very close to the driveway exit. In this case, I need to look through the windshield of the parked car to see the incoming traffic without having to move the front of my car into the street. But try teaching FSD to look through a parked car's windshield. That trick would not be possible anyway if the other car has a sunshade windshield cover.
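To put rough numbers on the driveway case, here is a minimal sketch of the sight-line geometry. Everything in it is my own assumption for illustration: the function name `visible_distance`, the flat 2D layout (curb at y = 0, street in the +y direction), and the example dimensions. It only models the parked car as a single blocking corner, so treat the output as a ballpark, not a measurement.

```python
import math

def visible_distance(camera_y, gap_x, parked_width, lane_y):
    """How far along the traffic lane a camera can see past a parked car.

    Hypothetical 2D model (all values in meters, all assumed):
      camera_y     - camera position relative to the curb line
                     (negative = still behind the curb, positive = in the street)
      gap_x        - sideways distance from the camera to the parked car's
                     nearest street-side corner
      parked_width - how far the parked car sticks out from the curb
      lane_y       - distance from the curb to the line the traffic travels on
    """
    if camera_y >= parked_width:
        # The camera is already past the parked car: nothing blocks the view.
        return math.inf
    # Similar triangles: extend the line from the camera through the
    # blocking corner until it reaches the traffic lane.
    return gap_x * (lane_y - camera_y) / (parked_width - camera_y)

# Assumed example: the nose of the car is 1.5 m past the curb,
# the windshield camera sits about 2 m behind the nose,
# and a front-mounted camera sits right at the nose.
nose_y = 1.5
for name, setback in [("windshield camera", 2.0), ("front camera", 0.0)]:
    d = visible_distance(nose_y - setback, gap_x=1.5, parked_width=2.0, lane_y=3.5)
    print(f"{name}: sees about {d:.1f} m down the lane")
```

With these made-up dimensions, the front-mounted camera sees noticeably farther down the lane than the windshield camera for the same position of the car, which is the whole point of moving the cameras forward.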

Also, I sometimes put myself in a difficult situation by noticing only at the very last moment one of those electric bicycles, which ride very fast and are difficult to notice.

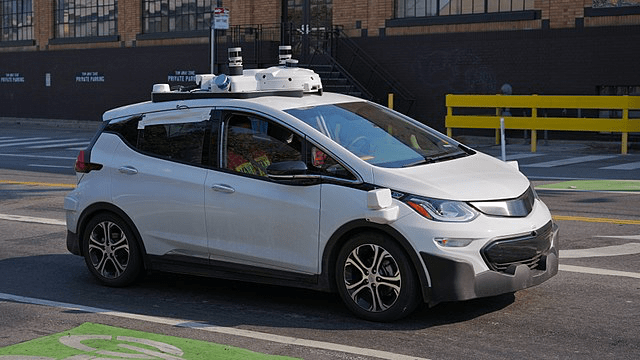

Note: In the following picture, you can notice the sensors located just above the front axle and on each side of this autonomous car, which is where additional cameras would be needed to provide peripheral vision.