Different risk tolerance among a broad spectrum of drivers. Tesla's Autopilot is a transitional technology that is lacking in the area of collision avoidance.

Funny, what "truth"?

This isn't "Model X-Files"

The system is pretty easy to understand: it follows lines. When the lines are badly maintained, a driver who is paying normal attention will handle it fine.

Driver not paying attention at all = bad accident.

I'm not sure what people expect will come out of this beyond "conclusion: driver not paying attention."

That's the only truth out there.


Model X Crash on US-101 (Mountain View, CA)

- Thread starter mookhead


What Tesla did was nothing like a release of raw data. The raw data is likely a huge number of measurements (maybe even camera/radar images) recorded at high frequency. That raw data would be a huge data file, not a three-paragraph statement to the press.

What Tesla released was (at best) a set of cherry-picked interpretations of the data (i.e., "the driver would have had ___ seconds to react" [without information about what assumptions/data underlie that statement or whether it takes into account things like sun glare] and "the Tesla gave __ warnings" [without information about when those warnings occurred]) and some conclusions about blame/cause.

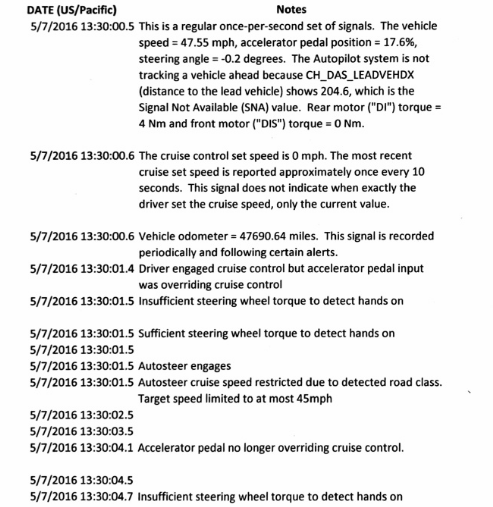

The logs are pretty simple. They are timestamped events. It's a simple exercise to convert the event codes to plain English. Tesla did that in the Florida crash. It looks like this, with the codes translated into plain English and explanations added at the beginning:

see my prior post: Fatal autopilot crash, NHTSA investigating...

Also see reports from NTSB, NHTSA and FHP

https://www.ntsb.gov/investigations/AccidentReports/Reports/HAR1702.pdf

https://static.nhtsa.gov/odi/inv/2016/INCLA-PE16007-7876.PDF

Florida Highway Patrol releases full investigation into fatal Tesla crash: read it here

What Tesla did was nothing like a release of raw data. The raw data is likely a huge number of measurements (maybe even camera/radar images) recorded at high frequency. That raw data would be a huge data file, not a three-paragraph statement to the press.

What Tesla released was (at best) a set of cherry-picked interpretations of the data (i.e., "the driver would have had ___ seconds to react" [without information about what assumptions/data underlie that statement or whether it takes into account things like sun glare] and "the Tesla gave __ warnings" [without information about when those warnings occurred]) and some conclusions about blame/cause.

Are we talking about different releases? The initial one (the blog post) was very low level:

In the moments before the collision, which occurred at 9:27 a.m. on Friday, March 23rd, Autopilot was engaged with the adaptive cruise control follow-distance set to minimum. The driver had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision. The driver had about five seconds and 150 meters of unobstructed view of the concrete divider with the crushed crash attenuator, but the vehicle logs show that no action was taken.

Not seeing any talk of driver reaction time, only the conditions recorded.

chibi_kurochan

Member

Thanks for the great summary! Completely agree. I was following the first week of this thread; the rest is everyone’s own opinion/ideas.

It's not a singular topic.

At first it was about the road, and how factors of the road played into accidents that happen routinely there. Then there was an even more important find that the crash barrier wasn't reset, and it's extremely likely that this fatality wouldn't have happened had it been reset.

Once it was discovered that AP was involved, people took their respective corners based on their own biases and world views.

Which is expected, but there is no way of knowing what went on in that car that day. There isn't a whole lot to go off of or explanations as to why the driver did what he did. The entire justification for AP is in the fact that we can predict with pretty good accuracy what went wrong.

With a human we don't have that.

With humans truth is often stranger than fiction. It is because fiction is the story we make up to comfort other people or to comfort ourselves. If we didn't have this fiction we'd all be massively OCD. Or we'd be wrapped in bubble wrap, and too scared to actually live life.

Mix the unpredictability of humans with the unpredictability of life, and things happen that are completely unexplained.

Sometimes it's something tremendously great, and sometimes it's awful.

Society as a whole doesn't like that, though, especially when it comes to people dying before their time.

We can all battle it out based on what we think happened, and what we think could reduce the chances of it.

But at the end of the day, the grim reaper was just getting his quota in and found a way to do it. Probably some weird set of circumstances/happenstances caused it. Or maybe he just glitched and did something he shouldn't have done, like texting, and it was horrible timing. All of us have glitched at some point and done something we knew was stupid.

The vast majority of us have also experienced weird situations where it is really tough to explain everything that had to come together for the event to unfold the way it did, where we didn't realize what was happening until too late, and where even on our best days we couldn't have prevented it.

Earlier today a 16-year-old kid suffocated in his car because somehow he got trapped in some rear folding passenger seat. How he ended up in that circumstance is anyone's guess.

The really troubling thing about the whole thing wasn't the event itself, but that the technology that would have saved him wasn't in place in his phone (an iPhone). But I think it will be soonish. He used Siri to call for help, but the police couldn't find him. The iPhone didn't have any technology to allow a 911 operator to find the caller quickly.

In some other forum somewhere there are likely the same kinds of arguments, where someone is talking about suing the vehicle manufacturer and someone else is saying to sue Apple.

I very much relate to your OCD comment

Economite

Member

Not seeing any talk of driver reaction time, only the conditions recorded.

"The driver had about five seconds and 150 meters of unobstructed view of the concrete divider with the crushed crash attenuator."

'Unobstructed view' is a conclusion.

"The driver had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision."

This says nothing about when the warnings were given, except that they were not during the incident in question, and it fails to mention that hands-on warnings are not only fairly common in normal use of AP, but can also occur when the driver's hands are on the wheel but just not triggering the sensors.

"the adaptive cruise control follow-distance set to minimum"

This is a cherry-picked detail that really has nothing to do with the cause of the accident. Follow-distance is irrelevant when no car is being followed. Also, is Tesla advising that minimum follow distance is inappropriate for normal freeway use?

Then, later, while it was still under the party-silence agreement, Tesla made the following statement to the press:

"The crash happened on a clear day with several hundred feet of visibility ahead, which means that the only way for this accident to have occurred is if Mr. Huang was not paying attention to the road, despite the car providing multiple warnings to do so."

croman

Well-Known Member

Driving a car with any sort of autopilot on is like being captain of a boat.

The captain can use GPS, AutoPilot, Radar...but it remains the captain's responsibility.

So if the ship crashes into the rocks, it's the captain's fault.

Same with cars.

Wait, there are Level 2 semi-autonomous ships that are advertised as being able to stay in their lane but instead steer into rocks? Did I miss something?

One difference between this and the Florida crash is this time the family is being very vocal about how they see things from their side. Joshua Brown's family simply released a statement that they would not comment until the investigation was complete. I understand this is a horrible time for the Huang family and I'm not sure I would be able to keep my mouth shut in similar circumstances... but they are very publicly speculating, mostly using hearsay, so it's definitely an uneven playing field.

What is possibly more interesting to me is that they are still operating within the guidelines on other incidents. It seems mad that they choose to be a party in some investigations, but not others.

No, it doesn't. It makes me wonder if it was a defensive move against all the non-factual speculation and opinion-sharing that is now rampant. There may be other reasons.

Makes you wonder whether they perceive a difference in culpability here, doesn't it?

"The driver had about five seconds and 150 meters of unobstructed view of the concrete divider with the crushed crash attenuator."

'Unobstructed view' is a conclusion.

Not if they got a full set of data off of the car. Remember, even with the CANBus data limits, AP1 cars still kept a video crash buffer at low resolution and framerate:

Jason Hughes on Twitter

I haven't seen what kind of crash buffer AP2+ keeps, but I would assume it's substantially better since they don't have the same bandwidth issues.

Economite

Member

The logs are pretty simple. They are timestamped events. It's a simple exercise to convert the event codes to plain English. Tesla did that in the Florida crash. It looks like this, with the codes translated into plain English and explanations added at the beginning:

It's pretty clear that the vehicle collects more data than just this sort of activity log. Otherwise how could Tesla be making statements about when the driver in the Mountain View accident would have had a clear view of the barrier?

If the data is this simple, then why can't Tesla create a tool that allows NTSB to decode it themselves?

Also, in the Mountain View accident, what Tesla released to the public wasn't in any way like a complete set of decoded raw data. It was a narrative with a handful of supposed facts and interpretations; nothing near a complete or raw record.

Maximus-MX

Member

In the case of Tesla Autopilot, the current safeguard appears to be the driver and the driver only. If the driver is not monitoring the car constantly, then the car can possibly operate outside its limitations. The question then is whether the driver should be the first and only safeguard, or whether there should be another layer or two of protection before a driver needs to intervene. We all know today's drivers are often distracted, so should Autopilot count on a potentially distracted driver to correct its mistake when Autopilot is supposed to be there to help distracted drivers?

At the current stage, Tesla Autopilot is Level 2.

Level 2: An advanced driver assistance system (ADAS) on the vehicle can itself actually control both steering and braking/accelerating simultaneously under some circumstances. The human driver must continue to pay full attention (“monitor the driving environment”) at all times and perform the rest of the driving task.

What you are describing is Level 3.

Level 3: An Automated Driving System (ADS) on the vehicle can itself perform all aspects of the driving task under some circumstances. In those circumstances, the human driver must be ready to take back control at any time when the ADS requests the human driver to do so. In all other circumstances, the human driver performs the driving task.

We are not there yet. Today for Tesla autopilot the only safeguard is the driver. I hope that everyone who turns it on understands this.

Automated Vehicles for Safety

Economite

Member

Not if they got a full set of data off of the car. Remember, even with the CANBus data limits, AP1 cars still kept a video crash buffer at low resolution and framerate:

Jason Hughes on Twitter

I haven't seen what kind of crash buffer AP2+ keeps, but I would assume it's substantially better since they don't have the same bandwidth issues.

"Unobstructed view" is still an interpretation. The raw data would be the actual (probably low res and framerate) video clips, as well as information about how the camera works and what its settings (like contrast) are.

Without this information, video is inconclusive, and a description of what one viewer sees in the video is basically an interpretation by that viewer.

As an example of this, look at what happened with the super-poor-quality dash cam in the Uber autonomous crash in Arizona. The camera settings/quality created a video that made it look like there was essentially no visibility on the road. But, in fact, the road was well lit with properly set streetlights. The problem was not that there was insufficient light for the driver to see the road, but that the camera created an image that was far less lit and far lower contrast than what would have been visible to the human eye.

"The driver had about five seconds and 150 meters of unobstructed view of the concrete divider with the crushed crash attenuator."

'Unobstructed view' is a conclusion.

As @Saghost already stated, it is not a conclusion if they have camera footage of the unobstructed view. That holds regardless of your manufactured hypothesis that the camera had a better view/dynamic range than the human eye (in the Uber case the camera's range was worse). It was broad daylight. The only way for the view to be obstructed would be to have a vehicle between the car and the barrier.

"The driver had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision."

This says nothing about when the warnings were given, except that they were not during the incident in question, and it fails to mention that hands-on warnings are not only fairly common in normal use of AP, but can also occur when the driver's hands are on the wheel but just not triggering the sensors.

They stated the raw warning data. What you are talking about is data interpretation, which I thought was your original complaint.

"the adaptive cruise control follow-distance set to minimum"

This is a cherry-picked detail that really has nothing to do with the cause of the accident. Follow-distance is irrelevant when no car is being followed. Also, is Tesla advising that minimum follow distance is inappropriate for normal freeway use?

Again, your interpretation. It is a data point. It also means the camera potentially had less field of view to work with (my personal guess is that there was a car being followed which was seen in the previous camera frames).

Then, later, while it was still under the party-silence agreement, Tesla made the following statement to the press:

"The crash happened on a clear day with several hundred feet of visibility ahead, which means that the only way for this accident to have occurred is if Mr. Huang was not paying attention to the road, despite the car providing multiple warnings to do so."

Which is interpretation, but a fairly direct one. The previously mentioned warnings state to pay attention. To run into a barrier indicates a lack of attention (for whatever reason).

T34ME

Active Member

It is "driver assistance", it IS NOT Full Self Driving. If you are expecting the car to avoid objects, do not purchase or use EAP.

If the driver is inattentive, it does not make it right for Autopilot to drive toward the wall, right?

Tesla got the data recorder, read the data recorder, interpreted the data, and released the results. I think their investigation was over.

Message: Consumers are safer with AP, but it may not save them from themselves if they don't pay attention.

Can't believe you got a disagree on this. I guess that's easier than actually providing a rebuttal to your well-thought-out statement.

MikeQ

Member

What if you already paid an additional 4000 to upgrade to FSD?

Then I'm sure you'd know that it wasn't available yet.

Economite

Member

As @Saghost already stated, it is not a conclusion if they have camera footage of the unobstructed view. That holds regardless of your manufactured hypothesis that the camera had a better view/dynamic range than the human eye (in the Uber case the camera's range was worse). It was broad daylight. The only way for the view to be obstructed would be to have a vehicle between the car and the barrier.

A picture or video is (more or less) raw evidence. A description of the picture or video is an interpretation of the raw evidence, and not itself raw evidence. A statement like "X would have been able to see Y" is clearly drawing a conclusion, not providing raw evidence.

Furthermore, while I strongly disagree with you that what Tesla has been providing is merely "raw evidence", Tesla would still be breaking the party-silence rule even if it were only disclosing raw evidence. And Tesla's public statements are clearly not a complete data dump (which would still be impermissible); it is choosing which bits of data to release. That in itself is a clear form of spin. And remember, the data that Tesla is releasing doesn't really belong to Tesla. It is taken from the car's data recorder (which is owned by the driver's estate and in the possession of NTSB). But Tesla has made it so that the owner's family can't see/interpret the data collected by the driver's own property. And then Tesla is going off and releasing its own cherry-picked interpretations of that data.

At the current stage, Tesla Autopilot is Level 2.

Level 2: An advanced driver assistance system (ADAS) on the vehicle can itself actually control both steering and braking/accelerating simultaneously under some circumstances. The human driver must continue to pay full attention (“monitor the driving environment”) at all times and perform the rest of the driving task.

What you are describing is Level 3.

Level 3: An Automated Driving System (ADS) on the vehicle can itself perform all aspects of the driving task under some circumstances. In those circumstances, the human driver must be ready to take back control at any time when the ADS requests the human driver to do so. In all other circumstances, the human driver performs the driving task.

We are not there yet. Today for Tesla autopilot the only safeguard is the driver. I hope that everyone who turns it on understands this.

Automated Vehicles for Safety

I would think having a reliable automated emergency could be another safeguard for L2 instead of relying on the driver. Another is to limit the parameters of when and where it could work.

I do wonder if there have been serious accidents from other cars using similar technologies? Nearly all the new Hondas have lane keep assist that operates similarly to AP1, and I would think there are more Hondas out there with this tech than AP1/AP2 Teslas combined. Many other brands have similar technologies as well. Are those cars somehow doing a better job safeguarding against driver misuse, or are drivers just not using it in those cars?

Economite

Member

"The crash happened on a clear day with several hundred feet of visibility ahead, which means that the only way for this accident to have occurred is if Mr. Huang was not paying attention to the road, despite the car providing multiple warnings to do so."

Which is interpretation, but a fairly direct one. The previously mentioned warnings state to pay attention. To run into a barrier indicates a lack of attention (for whatever reason).

First of all, as you admit, this is an interpretation. Therefore it is not raw data.

Furthermore, while it may be an accurate conclusion, it is by no means the only accurate conclusion that can be drawn from the data. Someone could just as accurately say: "the only way for this accident to have occurred is if AP failed to stay in lane, despite the activation of autosteer, and then Mr. Huang failed to notice that AP had crossed the lane markings." These sorts of conclusions always have a spin. That's why, in its final reports, NTSB identifies all factors involved in a mishap.

Tesla likes to stop its analysis at either "the driver deactivated AP before the collision, therefore AP was not at fault" (ignoring the fact that AP steered the car into the dangerous situation, and the driver only "deactivated" AP by panic braking too close to the point of impact) or "the driver failed to intervene when he had the opportunity to do so" (ignoring the fact that it was AP's steering mistake, or failure to steer, that put the car on course to the collision). AP clearly (at the very least) contributes to accidents that occur while AP is activated or that involve a driver panic braking/swerving to override AP. That doesn't necessarily mean that Tesla should be legally liable (though I think quite often it should be) or that AP is defective/unsafe (though I suspect it is), but it does mean that AP's involvement in such mishaps needs to be impartially studied.

I do wonder if there have been serious accidents from other cars using similar technologies? Nearly all the new Hondas have lane keep assist that operates similarly to AP1, and I would think there are more Hondas out there with this tech than AP1/AP2 Teslas combined. Many other brands have similar technologies as well. Are those cars somehow doing a better job safeguarding against driver misuse, or are drivers just not using it in those cars?

Or are those brands less newsworthy, so the accidents never hit the national news?

Or do those companies have fewer shorts and enemies pushing the narrative into public view?

I would think having a reliable automated emergency could be another safeguard for L2 instead of relying on the driver. Another is to limit the parameters of when and where it could work.

I do wonder if there have been serious accidents from other cars using similar technologies? Nearly all the new Hondas have lane keep assist that operates similarly to AP1, and I would think there are more Hondas out there with this tech than AP1/AP2 Teslas combined. Many other brands have similar technologies as well. Are those cars somehow doing a better job safeguarding against driver misuse, or are drivers just not using it in those cars?

Can't speak for Honda, but the Ford lane assist only nudges you if you drift. If you set the car up on a straight road with good markings, it will almost keep you out of the ditch on its own. The adaptive cruise is nice when traffic is moving, but does not seem to fully stop the car.
