Autopilot is still a work in progress, and Elon keeps telling us it will be perfected six months from now. So far Tesla has done a remarkable job of following the car in front, reading speed limit and stop signs, and warning about or preventing a turn into the adjacent lane when a car is there. They have not solved the problem of detecting stationary objects in the road ahead. Teslas have run at full speed into a parked fire truck, a lamp post, semi-trailers, and a cement road divider, with no attempt to slow down before the collision. Tesla has emphasized that the driver must remain alert and able to take over in an instant. I think the best way to ensure the driver is alert is to not let them use Autopilot.

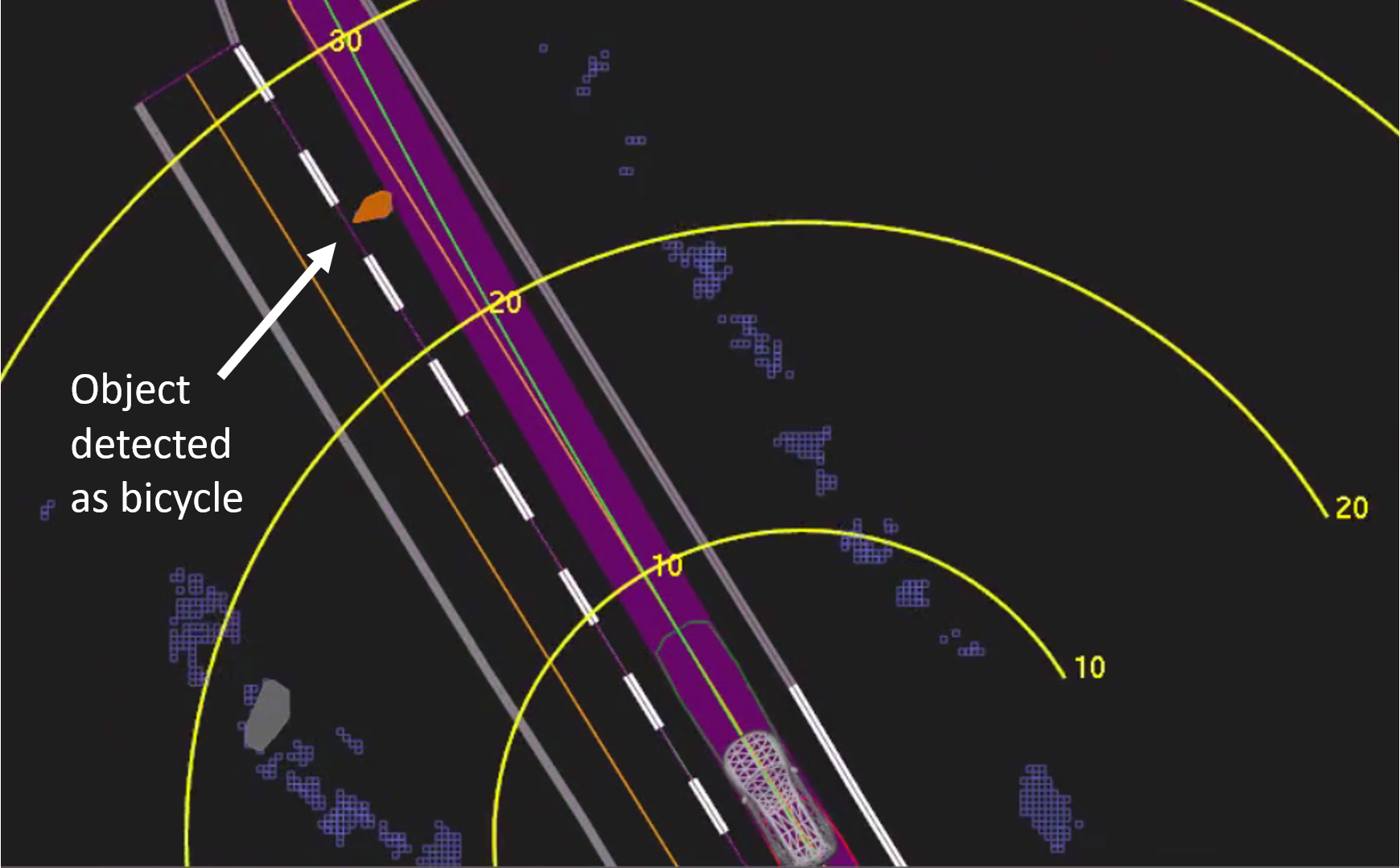

Tesla uses an array of cameras, sonar and front radar to detect objects around the car. Based on the accidents above, it appears there is heavy reliance on the radar and little or no reliance on the cameras to determine distance to objects ahead. Radar works best when its signal is reflected straight back by a metal surface. It does not work well on humans, wood, plastic, etc. It also does not work well if a steel street pole in front of it is round, because almost all of the reflected signal goes off to the side instead of straight back. The fire truck was not detected because it was parked at an angle, so the radar signal was deflected to the sides. The three forward-looking cameras are grouped at the top centre of the windshield: long range, medium and wide angle. They are not arranged or used for binocular vision.

Binocular vision is what we use to determine distance to objects. Try this little experiment. Using only one eye, reach out with your arm so your hand is about a foot to the side of your monitor. Point one finger at your monitor, and move your hand in to touch the top corner of your monitor. Most people will miss on their first try. Afterwards you learn how far to reach, so the second or third try is successful, but this is not due to improved vision, just remembered muscle position. Doing this with both eyes open is easy.

Edge detection software can use triangulation with two cameras looking at the same scene to determine distance. By mapping edges, similar shapes can be identified, and the displacement of these shapes from one camera to the other is used to triangulate and calculate distance. This can be very fast and accurate. With only one camera, an object in front has to grow larger, taking up more pixels in the image, and only after a short time can the rate of size increase be used to estimate distance. It is this delay that is unacceptable. In an emergency you cannot wait a second or two before deciding that action needs to be taken, but this is exactly what Tesla does. Their system is not accurate, and they actually delay making a determination about what is observed to avoid false positives, which would otherwise cause needless and disconcerting braking.
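To make the two approaches concrete, here is a rough sketch under simplifying assumptions: ideal pinhole cameras, a rectified stereo pair, and made-up numbers for focal length, camera spacing and object size. The function names are hypothetical and nothing here reflects Tesla's actual software. The stereo function gets distance from a single pair of frames; the one-camera function needs two frames taken some time apart, and strictly speaking it gives time until contact rather than distance, unless the real size of the object is also assumed.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Distance from one rectified stereo frame pair: Z = f * B / d.

    focal_px     -- focal length in pixels (assumed value)
    baseline_m   -- spacing between the two cameras in metres (assumed value)
    disparity_px -- horizontal shift of the same edge between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def time_to_contact_s(width_prev_px, width_now_px, dt_s):
    """One-camera 'looming' estimate: tau = w / (dw/dt).

    Needs the object's image width in two frames dt_s seconds apart.
    Returns infinity if the object is not growing in the image.
    """
    growth_px_per_s = (width_now_px - width_prev_px) / dt_s
    if growth_px_per_s <= 0:
        return float("inf")
    return width_now_px / growth_px_per_s


if __name__ == "__main__":
    # Stereo: an edge shifted 6 px between cameras 0.3 m apart, f = 1000 px.
    print("stereo distance (m):", stereo_depth_m(1000.0, 0.3, 6.0))

    # One camera: a 2 m wide object closing from 50 m to 48 m over 0.1 s.
    f_px, real_width_m = 1000.0, 2.0
    w_prev = f_px * real_width_m / 50.0
    w_now = f_px * real_width_m / 48.0
    print("time to contact (s):", time_to_contact_s(w_prev, w_now, 0.1))
```

The size change between the two frames in the one-camera case is under 2 pixels, which gives some feel for why a single camera needs time, or several frames, before the estimate can be trusted.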

I believe doubling up on binocular vision would be best, with two pairs of cameras in the top corners of the windshield. Edges are easier to match between two cameras that are close together, but the resulting distance is less accurate than with cameras far apart. Placing the cameras of each pair diagonally allows the pair to more easily detect both vertical and horizontal edges. With approximate distance known from each pair, it is easier to match edges with the opposite pair for increased accuracy. I believe this system would be much better than LIDAR because cameras have much higher resolution and a higher scanning rate.
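The trade-off between close and widely spaced cameras can be put into rough numbers with the standard stereo relations, again assuming ideal rectified pinhole cameras. The focal length, the spacings of 0.1 m and 1.2 m, and the quarter-pixel matching error below are illustrative guesses, not measurements from any real car, and the function names are hypothetical.

```python
def disparity_px(distance_m, focal_px, baseline_m):
    """Pixel shift of an edge between the two images: d = f * B / Z."""
    return focal_px * baseline_m / distance_m


def depth_error_m(distance_m, focal_px, baseline_m, match_err_px=0.25):
    """Approximate distance error caused by a small matching error:
    dZ ~= Z^2 * dd / (f * B)."""
    return distance_m ** 2 * match_err_px / (focal_px * baseline_m)


if __name__ == "__main__":
    focal = 1000.0  # assumed focal length in pixels
    for baseline in (0.1, 1.2):  # close pair vs. opposite windshield corners
        print(
            f"baseline {baseline} m:",
            f"error at 50 m = {depth_error_m(50.0, focal, baseline):.2f} m,",
            f"shift at 5 m = {disparity_px(5.0, focal, baseline):.0f} px",
        )
```

With these assumed numbers the wide spacing is twelve times more accurate at 50 m, but a nearby edge shifts by twelve times as many pixels between the two images, so the matching step has a much larger region to search. Using the rough distance from a close pair to narrow that search is the idea behind combining the pairs.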

In my opinion, Autopilot should not be used until the car can reliably determine that there are objects in front and take appropriate action. Binocular vision is the obvious choice, so Tesla engineers must have rejected it. Why? Cost? I think they need to re-evaluate.
