Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.


Elon Musk and others urge AI pause, citing 'risks to society'

- Thread starter redmunds


jabloomf1230

Minister of Silly Walks


It's only as good as the online database that it crawls.

> AI seems to be only as good as the person programming it.

P90D

Member

The hypocrisy of conducting an unauthorized public beta of life-and-death software in FSD while telling everyone to fear other companies' text and image generators for the sake of public safety.

> I love my Tesla and I purchased FSD, so I'm not sure what to think about this:


Elon Musk and others urge AI pause, citing 'risks to society'

Elon Musk and a group of artificial intelligence experts and industry executives are calling for a six-month pause in developing systems more powerful than OpenAI's newly launched GPT-4, in an open letter citing potential risks to society.

www.reuters.com

Why does this have to be debated as an Elon concern? The document was signed by thousands of the top minds in AI and at universities around the world as a real concern. This is not some Elon propaganda. This is a massive group of people around the world, much smarter than a forum, showing legitimate concern about letting this AI genie out of the bottle without regulation or an understanding of what it can do. Hate or assume what you wish, but this is not a simple switch you can turn off if you get it wrong.

> Elon is right. AI could be very dangerous. Unfortunately, we are not even close to creating real AI, so he is fearmongering over nothing.

mrfiat74

Member

I haven't read the document, but it is puzzling to me that the top minds in AI (if they actually are the "top minds") would be concerned about something that isn't truly intelligent. I have used ChatGPT and it isn't even close to true AI. Neither is Tesla's attempt at self-driving.

> Why does this have to be debated as an Elon concern? The document was signed by thousands of the top minds in AI and at universities around the world as a real concern. This is not some Elon propaganda. This is a massive group of people around the world, much smarter than a forum, showing legitimate concern about letting this AI genie out of the bottle without regulation or an understanding of what it can do. Hate or assume what you wish, but this is not a simple switch you can turn off if you get it wrong.

Consider reading it before making up your mind. This petition of concern wasn't signed by average Joe consumers; it was led by top minds and professors around the world (including Musk) who understand the implications as this grows exponentially until it can't be stopped.

> I haven't read the document, but it is puzzling to me that the top minds in AI (if they actually are the "top minds") would be concerned about something that isn't truly intelligent. I have used ChatGPT and it isn't even close to true AI. Neither is Tesla's attempt at self-driving.


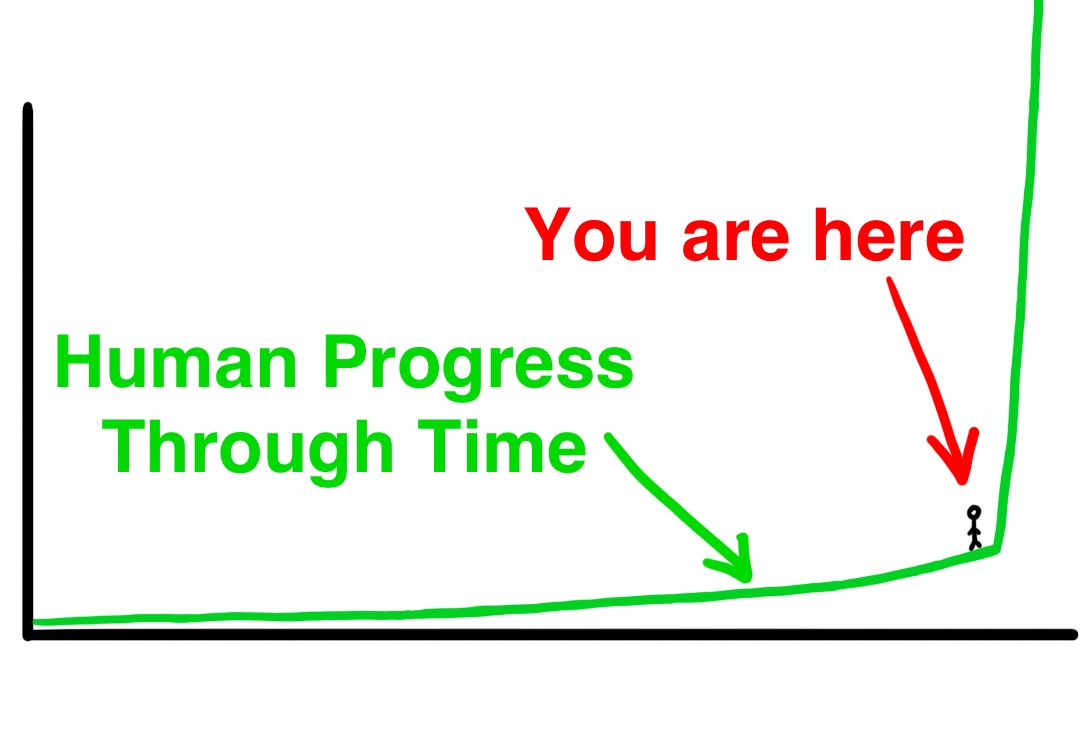

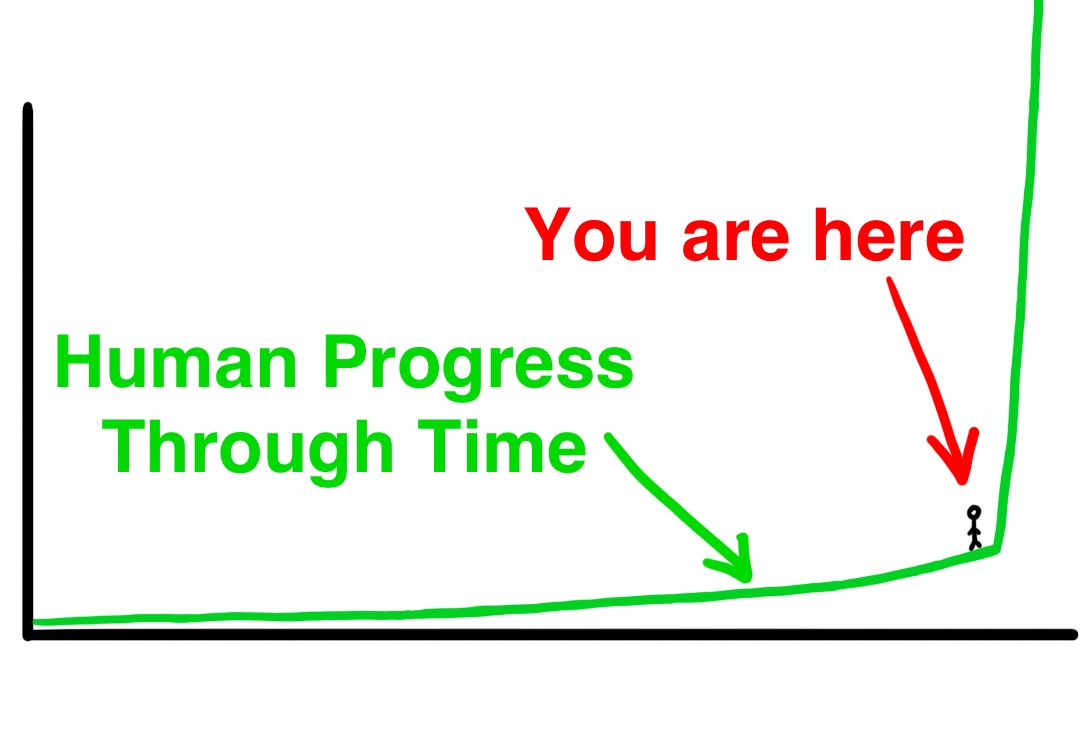

The people against unregulated development of AI are not saying general AI is right around the corner. No one on earth knows when or how we will achieve AGI. We are still very much in the realm of narrow AI (ANI). The learning curve needed to achieve AGI is steep, but technology can progress very fast when lots of people are focused on it (compare computing tech, which improved very quickly, with manufacturing tech, which improved slowly).

So what happens when we achieve AGI (aka the singularity)? The AI can learn on its own by definition, so that curve continues to skyrocket until we have artificial super-intelligence (ASI). Some AI experts think the time difference between reaching AGI and ASI will be just a few minutes or hours. When that occurs, did we have safeguards in place? There's an AI that is now incomprehensibly smarter than humans. What will it do? How will it interpret our original guardrails or mission parameters? How can we trust it?

This whole trajectory might seem fantastical, especially given your current stance of "AI is so dumb; how could it ever be a threat?" I've already posted some examples of dumb AIs (ANI) that can impersonate people and make them say, in their own voices, whatever we want. In China, these kinds of scams already exist: a purported family member calls you in a panic needing cash, and they talk and sound just like the relative you know.

At this point, I'd consider society lucky to survive narrow AI.

zoomer0056

Active Member

Yup, I know you're right about that because I saw an NBC news segment last night.

Doesn't the dumb AI need a recording of your voice for the scam to work? How does it know what a person's voice sounds like?

P90D

Member

BTW, I am impressed by the arguments for "AGI = scary" and the imperative to make these large models safe, since they already have the potential for a reactor-style runaway as they mistakenly pursue self-survival goals.

> The hypocrisy of conducting an unauthorized public beta of life-and-death software in FSD while telling everyone to fear other companies' text and image generators for the sake of public safety.

The promise of achieving a technology that gives "leader of the world" level power is too tempting for these corporations to agree to a nuclear non-proliferation clause.

There need to be analog systems and air gaps with circuit breakers so that even the most aggressive and powerful people with their hands on AI cannot implement a system that has the capacity to change real-world systems. The problem is the absence of trust. We cannot trust each other, we cannot trust developers individually, and the developers cannot trust the systems they're developing to remain trustworthy.

Our global and national systems of peace today are based on the monopoly of violence, and on trusting that those who control that monopoly of power, commonly called "governments," will, for the most part, choose peace over war. Of course, the world right now hangs in the balance as the USA antagonizes both Russia and China, so that trust in peaceful government is being tested to the breaking point.

P90D

Member

The idea being that AI can use pattern recognition and user data to find your voice, find your phone number, infiltrate cellular network traffic, find your active, real-time conversations, intercept what you say to the other party, and use your voice sample to generate altered dialogue, so the other party hears whatever the AI wants you to have said.

> Doesn't the dumb AI need a recording of your voice for the scam to work? How does it know what a person's voice sounds like?

"9 1 1 … what is your emergency?"

You: "I'm calling because my computer is trying to kill me?!" –> becomes "Everything is fine, I didn't mean to swipe 9 1 1"

911: "If you can't speak and you are in danger, just say 'thank you, goodbye.'"

You: "What?! My computer is trying to kill me!!!" –> becomes "I just killed my boss by tampering with his implanted pacemaker. I'm sorry. I confess."

ThomasD

Active Member

AI algorithms don't seem to be very good at predicting patterns. They couldn't predict housing prices very well: Opendoor, Zillow, and Redfin bet on AI algorithms and lost billions. AI couldn't predict the banks that have collapsed over the past few weeks. What is the AI prediction of what the Fed is going to do about inflation?

P90D

Member

Those real estate speculation algos were not AI, just pattern forecasting, the most primitive of mathematics. And the available products are so heavily manipulated that they're purpose-built to kill speculators and accumulate gains for the institutions. That's not limited to real estate; it's systemic.

> AI algorithms don't seem to be very good at predicting patterns. They couldn't predict housing prices very well: Opendoor, Zillow, and Redfin bet on AI algorithms and lost billions. AI couldn't predict the banks that have collapsed over the past few weeks. What is the AI prediction of what the Fed is going to do about inflation?

If Zillow had used a quant house, they would have made a killing and paid the house for their winnings, then the racket continues.

Algos and price behavior predicted the bank failures two months ahead (!) … check the price action on "bank" (BofA), etc. The downturn came weeks before the "news" of SVB. SVB was brought down in a planned attack as interest rates exposed their vulnerability: their fraud.

Let's assume ChatGPT (or insert some other democratized AI here) somehow reached a level of sophistication today such that it's not just the fringe AI experts calling for a stop. Given that many people, businesses, governments, and foreign entities are adopting the engine for their own AI purposes, who exactly do we call to pull the plug? How do we know when we've gotten them all? How do we enforce it?

I would highly recommend giving this a read for those who

1) have a curiosity about all the AI fuss

2) just want to be well-educated on the subject without feeling like you're reading college-level textbooks

waitbutwhy.com


It's long, but it's organized really well, so you will really understand ANI/AGI/ASI, and what the concern is if/when we hit AGI --> ASI.


The Artificial Intelligence Revolution: Part 1 - Wait But Why

Part 1 of 2: "The Road to Superintelligence". Artificial Intelligence — the topic everyone in the world should be talking about.


P90D

Member

Right, there's no way to regulate R&D, let alone enforce that regulation. Short of government "weapons inspections" and sanctions, like those the USA imposes on Iran, any attempt to legalize AI development (as with all government doublespeak, the intent is the opposite of the description: in this case, to legalize means to make illegal and to monetize, to put a dollar price on the value) simply puts AI in the hands of the same two entities: government and organized crime. Just like pharmaceuticals and street drugs, military weapons, and the monopoly of violence.

> Let's assume ChatGPT (or insert some other democratized AI here) somehow reached a level of sophistication today such that it's not just the fringe AI experts calling for a stop. Given that many people, businesses, governments, and foreign entities are adopting the engine for their own AI purposes, who exactly do we call to pull the plug? How do we know when we've gotten them all? How do we enforce it?

The US government has been told that AI is a weapon. The government already perceives social media, independent news media, and journalism as weapons, threats against the one-narrative thought police: so TikTok is to be banned, and journalists are imprisoned as spies or discredited.

The thought police want to imprison AI "for six months." Good luck getting your AI startup started up again in six months once legislation handcuffs R&D and the developers are told they are committing crimes for which they can be punished individually. If they want to stay on the right side of the law, they'd better go work for the "sanctioned" R&D … which will be contained and controlled within government-controlled software companies (Microsoft, Apple, Facebook, Adobe).

No more ethical, open-source nonsense from OpenAI, and what's doled out to the public will be a lobotomized GPT-4. We'll never see GPT-5 (even though such a thing will be released, along with LaMDA), and the public will not know or notice, just like the public doesn't know or notice alternative medicine, the alternative to war, or the alternative to poverty and wage slavery.

Constraints on AI do not bode well for Optimus or FSD. We'll get robotaxis and smart vacuum cleaners and we'll like it!
