FUD I believe in. There is an enormous leap from a safe Level 2 system to a safe Level 3-5 system.

- Thread starter Daniel in SD

AlanSubie4Life

Efficiency Obsessed Member

There is no question that the details are not there but the overwhelmingly safer numbers are there.

As I said, I have not been able to draw any conclusions, and I have not been able to draw this conclusion either.

You lost me here...safer than what? 3x fewer accidents than the average vehicle on the road? That seems fairly clear, I suppose...though without the details of what an accident is, other than an airbag deployment...it is hard to say. Putting that aside, it still hardly seems like a good comparison. I agree it appears that if you are driving a Tesla it appears you get in fewer accidents than the average vehicle per mile traveled. But I'm not sure how important that metric is, in regards to it being a statement about the safety of the specific vehicle you are driving.

Do Teslas drive as much at night?

Do Teslas drive as much in winter conditions?

How does the age of the Tesla fleet compare to the average age of the national fleet?

What about socioeconomic factors? Is there any correlation between education and financial status and driving ability?

What about driver age? Don't more experienced drivers get in fewer accidents? Aren't Tesla drivers usually more experienced?

And...many other possible factors.

That's why everyone who has tried to draw a meaningful conclusion from these numbers has encouraged a more complete presentation of the data. Right now it just looks like a (transparent) marketing ploy.

Do note that I actually think it may very well be safer to use Autopilot - meaning it is possible - but I have no idea. It's just kind of frustrating to see a promise that they will release data...and then get this...

Tam

Well-Known Member

You honestly believe that Teslas get in 3-6 times fewer accidents per mile than the average vehicle?

For my own case: Yes!

I have used Autopilot since 2017 and I've found that it has made my driving much safer.

I discovered that I have engaged in dangerous behaviors in the past and I was lucky that I didn't get into an accident.

Whether there's Autopilot or not, my behaviors still have not changed much:

Talking either on the phone or in person, which made me miss exits multiple times, but not since NOA.

Changing radio/stereo/audio settings/channels

Settings on navigation

I realize that in the past, I did not center within a lane when I was doing the above tasks and also I almost got into many rear-end accidents because I didn't realize instantly that the traffic in front was getting slower...

Also, I love Auto Lane Change because it changes lanes much better and much more safely than I can manually.

I sure can manually change lanes very safely, but with distractions and without Auto Lane Change, bad things can happen, as in the case of a driver whose manual lane change ended in a collision with a Model 3 and serious injuries to the occupants.

Sigh. Sometimes I truly hate the internet, because you have to bring 100% of context with you every time you have a discussion. So let me give a little more of my context, and then respond to your points.

First, and I think this is probably most important, every time a new safety system is implemented it arrives with an increase in collision rate and a statistically confusing safety rate. In general, they are all safer than the status quo, and in general lives are always saved. This is true of safety belts, crumple zones, traction control, ABS, air bags, EBD, AEB, and so on. Every single one of them, if not used properly, can be as dangerous or more dangerous than a vehicle not equipped. And the arrival of each of these features has made drivers increasingly confident in their skill level and the survival rate of collisions. Neither of which has really improved significantly for a long time.

As an example, ABS on a snowy or rainy road vastly increases your stopping distance rather than decreasing it. Most drivers (even many here on this forum) argue that ABS always decreases stopping distance. This inevitably led to an increased collision rate, because people don't judge stopping distance appropriately anymore. And yet, nobody in their right mind would argue against equipping vehicles with ABS! It overwhelmingly improves maneuverability during panic braking, which allows an attentive driver to potentially avoid colliding with a vehicle, pedestrian, or any other obstacle. ABS has saved countless lives. We know this intuitively. And yet there is no statistic to point to except an overall decline of a certain type of collision.

Level 2 autonomy, and I think this is Waymo's point too, can provide that same risk increase and potentially the same safety outcome. But without users using it properly, the only thing we know for sure is that we will see an increased risk. To be very clear here, I am not calling for the removal of Level 2 autonomy from vehicles. I'm simply pointing out the pattern we have seen countless times in the past, and what should be obvious to us all at this point: users cannot be trusted for anything except to do what they're not supposed to do.

and compare to unaided driving accident rates, appropriately adjusted for all factors, and rates in Teslas with all such features but without AP in use

I agree completely with you, as always. Without hard data and statistical analysis, none of this matters at all because it's conjecture. I totally agree that we should be (independently) studying data from these systems and drawing conclusions from there. To that end, I do not trust Tesla's quarterly report although I read it and find it interesting. It would be counterproductive for Tesla to provide a narrative that says autopilot could be much more safe except for those darned users. And were I a major shareholder rather than a hobbyist, I don't think I'd want them to make any such announcement. If I were part of their legal team, well, I'd drink myself to sleep most afternoons.

before drawing firm conclusions that L2 is definitely bad.

I again want to clarify here. I am not at all saying L2 is bad or even definitely bad. I believe any aid system that prevents driver fatigue is likely worthwhile, and L2 (even in my skeptical estimation I've posted elsewhere) can handle 75% of highway driving situations with ease. The remaining question in my mind is the same question we ask about autopilot in aircraft. Is the user still engaged enough to have situational awareness to a degree where they can take over in an emergency?

What we see in the airline industry is that autopilot removes much of the human error in typical flying conditions, and nobody disputes that. I suggest we see the same with Tesla autopilot. What the airline industry also sees is that in an emergency situation, pilots need time to regain awareness and may miss critical data about their predicament that leads to catastrophe. I suggest we see the same with Tesla autopilot. I do not deny the benefit of the good, but I think we both agree we cannot deny the introduced risk. A risk that already existed, BTW, with phones and conversations, and daydreaming. But now it's easier to fall into the trap of distraction, as Waymo highlighted so well.

Again, though, we need data. That data won't arrive without catastrophe, and some of the data will always need to be inferred. You can't measure a collision that never happened, after all.

The question though, in the end, is when you look at the appropriately adjusted and statistically valid accident rate - is it safer to have an L2 system with driver monitoring, or not?

Once more, I agree with you entirely.

acoste

Member

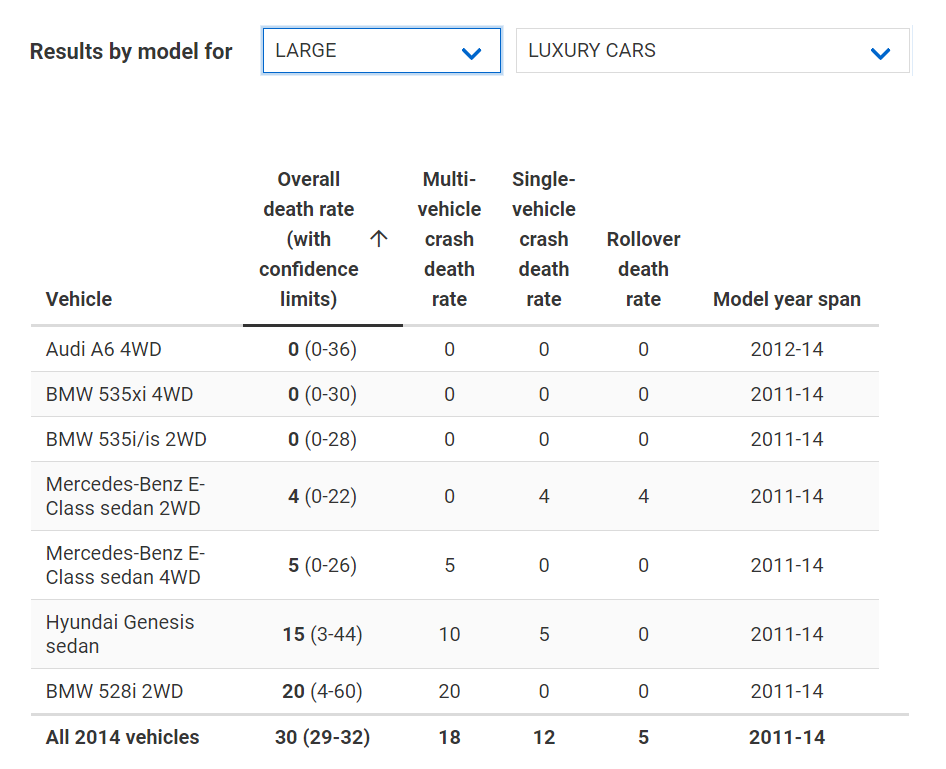

I have already mentioned it, but I'll say it again. In higher price categories there are generally fewer accidents. So comparing Tesla to NHTSA's average number is incorrect.

These 11 Vehicles Had No Driver Deaths

"The 11 models with zero driver deaths include the Audi A6 Quattro, Audi Q7 Quattro, rear-wheel drive BMW 535i, BMW 535xi xDrive, Jeep Cherokee 4x4, Lexus CT 200h, front-wheel Lexus RX 350, front-wheel drive Mazda CX-9, Mercedes-Benz M-Class 4Matic, Toyota Tacoma Double Cab Long Bed 4x4, and front-wheel Volkswagen Tiguan."

Driver death rates by make and model

deaths per million registered vehicle years

"The overall driver death rate for all 2014 and equivalent models during 2012-15 was 30 deaths per million registered vehicle years."

Tesla’s Driver Fatality Rate is more than Triple that of Luxury Cars (and likely even higher)

"Tesla’s mortality rate (41 deaths per million vehicle years) is so much higher than the average luxury car (13 deaths per million vehicle years) that when comparing the two, the difference is hugely statistically significant. The difference is 28 additional deaths per million vehicle years, with a confidence interval of 11 to 63, and a p-value of 0.0001."

Autopilot has not been programmed for pedestrian yet so using today's Autopilot should have no effect on the rate.

Pedestrian braking is the job of AEB, which will override autopilot regardless of what autopilot's path plan is. Most major brands offer AEB at this point, and several of them even work. So far, based on industry testing, Tesla offers the best package. But no AEB system can prevent 100% of collisions because the operator may be traveling too fast for the conditions (speed is almost always the number one factor in any collision), or with autopilot may have set the speed too high for the conditions.

Even worse, in low visibility situations, the AEB system may not detect a pedestrian or an object, leading to a collision. This is most pronounced when the object is stationary and the radar unit cannot detect it.

These aren't new risks, we're just being presented with existing risks in new ways. We can not say with absolute confidence one way or the other right now, and my whole point is that users put far too much faith into these systems without knowing or understanding their limitations. Your comments so far have been proof of my point, if I'm honest.

AlanSubie4Life

Efficiency Obsessed Member

For my own case: Yes!

Can't argue with you there.

Tesla’s Driver Fatality Rate is more than Triple that of Luxury Cars (and likely even higher)

"Tesla’s mortality rate (41 deaths per million vehicle years) is so much higher than the average luxury car (13 deaths per million vehicle years) that when comparing the two, the difference is hugely statistically significant. The difference is 28 additional deaths per million vehicle years, with a confidence interval of 11 to 63, and a p-value of 0.0001."

This doesn't necessarily mean that Teslas are less safe though. The driver demographics make a huge difference in these statistics. I'm willing to bet that people who drive Tesla Model S are not the typical large luxury car buyers.

A great example is my previous car, a Subaru Legacy. IIHS says it has a fatality rate of 83 per million registered vehicle years. The Subaru Outback which is just a Legacy with a lift kit and plastic crap tacked on the sides has a rate of 40. I have a feeling that my fellow turbo Legacy owners were skewing the statistics and not that the plastic body cladding improved safety.

Southpasfan

Member

I find the anti-Tesla bias an interesting case study. I can't put it all down to bots; it's obvious the posts are from real people. It's also obvious that many posts are from people who not only don't have a Tesla but don't have any EV of any kind.

In this case, the statistics of fewer accidents per mile when autopilot is engaged could not be more intuitively correct. For example, the accident rate per mile when a car is using basic cruise control has to be lower than accident rates overall. That's because cruise control would only be used on highways during times of greater mph, and further, no one uses cruise control during low-mileage, high-accident city driving.

Because all you need to "end" cruise control is to step on the brake, it was an easy technology to adopt, because unless the driver was not alert the driver steps on the brake regardless of whether cruise control is active or not.

If you simply look at current AP as a type of advanced cruise control, of course, it's much safer per mile. If it wasn't it would be an unmitigated disaster. Because AP, like cruise control, can be disabled by the driver so easily, it's no more likely to cause an accident.

Until, and all the way up until, you have FSD with zero driver involvement, what is going to happen is every step of the way, every feature, will prove, over millions of miles, to have a lower accident rate than basic driving without such feature. Every feature will need to prove that to get regulatory approval.

People speculate (and that's fine, hey, why not) that AP will result in making drivers less attentive. Actually, they do more than speculate, they assume. Having driven a Tesla for three months, I have not seen that prediction borne out, and the assumption is wrong. Given what AP does, you can be "less attentive" to exact lane positioning and "less attentive" to following distance. But I find myself far more attentive to blind spots and the rear view and the side view in general.

Finally, rather than assume a causal connection that isn't there (AP = less attention), how about this question, "which is better, a driver not paying full attention in a Tesla with full safety features and AP enabled on a highway, or a driver not paying full attention with none of Tesla's sensors and no AP?" The answer is obvious.

AlanSubie4Life

Efficiency Obsessed Member

the statistics of less accidents per mile when autopilot is engaged could not be more intuitively correct.

Sure, Tesla's data clearly shows that AP-enabled miles have lower accident rate than AP-not-enabled miles, but what does that mean? Does it mean it is safer to have Autopilot engaged?

if you simply look at current AP as a type of advanced cruise control, of course, its much safer per mile. If it wasn't it would be an unmitigated disaster.

I think that is the entire point...it really means that the data is kind of not useful...

"which is better, a driver not paying full attention in a Tesla with full safety feature and AP enabled on a highway, or a driver not paying full attention with none of Tesla's sensors and no AP?" The answer is obvious.

Yes, the answer is obvious if those are the only two choices. However, I think we have other choices too.

I find the anti-Tesla bias an interesting case study. I can't put it all down to bots; it's obvious the posts are from real people. It's also obvious that many posts are from people who not only don't have a Tesla but don't have any EV of any kind.

If it were possible to make an AI as advanced as myself then we'd already have FSD. You can look through my post history and see that I probably own a Model 3.

In this case, the statistics of fewer accidents per mile when autopilot is engaged could not be more intuitively correct. For example, the accident rate per mile when a car is using basic cruise control has to be lower than accident rates overall. That's because cruise control would only be used on highways during times of greater mph, and further, no one uses cruise control during low-mileage, high-accident city driving.

This is exactly why Tesla's accident statistics are useless for determining whether Autopilot makes the vehicles safer or not.

Finally, rather than assume a causal connection that isn't there (AP = less attention), how about this question, "which is better, a driver not paying full attention in a Tesla with full safety features and AP enabled on a highway, or a driver not paying full attention with none of Tesla's sensors and no AP?" The answer is obvious.

Yeah, but that's not the question. The question is whether or not Autopilot makes the car safer. It's possible that Autopilot causes drivers (on average! not you) to become complacent and that causes more accidents than the system prevents. It's also possible that it does improve safety. And of course this could all change over time. My belief, without any real data (since we don't have any), is that the current system is probably a wash in terms of safety. My real concern is that as the system gets "better" it may actually become less safe.
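To make the highway-versus-city point in this exchange concrete, here is a minimal sketch of how the mix of roads alone can produce a large gap in per-mile accident rates. Every number in it is hypothetical and chosen only for illustration (the per-road rates, the mileage shares, and all the names are assumptions, not Tesla or NHTSA data). The per-road accident rates are set to be identical with and without Autopilot, so any difference in the aggregate comes purely from where the miles are driven.

```python
# All numbers below are HYPOTHETICAL and exist only to illustrate the effect.
# Accidents per million miles, assumed to be the SAME with Autopilot on or off.
RATE_PER_MILLION_MILES = {"highway": 0.3, "city": 1.5}

# Assumed share of miles driven on each road type, by driving mode.
MILE_SHARE = {
    "autopilot_on": {"highway": 0.95, "city": 0.05},
    "autopilot_off": {"highway": 0.40, "city": 0.60},
}


def aggregate_rate(mile_share, rate_by_road):
    """Mileage-weighted accident rate (per million miles) across road types."""
    return sum(share * rate_by_road[road] for road, share in mile_share.items())


rate_on = aggregate_rate(MILE_SHARE["autopilot_on"], RATE_PER_MILLION_MILES)
rate_off = aggregate_rate(MILE_SHARE["autopilot_off"], RATE_PER_MILLION_MILES)

print(f"Autopilot on:  {rate_on:.2f} accidents per million miles")
print(f"Autopilot off: {rate_off:.2f} accidents per million miles")
print(f"Apparent advantage: {rate_off / rate_on:.1f}x, with zero real effect")
```

Under these made-up inputs the Autopilot-on miles look almost three times safer even though the system changes nothing on any given road. That is why a comparison broken out by road type and conditions would say more than the headline number.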

I find the anti-Tesla bias an interesting case study. I can't put it all down to bots; it's obvious the posts are from real people. It's also obvious that many posts are from people who not only don't have a Tesla but don't have any EV of any kind.

In this case, the statistics of fewer accidents per mile when autopilot is engaged could not be more intuitively correct. For example, the accident rate per mile when a car is using basic cruise control has to be lower than accident rates overall. That's because cruise control would only be used on highways during times of greater mph, and further, no one uses cruise control during low-mileage, high-accident city driving.

Because all you need to "end" cruise control is to step on the brake, it was an easy technology to adopt, because unless the driver was not alert the driver steps on the brake regardless of whether cruise control is active or not.

If you simply look at current AP as a type of advanced cruise control, of course, it's much safer per mile. If it wasn't it would be an unmitigated disaster. Because AP, like cruise control, can be disabled by the driver so easily, it's no more likely to cause an accident.

Until, and all the way up until, you have FSD with zero driver involvement, what is going to happen is every step of the way, every feature, will prove, over millions of miles, to have a lower accident rate than basic driving without such feature. Every feature will need to prove that to get regulatory approval.

People speculate (and that's fine, hey, why not) that AP will result in making drivers less attentive. Actually, they do more than speculate, they assume. Having driven a Tesla for three months, I have not seen that prediction borne out, and the assumption is wrong. Given what AP does, you can be "less attentive" to exact lane positioning and "less attentive" to following distance. But I find myself far more attentive to blind spots and the rear view and the side view in general.

Finally, rather than assume a causal connection that isn't there (AP = less attention), how about this question, "which is better, a driver not paying full attention in a Tesla with full safety features and AP enabled on a highway, or a driver not paying full attention with none of Tesla's sensors and no AP?" The answer is obvious.

In addition to that, Tesla vehicles with Autopilot hardware and active safety features, but without Autopilot engaged, had 55% fewer accidents per mile than those without Autopilot and the active safety features:

In the 2nd quarter, we registered one accident for every 3.27 million miles driven in which drivers had Autopilot engaged. For those driving without Autopilot but with our active safety features, we registered one accident for every 2.19 million miles driven. For those driving without Autopilot and without our active safety features, we registered one accident for every 1.41 million miles driven. By comparison, NHTSA’s most recent data shows that in the United States there is an automobile crash every 498,000 miles.*

The active safety features are built off Autopilot, although the driver maintains control.

It is difficult to come up with a convincing explanation from driver demographics or otherwise why Tesla vehicles using the Autopilot system in driver assist mode would have a much lower accident rate than those without it. The most reasonable conclusion is the difference in accident rates is not due to driver demographics but that the active safety systems avoid a substantial number of accidents.

This suggests that Autopilot's accident avoidance features are already quite good (and improving all the time), and there are many videos floating around the interwebs showing Tesla cars braking and swerving to avoid accidents that would have been difficult or impossible for a human driver to avoid.

To try to argue that the accident avoidance capabilities from the active safety features of the system are entirely negated when Autosteer, Auto Lane change, and the rest of the Autopilot system is engaged seems a profound stretch IMO, particularly in light of the very low incidence of crashes with Autopilot engaged.
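As a rough check on the figures quoted from the 2nd quarter report, the miles-per-accident numbers can be turned into accidents per million miles and compared directly. This is only a sketch of the arithmetic: the dictionary keys and function names are made up for illustration, and the only inputs are the four numbers in the quote. It also separates two ways of reading "55%": going from 1.41 to 2.19 million miles per accident is about 55% more miles between accidents, which works out to about 36% fewer accidents per mile.

```python
# Miles driven per registered accident, taken from the quoted Q2 figures.
MILES_PER_ACCIDENT = {
    "autopilot_engaged": 3_270_000,
    "active_safety_only": 2_190_000,
    "no_active_safety": 1_410_000,
    "nhtsa_us_average": 498_000,
}


def accidents_per_million_miles(miles_per_accident):
    """Convert 'one accident every N miles' into accidents per million miles."""
    return 1_000_000 / miles_per_accident


def percent_more_miles(miles_a, miles_b):
    """How much farther group A goes between accidents than group B, in percent."""
    return (miles_a / miles_b - 1) * 100


def percent_fewer_accidents(miles_a, miles_b):
    """How much lower group A's per-mile accident rate is than group B's, in percent."""
    return (1 - miles_b / miles_a) * 100


for group, miles in MILES_PER_ACCIDENT.items():
    print(f"{group:>20}: {accidents_per_million_miles(miles):.2f} accidents per million miles")

with_features = MILES_PER_ACCIDENT["active_safety_only"]
without_features = MILES_PER_ACCIDENT["no_active_safety"]
print(f"More miles between accidents: {percent_more_miles(with_features, without_features):.1f}%")
print(f"Fewer accidents per mile:     {percent_fewer_accidents(with_features, without_features):.1f}%")
```

None of this adjusts for road type, weather, vehicle age, or driver demographics, so it only restates the published ratios. It says nothing about what causes the differences.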

Southpasfan

Member

If it were possible to make an AI as advanced as myself then we'd already have FSD. You can look through my post history and see that I probably own a Model 3

This is exactly why Tesla's accident statistics are useless for determining whether Autopilot makes the vehicles safer or not.

Yeah, but that's not the question. The question is whether or not Autopilot makes the car safer. It's possible that Autopilot causes drivers (on average! not you) to become complacent and that causes more accidents than the system prevents. It's also possible that it does improve safety. And of course this could all change over time. My belief, without any real data (since we don't have any), is that the current system is probably a wash in terms of safety. My real concern is that as the system gets "better" it may actually become less safe.

My point was yes, people are asking, and debating, whether or not AP makes the car "safer."

But let me submit that is absolutely, NOT the question. I have, obviously, but bear with me, never had a fatal accident, nor an accident resulting in serious injury. So, as to me, there is no possible self driving feature that could make a car "safer" as to me.

What is happening is that Tesla, and Waymo, are working on progressing the tech to autonomous self driving. To get there, eventually, they will have to prove that a car without a driver is at least as statistically safe as a car with a driver.

But we are not at that point. Moreover, the real question is how are we going to get to that point? I would submit for consideration that autonomous self driving cannot be realized by any existing technology on a "0 to 100%" basis. Compare flight with a fixed wing aircraft. At some point technology had to go from "cannot get off the ground" to "take off, fly, and land" - and it pretty much had to be in one go.

I would say that autonomous self driving, on actual roads, not geo-fenced, with unlimited road conditions, can only be reached by increments.

The increments come from separating the physical requirements of piloting a car from the intellectual decision making of the driver. Tesla already has many of the physical requirements down. It's obvious which ones are not yet either perfected or authorized for use by the general fleet. It's the same with the intellectual decisions. The intellectual decisions are far more difficult.

AP is no more dangerous than driving without it. I mean, there is no question. No one who has any knowledge of what AP actually does could think, for example, that it has the ability to recognize a stop sign and stop at it. It doesn't.

It's "safer" than driving without it because the concentration level of a computer given the same inputs is superior to a human's. If AP randomly veered out of a lane due to dozing off, or texting, it would be the same as a human; it doesn't. And no feature will be rolled out until it's proven to be better than a human.

I just had my Model 3 drive around 350 miles from north of Stockton, CA, to LA. I had to move the wheel at least 150-200 times along the way, but I did not have to correct the car at all. It handled two major fwy interchanges impeccably, and handled around 50 instances of passing slower cars via lane changes as well.

In terms of ability, at no point did AP create any more danger than I would have created, and it handled staying in the lane, not tailgating, and speed control far, far better than I would have done. And that's with 2019 AP software.

The interesting question is when the software will change to where I do not have to move the wheel 150-200 times. I don't know. But what I do know is that regulators will not approve disabling the "move the wheel" feature until Tesla has enough data to show that it was unnecessary.

acoste

Member

we registered one accident for every 3.27 million miles driven in which drivers had Autopilot engaged. For those driving without Autopilot but with our active safety features, we registered one accident for every 2.19 million miles driven.

On the same road under the same conditions?

My point was yes, people are asking, and debating, whether or not AP makes the car "safer."

But let me submit that is absolutely, NOT the question.

I think it is the question. When an automaker releases a feature on a car that will always be a question. Does Autopilot reduce the number of accidents? I agree that Autopilot when used correctly is probably safer but I'd like to know if it's safer when used by actual human beings.

I would say that autonomous self driving, on actual roads, not geo-fenced, with unlimited road conditions, can only be reached by increments.

I agree but I think it can be tested without increasing the number of accidents.

In light of the dramatically lower accident rate with Autopilot hardware+active safety features, it is difficult to come up with a convincing explanation from driver demographics or otherwise why Tesla vehicles using the Autopilot system in driver assist mode have a much lower accident rate than those without it.

I'll give it a shot!

The explanation would be that active safety features work and there is plenty of evidence that they do. Do you think people use Autopilot more on the highway or in the city?

I'll give it a shot!

The explanation would be that active safety features work and there is plenty of evidence that they do. <snip>

Hey, maybe we're making some progress here! (I doubt it, but humor me.)

It's not just that generic active safety features work, but that cars with Autopilot hardware+Tesla's industry-leading active safety features (per European NCAP) have a 55% lower rate of accidents.

Let's review what these features are:

Reducing Forward Collisions: AEB and Forward Collision Warning

Reducing Unintended Lane Departures: Lane Departure Warning and Emergency Assistance

Obstacle-aware acceleration

Blind Spot Collision Warning

I believe we agree, but please confirm, that these features reduce accidents.

Now to enable basic Autopilot we replace Lane Departure Warning/Emergency Assistance and Blind Spot Collision Warning with Autosteer.

I don't think there is any question that Autosteer stays in the lane better than even well-above average human drivers. It is almost comical to drive along in Autopilot and watch how often regular drivers distracted by their phone, radio or whatever drift out of their lane.

So all things being equal, replacing lane departure warnings, etc. with Autosteer, while keeping Obstacle-aware acceleration and Automatic Emergency Braking, should make the car safer.

If we jump up to auto-lane change, I believe the same is true. While it is not always as graceful as good human drivers, it appears to be very safe. I am unaware of a single accident reported for a Tesla while undergoing an automatic lane change. That is quite remarkable since Tesla is always under a microscope. I think auto lane change is likely at least as safe and probably safer than lane changes by a human driver assisted by Tesla's blind spot warning, and likely considerably safer than lane changes without Tesla's blind spot warning.

Bringing this full circle, Tesla's Autopilot active safety features are the best in the business, with accidents reduced 55% in cars that have them versus similar cars (other Teslas) that don't. It is not hard to see how the features added when Autopilot is enabled are even better -- Autosteer (definitely) and auto lane change (probably).

Since the technology -- all of which is enabled by Autopilot -- makes the car inherently safer, it all comes down to an argument that driver inattention when you switch from active safety features to full Autopilot is so awful, and the system so incapable of operating without constant driver attention, that it wipes out all these benefits.

There is no data to support that argument. The best analysis to date (from Lex Fridman) suggests the contrary. https://hcai.mit.edu/tesla-autopilot-human-side.pdf

There are some issues I have with what you've said, but most of them don't contribute in a more meaningful way than has already been said dozens of times. But...

People speculate (and that's fine, hey, why not) that AP will result in making drivers less attentive. Actually, they do more than speculate, they assume.

In fact, they do more than assume and speculate, they provide video evidence from a user engagement study like Waymo did and like Lex Fridman did in a Model S. The problem is people are very predictable. You might assume they aren't, and you might assume you're somehow above average on the distribution. But statistically, we're all alike, and we all stop paying attention.

Finally, rather than assume a causal connection that isn't there (AP = less attention), how about this question, "which is better, a driver not paying full attention in a Tesla with full safety feature and AP enabled on a highway, or a driver not paying full attention with none of Tesla's sensors and no AP?" The answer is obvious.

The issue is we aren't in a black and white situation. Life has nuance. So the question is how an attentive driver compares with an attentive driver plus AP and with an inattentive driver plus AP. If you think an inattentive driver plus AP is safer than an attentive driver without AP, you haven't experienced the depth of confusing and dangerous behavior AP has to offer.

Complicating these factors is whether the user is properly educated or not. They can be completely attentive but ignorant, and be just as dangerous as an inattentive driver on AP. Or, like many of us here have said in other threads, we know where to expect AP to fail, behave confusingly, or just be dangerous, and we take over before then and continue on happily. That's the happy medium I like to be in: I know not to trust AP in lots of situations, so I just remove it as a factor during those times.

AlanSubie4Life

Efficiency Obsessed Member

There is no data to support that argument. The best analysis to date (from Lex Fridman) suggests the contrary. https://hcai.mit.edu/tesla-autopilot-human-side.pdf

Have you read that entire paper? It does not say what you think it says. @Lex_MIT is quite cognizant of many of the issues being discussed here. I do not think he would agree with this summary of his paper.

“Normalizing this number of Autopilot miles driven during the day in our dataset, we determine that such tricky disengagement occur on average every 9.2 miles of Autopilot driving. Under these conditions, the system limits reveal themselves regularly and the human driver “catches” the system and takes over. The natural engineering response to such data may be to strive to lower the rate of such “failures.” And yet, these imperfections are likely a significant contributing factor to why the drivers are maintaining functional vigilance. In other words, perfect may be the enemy of good when the human factor is considered. A successful AI-assisted system may not be one that is 99.99...% perfect but one that is far from perfect and effectively communicates its imperfections.”

This is Google’s concern in the originally posted video.

I recommend reading the paper through, rather than reading the Teslarati summary of the paper.

Oh, I've read the paper.

The take-home message: "The central observations in the dataset is that drivers use Autopilot for 34.8% of their driven miles, and yet appear to maintain a relatively high degree of functional vigilance."
