Can you explain how "2.0" will replace classical code for driving policy? Anything will do.

Let me ask you this:

Do you believe you can train an NN to understand its surroundings? (The way Tesla claims it has, and has backed that claim up with the FSD Beta releases.)

If no, then there is no further discussion that can be had!

If yes, then we can go on to the next part of the discussion!

So, assume you agree that an NN can be trained to understand its surroundings (obviously, that understanding is only as good as the dataset it was trained on and how well that dataset is maintained/labeled).

Take a look at the following scene (source: AIDRIVR, https://www.youtube.com/watch?v=wD_mF0OLJPs at 4:03).

Besides the "normal" stuff like cars, lanes, etc., the scene gives us a decent idea of what the car understands:

(A) It is a 25 mph zone. (B) There is a car parked down a driveway. (C)

Remember that the same NNs are already determining the drivable space as well.

In Beta 8 we saw this information more explicitly in the raw bounding-box view, which color-coded what each movable object was doing (driving/walking perpendicular to us, oncoming, moving in the same direction, etc.).
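That color coding amounts to bucketing each tracked object's motion relative to our own car. A minimal Python sketch, assuming each object already comes with a heading and speed estimate; the `classify_motion` name, the `Motion` categories and the 45°/135° thresholds are hypothetical illustrations, not Tesla's implementation:

```python
from enum import Enum


class Motion(Enum):
    SAME_DIRECTION = "same direction"
    ONCOMING = "oncoming"
    PERPENDICULAR = "perpendicular"
    STATIONARY = "stationary"


def classify_motion(ego_heading_deg: float, obj_heading_deg: float,
                    obj_speed_mps: float, min_speed_mps: float = 0.5) -> Motion:
    """Bucket an object's motion relative to the ego car's heading.

    Headings are in degrees; all thresholds are illustrative assumptions.
    """
    if obj_speed_mps < min_speed_mps:
        return Motion.STATIONARY
    # Smallest signed angle between the two headings, wrapped into [-180, 180).
    diff = abs((obj_heading_deg - ego_heading_deg + 180.0) % 360.0 - 180.0)
    if diff <= 45.0:
        return Motion.SAME_DIRECTION
    if diff >= 135.0:
        return Motion.ONCOMING
    return Motion.PERPENDICULAR
```

For example, `classify_motion(0, 180, 10)` gives `Motion.ONCOMING`, and `classify_motion(10, 350, 10)` gives `Motion.SAME_DIRECTION` because the wrap-around puts the two headings only 20° apart.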

If an NN can be trained to understand its surroundings, as we have seen in the FSD Beta, it can also be trained to act properly based on the information it has observed.

Driving policy can be boiled down to 2 actions (really!), and those actions must fit the environment the car is in:

1) acceleration, or the lack thereof (i.e. braking)

2) the direction we should go (i.e. steering)
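To make the "2 actions" point concrete: whatever produces them, a policy's output can be represented as just two numbers. A minimal Python sketch; the `Action` name, the units (m/s² and radians), the sign convention and the limits are all assumptions for illustration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """The two outputs of a driving policy (hypothetical units and limits)."""
    accel_mps2: float  # positive = accelerate, negative = brake
    steer_rad: float   # positive = steer left, negative = steer right (assumed)

    def clamped(self, max_accel: float = 3.0, max_brake: float = -8.0,
                max_steer: float = 0.5) -> "Action":
        """Clip the command to assumed physical limits of the car."""
        return Action(
            accel_mps2=min(max(self.accel_mps2, max_brake), max_accel),
            steer_rad=min(max(self.steer_rad, -max_steer), max_steer),
        )
```

Whether the numbers come from hand-written rules or from an NN, the car only ever receives something shaped like this; clamping keeps either source within what the hardware can do.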

Everything else depends on the environment:

* slow down for a speed bump vs. slam on the brakes because a pedestrian stepped into your lane

* turn the wheel a small amount to follow a slight curve, or more to make a left/right turn

* slow down/speed up because the speed limit changed

All of these depend on the car's observations.

In this example:

* observe the 25 mph sign: make sure you are in the ~25 mph ballpark

* observe a driveway (with or without cars): know that something could come out of it

* if the Audi in this scene were switching into our lane: observe the car and slow down to give it room
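For contrast, here is roughly what those three rules look like as classical hand-written code, i.e. the kind of logic a learned policy would replace. A sketch only: the `Observation` fields, the 20% driveway slowdown, the 10% gap for a merging car and the proportional gain are made-up values, not anything from Tesla's stack:

```python
from dataclasses import dataclass

MPH_TO_MPS = 0.44704


@dataclass
class Observation:
    """Hypothetical perception outputs for the scene described above."""
    speed_mps: float            # current ego speed
    speed_limit_mph: float      # from the detected speed-limit sign
    near_driveway: bool         # driveway nearby, with or without cars
    adjacent_car_merging: bool  # e.g. the Audi moving into our lane


def target_speed_mps(obs: Observation) -> float:
    """Hand-written ("classical") rules that pick a target speed."""
    target = obs.speed_limit_mph * MPH_TO_MPS  # stay in the speed-limit ballpark
    if obs.near_driveway:
        target *= 0.8  # something could pull out of the driveway
    if obs.adjacent_car_merging:
        target = min(target, obs.speed_mps * 0.9)  # open a gap for the merging car
    return target


def accel_command(obs: Observation, gain: float = 0.5) -> float:
    """Proportional controller toward the target speed, in m/s^2."""
    return gain * (target_speed_mps(obs) - obs.speed_mps)
```

Every new situation (speed bumps, pedestrians, unusual intersections) means another hand-tuned branch like these, which is exactly the scaling problem the learned approach is meant to avoid.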

If an NN can be trained to understand its environment well, it can be trained to act in that environment as well.
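One standard way to do that is imitation learning (behavior cloning): collect (observation, action) pairs from human driving and fit a model that maps one to the other, just as perception is fit to labeled images. A deliberately tiny Python sketch, assuming synthetic demonstrations; a linear model trained with plain SGD stands in for the NN so it runs on the standard library alone, and none of this reflects how Tesla actually trains anything:

```python
import random


def demonstrator(speed_error: float, near_driveway: float) -> float:
    """Synthetic stand-in for a human driver's acceleration command.

    speed_error = target speed - current speed (m/s); near_driveway is 0 or 1.
    """
    return 0.5 * speed_error - 1.0 * near_driveway


def make_dataset(n: int, seed: int = 0):
    """Sample (observation, action) pairs from the synthetic demonstrator."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = (rng.uniform(-5.0, 5.0), float(rng.random() < 0.3))
        data.append((x, demonstrator(*x)))
    return data


def train(data, epochs: int = 50, lr: float = 0.01):
    """Fit accel = w0*speed_error + w1*near_driveway + b by SGD on squared error."""
    w0 = w1 = b = 0.0
    for _ in range(epochs):
        for (x0, x1), y in data:
            err = (w0 * x0 + w1 * x1 + b) - y  # prediction minus demonstration
            w0 -= lr * err * x0
            w1 -= lr * err * x1
            b -= lr * err
    return w0, w1, b
```

Running `train(make_dataset(500))` should recover weights close to the demonstrator's 0.5 and -1.0, with a bias near 0: the model learns the behavior purely from examples, with no rule written by hand. A real system would replace the linear model with a deep network over camera features and far more varied driving data.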

I am pretty certain that the path planning we are seeing in the FSD Beta is an output of the NN.

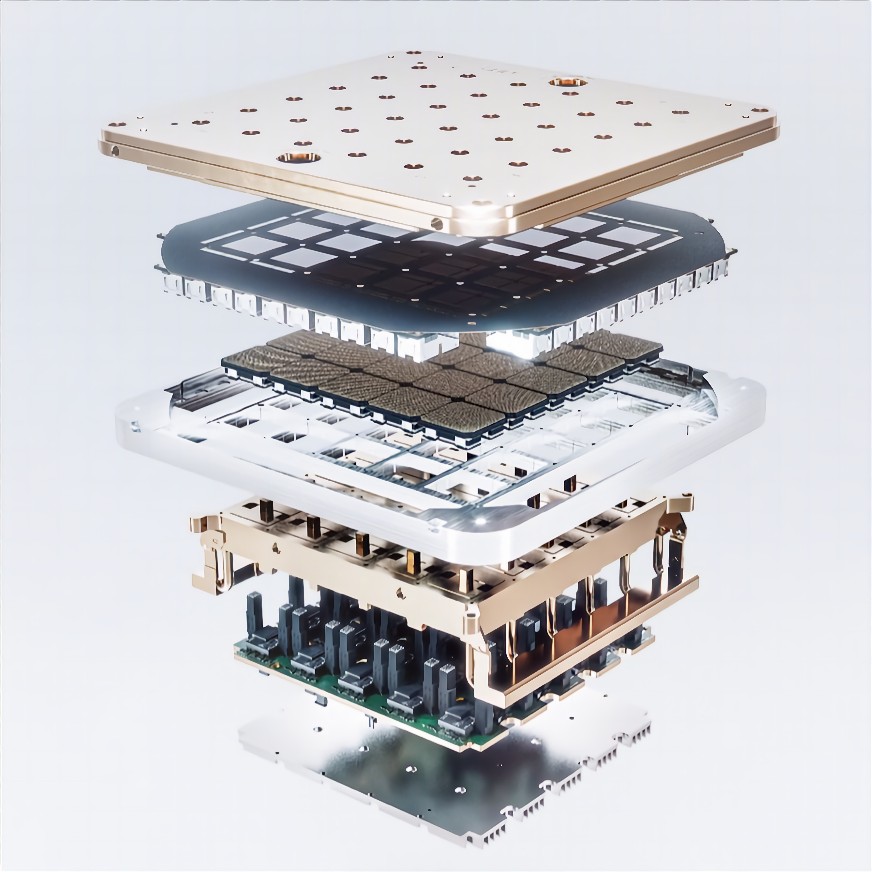

I think Dojo is what will be needed to train an NN not just to perceive the world around it, but also to behave in that world (i.e. driving policy).