Yeah, so visual input processing is the most compute-intensive part of full self-driving. Tesla has 8 cameras, and if you want to process each at 100 fps (one frame every 10 milliseconds), at the native HD resolution of the cameras, that's a lot of processing.

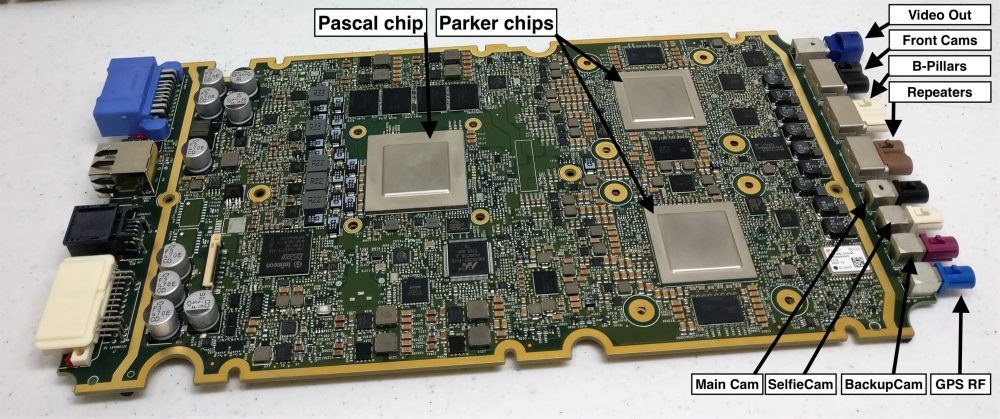

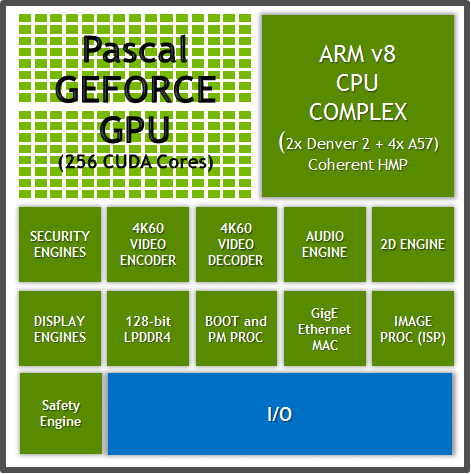
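To put rough numbers on the camera load described above, here's a back-of-the-envelope sketch. The 8 cameras and 100 fps are from the post; the 1280x960 resolution is just my assumption for illustration, so swap in whatever the real sensor resolution is.

```python
# Back-of-the-envelope camera load.
# Camera count and frame rate come from the post; the resolution is an
# ASSUMPTION for illustration only, not a confirmed spec.
NUM_CAMERAS = 8
FPS = 100
WIDTH, HEIGHT = 1280, 960  # assumed resolution


def camera_load(num_cameras=NUM_CAMERAS, fps=FPS, width=WIDTH, height=HEIGHT):
    """Return (frames per second, pixels per second, ms between frames per camera)."""
    frames_per_sec = num_cameras * fps
    pixels_per_sec = frames_per_sec * width * height
    frame_interval_ms = 1000 / fps
    return frames_per_sec, pixels_per_sec, frame_interval_ms


frames, pixels, interval = camera_load()
print(f"{frames} frames/s total, {pixels / 1e6:.0f} Mpixels/s, "
      f"one frame per camera every {interval:.0f} ms")
```

Under those assumptions the system has to ingest 800 frames per second in total, which is why a single processor ends up with so little time per frame.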

Right now, I believe they process everything, all frames from all 8 cameras, with a single discrete GPU on an Nvidia GP102-based board.

But now that they have their own discrete NN chip, the Tesla AI chip, in future iterations (HW4, HW5) they could use the following computer topology within the board, with very little additional cost (the AI chips probably cost only a few dollars to make each - most of the cost is in making the board):

Code:

    [AI Chip #1]   [AI Chip #2]
              \     /
             [GPU RAM]
              /     \
    [AI Chip #3]   [AI Chip #4]

I.e. four chips sharing, say, 16 GB of high-speed GPU RAM with multiple access channels, so that all four chips can use the RAM at the same time without slowing each other down.

(There's also the question of whether the Tesla AI chip uses separate RAM modules at all - a possible alternate design would be for the RAM to be integrated into the AI chip itself, as a sort of very fast on-die SRAM. This would have a number of other advantages as well, such as keeping the NN 'weight' data physically close to the functional units representing the 'neuron' nodes.)

But assuming that RAM is separate from the chip, the above board layout is a possible topology, where Chip 1 would handle cameras 1-2, Chip 2 would handle cameras 3-4, etc. While not all cameras have the same pixel count, the processing overhead is still similar and scales with the complexity of their neural networks.
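The camera-to-chip split described above can be sketched in a few lines. This is only an illustration of the idea; `assign_cameras` is a made-up helper name, and it assumes the cameras divide evenly among the chips.

```python
def assign_cameras(num_cameras=8, num_chips=4):
    """Split cameras into contiguous, equal-sized groups, one group per chip.

    Hypothetical helper for illustration; cameras and chips are numbered from 1.
    """
    if num_cameras % num_chips != 0:
        raise ValueError("cameras must divide evenly among chips")
    per_chip = num_cameras // num_chips
    return {
        chip: list(range((chip - 1) * per_chip + 1, chip * per_chip + 1))
        for chip in range(1, num_chips + 1)
    }


for chip, cams in assign_cameras().items():
    print(f"Chip {chip}: cameras {cams}")
```

A real design might instead balance the groups by how heavy each camera's network is rather than by camera count, but the even split is the simplest version of the topology.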

Note that this way the total computing throughput of the system can be increased by a factor of 2x, 4x or 8x, depending on how many chips are on the board, with very little additional cost other than a higher power envelope.
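Here's a quick sketch of what that scaling would mean for the time each chip gets per frame. It assumes ideal linear scaling (no contention on the shared RAM, cameras split evenly), which real hardware won't fully achieve, and `per_frame_budget_ms` is just a name I made up.

```python
def per_frame_budget_ms(num_chips, num_cameras=8, fps=100):
    """Time one chip can spend on each frame, in milliseconds.

    Assumes cameras are split evenly and scaling is perfectly linear,
    i.e. no slowdown from sharing RAM. Illustrative only.
    """
    cameras_per_chip = num_cameras / num_chips
    frames_per_sec_per_chip = cameras_per_chip * fps
    return 1000 / frames_per_sec_per_chip


for chips in (1, 2, 4, 8):
    print(f"{chips} chip(s): {per_frame_budget_ms(chips):.2f} ms per frame")
```

With one chip per camera, each chip would get the full 10 ms frame interval to itself; with a single chip handling all 8 cameras, the budget shrinks to an eighth of that.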

I'm reasonably sure HW3 is going to feature one AI chip (they want to keep it simple initially, and it appears the chip is plenty fast already) - if it features two chips it will be for redundancy and fail-over perhaps, not to increase performance.

All of this is speculation though - I'm sure we'll hear more about the details once the HW3 release gets closer ...