Thanks for sharing this. Useful for thinking about why visual HD maps are important:

“While other sensors such as radar and LiDAR may provide redundancy for object detection – the camera is the only real-time sensor for driving path geometry and other static scene semantics (such as traffic signs, on-road markings, etc.). Therefore, for path sensing and foresight purposes, only a highly accurate map can serve as the source of redundancy.”

Last night, I was trying to figure this out with regard to Mobileye’s approach: unless HD maps use human annotation, how do they provide redundancy, since the same neural networks are doing inference for HD mapping and real-time perception?

Other than Mobileye, I think most (all?) companies that make visual HD maps upload images, and then get humans to label them. The redundancy comes from the human labeler. Since Mobileye just uploads a few kilobytes of metadata about what the car thinks it sees, that redundancy isn’t there.

The best answer I could come up with to justify Mobileye’s approach is that if a vehicle makes a real-time perception error 1 in 20 times, then it can weigh that inference against the HD maps, which represent other vehicles coming to a different conclusion 19 out of 20 times. But I’m not sure if this makes sense in practice.
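To put rough numbers on that intuition, here is a minimal sketch of my own, not anything Mobileye has described. It assumes the map entry is a simple majority vote over several drive-bys, that each pass is wrong 1 in 20 times, and, crucially, that errors are independent between passes. That last assumption is probably too generous when every car runs the same neural network, which is exactly my worry. The function name `majority_wrong_probability` is made up for illustration.

```python
from math import comb


def majority_wrong_probability(n_passes: int, per_pass_error: float) -> float:
    """Probability that a strict majority of n independent passes is wrong.

    Assumption: each pass errs independently with the same probability.
    Correlated errors (same network, same bad lighting, same faded paint)
    would make the real number worse than this.
    """
    threshold = n_passes // 2 + 1  # smallest count that forms a strict majority
    return sum(
        comb(n_passes, k)
        * per_pass_error**k
        * (1 - per_pass_error) ** (n_passes - k)
        for k in range(threshold, n_passes + 1)
    )


if __name__ == "__main__":
    per_pass_error = 1 / 20  # the hypothetical 1-in-20 error rate from above
    for n_passes in (1, 5, 21):
        p = majority_wrong_probability(n_passes, per_pass_error)
        print(f"{n_passes:>2} passes: P(map is wrong) = {p:.3g}")
```

Under those assumptions, the chance of the aggregated map being wrong falls off quickly as more cars drive the same stretch. If the errors are systematic rather than random, adding passes doesn't help, and the map just memorizes the network's mistake.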

For example, what if the environment changes? Will the vehicle assume its real-time perception is wrong, and the (now outdated) HD maps are right? How do you deal with disagreements between real-time perception and HD maps (which, without annotation, are essentially non-real-time perception)?

This also got me thinking about lidar:

“While other sensors such as radar and LiDAR may provide redundancy for object detection – the camera is the only real-time sensor for driving path geometry and other static scene semantics (such as traffic signs, on-road markings, etc.).”

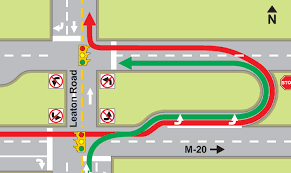

If one of the hard parts of computer vision for vehicle autonomy is recognizing depthless features of the environment like painted lane lines and traffic signs, then this provides context for Elon’s comments about lidar and cameras. To get to full autonomy, you need the car to flawlessly perceive lane lines, traffic signs (e.g. stop signs), traffic lights, turn signals, crosswalks, painted arrows, and so on. You need advanced camera-based vision.

Once you get to that point with camera-based vision, your neural networks might be so good at object detection (e.g. vehicle, pedestrian, and cyclist detection) using camera input that you no longer need lidar to achieve human-level or superhuman performance.

I say “human-level” because even if object detection is only as good as the average human, autonomous cars will still be safer because their reaction time is faster. Human reaction time under ideal conditions is 200-300 milliseconds. Braking reaction time might be more like 530 milliseconds. For people age 56 and up, the same study found a reaction time of 730 milliseconds. The actual number might be 2 seconds+. In contrast, we already have AEB systems with reaction times below 200 milliseconds.
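To get a feel for what those reaction times mean on the road, here is a quick back-of-the-envelope sketch. It just multiplies speed by reaction time to get the distance covered before braking even starts. The 100 km/h speed is my own assumption for illustration, and `reaction_distance_m` is a made-up helper name.

```python
def reaction_distance_m(speed_kmh: float, reaction_time_s: float) -> float:
    """Distance in meters covered at constant speed before braking begins."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * reaction_time_s


if __name__ == "__main__":
    SPEED_KMH = 100.0  # assumed highway speed, not from any study

    # Reaction times quoted above, in seconds.
    reaction_times_s = {
        "AEB (upper bound)": 0.200,
        "human braking": 0.530,
        "human braking, age 56+": 0.730,
        "human, pessimistic": 2.000,
    }

    for label, seconds in reaction_times_s.items():
        distance = reaction_distance_m(SPEED_KMH, seconds)
        print(f"{label:<24} {seconds:.3f} s -> {distance:.1f} m")
```

At that assumed speed, a 200 ms reaction covers about 5.6 m, 530 ms about 14.7 m, 730 ms about 20.3 m, and 2 seconds about 55.6 m, all before the brakes are touched.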

Factoring in non-ideal conditions (distracted, drowsy, or drunk driving), the average for humans is probably much worse.