There was an interesting article posted on Seeking Alpha today arguing that cameras will win the "LIDAR vs cameras" debate for autonomous vehicles - but not in the way they're currently used:

Tesla: Cameras Might Win The Autonomous Race After All - Tesla Motors (NASDAQ:TSLA) | Seeking Alpha

In the autonomous space today, you have two very different philosophies - those based primarily around LIDAR (such as Google/Waymo) and those based around cameras (like Tesla). Versus cameras, LIDAR:

* Is much more expensive (formerly ~$75k per unit, now ~$7.5k, according to Google), versus a low double-digit dollar figure per camera.

* Requires a bulky, awkward spinning rig mounted to the top of the vehicle

* Produces a very detailed, low-error model of the world around it (camera-based models are prone to mis-stitching problems)

* May still require cameras for precise identification of what it detects

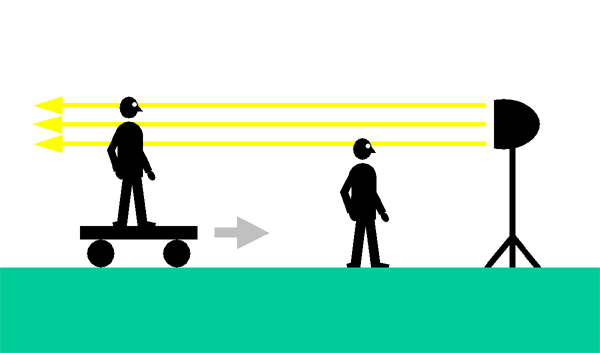
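The point that camera-built models are more error-prone can be made concrete with the textbook model for depth from a rectified stereo camera pair, one common form of camera-based photogrammetry. This is a minimal sketch, not Tesla's pipeline: the focal length, baseline and half-pixel matching error are hypothetical numbers chosen for illustration.

```python
# Sketch: depth from a rectified stereo camera pair and how matching errors
# grow with range. Focal length, baseline and disparity error are hypothetical
# illustrative values, not the parameters of any real vehicle's cameras.


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float) -> float:
    """Approximate depth error caused by a disparity (pixel-matching) error.

    Differentiating Z = f*B/d gives |dZ| ~= Z**2 / (f*B) * |dd|, so a fixed
    matching error costs quadratically more depth accuracy as range grows.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f, b = 1000.0, 0.3  # hypothetical: 1000 px focal length, 30 cm baseline
    for z in (10.0, 50.0, 100.0):
        err = depth_error(f, b, z, disparity_err_px=0.5)
        print(f"{z:6.1f} m -> +/- {err:.2f} m")
```

With these assumed numbers, a half-pixel mismatch means roughly 0.17 m of depth error at 10 m but about 16.7 m at 100 m, which is why a direct range measurement is attractive at highway distances.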

LIDAR, however, appears to be evolving into a camera-based technology: time-of-flight (ToF) imaging. In this technology, light is emitted in bright pulses across a broad area, and the cameras record not (just) how much light they receive but, more specifically, when they receive it, with sub-nanosecond precision. There's no spinning rig, vertical resolution is greater, and, most importantly, the hardware can be produced with the same sort of semiconductor manufacturing technology that makes cameras so cheap. The same cameras can also double as colour-imaging cameras for identification.
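Why the timing precision matters: in the idealized pulsed case, range is half the round-trip distance travelled by light, so every nanosecond of timing uncertainty corresponds to roughly 15 cm of range. The sketch below is that idealized calculation only; real ToF sensors use a variety of pulsed and phase-based schemes that aren't modelled here.

```python
# Sketch of the idealized pulsed time-of-flight (ToF) principle: the pulse
# travels to the target and back, so range = speed of light * round-trip / 2.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Range to the target given the measured round-trip time of the pulse."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def range_resolution_m(timing_precision_s: float) -> float:
    """Smallest range difference distinguishable at a given timing precision."""
    return SPEED_OF_LIGHT_M_PER_S * timing_precision_s / 2.0


if __name__ == "__main__":
    # A target about 30 m away returns the pulse after roughly 200 ns.
    print(f"{tof_range_m(200e-9):.2f} m")
    # Per-pixel timing precision sets depth precision.
    for precision in (1e-9, 100e-12):
        print(f"{precision * 1e12:.0f} ps -> {range_resolution_m(precision) * 100:.1f} cm")
```

At 1 ns of timing precision the range resolution is about 15 cm; at 100 ps it is about 1.5 cm, which is why sub-nanosecond timing is the key requirement.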

In short, the article argues that cameras will win the day, but not the type Tesla is using, leaving Tesla with a large liability for underdelivering on driving capabilities relative to competitors that emerge with ToF camera-based systems.

It's an interesting argument, although I'm not entirely convinced, for a number of reasons.

* What you really want is a fusion of 3D geometry and identification of what you're seeing. A line on a road, text on a sign, a lit brake light and so forth have no detectable 3D geometry. Is that thing sticking out ahead some leaves on a tree or a metal beam? Is that a paper bag on the road or a rock? If Tesla eventually has to switch to ToF cameras to build 3D models of its surroundings, it doesn't lose any of the progress it has made with a system whose 3D models are built with photogrammetry; it just maps its imagery onto better models.

* Tesla's liability isn't so much the cost of refunding FSD as the cost of hardware retrofits if the current hardware proves inadequate. Swapping out cameras is almost certainly much cheaper than refunding thousands of dollars per car. Any vehicles that aren't retrofitted continue to work, just with the (potentially) poorer photogrammetry-based 3D modelling.

* Unlike its competitors, Tesla has an early start that gives it reams of data collected from its vehicles' sensors in real-world environments: radar, ultrasound, and imagery. Even if competitors choose a better 3D-mapping technology, they remain well behind in the size of their datasets. And data is critical: if you want to test a new version of your software, you can validate it against every drive in your dataset to make sure it performs as intended.

* We don't actually know what sorts of cameras Tesla plans to incorporate, or into which models. The liability for upgrading existing Model S and Model X cars would be vastly lower than for, say, a couple million Model 3s.
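The first point above, that better 3D models don't throw away camera-side progress, can be illustrated with a standard pinhole projection: whichever sensor produces the 3D points (photogrammetry today, perhaps ToF later), each point is projected into the camera image and picks up whatever the image classifier decided about that pixel. This is a minimal sketch, not Tesla's software; it assumes the points are already in the camera's coordinate frame and that a per-pixel label image exists, and the function names, intrinsics and tiny label grid are all hypothetical.

```python
# Sketch: attach camera-derived labels to 3D points via a pinhole projection.
# Hypothetical setup: points are already in the camera frame (x right, y down,
# z forward, metres) and `labels` is a per-pixel class grid from some classifier.
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def project(point: Point3D, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Project a 3D point to integer pixel (u, v); None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return int(round(u)), int(round(v))


def label_points(points: Sequence[Point3D], labels: List[List[str]],
                 fx: float, fy: float, cx: float, cy: float) -> List[Optional[str]]:
    """Look up the image label for each 3D point; None if it falls off-image."""
    height, width = len(labels), len(labels[0])
    out: List[Optional[str]] = []
    for p in points:
        pix = project(p, fx, fy, cx, cy)
        if pix is None:
            out.append(None)
            continue
        u, v = pix
        out.append(labels[v][u] if 0 <= u < width and 0 <= v < height else None)
    return out


if __name__ == "__main__":
    labels = [
        ["sky", "sky", "sky"],
        ["road", "bag", "road"],
        ["road", "rock", "road"],
    ]
    pts = [(0.0, 0.0, 5.0), (0.0, 0.5, 5.0), (0.0, 0.0, -1.0),
           (5.0, 0.0, 1.0), (-0.5, -0.5, 5.0)]
    print(label_points(pts, labels, 10.0, 10.0, 1.0, 1.0))
    # prints ['bag', 'rock', None, None, 'sky']
```

Swapping the source of `pts` for a more accurate depth sensor changes nothing on the labelling side, which is the sense in which the imagery work carries over.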

Tesla took a big hit with the Mobileye divorce and is still playing catch-up with AP2. Its insistence on technology that was affordable enough to put on every vehicle without serious design compromises left it with no choice but cameras; traditional LIDAR has simply been too expensive and awkward. But now that a potentially useful "upgrade" may be coming into play, will Tesla switch gears?
