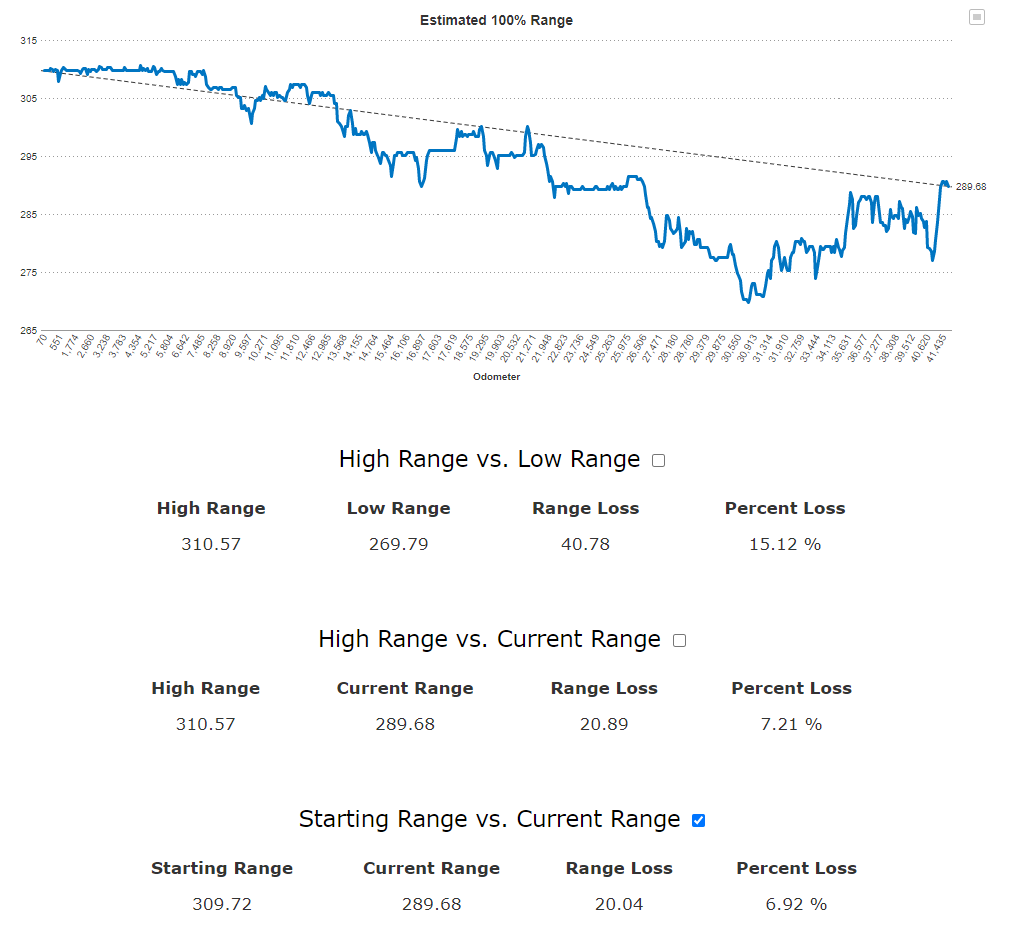

Like many others, I have been concerned about the loss of indicated range at 100% charge on one of my Model 3s. My P3D (build date 9/13/2018, delivery date 10/8/2018) had dropped to 270.3 miles at 100% charge by January 20, 2020, at about 30,700 miles, a loss of 40.8 miles since the car was new.

I posted earlier about going to the service center to talk with them about battery degradation, which I did on March 9, 2020. It was a great service appointment: the techs at the Houston Westchase service center listened to my concerns and promised a follow-up call from the lead technician on the virtual tech team. I detailed this service visit in the following post:

Reduced Range - Tesla Issued a Service Bulletin for possible fix

While that service visit was great, the real meat of addressing the problem came when I spoke to the virtual tech team lead. He told me a lot about the Model 3 battery and its battery management system (BMS). Using what he told me, I put together a plan to address the problem myself.

So here is the deal on the Model 3 battery and why many of us might be seeing this capacity degradation.

The BMS is responsible not only for charging and monitoring the battery but also for computing the estimated range. It does this by correlating the battery's terminal voltage (and the terminal voltage of each group of parallel cells) with capacity. The BMS constantly tries to refine and calibrate that voltage-to-capacity relationship so it can display the remaining miles.
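To make the voltage-to-range idea concrete, here is a minimal sketch that interpolates a rested voltage into a state of charge and scales it by a full-pack range estimate. The `OCV_TABLE` values and both function names are hypothetical and purely illustrative; Tesla's actual tables and logic are not public.

```python
from bisect import bisect_left

# Hypothetical open-circuit-voltage table for one parallel group: (volts, state of charge).
# Illustrative values only; these are NOT Tesla's calibration data.
OCV_TABLE = [
    (3.00, 0.00),
    (3.45, 0.10),
    (3.60, 0.30),
    (3.75, 0.50),
    (3.90, 0.70),
    (4.05, 0.90),
    (4.20, 1.00),
]


def soc_from_ocv(voltage: float) -> float:
    """Linearly interpolate state of charge (0..1) from a rested voltage."""
    volts = [v for v, _ in OCV_TABLE]
    if voltage <= volts[0]:
        return OCV_TABLE[0][1]
    if voltage >= volts[-1]:
        return OCV_TABLE[-1][1]
    i = bisect_left(volts, voltage)  # first table entry >= voltage
    v0, s0 = OCV_TABLE[i - 1]
    v1, s1 = OCV_TABLE[i]
    return s0 + (s1 - s0) * (voltage - v0) / (v1 - v0)


def estimated_range_miles(voltage: float, full_range_miles: float) -> float:
    """Displayed range = interpolated SoC times the BMS's current full-range estimate."""
    return soc_from_ocv(voltage) * full_range_miles


print(round(estimated_range_miles(3.75, 290.0), 1))  # 145.0
```

If the full-range estimate itself drifts low, every displayed range value drifts low with it, even when the voltage reading is accurate.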

For the BMS to execute a calibration computation, it needs data. The primary data it needs to do this is the Open Circuit Voltage (OCV) of the battery and of each parallel group of cells. The BMS takes these OCV readings whenever it can, and when it has enough of them, it runs a calibration computation. This updates the BMS's estimate of capacity versus battery voltage. If the BMS goes a long time without running calibration computations, its estimate of the battery's capacity can drift away from the battery's actual capacity. The BMS errs on the conservative side so that drivers will not run out of charge before the indicator reads 0 miles, so the drift is almost always in the direction of estimated capacity being lower than actual capacity.
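One common textbook way to turn rested readings into a capacity estimate (not necessarily what Tesla's BMS does) is to divide the charge counted in or out between two OCV readings by the change in state of charge between them. The sketch below assumes a cumulative amp-hour counter and uses hypothetical thresholds (`MIN_READINGS`, `MIN_SOC_SPAN`) to stand in for "enough" readings.

```python
from dataclasses import dataclass
from itertools import combinations
from statistics import median

MIN_READINGS = 4     # hypothetical: readings needed before a calibration runs
MIN_SOC_SPAN = 0.15  # hypothetical: skip pairs whose SoC values are too close together


@dataclass
class OcvReading:
    soc: float         # state of charge inferred from the rested voltage (0..1)
    counter_ah: float  # cumulative net amp-hours into the pack when the reading was taken


def calibrate_capacity_ah(readings):
    """Estimate pack capacity in Ah from pairs of rested readings.

    Returns None until there are enough readings with enough SoC spread.
    """
    if len(readings) < MIN_READINGS:
        return None
    estimates = []
    for a, b in combinations(readings, 2):
        d_soc = b.soc - a.soc
        if abs(d_soc) < MIN_SOC_SPAN:
            continue  # a tiny SoC difference makes the ratio noisy or undefined
        estimates.append((b.counter_ah - a.counter_ah) / d_soc)
    return median(estimates) if estimates else None


# Hypothetical 250 Ah pack: each 20% drop in SoC removes 50 Ah.
spread = [OcvReading(0.80, 1000.0), OcvReading(0.60, 950.0),
          OcvReading(0.40, 900.0), OcvReading(0.20, 850.0)]
print(round(calibrate_capacity_ah(spread), 1))  # 250.0
```

Taking the median over all usable pairs is one simple way to damp a single bad reading; a real BMS would likely weight readings by confidence instead.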

So, when does the BMS take OCV readings? To take a set of OCV readings, the main HV contactor must be open, and the voltage of every group of parallel cells inside the pack must stabilize. How long does that take? Interestingly enough, the voltages take a lot longer to stabilize in the Model 3 than in the Model S or X, and the reason is the battery construction. All Tesla batteries have a resistor in parallel with every parallel group of cells. These resistors are there for pack balancing: when charging to 100%, they bleed a little charge from the higher groups so the lower groups can catch up, bringing all the groups closer together in state of charge. The drawback is that these resistors are the primary cause of vampire drain.
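The two conditions above (contactor open, voltages settled) can be written as a simple gate. This is a sketch only: `RestState`, the settle time, and the dV/dt limit are hypothetical stand-ins for whatever criteria the real BMS uses.

```python
from dataclasses import dataclass


@dataclass
class RestState:
    contactor_open: bool         # main HV contactor state
    minutes_since_open: float    # how long the pack has been resting
    max_dv_dt_mv_per_min: float  # fastest voltage change across all parallel groups


def can_take_ocv_reading(state: RestState, settle_minutes: float,
                         dv_dt_limit_mv_per_min: float = 0.1) -> bool:
    """True only if the contactor is open and every group's voltage has settled.

    settle_minutes and dv_dt_limit_mv_per_min are hypothetical thresholds.
    """
    if not state.contactor_open:
        return False  # e.g. Sentry mode keeps the contactor closed
    rested_long_enough = state.minutes_since_open >= settle_minutes
    voltages_flat = state.max_dv_dt_mv_per_min <= dv_dt_limit_mv_per_min
    return rested_long_enough and voltages_flat


print(can_take_ocv_reading(RestState(True, 200, 0.05), settle_minutes=180))  # True
```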

Because Tesla wanted the Model 3 battery to be as efficient as possible, it set out to minimize vampire drain. One step toward that was increasing the value of all of these resistors. The resistors in the Model 3 packs are apparently around 10x the value of the ones in the Model S/X packs. So what does this do to the BMS? It makes the BMS wait a lot longer to take OCV readings, because the voltages take about 10x longer to stabilize. Apparently, the voltages in the S/X packs stabilize enough for OCV readings within 15-20 minutes, but in the Model 3 this can take 3+ hours.
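The claim that a 10x resistor means 10x the settling time amounts to treating settling like an RC time constant (tau = R * C), which grows in proportion to R. Under that assumption, a quick check shows that 15-20 minutes becomes roughly 2.5-3.3 hours, in line with the "3+ hours" figure. `scaled_settle_minutes` is a hypothetical helper for illustration.

```python
def scaled_settle_minutes(base_minutes: float, resistance_ratio: float) -> float:
    """Settling time assuming it scales linearly with the balancing-resistor value (tau = R * C)."""
    return base_minutes * resistance_ratio


for sx_minutes in (15, 20):  # S/X settling time quoted in the post
    m3_minutes = scaled_settle_minutes(sx_minutes, 10)  # ~10x resistor value
    print(f"S/X {sx_minutes} min -> Model 3 about {m3_minutes:.0f} min ({m3_minutes / 60:.1f} h)")
```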

This means that the S/X BMS can run calibration computations much more easily and much more often than the Model 3 BMS. 15-20 minutes with the contactor open is enough to get a set of OCV readings. This can happen while you're out shopping or at work, so the BMS gets OCV readings at various states of charge, both high and low. This is great data for the BMS, and it lets the BMS run a good calibration fairly often.

On the Model 3, this doesn't happen. With frequent short trips, no OCV readings ever get taken, because the voltages don't stabilize before you drive the car again. Also, many of us run Sentry mode whenever we're away from home, and Sentry mode keeps the contactor engaged, so no OCV readings can be taken no matter how long you wait. For many Model 3s, the only time OCV readings get taken is at home after a charge completes, since that is the only time the car gets to open the contactor and sleep. Only then, about 3 hours later, do the OCV readings get taken.
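A small simulation makes the S/X versus Model 3 difference concrete. It assumes a hypothetical weekday for a commuter who charges to 80% every night and runs Sentry mode at work, and it counts a parking session as yielding OCV readings only if Sentry is off and the car stays parked at least as long as the settle time (20 minutes for S/X, 180 minutes for the Model 3, per the figures above). The `Park` record and all numbers are illustrative.

```python
from dataclasses import dataclass

SX_SETTLE_MIN = 20   # upper end of the 15-20 minutes quoted for S/X
M3_SETTLE_MIN = 180  # "3+ hours" quoted for the Model 3


@dataclass
class Park:
    place: str
    minutes: float   # how long the car sits without driving
    sentry_on: bool  # Sentry mode keeps the HV contactor closed
    soc: float       # state of charge while parked


def ocv_reading_levels(parks, settle_minutes):
    """SoC of every parking session that could yield OCV readings."""
    return [p.soc for p in parks
            if not p.sentry_on and p.minutes >= settle_minutes]


# Hypothetical weekday for a commuter who charges to 80% every night.
day = [
    Park("work", 540, sentry_on=True, soc=0.70),
    Park("store", 40, sentry_on=False, soc=0.62),
    Park("home after charging", 600, sentry_on=False, soc=0.80),
]

print("S/X:", ocv_reading_levels(day, SX_SETTLE_MIN))      # [0.62, 0.8]
print("Model 3:", ocv_reading_levels(day, M3_SETTLE_MIN))  # [0.8]
```

Turning Sentry off at work would add a reading at 70% for the Model 3 as well, since the 540-minute workday exceeds even the long settle time.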

But that means the OCV readings are ALWAYS taken at your charge level. If you always charge to 80%, the only data the BMS ever collects is OCV readings at 80%. That isn't enough data to make the calibration computation accurate. So even though readings are being taken and the calibration computation is periodically run, the BMS's accuracy never improves, and the estimated and actual capacity continue to drift apart.

So, knowing all of this, here's what I did:

1. I made a habit of letting the BMS take OCV readings whenever possible. I turned off Sentry mode at work so that OCV readings could be taken there. I made sure TeslaFi was set to allow the car to sleep, because if the car isn't asleep, OCV readings can't be taken.

2. I quit charging every day. My round trip to work is about 20% of the battery's capacity, and I used to charge to 90%. I changed my standard charge to 80% and began charging the car at night only every 3 days. So day 1 gets OCV readings at 80% (after the charge completes), day 2 at about 60% (after 1 work trip), and day 3 at about 40% (after 2 work trips). After the third work trip I arrive back home with about 20% charge, and if the next day isn't Saturday, I charge. If the next day is Saturday (I normally don't go anywhere far on Saturday), I delay the charge by one more day, allowing the BMS to get OCV readings at 20% on that 4th night. So now my BMS gets data from various states of charge across the battery's whole range.

3. Periodically (once a month or so), I charge to 95%, then let the car sleep for 6 hours so it gets OCV readings at 95%. Don't do this at 100%, as it's not good for the battery to sit at 100% charge.

4. If I'm going on a long drive (i.e., a road trip), I charge to 100% to balance the battery, then drive. I also try to time it so that I get back home with around 10% charge, and if I manage that, I don't charge right away. Instead, I let the car sleep 6 hours so it gets OCV readings at 10%.
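The every-3-days routine in step 2 can be simulated to see which overnight rest levels it produces. This is a sketch under simplifying assumptions: weekend days use no charge, the car is plugged in when it arrives home at or below 20% (unless the next day is Saturday), and `overnight_rest_levels` is a hypothetical helper, not part of TeslaFi or any Tesla tool.

```python
WEEK = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
TRIP_SOC = 0.20    # work round trip, per the post
CHARGE_TO = 0.80   # standard charge level, per the post
PLUG_IN_AT = 0.20  # assumption: charge on arriving home at or below this level


def overnight_rest_levels(start_day, days, start_soc=CHARGE_TO):
    """Return (day, SoC the car sleeps at that night) for the every-3-days routine.

    Simplifying assumption: Saturday and Sunday use no charge.
    """
    first = WEEK.index(start_day)
    soc = start_soc
    nights = []
    for offset in range(days):
        today = WEEK[(first + offset) % 7]
        tomorrow = WEEK[(first + offset + 1) % 7]
        if today not in ("Sat", "Sun"):
            soc = round(soc - TRIP_SOC, 2)  # one work round trip
        if soc <= PLUG_IN_AT and tomorrow != "Sat":
            soc = CHARGE_TO  # charge tonight; the car then rests at 80%
        nights.append((today, soc))
    return nights


# Car was charged to 80% on Tuesday night.
for day, soc in overnight_rest_levels("Wed", 7):
    print(day, f"{soc:.0%}")
```

In this run the car rests at 60%, 40%, and 20% before the Saturday charge, then at 80%, so a single week already touches four distinct levels. Steps 3 and 4 add occasional rests near 95% and 10% on top of these.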

These steps allowed the BMS to get many OCV readings spanning the battery's entire state-of-charge range, which gives it good data for an accurate calibration computation.
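One rough way to check whether readings "span the entire state of charge" is to bin the rest levels and count how many bins contain at least one reading. The 20%-wide bins and the `soc_coverage` helper are arbitrary, hypothetical choices for illustration; the `plan` list combines the rest levels from step 2 with the 95% rest from step 3 and the roughly 10% rest from step 4.

```python
def soc_coverage(rest_levels_pct, bin_width=20):
    """Fraction of SoC bins (0-20%, 20-40%, ...) holding at least one rested reading."""
    n_bins = 100 // bin_width
    covered = {min(int(pct) // bin_width, n_bins - 1)  # 100% goes in the top bin
               for pct in rest_levels_pct}
    return len(covered) / n_bins


daily_80 = [80] * 30             # charge to 80% every night for a month
plan = [80, 60, 40, 20, 95, 10]  # rest levels from steps 2-4 of the post
print(soc_coverage(daily_80), soc_coverage(plan))  # 0.2 1.0
```

Integer percentages are used on purpose: dividing floats such as 0.6 / 0.2 gives 2.9999999999999996, which would land a 60% reading in the wrong bin.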

So what were the results?

On 1/20/2020 at 30,700 miles, I was down to 270 miles of full range, a loss of 40.8 miles (15.1%). The first good, accurate recalibration occurred on 4/16/2020 at 35,600 miles and brought the full range up to 286 miles. Another one occurred on 8/23/2020 at 41,400 miles and brought the range up to 290 miles, now only a 20-mile loss (6.9%).

Note that getting just two accurate calibration computations from the BMS took 7 months and 11,000 miles.
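The arithmetic behind these figures can be checked quickly. The percentages quoted above match the loss divided by the current range; dividing by the implied new-car range (current range plus loss) gives somewhat smaller numbers. The dates and odometer readings are the ones quoted above; `loss_percentages` is a hypothetical helper.

```python
from datetime import date


def loss_percentages(current_miles: float, loss_miles: float) -> tuple[float, float]:
    """Return loss as a percent of the current range and of the implied new-car range."""
    new_miles = current_miles + loss_miles
    return 100 * loss_miles / current_miles, 100 * loss_miles / new_miles


# (current 100% range, stated loss) pairs quoted in the post
for current, loss in [(270.3, 40.8), (290.0, 20.0)]:
    of_current, of_new = loss_percentages(current, loss)
    print(f"{current} mi, {loss} mi lost: {of_current:.1f}% of current, {of_new:.1f}% of new")

# Elapsed time and distance between the first and last data points quoted in the post
days = (date(2020, 8, 23) - date(2020, 1, 20)).days
miles = 41_400 - 30_700
print(days, round(days / 30.44, 1), miles)  # 216 days (about 7.1 months) and 10,700 miles
```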

So, to summarize:

1. This issue is primarily an indication/estimation problem, not real battery capacity loss.

2. Constant Sentry mode use contributes to this problem, because the car never sleeps, so no OCV readings get taken.

3. Long voltage stabilization times in the Model 3 prevent OCV readings from getting taken frequently, contributing to BMS estimation drift.

4. Charging every day means that the OCV readings that do get taken are always at the same charge level, which makes the BMS calibration inaccurate.

5. Multiple accurate calibration cycles may need to happen before the BMS accuracy improves.

6. It takes a long time (many OCV readings) before the BMS runs a calibration computation, so the procedure can take months.

I would love for others to perform this procedure and confirm whether it works for them, especially anyone whose Model 3 shows a lot of apparent degradation. It will take months, but I think we can prove that this procedure works.[/QUOTE]

Great work! I love the thread!