I don't see a firm ID. Clocks are based on whatever the bootloader sets them to at boot, though it appears the DTB pegs them at up to 2.4GHz?

Did this image happen to come from a development/beta vehicle? Some of the kernel debug parameters make sense in a production environment, but others really only make sense during development because of the potential negative performance impact.

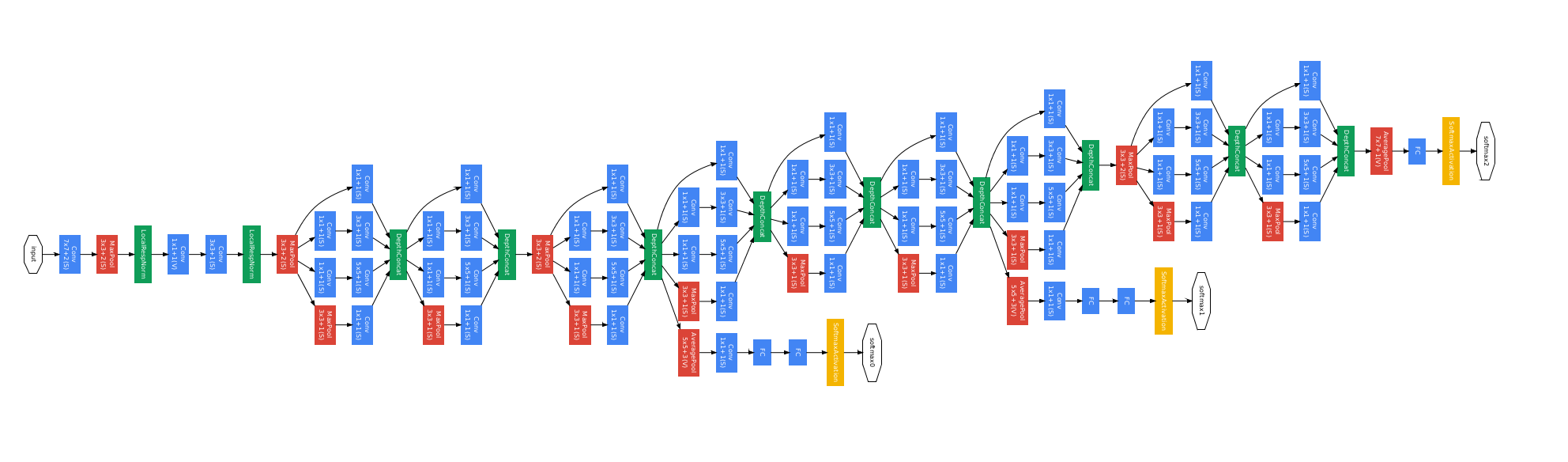

Anyway, from the hardware files you linked to, it would seem at first glance that the SoC is actually a 7880 with three clusters of four cores each. Those cores are at least compatible with the A72, so this could be a modified 7880 or it could be a custom run specifically for Tesla that is compatible enough with the 7880. In quantities much less than 500k, CPU manufacturers will very happily do some modifications and enable/disable features, so it's totally conceivable that Tesla did exactly that.

I have an absolute ton of questions about the running kernel, but those are likely more appropriate for a private conversation and then a public dump of knowledge gained.

Exynos 7880 8 core A72, weak single core but fast multi-core:

Samsung Galaxy A5 (2017) Benchmarks - Geekbench Browser

Benchmarks of a device that happens to have the CPU in it aren't really applicable, and this benchmark wouldn't tell us anything meaningful anyway. What does "773" mean? What does it represent? What is the workload creating the number 773 and what relation does it have to the MCU? Benchmarks are best for testing relative change of a known system or a known workload when making changes to that system or workload. Outside of that, they don't offer much value.

Examples:

SSE vs non-SSE acceleration of the same task on the same CPU in the same device to test improvements from using optimized instruction sets.

Intel Bronze 4114 vs Bronze 4116 running the same binary, performing the same work in the same host, to test improvements from more cores.
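To make the "relative change" point concrete, here's a rough Python sketch that times two implementations of the same workload on the same machine and reports only the ratio. The function names (`baseline`, `candidate`, `relative_speedup`) and the sum-of-squares workload are made up for illustration; nothing here comes from the MCU or from Geekbench.

```python
import timeit


def baseline(n):
    """Reference implementation: explicit loop (hypothetical workload)."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def candidate(n):
    """Changed implementation of the SAME workload: built-in sum()."""
    return sum(i * i for i in range(n))


def relative_speedup(old, new, n=10_000, repeat=5, number=20):
    """Return t_old / t_new for the same input on the same host.

    A value above 1.0 means `new` was faster in this run. The absolute
    times are discarded on purpose: only the ratio is meaningful.
    """
    # Both versions must do the same work, or the comparison is meaningless.
    assert old(n) == new(n)
    # Take the best of several repeats to reduce noise from other processes.
    t_old = min(timeit.repeat(lambda: old(n), repeat=repeat, number=number))
    t_new = min(timeit.repeat(lambda: new(n), repeat=repeat, number=number))
    return t_old / t_new


if __name__ == "__main__":
    print(f"candidate vs baseline: {relative_speedup(baseline, candidate):.2f}x")
```

The ratio only says something about this workload on this host. Move either one and the number stops being comparable, which is the problem with quoting a phone's score for the MCU.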

How do you arrive at 7880? Also, there are 3 CPU clusters of 4 cores mentioned, but does that really mean 12 cores? I don't deal with DTs often, so I don't really know.

A customized SKU of the base SoC could account for the additional cluster of cores. Really, Tesla could just be using the compatibility with the A72 and the actual SoC isn't even an Exynos. But since the kernel config and hardware descriptors seem to imply that the basis of the SoC is at least a Samsung device, it's probable that they just customized it for Tesla.
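On the "does that really mean 12 cores" question: in the Linux `cpu-map` binding, each `clusterN` node contains `coreN` nodes, and each core points at a CPU node via a `cpu` phandle, so you can just count them. Here's a minimal sketch that does that on decompiled DTS text. The sample is generated by a hypothetical helper (`make_sample`) and is NOT the DTS from this image, and the line-based matching assumes one node opener per line, so treat it as illustration only.

```python
import re


def make_sample(n_clusters, n_cores):
    """Build a hypothetical cpu-map fragment (not taken from any real image)."""
    lines = ["cpus {", "    cpu-map {"]
    cpu = 0
    for c in range(n_clusters):
        lines.append(f"        cluster{c} {{")
        for k in range(n_cores):
            lines.append(f"            core{k} {{ cpu = <&cpu{cpu}>; }};")
            cpu += 1
        lines.append("        };")
    lines += ["    };", "};"]
    return "\n".join(lines)


def count_cores(dts_text):
    """Return {cluster_name: core_count} for cluster/core nodes in the text.

    Assumes each cluster and core node opens on its own line.
    """
    counts = {}
    current = None
    for line in dts_text.splitlines():
        m = re.match(r"\s*(cluster\d+)\s*\{", line)
        if m:
            current = m.group(1)
            counts[current] = 0
            continue
        if current and re.match(r"\s*core\d+\s*\{", line):
            counts[current] += 1
    return counts


if __name__ == "__main__":
    counts = count_cores(make_sample(3, 4))
    print(counts, "total:", sum(counts.values()))
```

If the real tree has three clusters with four core nodes each, that's 12 cores described in the topology. Whether all 12 are actually present and online is a separate question, since individual CPU nodes can be disabled or simply never brought up.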

This kind of makes no sense. The camera sensor determines the dynamic range, not the unit that receives the results, no?

This is exactly correct. The SoC camera sensor components probably aren't used except for maybe the cabin-facing unit. I'd bet Tesla has additional capture hardware on the board to handle all of the EAP cameras. Does anybody have detailed, high-res photos of the HW2.5 and/or HW3 boards?