Yeah, I really rely on the little sonar distance graphics when pulling in and out of my garage. I can't see how cameras will replace that useful accuracy.

Imagine you're driving in, say, Bergamo, in those very, very narrow places in the Città Alta that are accessible for BEV but not others. Then imagine you're in a Model X. You really, really need very high precision. My oft-posted trip there shows one place that had 8cm on either side. There is zero chance I would have avoided damage were I driving with my vision.

Especially in old cities around the world that is common. Will vision be enough? I do not know. I do worry. That is a common use case in much of the world.

In Southern California and Texas, probably almost never.

As for Model X rear doors, I speculate they'll not eliminate those sensors; visual cues are not very accessible there anyway.

Surely they'll have solutions soon, if not immediately. The new solutions will be cheaper but more mathematically complex. The AI Autopilot team will have fun with these use cases, I'm sure. The visual screen representations will be as they now are, I hope!

As an aside, they must be close to removing outside rearview mirrors. Regulatory issues can be managed. BMW was pitching that in 2016:

Many others have done this too, and Tesla has repeatedly said it was coming.

BMW shows off mirrorless concept car at CES

The BMW i8 concept car revealed during CES features cameras placed where side view mirrors would be, and on the top inside of the back window. (money.cnn.com)

When?

Tesla, TSLA & the Investment World: the Perpetual Investors' Roundtable

- Thread starter AudubonB


Yeah, I really rely on the ultrasound distance graphics when pulling in and out of my garage. I can't see how cameras will replace that useful accuracy.

E For Electric YouTube channel has a video on the software problems of VW ID4, but the Electric Viking’s summary of the E video is much more digestible.

I have a friend who owned an ID4 for half a year, but recently sold it and now has a Model Y on order. Every time we'd talk about our EVs he'd be complaining about his ID4 while I'd be gushing about my Model Y. I was surprised to hear all of the software issues he'd had in six months; it sounded terribly frustrating. At first he did not like the Tesla interiors, but after a few times in my car he's gradually come around to the spartan design. The fact that Tesla software always works and is snappy also made him incredibly jealous.

He loved the way his ID4 drove, but the constant UI issues and lack of any updates just wore him down.

I suspect it's the logic of someone who would like to buy back in at a lower price.What do you guys think of bear argument by Chicken Genius that lead to his TSLA price target of $140. Apparently, he sold most of his TSLA shares and became a full on bear.

I personally think some of his arguments are wack but there's a few that is out of my knowledge. Just want hear some great opinion from TMC. I left some points out as they're just pure garbage.

Chicken Bear Argument -

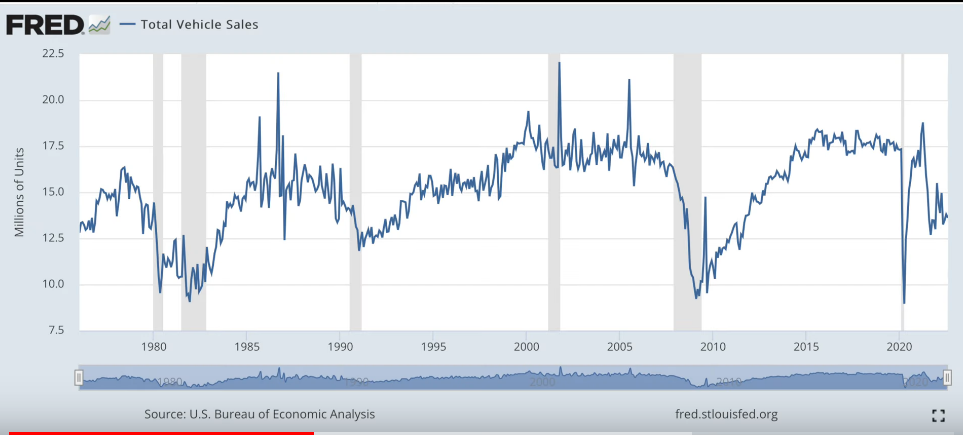

- This is one of the worst economic situations ever. Do you think people will buy a premium car? Auto sale is one the first to get hit.

- Tesla Backlog shrinking, back order are being exhausted.

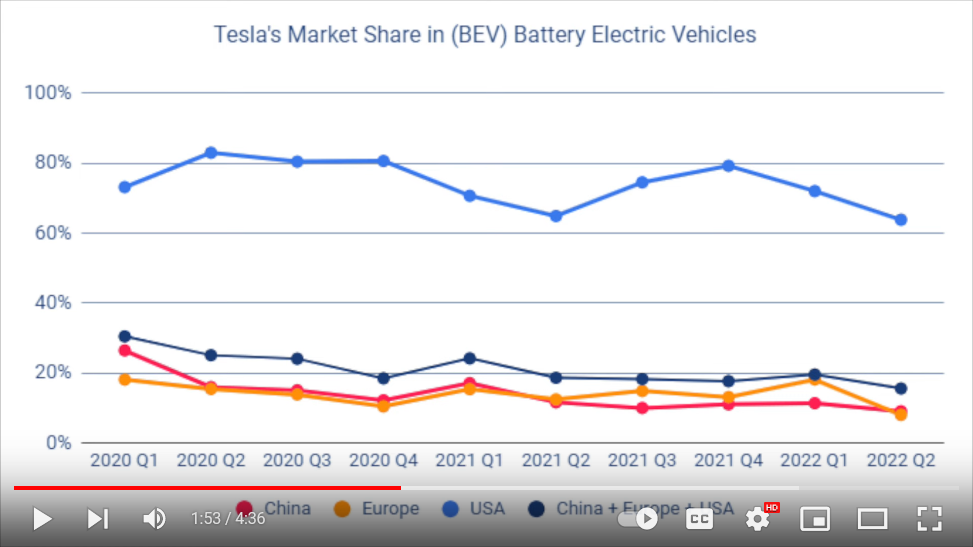

- Market share decreasing

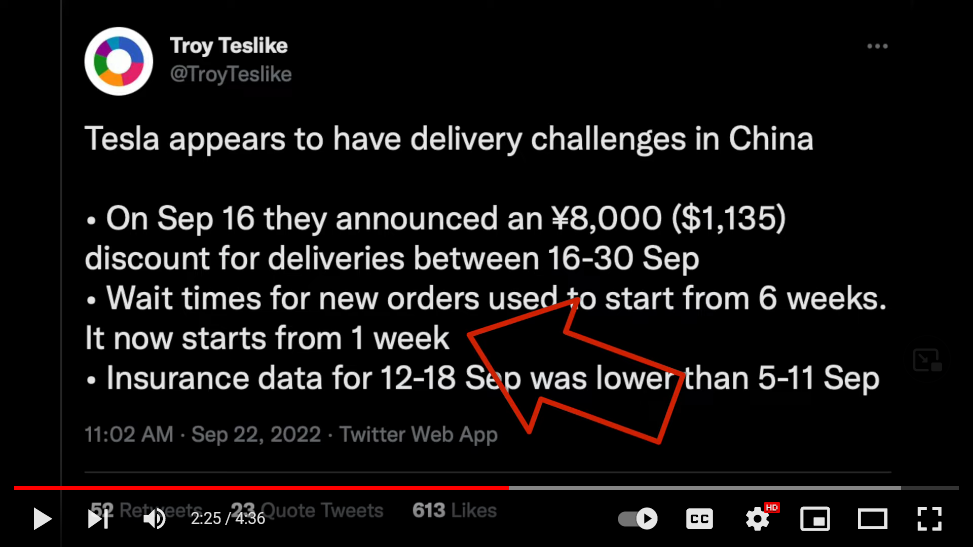

- Total demand destruction, China has 1 week. Where else will they get there order from? China economy is in poo, EU is even poo poo er.

- Nobody can explain to me high cash flow and 18B cash for a company that grows 50% yr. Management knows something we dont.

- Giga Berlin - cost of energy will directly affects cost of manufacturing.

watch youtube here

If we don't think the Tesla vision system can replace ultrasonics then we shouldn't bet on autonomy ever happening. The car should be able to "see" a wall or curb, remember the exact placement, and then situate the car through that space perfectly.

Regarding narrow paths, the repeater and camera views include the full side of 3/4 of the vehicle. So, using a human interpreted camera view, it's akin to side mirrors when reversing into a tight spot, very doable. The front 1/4 clearance is trickier though since the B pillar camera can't see the body. So the Occupancy Network (ON) will need to rely on kinematic translation of the front camera feeds for those zones.

The new occupancy network already does the obstacle detection. *If* it is working properly, then it's a simple grid check for distance to object. No extra math needed. (Accuracy over precision, as long as precision is sufficient to detect clearance.)
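To make the "simple grid check" concrete, here is a minimal sketch under stated assumptions: a flat 2D grid of occupied cells in the car's own frame, a rectangular car footprint, and made-up cell and footprint sizes. None of this reflects how Tesla's occupancy network actually represents its output; the function name and parameters are hypothetical.

```python
import math

def min_clearance_m(occupied_cells, cell_size_m, footprint):
    """Smallest gap (metres) between the car footprint and any occupied cell.

    occupied_cells: iterable of (ix, iy) indices; cell (ix, iy) is assumed to
        cover [ix*s, (ix+1)*s] x [iy*s, (iy+1)*s] in the car frame.
    footprint: (x_min, y_min, x_max, y_max) of the car body, in metres.
    Both the grid layout and the footprint are illustrative assumptions.
    """
    x_min, y_min, x_max, y_max = footprint
    best = math.inf
    for ix, iy in occupied_cells:
        lo_x, hi_x = ix * cell_size_m, (ix + 1) * cell_size_m
        lo_y, hi_y = iy * cell_size_m, (iy + 1) * cell_size_m
        # Per-axis separation between two axis-aligned rectangles (0 if they overlap on that axis).
        dx = max(x_min - hi_x, 0.0, lo_x - x_max)
        dy = max(y_min - hi_y, 0.0, lo_y - y_max)
        best = min(best, math.hypot(dx, dy))
    return best

# Hypothetical numbers: a 2.0 m wide body, 4 cm cells, walls 8 cm away on each side.
walls = {(27, 0), (-28, 0)}
gap = min_clearance_m(walls, 0.04, (-1.0, -2.5, 1.0, 2.5))
```

The check itself is cheap; as the post says, everything depends on the grid being accurate and fine enough to resolve the clearance in question.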

Side mirrors will be a thing in the US until FMVSS 111 is updated. Note, my personal opinion is that it is currently legal to have the mirrors autofold during forward travel (or one can do it manually), though current design has questionable aero gains.

Electroman

Well-Known Member

This ultrasonics move mostly impacts cost and hopefully performance on tasks that really need vision to work well, but the change also helps with mass, aerodynamics and polar moment of inertia.

- The ultrasonic hardware including the sensors, wiring, clamps etc. probably adds on the order of 10 kg to the mass of the car. Model 3 is about 1.6 metric tons, so this represents around 0.5% to 1% reduction in overall vehicle mass.

- Much of this mass was located around the periphery of the car, far from the center of mass, which is not ideal for minimizing resistance to turning, because the contribution of each kg of mass to the rotational inertia is proportional to the square of the distance from the center of mass.

- The ultrasonic pucks, especially the ones on the front bumper, disrupt airflow and thus add to aerodynamic drag force. I can't estimate how big the impact of this is, but it's definitely nonzero because a smooth surface will obviously be more slippery than one with discontinuities.

This has a small but direct impact on:

- Range per kWh

- Total cost of ownership

- CO2 emissions per mile

- Acceleration

- Handling

- Braking

Range per kWh is the big one because battery cell supply is the long-term limiting factor on the speed of mission completion. 1% here, 1% there; it all accumulates to a big difference when we're looking at millions and then tens of millions of cars made every year.

I think you are overestimating the impact on weight savings and the benefits of better airflow, range and all other stuff that you mentioned, by an ORDER of magnitude.
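For anyone who wants to check the mass and inertia figures quoted above, here is a rough sketch of the arithmetic. The 10 kg and 1.6 t numbers come from the post and are themselves disputed in this thread; the 1 m and 2 m distances are purely hypothetical, and treating the hardware as a point mass is a simplification.

```python
def mass_fraction(removed_kg, vehicle_kg):
    """Share of total vehicle mass that the removed hardware represents."""
    return removed_kg / vehicle_kg

def point_mass_inertia(mass_kg, distance_m):
    """Rotational inertia of a point mass about the center of mass: I = m * r^2."""
    return mass_kg * distance_m ** 2

# Figures from the post: roughly 10 kg of hardware on a roughly 1600 kg Model 3.
fraction = mass_fraction(10.0, 1600.0)  # 0.00625, i.e. about 0.6%

# Hypothetical distances: the same 10 kg at 2 m versus 1 m from the center of mass.
far = point_mass_inertia(10.0, 2.0)   # 40.0 kg*m^2
near = point_mass_inertia(10.0, 1.0)  # 10.0 kg*m^2
ratio = far / near                    # 4.0: doubling the distance quadruples the contribution
```

So the quoted "0.5% to 1%" is consistent with the post's own inputs; whether 10 kg is the right input is the part being argued.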


I imagine he'll integrate with Block/Square

It will be much more advanced than that. Right now in several markets the authentication happens with biometric (often visual) authentication. Right now even US Immigration with Global Entry does not usually require presenting a passport. Brazil has done that for some time, but does also require passport scanning. I have two bank accounts right now that are accessed with visual ID.

He will have a much broader product line than has been discussed here.

With better Twitter ID he can pretty much eliminate bots and other abuses, including prohibited speech. No mystery, one can allow pretty open speech if the haters cannot hide or deny.

We are close to Tesla being able to directly ID authorized users for, say, supercharging of non-Tesla. The x.com integration opens the world of subscription, purchases and financial services with advantages and risk mitigation building directly from Tesla Insurance learning.

This sort of semi-universal service model was being conceived in the early 1980's by the same people who were in the Silicon Valley think tanks then. There is no doubt in my mind that this is his vision.

He said as much in 1998/1999. Nobody believed him. Peter Thiel was just greedy, secretly sold him out, so Elon became wealthier and did SpaceX, Tesla, and lots of AI. Now he's taken the last key. Twitter, as such, is not transformational at all. It does handily sidestep a huge recruitment lag.

2daMoon

Mostly Harmless

Well put, and having posted the following in the Elon and Twitter thread it seemed appropriate to share as a nod to the above.

We are in the first chapter of this Twitter story, so time will tell whether or not it will be a horror tale. Many stories start rough and end very nicely.

To me this doesn't seem the sort of tale that should be discarded out of hand after a page or two. The author has a good reputation for crafting compelling works of overcoming great odds with heroic effort, does he not?

The Space launch sector has been disrupted by SpaceX.

The Transportation sector is being disrupted globally by Tesla.

The Energy sector is being disrupted by Solar Roof and Battery Storage.

The A.I. sector is being defined by FSD, Autonomy, and DOJO.

The Labor sector is on the verge of disruption by the Tesla Bot.

Tunneling is being disrupted by Boring Company.

Who's to say the Financial sector won't be disrupted on as large a scale by what he does with X via the Twitter acquisition?


Electroman

Well-Known Member

Here is the challenge for cameras positioned where they are today: How will they see how many inches the car is from an adjacent car at highway speeds? How will they know how far away a low curb parallel to the car is?

Overloading the processing of vision data for stuff that is easily done by cheap sensors will go the way of removing wiper sensors - how did that work out?

I will tell you how that worked out: Broad daylight with sun beating down and it hasn't rained in weeks and no cloud in the sky. Bone dry. Out of the blue, my wipers start going FULL speed and it won't stop. It is making screeching noise. My passenger is laughing uncontrollably. I had to turn off Autopilot and then turn off wipers. Of course 50 grams weight savings would have increased the range by 1 cm.

How low can they push this before earnings?

The same way we do, by building a map of the environment and then knowing where we are in that space. I don't "know" that this all will work but I'm trusting that Tesla does.

I am not one to tell our great leader what to tweet. But must observe that if they bang out the details and EM tweets something along the lines of “All financing is in place.”, well, that would be good.

E For Electric YouTube channel has a video on the software problems of VW ID4, but the Electric Viking’s summary of the E video is much more digestible.

E was ghosted by both VW and Rivian for his negative videos pointing out issues that I feel were severe enough to justify pointing out.

While all 7 ID4 problems listed are bad, my M3 SR+ is also far from free of software problems. The one this morning, for example, is one I have not experienced nor heard of before - the right scroll wheel/button stopped working. Even after I finished my task and was preparing to drive home it still did not work. A quick reset fixed the issue.

I think Tesla might benefit from adapting aspects of the QA process from SpaceX, as they are surely held to a different standard.

Binning VW "software" problems and Tesla software "problems" in one got you my disagree.

Two words: supplier agreements.

Tesla can't just stop buying sensors when the software starts working. They also need to coordinate with the bumper fascia supplier (especially if the holes are created via mold).

You mean themselves? They made the fascias on the '12 S cars, so I really would think they still make them?

Autopilot needs the glass clear (of all gunk, not just rain) in front of all three cameras. How much area does one rain detector cover?

Answer: not enough

ZeApelido

Active Member

Tesla's perception / occupancy network doesn't just detect how far away objects are directly, it creates a 3D model of your surroundings.

This means once it sees a curb or parked car relative to that object's surroundings, it knows that object's position relative to its surroundings in 3D.

Tesla doesn't need to see that object anymore; it can triangulate its location based on all the other visible landmarks that are still in the cameras' views.

And I would hope Tesla could do that, since it has to already make many decisions about fine positioning (like whether to enter a narrow street) without using ultrasonic sensors.
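A minimal sketch of the "remember where it was" idea, using a simpler stand-in than the landmark triangulation described above: plain dead reckoning, where a static point seen once is re-expressed in the car's frame after the car moves. The function name, the frame convention (x forward, y left), and the assumption that the car's own motion is known exactly are all illustrative, not Tesla's method.

```python
import math

def update_remembered_point(point_xy, ego_translation_xy, ego_yaw_rad):
    """Re-express a previously seen static point in the car's new frame.

    point_xy: the point in the OLD car frame (x forward, y left), metres.
    ego_translation_xy: how far the car moved, expressed in the OLD car frame.
    ego_yaw_rad: how much the car's heading changed (counter-clockwise positive).
    """
    px, py = point_xy
    tx, ty = ego_translation_xy
    # Shift so the new car origin sits at (0, 0) ...
    sx, sy = px - tx, py - ty
    # ... then rotate by -yaw so the axes line up with the new heading.
    c, s = math.cos(ego_yaw_rad), math.sin(ego_yaw_rad)
    return (c * sx + s * sy, -s * sx + c * sy)

# A curb corner seen 5 m ahead and 1 m to the left; the car then rolls 4 m straight forward.
curb_now = update_remembered_point((5.0, 1.0), (4.0, 0.0), 0.0)  # (1.0, 1.0)
```

In practice the motion estimate drifts, which is presumably why re-anchoring against landmarks that are still visible matters.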

willow_hiller

Well-Known Member

I think, eventually, this approach is going to require at least one additional camera. Probably positioned low on the front bumper. I can imagine a not uncommon scenario where the car is parked (and not actively perceiving the environment), and someone leaves something like a short shopping cart or bucket or cone in front of the vehicle. If the forward-facing cameras cannot instantaneously perceive objects below the hood, it could decide there's nothing in front of the car and hit whatever's in front of it.
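The blind zone in front of the hood can be estimated with simple sight-line geometry. This is only a sketch: the camera height, hood edge height, and hood length below are hypothetical round numbers, not measurements of any Tesla, and the hood is reduced to a single edge that blocks the view.

```python
def ground_blind_distance_m(cam_h, hood_h, hood_dist):
    """Distance from the camera at which the sight line over the hood edge reaches the ground."""
    if cam_h <= hood_h:
        raise ValueError("camera must sit above the hood edge")
    return hood_dist * cam_h / (cam_h - hood_h)

def is_hidden(obj_h, obj_dist, cam_h, hood_h, hood_dist):
    """True if an object of height obj_h at obj_dist (beyond the hood edge) stays below the sight line."""
    if cam_h <= hood_h:
        raise ValueError("camera must sit above the hood edge")
    if obj_dist < hood_dist:
        raise ValueError("object must be at or beyond the hood edge")
    # Height of the sight line that just grazes the hood edge, evaluated at the object's distance.
    sight_h = cam_h - (cam_h - hood_h) * obj_dist / hood_dist
    return obj_h <= sight_h

# Hypothetical geometry: camera 1.2 m high, hood edge 0.8 m high and 2.0 m ahead of the camera.
blind = ground_blind_distance_m(1.2, 0.8, 2.0)       # roughly 6 m from the camera
bucket_hidden = is_hidden(0.5, 2.5, 1.2, 0.8, 2.0)   # a 0.5 m bucket just past the bumper
cart_hidden = is_hidden(1.0, 2.5, 1.2, 0.8, 2.0)     # a 1.0 m cart at the same spot
```

With these made-up numbers the low bucket is hidden and the taller cart is not, which matches the scenario in the post: short objects placed near a parked car are the problem case.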

Electroman

Well-Known Member

The front cameras only look at the 10 cm by 10 cm area of the glass where they are fixed. They cannot look at any other part of the windshield, which serves their purpose fine. The system is simply unable to differentiate between actual rain drops and other reflections, dust and any other visual artifacts. So you end up with false negatives and positives. A $10 sensor saved.
