Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.

Tesla, TSLA & the Investment World: the Perpetual Investors' Roundtable

- Thread starter AudubonB

Nolimits

Member

Seems like a lot of buying pressure. It’ll be hard to keep it suppressed back under 760. Then again, been wrong many, many, many times.

UkNorthampton

TSLA - 12+ startups in 1

UK: Plugged in Tesla at home to charge earlier, my family report that more people than usual are looking at it/charger.

Possibly due to fuel shortages/panic buying/roads blocked by queues into garages (at both the ones I drove past today).

A few irate drivers stuck in queues behind idiots queuing up to cross traffic into full garages. I helped the situation by beeping the horn. /s Both times, surprisingly it helped. On narrow UK streets, it doesn't take much to gridlock them.

I just wish Tesla could deliver more cars; they'd absolutely get sold in the UK. Even with all Gigafactories ramped (USA Fremont & Austin, China & Berlin), it's still supply rather than demand that is/will be limited.

Jack6591

Active Member

I wonder from time to time, what would happen if we (TMCers) organized buy orders at critical resistance points?

Just thinking out loud…

> I wonder from time to time, what would happen if we (TMCers) organized buy orders at critical resistance points?
>
> Just thinking out loud…

I would be a willing participant in that.....

Stone_Watcher

Member

> I wonder from time to time, what would happen if we (TMCers) organized buy orders at critical resistance points?
>
> Just thinking out loud…

We’d be investigated by the SEC

Artful Dodger

"Neko no me"

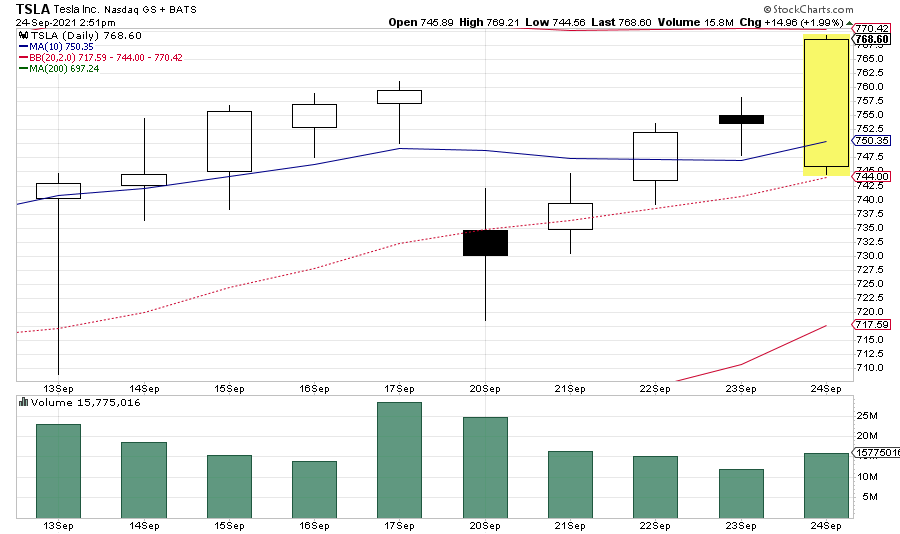

> Seems like a lot of buying pressure. It’ll be hard to keep it suppressed back under 760. Then again, been wrong many, many, many times.

hacer

Active Member

> So world is up side down, retail sells the Calls, and MM's buys them

The collapse in implied volatility has been larger for leaps than for shorter term calls (especially ATM and ITM leaps). I'm going to go out on a limb and say that there are no TSLA retail bulls selling leaps, except maybe astonishingly far OTM leaps.

In particular the June '23 400 strike calls look like a real bargain to me so I bought some.

Summer of 2020?...

Remind me again why I watch this stock every day in the summer?

Jack6591

Active Member

> We’d be investigated by the SEC

I’m not suggesting anything illegal, only changing the phrase “buy the dip” to “buy the resistance level”?

Artful Dodger

"Neko no me"

> I wonder from time to time, what would happen if we (TMCers) organized buy orders at critical resistance points?
>
> Just thinking out loud…

Already exists: r/WallStreetBets re: AMC + GameStop

> I’m not suggesting anything illegal, only changing the phrase “buy the dip” to “buy the resistance level”?

Short term that's known as "Buy High, Sell Low". Most people try to avoid that.

Cheers!

Gigapress

Trying to be less wrong

Thanks for your response. I'm not going to address the politics here, which can easily take us out of the realm of statistical analysis. Rather, I'd point out that you seem to be confusing the null hypothesis with the alternative hypothesis, which is pushing you to suggest a test size which is larger than it needs to be on purely statistical grounds. So let's be clear about a proper setup of the statistical test.

Null Hypothesis: Tesla death rate = 1.2 per 100M miles

Alternative Hypothesis: Tesla death rate = 0.12 per 100M miles

Since we wish to show that Tesla has 1/10 the average rate, this is our alternative hypothesis. The hypothesis testing framework actually becomes much simpler when you have a specific alternative hypothesis in mind.

Let's consider a test with 800M miles. The number of fatalities is our test statistic. Under the null hypothesis, it is Poisson with mean 9.6, and under the alternative hypothesis it has mean 0.96. To achieve 99.9% confidence (0.1% significance), we reject the null hypothesis if the number of deaths is 1 or fewer. This test has significance 0.07% and power 75.05%. That is, if the alternative hypothesis is true, there is a 75% chance that this test will correctly reject the null hypothesis. If we can relax the significance of this test to 5% or lower, then we can reject the null with 4 or fewer deaths. Here the significance is 3.78% and power 99.69%. From a purely statistical viewpoint, 800M miles is quite enough exposure for excellent significance and power, assuming that the exposure is representative of national exposure.

I'd also add that under the alternative hypothesis, there is only a 7.31% chance that the number of deaths is greater than 2. So with very high probability, the observed death rate is at most 2 deaths per 800M or 0.24 per 100M. The important thing for Tesla is that they engineer a system that is truly 10 times safer than humans. If they do that, any reasonable test will have very good power.
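For anyone who wants to check the 800M-mile figures, here is a minimal Python sketch. It assumes, as the post does, that the fatality count is Poisson with mean equal to rate times exposure. The helper name `poisson_cdf` and the constant names are illustrative labels, not anything from the original post.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(X <= k) for X ~ Poisson(mean), summed term by term."""
    term = math.exp(-mean)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= mean / i  # P(X = i) from P(X = i - 1)
        total += term
    return total


MILES = 800e6                 # test exposure from the post
NULL_RATE = 1.2 / 100e6       # deaths per mile under the null
ALT_RATE = 0.12 / 100e6       # deaths per mile under the alternative

null_mean = MILES * NULL_RATE  # about 9.6 expected deaths
alt_mean = MILES * ALT_RATE    # about 0.96 expected deaths

# Rule: reject the null if observed deaths <= threshold.
for threshold in (1, 4):
    significance = poisson_cdf(threshold, null_mean)  # Type I error rate
    power = poisson_cdf(threshold, alt_mean)          # chance of rejecting if alt is true
    print(f"reject at <= {threshold}: "
          f"significance {significance:.2%}, power {power:.2%}")

# Chance of seeing more than 2 deaths if the alternative is true.
prob_more_than_2 = 1 - poisson_cdf(2, alt_mean)
print(f"P(deaths > 2 | alternative) = {prob_more_than_2:.2%}")
```

The significance is the left-tail probability under the null mean, and the power is the same left-tail probability under the alternative mean, which is why one helper covers both.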

A test based on 6B miles would have a Poisson test statistic with mean 72 under the null vs 7.2 under the alternative hypothesis. Rejecting the null hypothesis with 46 or fewer fatalities has 0.07% significance and power 100% (beta < 10^-15). It is very unusual to require a test that has stronger limits on the Type II error rate than the Type I error rate. Indeed, if the alternative is true, then the probability of more than 12 deaths is a mere 3.27%. So requiring a test at this scale (6B miles) is massive overkill. Indeed, considering how many beta testers are required and how much risk the general public would be exposed to at this scale, it makes much more sense to pilot a smaller 250M mile test to rule out the possibility that the risk of this driving system is substantially above average. Once you can reject the null hypothesis at about 5% significance, then you have reasonable confidence that you are not subjecting the public to unusual risk just to keep testing the safety of the system so as to achieve a smaller alpha. So to be sure, there are social costs that argue against making any test of public safety like this larger than is necessary to achieve reasonable statistical control.
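The comparison between the 6B-mile test and a 250M-mile pilot can be sketched by searching for the largest death count that still rejects the null at a chosen significance level. This is a rough illustration under the same Poisson assumption; the function names are hypothetical, and pairing the 250M-mile pilot with a 5% level is an assumption drawn from the "about 5% significance" remark, not a calculation given in the post.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(X <= k) for X ~ Poisson(mean)."""
    term = math.exp(-mean)
    total = term
    for i in range(1, k + 1):
        term *= mean / i
        total += term
    return total


def largest_rejection_threshold(null_mean: float, alpha: float):
    """Largest k with P(X <= k | null) <= alpha, or None if even k = 0 fails."""
    term = math.exp(-null_mean)  # P(X = 0)
    cdf = term
    k = 0
    best = None
    while cdf <= alpha:
        best = k
        k += 1
        term *= null_mean / k
        cdf += term
    return best


NULL_PER_100M = 1.2   # national rate used as the null
ALT_PER_100M = 0.12   # hoped-for rate used as the alternative

# (exposure in units of 100M miles, significance level)
for label, units, alpha in (("6B miles", 60, 0.001), ("250M miles", 2.5, 0.05)):
    null_mean = NULL_PER_100M * units
    alt_mean = ALT_PER_100M * units
    k = largest_rejection_threshold(null_mean, alpha)
    print(f"{label}: reject at <= {k} deaths, "
          f"significance {poisson_cdf(k, null_mean):.2%}, "
          f"power {poisson_cdf(k, alt_mean):.2%}")
```

Under these assumptions the 250M-mile pilot can only reject the null if no deaths at all are observed, which is part of why a larger follow-up test may still be wanted.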

I should also point out that fatalities should not be the only outcome tested. For example, collisions are much more frequent than fatalities. If Tesla is able to reduce the frequency of collisions by a factor of 10 or so, then a 100M mile test may well be adequate to demonstrate this. Basically, if Tesla demonstrates that the rate of collisions is substantially reduced, then it is reasonable to argue that the rate of fatalities is also reduced. To argue against such a position would require making specious objections like: "Given that there are 90% fewer collisions, how can we be sure that, when a collision does happen, it is not 10 times more likely to yield a fatality?" Such an objection can be countered by examination of collisions. Certain kinds of collisions have higher probabilities of incurring fatalities. I would expect a highway safety organization to have models that predict fatalities from collision attributes. It is straightforward for an analyst to use such a model to compute expected fatalities for each observed collision. These probabilities can be tested against actual fatalities to show that Tesla does not have an unusually high rate of fatalities per collision. And secondly, the total number of expected fatalities from observed collisions can be divided by exposure to arrive at an expected fatalities per 100M mile rate.

For a simple example, let's consider only collisions where there is an injury or fatality. Corresponding to 1.2 fatalities per 100M vehicle miles nationally, there are also about 96 injured persons per 100M miles. Within a 100M mile test, Tesla will be able to estimate its injury rate with precision. Indeed, under the alternative hypothesis that its injury rate is 9.6 per 100M miles, Tesla has a probability of about 98% of observing 16 or fewer injured persons. Granted Tesla only expects 9.6 injuries, let's suppose they observe 16 (a rather unlikely number) and no fatalities. Given that there are about 80 injured persons per fatality, conditional on 16 injuries one would expect just 0.2 fatalities. Given that there were 0 fatalities, using the expected fatalities per injury, we obtain an estimate of 0.2 expected fatalities per 100M miles, with evidence against the objection that Tesla has a higher than average ratio of deaths to injuries. Specifically, it has 0 deaths per 16 injuries. Even if in such a test 1 fatality had been observed, you'd have very thin evidence against the null hypothesis that Tesla has the national average of 1 fatality per 80 injuries. So arguably a fair point estimate of the fatality rate is still between 0.2 and 1.0 deaths per 100M. The problem for Tesla is that you really don't want to be in a position where just one fatality compromises your case around substantially reduced injury rates. If Tesla were to offer a 400M mile test, it would be in a stronger position to tolerate 1 fatality. Here the significance is 4.77% while the power is 91.58%. So there is a 62% chance they avoid any fatalities, which would be the best case for using the ratio to injuries approach to estimating the fatality rate. But even if there were one fatality, they'd still have a 4.77% p-value on the narrow test around fatality counts and still have a very robust case based on injury rates.
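The injury-based arithmetic can be reproduced with a short sketch. It assumes Poisson counts for both injuries and fatalities and takes the 80-injuries-per-fatality ratio from the post at face value; the variable names are illustrative only.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(X <= k) for X ~ Poisson(mean)."""
    term = math.exp(-mean)
    total = term
    for i in range(1, k + 1):
        term *= mean / i
        total += term
    return total


INJURIES_PER_FATALITY = 80   # national ratio quoted in the post
NULL_FATALITY_RATE = 1.2     # deaths per 100M miles
ALT_FATALITY_RATE = 0.12     # deaths per 100M miles

# 100M-mile test, alternative hypothesis: injuries scale with fatalities.
alt_injury_mean = ALT_FATALITY_RATE * INJURIES_PER_FATALITY  # about 9.6
p_16_or_fewer = poisson_cdf(16, alt_injury_mean)             # roughly 98%

# Expected fatalities implied by 16 observed injuries at the national ratio.
expected_fatalities = 16 / INJURIES_PER_FATALITY             # 0.2

# 400M-mile test on fatality counts: reject the null at 1 or fewer deaths.
null_mean_400m = NULL_FATALITY_RATE * 4   # about 4.8
alt_mean_400m = ALT_FATALITY_RATE * 4     # about 0.48
significance = poisson_cdf(1, null_mean_400m)
power = poisson_cdf(1, alt_mean_400m)
p_no_fatalities = math.exp(-alt_mean_400m)  # P(X = 0 | alternative)

print(f"P(injuries <= 16 | alt) = {p_16_or_fewer:.1%}")
print(f"expected fatalities from 16 injuries = {expected_fatalities}")
print(f"400M miles: significance {significance:.2%}, power {power:.2%}, "
      f"P(no fatalities) {p_no_fatalities:.0%}")
```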

So my view is that Tesla can make a solid preliminary case based on a 100M mile test, but further testing in the range of 400M to 800M miles may be needed if there are observed fatalities. The analysis above also illustrates why Tesla wants to study every crash with an injury very closely. From a business impact viewpoint alone, every injury crash is probably worth detailed simulation so that the AI system learns very well how to avoid them. The key issue is cutting injury rates 10-fold. If they do that, the rest will follow.

Now if a government agency wants to impose absurd testing requirements on Tesla, that is a matter of politics, not statistics.

As far as I know, the alternative hypothesis needs to be the logical negation of the null hypothesis. In other words, the alternative needs to directly contradict the null. For example, if the null is that "the sky is orange", then the alternative hypothesis is simply that "the sky is not orange"; a claim such as "the sky is blue" would be an invalid alternative hypothesis, because it is a more specific claim that does not cover the entire set of possibilities if the null hypothesis is false. The reason for this is that a given test only has two possible outcomes at a specified significance level: reject the null or fail to reject the null.

So, if our test statistic leads us to reject the claim that "the true fatality rate is at least 1.2 per 100 million", then the only thing we can deduce from that premise is that "the true fatality rate is less than 1.2 per 100 million". Your math that considers an 800 million mile test is correct for answering this particular question, but the Master Plan indicates that Tesla themselves, irrespective of any presumed government mandate, are trying to make a different, much stronger claim. They want to demonstrate that it's 10x better, and that's why the test I had described actually had the null hypothesis set at the threshold of 10x better at preventing fatalities than humans. This threshold is what's driving the 6 billion mile sample size requirement mentioned in the Master Plan. Thus, the only relevance of 1.2 deaths/100 mill is in giving us the test threshold of 0.12 deaths/100 mill.

That is:

Null: True fatality rate is greater than or equal to 0.12 per 100 million miles

Alternative: True fatality rate is < 0.12 per 100 million miles
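To see what this stricter setup demands, here is a hedged sketch of the rejection threshold at 6 billion miles when the null boundary is 0.12 deaths per 100 million miles. It assumes Poisson counts and evaluates the Type I error at the boundary rate, which is the worst case over the composite null because the left-tail probability falls as the true rate rises. The significance levels are the ones used earlier in the thread; whether the Master Plan figure was derived this way is not established here.

```python
import math


def largest_rejection_threshold(null_mean: float, alpha: float):
    """Largest k with P(X <= k | null_mean) <= alpha, or None if none exists."""
    term = math.exp(-null_mean)  # P(X = 0)
    cdf = term
    k = 0
    best = None
    while cdf <= alpha:
        best = k
        k += 1
        term *= null_mean / k
        cdf += term
    return best


BOUNDARY_RATE_PER_100M = 0.12  # null boundary: 10x better than 1.2
EXPOSURE_100M_UNITS = 60       # 6 billion miles

boundary_mean = BOUNDARY_RATE_PER_100M * EXPOSURE_100M_UNITS  # about 7.2

for alpha in (0.05, 0.001):
    k = largest_rejection_threshold(boundary_mean, alpha)
    print(f"alpha = {alpha}: reject the null only if deaths <= {k}")
```

With an expected 7.2 deaths at the boundary, the fleet would have to come in well below that count to reject, meaning the true rate would need to be substantially better than 10x for the test to succeed reliably.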

Also, I still believe the argument stands that several billion miles of data is crucial for alleviating representativeness concerns. The usefulness of the test statistic scheme utterly depends on the premise that the sample is representative. Proving representativeness may end up ultimately being the limiting factor pushing up the sample size needs, but we can't determine that without insider access to the data.

Consider the beta testing data collection procedure. It is decidedly not a simple random sample from the population of all trips occurring in a given period of time. Rather, it is the product of users' personal decisions of when and where to use the software. For a variety of possible reasons, we may have concern that the sample disproportionately includes miles where the software will perform better, such that the test ends up being overly optimistic. Of course, this could go the other way as well, where perhaps testers tend to use FSD Beta in especially dangerous situations where it performs worse. We know some demographic facts about the FSD Beta user pool and Tesla owners in general. Relative to the general population, an individual Tesla FSD data collector is more likely to be: American, male, technically minded, intelligent, young to middle-aged, white/East Asian/South Asian, college-educated, high socioeconomic status, living in certain locations, commuting at certain times, etc. All of these factors influence the choice of trips taken and may substantially bias the test.

This problem is compounded by the fact that neural nets are trained based on user test data, so we have reasonable concern about the AI overfitting its model to non-representative usage patterns, and thus underperforming in other scenarios. We already know this is happening, with the SF/LA/Seattle metro areas having noticeably better FSD Beta performance than elsewhere in America.

One solution that requires the fewest additional assumptions is to just wait a bit longer for Tesla's soon-to-be multimillion vehicle fleet to collect a massive bevy of data from a larger pool of users, to give even underrepresented scenarios a chance to display their danger and provide enough information to detect any "hotspots" of poor performance. Then we can have greater faith that the sample and neural net training can be trusted to generalize to truly safe Lvl 5 autonomous driving. (This also makes the political constraints easier to work with.)

I agree that collisions in general and also collisions with injuries should be included in the analysis. As you've correctly pointed out, these two questions can be precisely answered with vastly less data because collisions and injuries are vastly more common than fatalities. I disagree that the frequency of fatalities can be directly inferred from the frequency of collisions. Neural nets can do weird things and have difficulty with interpolation between training examples. It is quite plausible that FSD might have 10X fewer collisions than humans, but in the event of a collision have a drastically higher likelihood of catastrophic failure. For instance, thus far, previous iterations of FSD have had much lower collision rates than humans on highway driving, but also appear to have been drastically worse at avoiding catastrophic collisions with stationary objects like trucks.

Lol whoops. Thank you for catching that!

Dennis Lynch is not Peter Lynch!

This is why this super-successful growth investor no longer owns Tesla shares

Dennis Lynch, head of Counterpoint Global, Morgan Stanley Investment Management, points to unit economics.

www.marketwatch.com

Now I have to call and apologize!

too late to edit that post, unfortunately

StealthP3D

Well-Known Member

That is curious that Elon-Tesla is at a presentation with Ferrari, and of course in Italy. Not usually known as a technology hotspot but definitely well versed in racing cars. Why not have an important technology update in Japan, Germany, Silicon Valley, or a hundred other locations?

Compared to the big three, Italy brings new technologies to market in a very timely and adept manner. It's not uncommon for them to be on the leading edge of integrating the newest tech into their latest products.

Artful Dodger

"Neko no me"

We have casually snuck up upon the Upper-BB (now at just over 770)

22522

Guest

> I’m not suggesting anything illegal, only changing the phrase “buy the dip” to “buy the resistance level”?

Thinking about what Dodger said. I think when it becomes something that people try to avoid, it may be construed as manipulation.

O'Neil communicates buy points that are essentially "buy the resistance level" so there is a way to do it and stay out of trouble. He is also big into stop losses, so anyone who knows the algorithm can make hay by taking lunch money from investors that follow orchestrated advice.

On this forum, the point may be moot as the couch is empty from the stock running sideways at a much lower price point.

Thank you for bringing this up.

My view is that there are a lot of people both smarter and more informed than I am. With every transaction I put money on the table that they can take. So to protect my future from people who are smarter and faster, I stick to:

1) Buy the dip when money shows up in the couch.

2) No stop losses.

3) Buy only things I would be OK holding ~forever.

> I’m not suggesting anything illegal, only changing the phrase “buy the dip” to “buy the resistance level”?

Eh, "buy as much TSLA as you can as soon as you can and hold for as long as you can" has worked out pretty well for me.

edit: Clarified to avoid triggering fellow divorced folks.

> Eh, "buy as much as you can as soon as you can and hold for as long as you can" has worked out pretty well for me.

You just triggered a memory of my ex

StarFoxisDown!

Well-Known Member

If this is accurate/true......is anyone willing, and with the time, to do the math to figure out Sept production? We have production numbers all the way up through Aug now, so we should be able to calculate at least somewhat close.
