Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.

3Victoria

Active Member

Hopefully, this suggests the white list is being populated ... things to ignore (like overpasses).

My issue hasn't been cars in other lanes; it's been overpasses and overhead signage. It seemed way, way, way reduced today.

S4WRXTTCS

Well-Known Member

So has anyone gotten the updated fixes for AP 2.0 that EM tweeted about yet?

The P100DL owners are too busy playing with Ludicrous+ to bother testing any fixes for AP 2.0

ZeroDarkSilver

HEPA cleaning your atmosphere, you're welcome

I received an update notification last Friday but did not get my MX back until today, as it was undergoing a full XPEL wrap with Adonis Detail.

Just updated now and it is 2.50.201

Release notes appear identical to 2.50.185

FULL WRAP? Dang...give us some pics man!

Weird, why aren't you on 2.52.xxx series?

He's one of the 1,000 who got the AP2 beta features, so I guess they are on a different branch/build.

Does anyone know the differences between 2.50.201 and the other firmware versions that were released? The only difference that has been mentioned is that I got the AP2 Beta firmware as part of the initial 1000. I plan on checking with Model S owners as well to see if they have the same build that I did.

Much too early to have solid confirmation but I would like to mention that I am cautiously optimistic about the detection of idle objects outside of Tesla Vision when using TACC.

I came up to a stopped car (red light) that was outside of Tesla Vision. I tried to engage TACC and my car did not move. I was ready to brake right away if any acceleration started to happen. I then noticed that my dashboard "saw" the stopped car, because there was an illustration of it.

From prior experience with 8.0 (2.50.185), it would certainly have plowed into the stopped car in front of me.

I hope others (on any version of the latest firmware) can confirm or deny my observations. I also plan to ask Tesla what they have to say, as they have been very responsive to reports.

Economite

Member

Tesla really should be doing a better job of documenting its updates and making the updates infrequent enough that folks will have time to digest the update notes. The sort of "experimenting" you describe here really shouldn't be necessary. It's kind of dangerous when extrapolated across all of the Tesla drivers that need to "experiment" each time Tesla essentially rewires the car and changes the car's behavior.

Andyw2100

Well-Known Member

Just check the firmware upgrade tracker at Tesla Firmware Upgrade Tracker Web App. You can almost certainly find all the information you are looking for with respect to what cars have what updates there.

STILL RECOMMEND CAUTION WITH TACC UNDER 2.50.201 FIRMWARE

Had an opportunity to do city driving with the new 2.50.201 firmware and was pleased to see improved detection of immobile objects while TACC is engaged.

As an approximation, TACC picked up 9/10 immobile objects in front of me and slowed down or stopped accordingly. I am unsure whether hardware changes, software changes, or both made the improvement.

The way I seem to be able to tell whether TACC is 'aware' of a stopped object in front of me is whether the 'vehicle' is rendered as a picture on the dash.

The one situation where TACC did not succeed in immobile object detection was:

Road Conditions: Nighttime. Dark. The only visible light sources were my headlights, the traffic light, and the other car's brake lights.

Other Car: White car stopped at red light in a left turn lane.

My Car: Driving with TACC at 50 MPH, merging into the left turn lane.

I felt TACC wouldn't stop, so I braked hard and stopped behind the white car. The Tesla did not render a vehicle in front of me on the dash. When the light turned green, a vehicle magically appeared in front as the white car started accelerating.

I do not think FCW or AEB would have kicked in had I not braked myself. Prior to 2.50.201, detection of immobile objects would have been 0/10, so this is a big improvement. I would like to see it at 99.999/100.

Since TACC does slow down from a long distance when it does detect an immobile object, I am considering driving with it on all the time. But you need to be cautious and alert since the detection software still needs to keep improving.

krazineurons

Am guessing the model has been trained enough to identify what a stopped vehicle looks like. Your scenario is a corner case which, with enough usage, will be trained as well. So I'd recommend you try it multiple times until the model learns it.

Economite

Member

I'd be amazed if the on-board learning is sophisticated enough to learn that sort of thing. In fact, it would probably be pretty dangerous if each car were independently trying to figure out what does or doesn't count as another car.

My guess is that (at best) the on-board logic is able to remember (i) locations where the driver has made the same correction multiple times in order to stay in lane, or (ii) locations where something (like an overpass) always appears in the sensors but does not block travel.
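
Guess (ii) amounts to a location-keyed whitelist of stationary objects such as overpasses and overhead signs. The Python below is a minimal, purely hypothetical sketch of that idea, not Tesla's code: the grid size, the `threshold` of uneventful passes, and the `StationaryObjectWhitelist` class are all invented for illustration.

```python
from collections import defaultdict

# Hypothetical sketch only: NOT Tesla's implementation.
# Assumption: 0.0005 degrees of latitude (roughly 55 m) is one "location" bucket.
GRID_DEG = 0.0005


def cell(lat: float, lon: float) -> tuple:
    """Quantize a GPS fix into a coarse grid-cell key."""
    return (round(lat / GRID_DEG), round(lon / GRID_DEG))


class StationaryObjectWhitelist:
    """Remembers locations where a stationary sensor return never blocked travel."""

    def __init__(self, threshold: int = 3):
        # Assumed number of uneventful passes before the return is ignored.
        self.threshold = threshold
        self.harmless_passes = defaultdict(int)

    def record_pass(self, lat: float, lon: float, driver_braked: bool) -> None:
        """Log one pass through a location where a stationary return was seen."""
        key = cell(lat, lon)
        if driver_braked:
            # The driver treated it as a real obstacle: discard the evidence.
            self.harmless_passes[key] = 0
        else:
            self.harmless_passes[key] += 1

    def should_ignore(self, lat: float, lon: float) -> bool:
        """True once this location has enough harmless passes (e.g. an overpass)."""
        return self.harmless_passes.get(cell(lat, lon), 0) >= self.threshold
```

In this sketch, three uneventful passes under the same overpass make `should_ignore` return `True` for that cell, and a single braking event there resets it. Because the memory is keyed on location rather than on what the object looks like, it is cheap to keep in-car, but it cannot help with a car stopped somewhere new.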

If there is something your car isn't handling correctly, it's best to report it to Tesla and then avoid using AP/TACC in that situation. It's not a good idea to keep intentionally running the car through scenarios it can't handle. That's just dangerous and rude to other drivers.

I do report my findings to Tesla. I only need to test enough to determine whether a feature is reliable. I share that with others, who will hopefully spread the word to prevent any mishaps that could harm other drivers and the Tesla brand.

There are other drivers right now, possibly not on TMC, who are trying to push every button. Better to have a group of people who test things in a controlled manner and report issues than to have someone else report issues after they have had an accident.

I just treat AP2 like a new teenage driver. You let them fly to see their limits, but never far enough for them to hurt themselves or reach a point of no return where you can't take the wheel back.

Hopefully, it won't be necessary to put the vehicle into valet mode in order to keep Autopilot under control...

krazineurons

Isn't the fleet learning model just about that? Each driver behaves differently in different situations. AP records its own behavior and the driver's behavior and uploads both to the AP servers; similarly, all the objects the camera is scanning are sent to the server and also processed by the on-board computer.

The on-board computer has a version of the trained model, runs the scenario through it, and performs an action. The server keeps processing new data, updates the model, and pushes it to the on-board computer in all cars.
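
The upload, retrain, and push loop described above can be sketched in a few lines of Python. This is a toy under loud assumptions: the "model" is just a lookup table mapping a scenario label to the most common driver action, and `FleetServer`, `Car`, and the scenario strings are hypothetical names. Nothing here reflects how Tesla actually trains or distributes its networks.

```python
from collections import Counter, defaultdict


class FleetServer:
    """Toy fleet-learning server (hypothetical): collects samples, retrains, versions."""

    def __init__(self):
        self.samples = defaultdict(Counter)  # scenario -> Counter of driver actions
        self.model = {}                      # scenario -> action the fleet should take
        self.version = 0

    def upload(self, scenario: str, driver_action: str) -> None:
        """A car reports what the driver did in a given scenario."""
        self.samples[scenario][driver_action] += 1

    def retrain(self) -> None:
        """'Train' by taking the most common driver action per scenario."""
        self.model = {s: c.most_common(1)[0][0] for s, c in self.samples.items()}
        self.version += 1


class Car:
    """Toy on-board computer holding a local copy of the fleet model."""

    def __init__(self):
        self.model = {}
        self.model_version = 0

    def sync(self, server: FleetServer) -> None:
        """Pull the server's model if it is newer than the local copy."""
        if server.version > self.model_version:
            self.model = dict(server.model)
            self.model_version = server.version

    def act(self, scenario: str, default: str = "continue") -> str:
        """Run a scenario through the local model; unknown scenarios fall back."""
        return self.model.get(scenario, default)
```

In this toy, a car's behavior changes only after the server retrains and the car syncs; repeating a scenario in one car just adds samples on the server.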

Probably they enhanced the code to do more in this release, but the fact that MXWing saw it work in 9 of 10 scenarios is also a result of the neural net processing.

Economite

Member

My question is, why is Tesla releasing features with instructions that are so vague that they require this sort of reliability testing?... They shouldn't be outsourcing this function to untrained volunteers like yourself. If something is unreliable, it either shouldn't be released or should be released with instructions about when it is unreliable that are so clear there is no need for your testing.

Economite

Member

I think you're way overestimating the sophistication and depth of the machine learning. I doubt Tesla is using it in such a broad-based way. It would be impossible to debug. Also, I suspect there is a real limit to the amount of data they are actually uploading to the mothership. I bet most of the "machine learning" taking place is actually just simple map notation happening in-car.

No way to know that this has anything to do with neural net processing. And, in this context, 9 of 10 is a pretty horrible and dangerous result.
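
To see why a 9-in-10 detection rate is worrying, assume (as a simplification) that each stopped-object encounter is an independent trial with the same detection rate p. The chance of at least one miss in n encounters is then 1 - p^n. A short Python check of MXWing's rough 9/10 figure against the 99.999/100 he would like to see:

```python
def p_at_least_one_miss(detection_rate: float, encounters: int) -> float:
    """Probability of missing at least one stopped object in `encounters` tries.

    Assumes every encounter is independent with the same detection rate,
    which real driving (night, empty adjacent lanes, etc.) will not satisfy.
    """
    return 1 - detection_rate ** encounters


for rate in (0.9, 0.99999):
    for n in (10, 100):
        print(f"detection {rate:.3%}, {n:>3} stops: "
              f"P(at least one miss) = {p_at_least_one_miss(rate, n):.3%}")
```

At 90% detection, just ten stopped cars already give about a 65% chance of at least one miss; at 99.999%, the same ten stops give roughly 0.01%.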

Economite... Lordy, Lordy... why so negative??

MXWing and others... love the feedback and updates, keep 'em coming.

Lots of us out here who appreciate your time, thoughts and experience

I could have said AP2 was like a drunk, sleepy, new teenage driver, but that would have been a little harsh.

TACC has improved significantly in just two weeks. I'll be very interested to hear what Tesla says in response to my inquiry about whether they made specific improvements to address immobile object detection.

Updated responses from Tesla after submitting feedback:

"Object detection and TACC are improved with time in combination with updates. As a vehicle “learns” how to respond to objects and conditions the fleet will also benefit from the new data. We appreciate you taking the time to log your findings. I have logged your additional input to the request tracking your issues."

followed up by

"Thank you for these updates. 8.0 (2.50.201) is a refinement for improved performance of the existing features.

I am glad to hear you’ve noticed improved object detection. You are correct that the instrument cluster should display a rendering of what your vehicle detects.

I will pass along your information about this incident. Do you know about at what distance you began braking?"

---

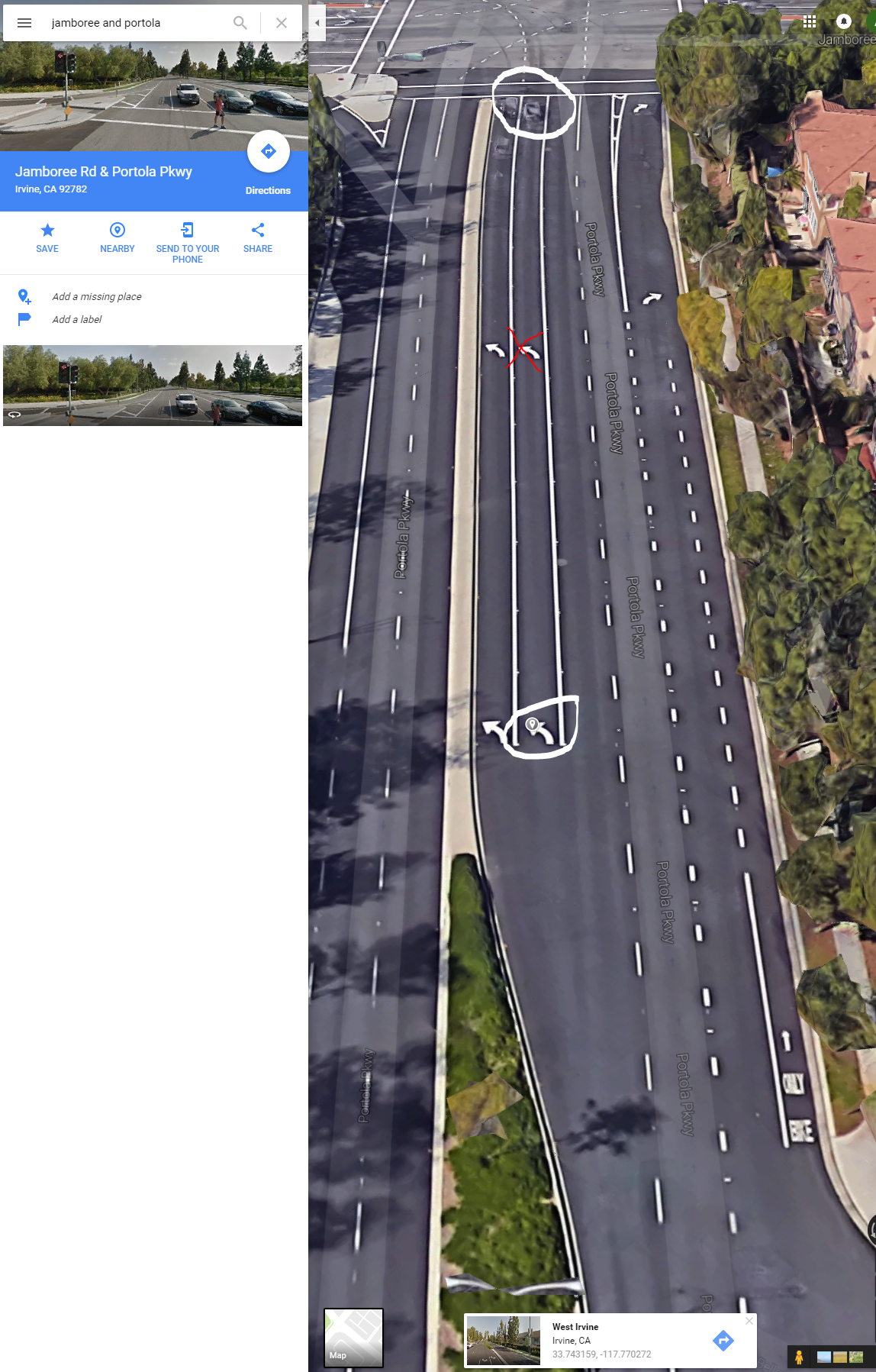

White circles are car positions. X is approximately where I applied the brakes. TACC has typically detected vehicles as soon as I entered the lane at that distance, or a quarter of the way in at the latest. I believe Tesla Vision had difficulty here because there were no cars adjacent to the stopped cars. It was also dark, with minimal light sources to aid the cameras.

The main takeaway: if the instrument cluster does not render the car, Tesla Vision did not see it.