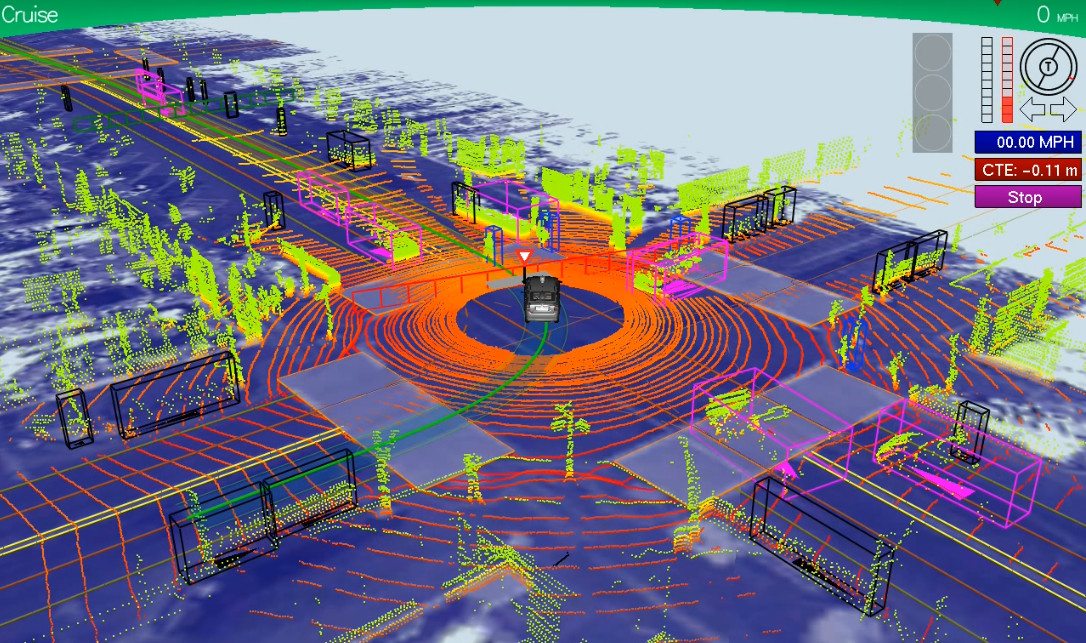

I came across an interesting approach to making AI agents more effective and decided to share it, since it is potentially applicable to autonomous cars as well:

Next Big Future: Artificial Intelligence Agent outplays human and the in Game AI in Doom Video Game

(The paper is here: [1609.05521] Playing FPS Games with Deep Reinforcement Learning)

On autonomous cars, the authors say:

"the deep reinforcement learning techniques they used to teach their AI agent to play a virtual game might someday help self-driving cars operate safely on real-world streets and train robots to do a wide variety of tasks to help people"

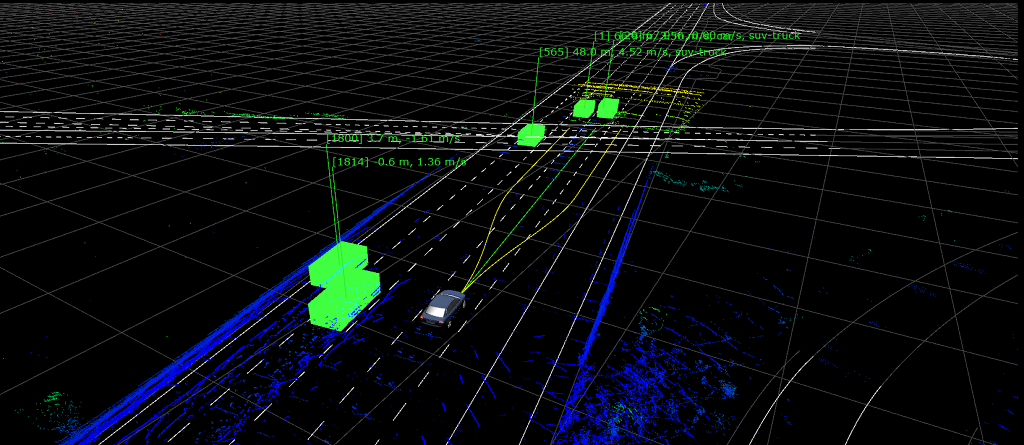

Indeed, this approach learns only from what it observes in the 3D environment and still outperforms human players. So if there are several trained networks (as proposed) that handle both navigation and taking actions using the neural network's memory, and those networks make better decisions than a human driver can, then the safety of autonomous cars might improve well beyond what we could expect even from an experienced driver.
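To make the "several trained networks" idea a bit more concrete, here is a minimal sketch of how an agent might switch between a navigation policy and an action policy. It assumes a simple rule (use the action policy when a target is detected, otherwise navigate), and every name in it (`make_switching_agent`, `toy_target_visible`, `toy_navigate`, `toy_act`) is a hypothetical stand-in of mine for a trained network, not code from the paper.

```python
from typing import Callable, Sequence

Observation = Sequence[float]
Policy = Callable[[Observation], str]


def make_switching_agent(
    target_visible: Callable[[Observation], bool],
    navigate: Policy,
    act: Policy,
) -> Policy:
    """Combine two policies into one agent.

    Assumed rule: if a target is detected in the current observation,
    delegate to the action policy; otherwise delegate to navigation.
    """
    def agent(obs: Observation) -> str:
        return act(obs) if target_visible(obs) else navigate(obs)

    return agent


# Toy stand-ins for trained networks (hypothetical, for illustration only).
def toy_target_visible(obs: Observation) -> bool:
    # Pretend the first feature is a "target detected" score.
    return obs[0] > 0.5


def toy_navigate(obs: Observation) -> str:
    return "move_forward"


def toy_act(obs: Observation) -> str:
    return "engage"


agent = make_switching_agent(toy_target_visible, toy_navigate, toy_act)
print(agent([0.9, 0.1]))  # target detected: action policy decides
print(agent([0.1, 0.1]))  # no target: navigation policy decides
```

In a real system each of the three functions would be a trained model working on camera frames, and for a car the "action" policy would be something like braking or avoiding an obstacle rather than shooting.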
