mspohr


As soon as they can figure out how to train them, they'll be ruling the road.

I believe that you have stumbled onto Tesla's secret technology: A single fly brain embedded in every AP computer.



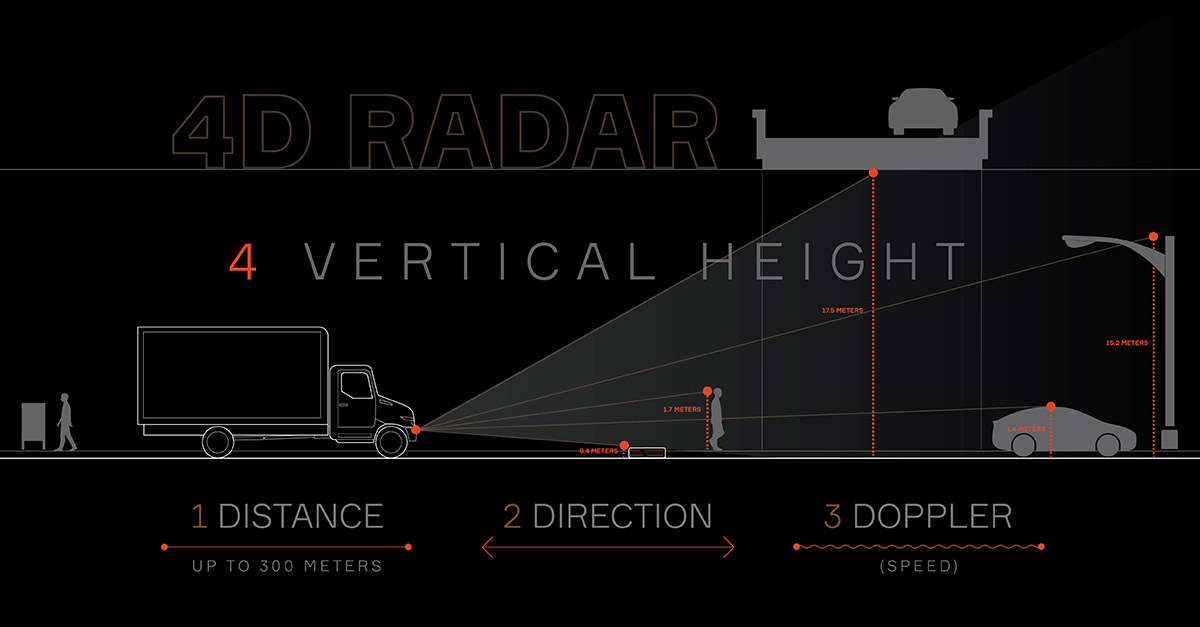

Competition handles this just fine. With the support of multiple inputs, they are leaps and bounds ahead of Tesla in autonomy.

Elon has mentioned that his decision to focus on Vision (cameras) is due to the heavy processing and competing data generated by using both Vision and Radar at the same time. With both sensors operating, the computer must take in the two data streams, compare them, and try to sort out which of the two scenarios to follow. That can be a very difficult decision for the computer.
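A minimal sketch of the "compare and sort out" step described above, assuming each sensor reports a distance to the lead object with a known 1-sigma uncertainty. The names `RangeEstimate`, `fuse_or_pick`, and `gate_sigmas` are hypothetical, and this is a textbook gating plus inverse-variance weighting approach, not Tesla's or any competitor's actual method.

```python
import math
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """Distance to the lead object in metres with a 1-sigma uncertainty (hypothetical type)."""
    distance_m: float
    sigma_m: float


def fuse_or_pick(vision: RangeEstimate, radar: RangeEstimate,
                 gate_sigmas: float = 3.0) -> RangeEstimate:
    """Fuse two range readings if they agree; otherwise keep the more certain one."""
    gap = abs(vision.distance_m - radar.distance_m)
    combined_sigma = math.sqrt(vision.sigma_m ** 2 + radar.sigma_m ** 2)
    if gap > gate_sigmas * combined_sigma:
        # Readings are inconsistent: averaging would place an object where
        # neither sensor saw one, so trust the lower-uncertainty reading.
        return min(vision, radar, key=lambda e: e.sigma_m)
    # Inverse-variance weighting: the less noisy sensor gets more weight.
    w_v = 1.0 / vision.sigma_m ** 2
    w_r = 1.0 / radar.sigma_m ** 2
    fused = (w_v * vision.distance_m + w_r * radar.distance_m) / (w_v + w_r)
    return RangeEstimate(fused, math.sqrt(1.0 / (w_v + w_r)))
```

With readings that agree (say 50 ± 2 m from vision and 48 ± 1 m from radar), the sketch averages them to about 48.4 m, leaning toward the less noisy sensor; with readings that disagree beyond the gate, it simply keeps the tighter one. Real trackers also use object association and history over time, so treat this as an illustration of why the decision is hard, not as a solution.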

I keep coming back to the fact that people have been driving cars for the past 100 years using only their two eyes (and a sophisticated neural net that is our brain).

It's only a matter of time until the Tesla neural net becomes sophisticated enough to manage driving. It is not necessary for the Tesla net to be as capable as a human brain, just capable enough to drive. In nature, small insects manage to navigate complex 3D environments with very small neural nets. I think that the Tesla net could become as good as that of a fly.

I think a closer analogy would be a dragonfly. There have been studies on using dragonflies to augment robot vision, based on how they predict their prey's movements.

Processing power: It could be that the neuron count of a fly is sufficient to get a driver's license and drive safely. Maybe we'll learn that one day. Today there is no evidence of that.

Neural network structure: Tesla and others use very different types of NNs compared to flies and humans. Maybe someday someone will figure out how to build artificial networks comparable to what flies use. This has been a research topic for decades, and today we are not even close. That said, it is likely that the structure does not need to emulate an organic brain, that general intelligence is not needed, and that current deep-learning-like approaches could one day prove sufficient.

Thus the question is: (A) Will Tesla R&D come up with a neural network structure capable of driving autonomously, and (B) does AP/HW3 have enough processing power to run that neural network? Someday Tesla may be able to answer yes to A. If it does, it might later be able to answer yes to B. Both are needed for current-generation cars to become autonomous.
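Question (B) is, at its simplest, a throughput comparison. A rough sketch, assuming a network's cost can be summarized as operations per camera frame; every input (`gops_per_frame`, `cameras`, `fps`, `available_tops`, `utilization`) is a hypothetical placeholder, since the thread gives no figures for HW3 or for Tesla's networks.

```python
def required_tops(gops_per_frame: float, cameras: int, fps: float) -> float:
    """Trillions of operations per second needed to run the network on every camera frame."""
    return gops_per_frame * cameras * fps / 1000.0


def fits_compute_budget(gops_per_frame: float, cameras: int, fps: float,
                        available_tops: float, utilization: float = 0.5) -> bool:
    """Rough check for question (B): does the workload fit the computer's usable throughput?

    utilization is the (assumed) fraction of the peak rating actually sustained.
    """
    return required_tops(gops_per_frame, cameras, fps) <= available_tops * utilization
```

The `utilization` factor stands in for the fact that accelerators rarely sustain their peak rating. This check ignores memory bandwidth and latency limits, which can matter as much as raw operation count.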

When one sense or sensor fails, a smart being depends on what they have.

There are all kinds of problems with snow. LiDAR and radar aren’t reading snow-covered road signs either. Bad weather is a use case to be handled after fair-weather FSD is solved.

I think we're a very long way from AI handling this:

When one sense or sensor fails, a smart being depends on what they have.

As a human, when driving in a snowstorm that has covered the street and signs, I use a memorized "map": I recall what the signs were, look at the environment, and figure out where the road is.

Cars should do the same: use all the input they can gather (radar, vision, map, sound, GPS, ...) and draw intelligent conclusions from it.
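One way to picture "use all the input they can gather" is a weighted average over whichever sources are still reporting, so a blinded sensor lowers confidence instead of ending the estimate. A minimal sketch, assuming each source (including a remembered map) gives a lateral lane-centre offset in metres with a 1-sigma uncertainty; `fuse_available` and the source names are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Reading = Optional[Tuple[float, float]]  # (value, 1-sigma), or None if the source is unavailable


def fuse_available(readings: Dict[str, Reading]) -> Optional[Tuple[float, float]]:
    """Inverse-variance average over every source that is currently reporting."""
    weight_sum = 0.0
    weighted_total = 0.0
    for reading in readings.values():
        if reading is None:
            continue  # sensor failed or blinded: skip it instead of giving up
        value, sigma = reading
        weight = 1.0 / sigma ** 2
        weighted_total += weight * value
        weight_sum += weight
    if weight_sum == 0.0:
        return None  # nothing usable left; the driver has to take over
    return weighted_total / weight_sum, math.sqrt(1.0 / weight_sum)
```

In the snowstorm case, vision would report `None` while the map memory and GPS still contribute, for example `fuse_available({"vision": None, "map_memory": (0.0, 0.5), "gps": (0.5, 1.0)})`, and the returned sigma shows how much weaker the remaining estimate is.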

At the extreme: OK, Tesla perfects FSD based on vision, let's say in 2 weeks. It's perfect* and everyone loves it.

*In good weather.

They're working inside their own self-defined "geo" fence: good weather. Then what? Whoops, it's raining hard, take over, bub. I already pull over when it's unsafe to drive in bad weather, so how is this helping? OK, in good weather it's better than 90% or whatever. What percent of all accidents does that solve? Not 100, that's for sure.

I think that's a deal killer. And they don't get ANY knock-on functionality that they could use in the future to enhance regular driving, such as the ability to see through fog, rain, snow, or darkness.

Just seems like a huge blind spot, and the result will be a gimmick for daytime good weather use. Cool story, bro (Elon).

[long boring parts of trips are already really well solved by free AP, so that's not part of the FSD benefit]
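The "what percent of all accidents" question reduces to one multiplication. A sketch with hypothetical inputs (the thread gives no accident statistics): a system that only operates in good weather can prevent at most the good-weather share of accidents, however well it performs there.

```python
def share_of_accidents_addressed(good_weather_share: float,
                                 reduction_in_good_weather: float) -> float:
    """Fraction of all accidents avoided by a system that only operates in good weather.

    good_weather_share: fraction of all accidents that happen in good weather.
    reduction_in_good_weather: fraction of those the system prevents.
    """
    for x in (good_weather_share, reduction_in_good_weather):
        if not 0.0 <= x <= 1.0:
            raise ValueError("inputs must be fractions between 0 and 1")
    # Bad-weather accidents are untouched, so the result can never exceed
    # good_weather_share, however good the system is in fair conditions.
    return good_weather_share * reduction_in_good_weather
```

Even a perfect fair-weather system (`reduction_in_good_weather=1.0`) returns exactly `good_weather_share`, which is below 100% whenever any accidents happen in bad weather.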

And yet, people keep regularly smashing into each other in hundred-car pileups with dozens of fatalities when they encounter fog or smoke. That is why I think radar should always be included. It doesn’t need to be high resolution to avoid a bunch of stopped or slow cars.
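Whether a sensor sees stopped cars in time is mostly a range question. A minimal sketch using the standard stopping-distance formula, reaction distance plus braking distance, v·t + v²/(2a); the 1 s reaction time and 6 m/s² deceleration defaults are illustrative assumptions, not measured values.

```python
def stopping_distance_m(speed_kmh: float, reaction_s: float, decel_mps2: float) -> float:
    """Reaction distance plus braking distance: v*t + v^2 / (2a)."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * decel_mps2)


def can_stop_in_time(detection_range_m: float, speed_kmh: float,
                     reaction_s: float = 1.0, decel_mps2: float = 6.0) -> bool:
    """True if a stationary obstacle detected at detection_range_m can be avoided by braking.

    The defaults are illustrative assumptions, not measured values.
    """
    return detection_range_m >= stopping_distance_m(speed_kmh, reaction_s, decel_mps2)
```

At 108 km/h (30 m/s), with those assumptions the car needs about 105 m, so detecting a stopped obstacle at 150 m leaves margin while 50 m of fog-limited visibility does not.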


Not so simple.

We're a long way from humans handling snow, too. We do a very poor job of it. On the first snow of the season, thousands of otherwise good snow drivers bump into each other like bumper cars, forgetting how little grip they have. I almost always slide through the stop sign at the end of the block on day one, even though I remember to think about it.

photonicsreport.com


Yeah, that was a shock. I had to stop the video. I might be able to finish it later knowing what to expect, but maybe not; I have another 12 minutes to go!

Oh my, her voice.

Very impressive, actually, just an unusual voice for that knowledge level.

What do you think about what she said?

I think they have worked really hard to avoid doing any of this. Maybe I'm wrong, but if you get into manual pathing, then that data has a shelf life, as things slowly change over time. I expect they have been taking the "high road" on this and trying to keep the pathing a purely algorithmic programming exercise. I'd love to have one of the FSD engineers comment on that at some point.
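"Pure algorithmic" pathing here means computing a route from what the car currently perceives rather than replaying stored, hand-made paths. A textbook breadth-first search over a toy occupancy grid illustrates the idea; it is not Tesla's planner, and `plan_path` and the grid format ('#' = blocked cell) are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

Because the path is derived from the current grid on every call, a shifted lane or a new obstacle is picked up automatically, which is the "shelf life" advantage over stored manual paths.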

Since this thread is addressing Tesla's lack of lidar, your bad-weather argument is moot, because the laser in lidar can't shine through inclement weather either. OK, so now let's bring in radar, which CAN see through inclement weather. If the weather is so bad that vision is useless, can the car continue to drive on radar alone? Not even close. Radar resolution is poor, and, like lidar, it can't read signs or traffic light status or see lane lines.
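The "radar resolution is poor" point can be put in rough numbers. A sketch using the common rule of thumb that beamwidth ≈ wavelength / antenna aperture (in radians); 77 GHz is typical of automotive radar, while the 10 cm aperture is an assumption, and real sensors with antenna arrays and signal processing can do better.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def cross_range_resolution_m(freq_hz: float, aperture_m: float, range_m: float) -> float:
    """Approximate beam footprint width at range_m, using beamwidth ~ wavelength / aperture.

    Objects closer together than this across the beam tend to blur into one return.
    """
    wavelength_m = SPEED_OF_LIGHT_MPS / freq_hz
    beamwidth_rad = wavelength_m / aperture_m
    return range_m * beamwidth_rad
```

At 77 GHz the wavelength is about 3.9 mm, so a 10 cm aperture gives a beam roughly 0.039 rad wide, about 3.9 m across at 100 m. That is comparable to a lane width, which is why two objects side by side at that range can merge into one return.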

Yes, it does need high resolution, as it has to distinguish stopped cars from low overhead bridges, distinguish low overhead bridges from low overhead bridges *and* stopped cars, and cope with elevation changes up, down, and up and down.
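The bridge-versus-stopped-car case comes down to elevation resolution. A sketch computing the vertical angle separating two objects at the same range; `elevation_separation_deg`, `can_separate`, and the 5 m / 150 m figures below are illustrative assumptions.

```python
import math


def elevation_separation_deg(height_diff_m: float, range_m: float) -> float:
    """Vertical angle between two objects at the same range, height_diff_m apart."""
    return math.degrees(math.atan2(height_diff_m, range_m))


def can_separate(height_diff_m: float, range_m: float, elevation_res_deg: float) -> bool:
    """True if a sensor with the given (hypothetical) elevation resolution can tell them apart."""
    return elevation_separation_deg(height_diff_m, range_m) > elevation_res_deg
```

A 5 m height difference at 150 m subtends only about 1.9°, so the radar must resolve elevation finer than that to tell the bridge from a car beneath it, and a road that climbs or dips toward the bridge shifts both angles further.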