I'm starting this thread to analyze the incident reports Tesla files with NHTSA for crashes that might involve FSD, AP or NOA. Note that Tesla has to report any crash where AP/FSD/NOA was engaged at any point within the 30 seconds before the crash, even if it was no longer engaged at the moment of the crash.

Here is the NHTSA site where you can see details about the report and download the data. The data is for all OEMs.

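Standing General Order on Crash Reporting | NHTSA (www.nhtsa.gov)

NHTSA has issued a General Order requiring the reporting of crashes involving automated driving systems or Level 2 advanced driver assistance systems.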

Data sheet, 2022 : https://static.nhtsa.gov/odi/ffdd/sgo-2021-01/SGO-2021-01_Incident_Reports_ADAS.csv

Data Definitions : https://static.nhtsa.gov/odi/ffdd/sgo-2021-01/SGO-2021-01_Data_Element_Definitions.pdf

Tesla redacts many of the fields, reporting them as "[REDACTED, MAY CONTAIN CONFIDENTIAL BUSINESS INFORMATION]". Still, there are a lot of useful fields left for analysis. We can use them to try to figure out how many crashes are AP/NOA and how many are FSDb, which is my first objective.

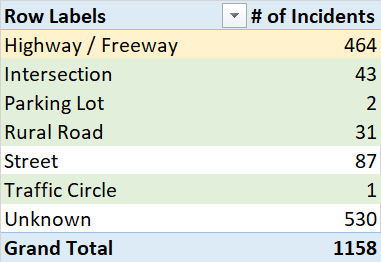
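To see which fields are actually usable, one option is to count how often each column holds the redaction marker in Tesla's rows. This is only a rough sketch: the `Make` and `Narrative` column names and the sample rows are my assumptions for illustration, so check them against the real CSV header and the data definitions PDF before relying on it.

```python
import csv
import io
from collections import Counter

# Redaction marker exactly as it appears in the data sheet.
REDACTED = "[REDACTED, MAY CONTAIN CONFIDENTIAL BUSINESS INFORMATION]"

# Made-up sample standing in for the downloaded CSV (values are illustrative only).
SAMPLE_CSV = f'''Make,Roadway Type,Narrative
Tesla,Highway / Freeway,"{REDACTED}"
Tesla,Street,"{REDACTED}"
Honda,Street,Vehicle was struck from behind at a stop light
'''

def redaction_counts(rows, make="Tesla"):
    """Count, per column, how many rows for `make` hold the redaction marker."""
    counts = Counter()
    for row in rows:
        # "Make" is an assumed column name; adjust to the real header.
        if (row.get("Make") or "").strip().lower() != make.lower():
            continue
        for column, value in row.items():
            if isinstance(value, str) and value.strip() == REDACTED:
                counts[column] += 1
    return counts

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
counts = redaction_counts(rows)
for column, n in counts.most_common():
    print(f"{column}: {n} redacted")
```

For the real file, replace `io.StringIO(SAMPLE_CSV)` with `open(path, newline="", encoding="utf-8")` pointing at the downloaded CSV. Columns that never show up in the result are the ones worth pivoting on.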

For example, below is a pivot table by "Roadway Type". Yellow is clearly AP/NOA, green is FSD, and the other two could be mixed.

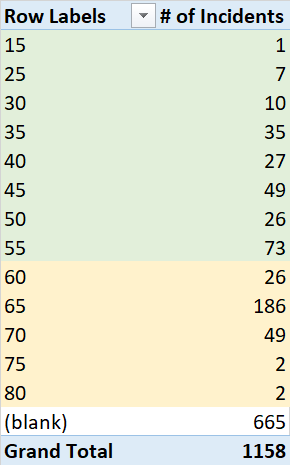
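If you would rather reproduce the pivot in code than in a spreadsheet, a simple count per "Roadway Type" value gets the same table. A minimal sketch, assuming the rows were already loaded as dictionaries (for example with `csv.DictReader`); the category values shown here are made up for illustration and may not match the real data.

```python
from collections import Counter

# Hypothetical rows; in practice these come from the downloaded CSV.
rows = [
    {"Make": "Tesla", "Roadway Type": "Highway / Freeway"},
    {"Make": "Tesla", "Roadway Type": "Street"},
    {"Make": "Tesla", "Roadway Type": "Highway / Freeway"},
    {"Make": "Tesla", "Roadway Type": "Intersection"},
    {"Make": "Tesla", "Roadway Type": ""},
]

def pivot_count(rows, column):
    """Count rows per distinct value of `column`; blanks are grouped as 'Unknown'."""
    return Counter((row.get(column) or "Unknown").strip() for row in rows)

pivot = pivot_count(rows, "Roadway Type")
for value, n in pivot.most_common():
    print(f"{value:<20} {n}")
```

Which categories count as AP/NOA versus FSD is still a judgment call made by reading the table; the code only does the counting.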

Here is the breakdown by posted speed limit. Again, we can assume anything below 60 mph is FSDb, though there are edge cases.
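The below-60 rule of thumb can be written as a small classifier. This is a sketch under my own assumptions: the speed limit values are made up, I treat blank or redacted values as "unknown", and I leave anything at 60 or above as "undetermined" since the rule by itself does not say whether those are AP/NOA or FSDb.

```python
import math
from collections import Counter

FSDB_MAX_MPH = 60  # cutoff from the rule of thumb above, not an official boundary

def classify_by_speed_limit(raw, cutoff=FSDB_MAX_MPH):
    """Label one posted-speed-limit value using the below-60 heuristic."""
    try:
        mph = float(raw.strip())
    except (AttributeError, ValueError):
        return "unknown"  # blank, redacted, missing, or non-numeric
    if not math.isfinite(mph):
        return "unknown"
    return "likely FSDb" if mph < cutoff else "undetermined"

# Made-up values for illustration; real ones come from the speed limit column.
speed_limits = ["25", "45", "65", "", "60", "35"]
breakdown = Counter(classify_by_speed_limit(v) for v in speed_limits)
print(breakdown)
```

Combining this label with the roadway type should help with the edge cases, for example a low speed limit on a highway ramp.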