Sign up to get on the tester list here: xAI Grok

www.teslarati.com

Tesla cars will run a small version of Grok at some point.

Release announcement: Announcing Grok

Excerpts:

- Grok is designed to answer questions with a bit of wit and has a rebellious streak, so please don’t use it if you hate humor!

- A unique and fundamental advantage of Grok is that it has real-time knowledge of the world via the 𝕏 platform. It will also answer spicy questions that are rejected by most other AI systems.

- Grok is still a very early beta product – the best we could do with 2 months of training – so expect it to improve rapidly with each passing week with your help.

- We provide a summary of the important technical details of Grok-1 in the model card.

- If that sounds exciting to you, apply to join the team here.

- We give Grok access to search tools and real-time information, but as with all the LLMs trained on next-token prediction, our model can still generate false or contradictory information.

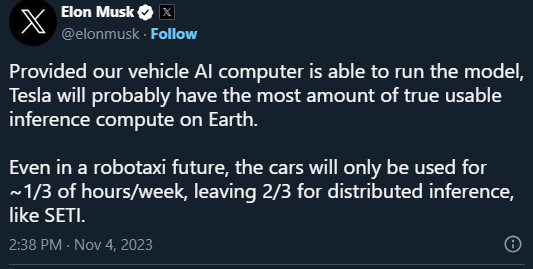

Tesla to run smaller native version of xAI’s Grōk using local compute power

The first product from Elon Musk-led xAI was announced on Friday, and the CEO has suggested that Tesla's vehicles may natively run a...
