> What do you propose as an alternative system for evaluating safety?

Of course, all Waymo needs is to post a one-paragraph statement every quarter, just like Tesla.

Autonomous Car Progress

- Thread starter: stopcrazypp

- Tags: Autonomous Vehicles

linux-works

Active Member

> Anyway, it is a pity, that Tesla and Mobileye ended their collaboration. I’m sure world would now have better autonomous cars on the road, if that was not the case.

you think tesla brought the brains to that combo?

lols

ME was right to cut tesla loose. they will advance without the liability known as tesla.

sorry fans. bitter pill to take. can your ego survive?

powertoold

Active Member

Mobileye has big brains if they think two separate perception systems that fail once every 10,000 hours leads to a system with a failure every 100,000,000 hours.

I can't believe some of you claim to work in the field. It's ok though. Milton has a shiny Badger to sell to y'alls.

> you think tesla brought the brains to that combo?
>
> lols
>
> ME was right to cut tesla loose. they will advance without the liability known as tesla.
>
> sorry fans. bitter pill to take. can your ego survive?

This might be the first time I get called a Tesla fan on this forum. On other forums it has happened, but because this is a mostly pro-Tesla site, this might be the first time. Well, after 3000 messages, I think it is nice for a change.

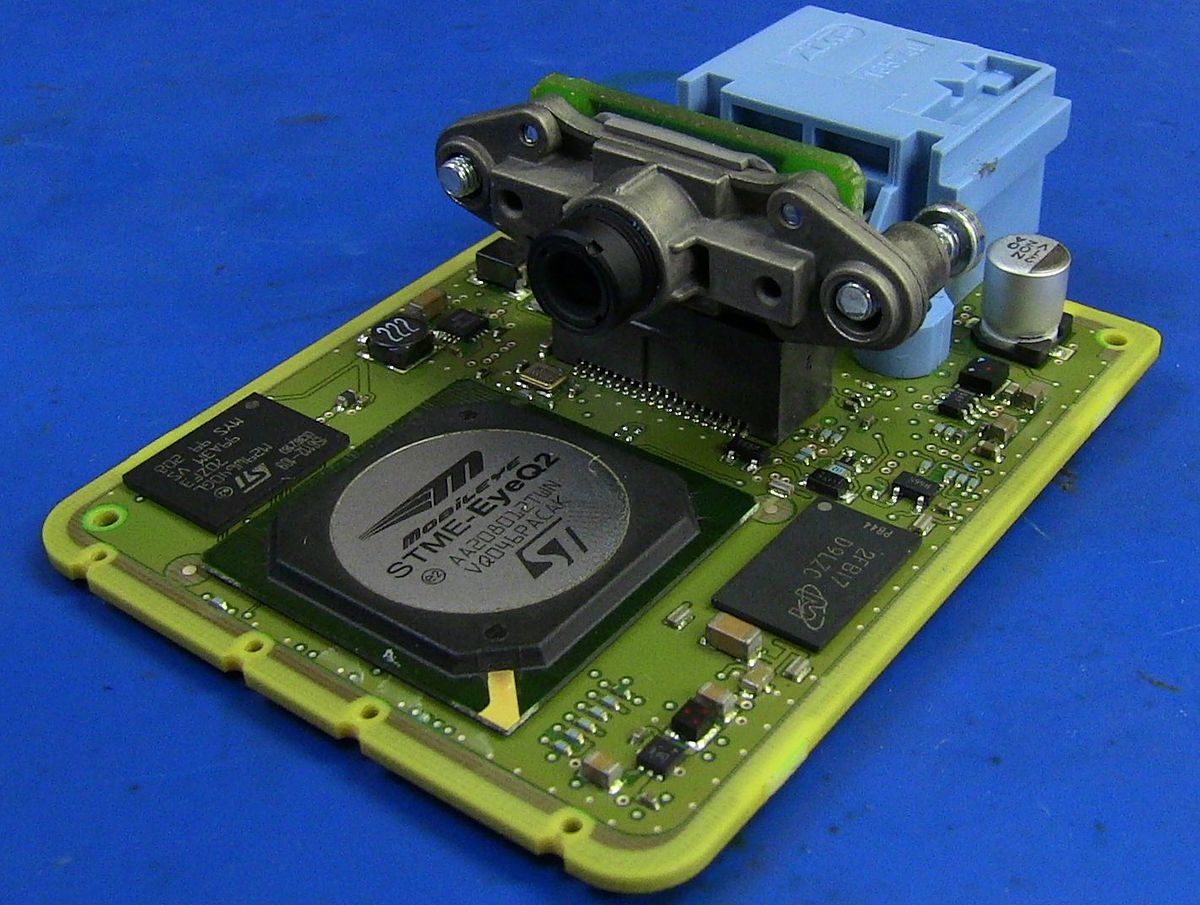

Back on topic: here are the different manufacturers using EyeQ3. I admit that I’m not familiar with the quality of the other manufacturers' systems using the same chip.

> Mobileye has big brains if they think two separate perception systems that fail once every 10,000 hours leads to a system with a failure every 100,000,000 hours.
>
> I can't believe some of you claim to work in the field. It's ok though. Milton has a shiny Badger to sell to y'alls.

First things first: I don’t work in this field.

1/10,000 * 1/10,000 = 1/100,000,000 ?
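That arithmetic holds only under an independence assumption. A minimal sketch, assuming each subsystem fails in any given hour with probability 1/10,000 and that the two failures are fully independent (the function name `joint_failure_probability` is made up for illustration, not something from Mobileye):

```python
from fractions import Fraction


def joint_failure_probability(p_a: Fraction, p_b: Fraction) -> Fraction:
    """Probability that both subsystems fail in the same hour,
    ASSUMING their failures are statistically independent."""
    return p_a * p_b


# Per-hour failure probability of each subsystem (the figure quoted in the thread).
p_single = Fraction(1, 10_000)

p_both = joint_failure_probability(p_single, p_single)
# Mean number of hours between simultaneous failures under this assumption.
hours_per_joint_failure = 1 / p_both

print(p_both)                   # 1/100000000
print(hours_per_joint_failure)  # 100000000
```

If the failures are correlated at all, the product is only a lower bound on the joint failure probability, so the real number of hours between joint failures would be smaller.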

FloridaJohn

Member

> Mobileye has big brains if they think two separate perception systems that fail once every 10,000 hours leads to a system with a failure every 100,000,000 hours.
>
> I can't believe some of you claim to work in the field. It's ok though. Milton has a shiny Badger to sell to y'alls.

What is Tesla’s published failure rate of their perception system? You know, for comparison purposes.

diplomat33

Average guy who loves autonomous vehicles

> Mobileye has big brains if they think two separate perception systems that fail once every 10,000 hours leads to a system with a failure every 100,000,000 hours.

Shashua is not saying that it will be exactly 100,000,000 hours per failure. He feels that it will be a good approximation. When two systems are completely independent, the odds of both systems failing at the same time are the product of the odds of each system failing by itself. So, since both systems are close to independent, Shashua feels that the product of the odds will be a good approximation.

And the point is not to provide an exact failure rate but to give a ballpark figure that shows that having two systems will be orders of magnitude safer than having just one system.

linux-works

Active Member

> I can't believe some of you claim to work in the field. It's ok though. Milton has a shiny Badger to sell to y'alls.

I can't believe how eager many of you are to keep proving the proverb:

"it is better to be thought a fool, than to speak up and remove all doubt"

but again, ego is the watchword of this website. this is not a tech forum; it's a fanboy forum. this constant 'my dad is bigger than your dad' is really getting tiring.

I can see why actual company support and engineer types don't stay long on forums like this. it turns a lot of people off when it's 90% fanboys trying to out piss each other. worse when they just don't know what the hell they are talking about and are damned proud of it, too.

story of two americas, in fact, in a microcosm, right here.

> Shashua is not saying that it will be exactly 100,000,000 hours per failure. He feels that it will be a good approximation. When two systems are completely independent, the odds of both systems failing at the same time, is the product of the odds of each system failing by itself. So, since both systems are close to independent, Shashua feels that the product of the odds will be a good approximation.
>
> And the point is not to provide an exact failure rate but to give a ballpark figure that shows that having two systems will be orders of magnitude safer than having just one system.

This is an interesting issue. The two systems are independently analyzing the situation, and therefore the probabilities of failure are assumed to be random in each and therefore statistically uncorrelated. If that were clearly true, the combined probability calculation would be defensible.

However, this kind of statistical predictive calculation is fraught with major possibilities for error. It would be correct if the possibility of failure in each had nothing to do with the external input, but only with some kind of random noise-generated failure within the systems themselves. IMO, however, it is quite likely that each has a chance of perceptual error that is highly influenced by challenging external scenarios.

Each (Vision and fused Radar/Lidar) may actually have much better than 10^-4 (1 in 10,000) failure when presented with everyday scenarios that were trained for, but significantly worse when presented with unusual, unknown and/or untrained scenarios. Thus, in the set of unusual events (edge cases as we like to call them), the probability of failure may be far higher than 10^-4 for each, and since the major causative factor was the occurrence of the edge-case scenario, that is challenging for both systems, we can reasonably conclude that the probability of edge-case failures will then not be statistically independent. This has two very important implications:

- The 10^-4 baseline assumption is invalidated for this set of edge cases (maybe it's now 10^-3 (1 in 1,000) or 10^-2 (1 in 100) for this troublesome set).

- Further and very importantly, we can no longer take comfort in the multiply-them-together estimation method because the cause of failure was not random between them, but exposed possible vulnerabilities of each. It is in this very set where this is likely to be true.
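To put rough numbers on the two points above, here is a minimal sketch of a common-cause model. Everything in it is a made-up illustration, not Mobileye data: the share of driving hours that are edge cases (`q_edge`), the per-system failure probability inside an edge case (`p_edge`) and outside one (`p_normal`). It even grants that the two systems fail independently once the scenario type is known; the shared scenario alone is enough to break the multiply-them-together estimate.

```python
def marginal_and_joint(q_edge, p_edge, p_normal):
    """Two perception systems whose failure odds both depend on the scenario.

    q_edge:   fraction of hours spent in edge-case scenarios (hypothetical)
    p_edge:   per-system failure probability during an edge-case hour
    p_normal: per-system failure probability during an ordinary hour

    Within each scenario type the systems are treated as independent.
    Returns (per-system failure probability, probability both fail).
    """
    marginal = q_edge * p_edge + (1 - q_edge) * p_normal
    joint = q_edge * p_edge ** 2 + (1 - q_edge) * p_normal ** 2
    return marginal, joint


# Hypothetical values chosen so each system lands near 10^-4 overall.
q_edge = 1e-3
p_edge = 5e-2
p_normal = 5e-5

marginal, joint = marginal_and_joint(q_edge, p_edge, p_normal)
naive_product = marginal ** 2  # what the independence assumption predicts

print(f"per-system failure probability: {marginal:.3e}")
print(f"naive product (independence):   {naive_product:.3e}")
print(f"joint with shared edge cases:   {joint:.3e}")
print(f"joint / naive product:          {joint / naive_product:.0f}x")
```

With these invented inputs each system still looks like a 10^-4 system on its own, yet the chance of both failing together comes out a couple of orders of magnitude worse than the naive product, because nearly all joint failures are concentrated in the rare edge-case hours.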

Post-event analysis of accidents and failures often reveals the unexpected ways that engineering assumptions crumbled in the face of assumed-independent failures that turned out not to be independent. Events that were assumed to be statistically independent became related by a chain-of-unfortunate-events scenario that defied the comforting statistical predictions.

I'm not going all the way to say that Amnon is pushing a clear fallacy, but I'm strongly cautioning that this use of statistical prediction contains important assumptions that deserve to be challenged and re-examined. It sounds technically comforting but I'm not ready to accept the randomness premise behind it.

And in this whole discussion, we're glossing over the issue of how the outputs of these independent perception modules are eventually fused into a driving decision. That is a further non-statistical process. Voting on which side to believe is difficult when there can be serious errors in the confidence level of each side's perception.

rxlawdude

Active Member

> What do you propose as an alternative system for evaluating safety?
>
> Drug trials can be done by a third party. I can't really think of how that would work for autonomous vehicles.

Real world data from a multitude of users over a long time span that is not curated by a vested interest.

Did you ignore the analogy to a drug clinical trial? THAT'S WHY. Happy to cite multiple examples of fudged data in this context. Safety is safety, Daniel.

Daniel in SD

(supervised)

> Real world data from a multitude of users over a long time span that is not curated by a vested interest.
>
> Did you ignore the analogy to a drug clinical trial? THAT'S WHY. Happy to cite multiple examples of fudged data in this context. Safety is safety, Daniel.

The issue is that only the manufacturer has the simulation tools to figure out if a disengagement was necessary to avoid a collision. Tesla is going to have the same issue once FSD gets a few orders of magnitude better. How can an FSD beta user determine whether or not a collision would have occurred had they not disengaged?

Once systems are actually deployed and driving billions of miles I agree that the DMV can just track accident data and miles driven and determine safety. We won’t need to rely on the analysis done by the manufacturer.
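As a rough illustration of why that takes so many miles, here is a minimal sketch. It assumes accidents arrive as a Poisson process and covers only the simplest case, where zero events were observed; the target rate below is a placeholder for illustration, not a real statistic, and the function names are made up.

```python
import math


def miles_needed_without_event(target_rate_per_mile, confidence=0.95):
    """Miles that must be driven with ZERO events before one can say, at the
    given confidence, that the true event rate is below the target.

    Poisson assumption: P(no events in N miles) = exp(-rate * N).
    """
    return -math.log(1.0 - confidence) / target_rate_per_mile


def upper_bound_rate(miles_driven, confidence=0.95):
    """Upper confidence bound on the event rate after event-free miles."""
    return -math.log(1.0 - confidence) / miles_driven


hypothetical_target = 1e-8  # events per mile, illustrative placeholder only

print(f"{miles_needed_without_event(hypothetical_target):.3e} miles needed")
print(f"{upper_bound_rate(1e9):.3e} events per mile after 1e9 clean miles")
```

This is the familiar "rule of three": with no events in N miles, the 95% upper bound on the rate is roughly 3/N. Once events do occur the bound needs a fuller Poisson interval, which this sketch does not attempt.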

> Real world data from a multitude of users over a long time span that is not curated by a vested interest.
>
> Did you ignore the analogy to a drug clinical trial? THAT'S WHY. Happy to cite multiple examples of fudged data in this context. Safety is safety, Daniel.

Are you saying real world videos??? wait where is @Knightshade to call this hearsay.

diplomat33

Average guy who loves autonomous vehicles

> Each (Vision and fused Radar/Lidar) may actually have much better than 10^-4 (1 in 10,000) failure when presented with everyday scenarios that were trained for, but significantly worse when presented with unusual, unknown and/or untrained scenarios. Thus, in the set of unusual events (edge cases as we like to call them), the probability of failure may be far higher than 10^-4 for each, and since the major causative factor was the occurrence of the edge-case scenario, that is challenging for both systems, we can reasonably conclude that the probability of edge-case failures will then not be statistically independent. This has two very important implications:
>
> - The 10^-4 baseline assumption is invalidated for this set of edge cases (maybe it's now 10^-3 (1 in 1000) or 10^-2 (1 in 100) for this troublesome set)
>
> - Further and very importantly, we can no longer take comfort in the multiply-them-together estimation method because the cause of failure was not random between them, but exposed possible vulnerabilities of each. It is in this very set where this is likely to be true.

I went back and watched the CES presentation again to refresh my memory. I think there has been some confusion and twisting of Shashua's words.

Here are my notes:

1) The MTBF specifically refers to "sensing failures that lead to a RSS violation". So we are talking about a specific type of failure: a perception failure that causes the car to violate ME's driving policy.

2) ME's aim is to achieve vision-only "end to end" FSD with a "target goal" failure probability of 10^-4 per hour of driving (an MTBF of 10^4 hours). This goal would require "very powerful surround vision." So the vision-only figure of 10^-4 is a target goal. It has not been achieved yet AFAIK.

3) The reason ME is picking that goal for their vision-only FSD is that the probability of human injury per hour of driving is also 10^-4. So they want their vision-only FSD to have approximately the same safety as the average human in terms of injury.

4) The probability of a fatality per hour of human driving is 10^-6.

5) Adding in some safety margins, ME's target goal for their L4/L5 FSD is to be 10x safer than the average human driver in terms of fatalities. So their target goal is a failure probability of 10^-7 per hour (an MTBF of 10^7 hours).

6) ME believes that there is no single FSD system today that can achieve this safety goal. Traditional sensor fusion cannot achieve this goal either. ME believes that separate redundancy can achieve that goal. Hence they have "two separate streams", one vision and one lidar/radar.

7) The two systems (vision and lidar/radar) are "approximately independent" but not "statistically independent".

8) If each system can achieve a goal of 10^-4, then their product would be 10^-8. But the two systems are not "statistically independent", so the combined system should still achieve the lesser goal of 10^-7. The combined system (vision + radar/lidar) should approximately achieve 10x the human safety in terms of fatalities.

So this is where you are wrong, @powertoold. Shashua says that the two systems are not statistically independent and that the combined MTBF would NOT be the product of the two individual MTBFs. What he is suggesting is that if they can achieve a goal of 10^-4 for each system, the combined MTBF should be approximately 10x less than the product (10^7 hours rather than 10^8), and that would be "good enough" to achieve their goal of 10x safer than the average human in terms of fatalities.
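One way to see how much dependence that 10x allowance actually tolerates is a minimal sketch like the one below. It treats each system's failure in a given hour as a yes/no event and uses the standard correlation formula for two such events; the 10^-4 and 10^-7 figures are the targets from my notes above, and the function names are made up for illustration.

```python
import math


def implied_correlation(p_a, p_b, p_joint):
    """Correlation between two yes/no failure events, given each event's
    probability and the probability that both happen together."""
    covariance = p_joint - p_a * p_b
    return covariance / math.sqrt(p_a * (1 - p_a) * p_b * (1 - p_b))


def joint_from_correlation(p_a, p_b, rho):
    """Inverse of the above: joint failure probability for a given correlation."""
    return p_a * p_b + rho * math.sqrt(p_a * (1 - p_a) * p_b * (1 - p_b))


p_each = 1e-4        # per-hour target for each subsystem (from the notes above)
target_joint = 1e-7  # per-hour target for the combined system

rho_max = implied_correlation(p_each, p_each, target_joint)
print(f"largest correlation that still meets the target: {rho_max:.2e}")

for rho in (0.0, 1e-3, 1e-2, 1e-1):
    joint = joint_from_correlation(p_each, p_each, rho)
    print(f"rho = {rho:<6} joint failure probability = {joint:.2e}")
```

Under these assumptions the correlation between the two failure events has to stay below roughly one in a thousand for the combined system to hit 10^-7, which is why so much rides on how close to independent the two streams really are.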

powertoold

Active Member

> Shashua says that the two systems are not statistically independent and that the combined MTBF would NOT be the product of the two individual systems. What he is suggesting is that if they can achieve a goal of 10^-4 for each system, that the combined MTBF would be approximately 10x less than the product and that would be "good enough" to achieve their goal of 10x

Sounds great and all, but:

diplomat33

Average guy who loves autonomous vehicles

> Sounds great and all, but:

Not sure what your point is.

powertoold

Active Member

> Not sure what your point is.

You're saying I twisted his words, but I didn't. It's what he says about the product of the two sensor subsystems in that video.

diplomat33

Average guy who loves autonomous vehicles

> You're saying I twisted his words, but I didn't. It's what he says about the product of the two sensor subsystems in that video.

Yes, for two sensor subsystems that are statistically independent, the total MTBF will be the product of the two MTBFs. That is a true statement. And with ME's "parallel approach" to sensor fusion, ME argues that the two subsystems are approximately independent. And I showed you what Shashua said at CES 2020 that explains things in more detail. The two systems are not completely independent, so the product is an approximation, not an exact number. Do some research. Don't just grab one quote from an interview.

powertoold

Active Member

> Yes, two sensor subsystems that are statistically independent, the total MTBF will be the product of the two MTBF. That is a true statement. And with ME's "parallel approach" to sensor fusion, ME argues that the two subsystems are approximately independent. And I showed you what Shashua said at CES 2020 that explains things in more detail. The two systems are not completely independent so the product is an approximation, not an exact number. Do some research. Don't just grab one quote from an interview.

he’s talking about the failure of the perception system, not the sensor itself. what he’s saying makes no sense in the context of perceptual predictions by self driving systems.

he says so many illogical things in that video. Even the analogy with the iOS and Android phone is a joke. it’s scary because he says all this stuff in a very believable and genuine way.

diplomat33

Average guy who loves autonomous vehicles

> he’s talking about the failure of the perception system, not the sensor itself. what he’s saying makes no sense in the context of perceptual predictions by self driving systems.

It makes sense if the two systems are independent or close to it. Like his analogy in the interview of an Apple and an Android phone, they have a vision system (Apple) and a radar/lidar system (Android), each making independent decisions. The probability of both failing at the same time is the product of each individual failure rate. Now, since the two systems in the car are not completely independent, the product will give an approximation, not an exact number.

I think your problem is that you might be thinking of traditional sensor fusion where the sensors are not independent. But ME's "true redundancy" is not traditional sensor fusion. ME's perception subsystems are arranged in such a way that they are independent or close to it. At least, that is my understanding of how they have described it.

diplomat33

Average guy who loves autonomous vehicles

> he says so many illogical things in that video. Even the analogy with the iOS and Android phone is a joke. it’s scary because he says all this stuff in a very believable and genuine way.

No, I think it makes logical sense to most people who understand AVs. You are just a Tesla fanboy so you only see things the "Elon way". So, anything that contradicts Elon does not make sense to you.
