powertoold

Active Member

No, I think it makes logical sense to most people who understand AVs. You are just a Tesla fanboy so you only see things the "Elon way". So, anything that contradicts Elon does not make sense to you.

Ok wow lol



The bright side is that once Tesla widely releases fsd beta to the US fleet, our questions will be answered.

What questions will be answered?

I'm a little suspicious that if Tesla released V9.1 as is you would say it proves that Tesla's approach is correct. Or is there some quantifiable performance level that you'll be looking for in the wide release?

Who to believe and what approach is correct

I think it's important to set expectations now so we can avoid confirmation bias.


This is an interesting issue. The two systems are independently analyzing the situation and therefore probabilities of failure are assumed to be random in each and therefore statistically uncorrelated. If that were clearly true the combined probability calculation would be defensible.

However, this kind of statistical predictive calculation is fraught with major possibilities for error. It would be correct if the possibility of failure in each had nothing to do with the external input, but only with some kind of random noise-generated failure within the systems themselves. IMO, however, it is quite likely that each has a chance of perceptual error that is highly influenced by challenging external scenarios.

Each (Vision and fused Radar/Lidar) may actually have a much better than 10^-4 (1 in 10,000) failure rate when presented with everyday scenarios that were trained for, but a significantly worse one when presented with unusual, unknown and/or untrained scenarios. Thus, in the set of unusual events (edge cases, as we like to call them), the probability of failure may be far higher than 10^-4 for each. Since the major causative factor is the occurrence of an edge-case scenario that is challenging for both systems, we can reasonably conclude that edge-case failures will not be statistically independent. This has two very important implications:

- The 10^-4 baseline assumption is invalidated for this set of edge cases (maybe it's now 10^-3 (1 in 1000) or 10^-2 (1 in 100) for this troublesome set).

- Further, and very importantly, we can no longer take comfort in the multiply-them-together estimation method, because the cause of failure was not random between them but exposed possible vulnerabilities of each. It is in this very set where this is likely to be true.

This is a fairly well-known concept in system reliability engineering. Non-random (here edge-case scenario) events may be Special Cause failures that do not obey the statistical distribution assumptions. In a supposedly redundant system, Special Cause failures that can affect both modules of the x2 redundant architecture are then known as Common Cause failures. This is highly likely in simple redundancy where the two modules are identical. In this ME case, the two modules are very different ("not that kind of redundancy" to quote an earlier exchange about ME), and so admittedly it's not clear that Special Cause failures will become Common Cause failures. However, it's also not at all clear that they won't, because both sides are encountering unusual input and can make errors for related or independent reasons.
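To put rough numbers on the independence point, here is a minimal sketch. The function name `joint_failure` and all parameter values are illustrative assumptions, not Mobileye's figures: it supposes 1% of scenarios are edge cases, that each system fails in 1 of 100 edge cases and never otherwise, and that the two systems fail independently only once the scenario type is given.

```python
def joint_failure(edge_fraction, p_edge, p_normal):
    """Two perception systems that share the same scenario.

    edge_fraction: assumed share of scenarios that are edge cases
    p_edge:        assumed per-system failure probability in an edge case
    p_normal:      assumed per-system failure probability otherwise
    Returns (marginal, naive_product, joint).
    """
    # Failure rate of one system averaged over all scenarios.
    marginal = edge_fraction * p_edge + (1.0 - edge_fraction) * p_normal
    # The "multiply them together" estimate, which assumes full independence.
    naive_product = marginal ** 2
    # Probability both fail, assuming independence only within a scenario type.
    joint = edge_fraction * p_edge ** 2 + (1.0 - edge_fraction) * p_normal ** 2
    return marginal, naive_product, joint


marginal, naive_product, joint = joint_failure(
    edge_fraction=0.01, p_edge=1e-2, p_normal=0.0
)
print(f"per-system failure rate: {marginal:.1e}")
print(f"naive product estimate:  {naive_product:.1e}")
print(f"joint with shared cause: {joint:.1e}")
```

With these assumed inputs each system still shows a 10^-4 failure rate on its own, yet the chance that both fail together is about 10^-6 rather than the 10^-8 that multiplying the two rates would suggest. The gap grows as failures concentrate in a smaller set of shared hard scenarios.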

Post-event analysis of accidents and failures often reveals the unexpected ways that engineering assumptions crumbled when failures that were assumed to be independent turned out not to be. Events that were assumed to be statistically independent became related by a chain-of-unfortunate-events scenario that defied the comforting statistical predictions.

I'm not going all the way to say that Amnon is pushing a clear fallacy, but I'm strongly cautioning that this use of statistical prediction contains important assumptions that deserve to be challenged and re-examined. It sounds technically comforting but I'm not ready to accept the randomness premise behind it.

And in this whole discussion, we're glossing over the issue of how the outputs of these independent perception modules are eventually fused into a driving decision. That is a further non-statistical process. Voting on which side to believe is difficult when there can be serious errors in the confidence level of each side's perception.

The only things that ultimately matter, in my view, are injuries and deaths per million miles while using any technology, from humans driving to driver assist to lame-o L4. (Property loss $/MM arguably could be another valuable metric.)

The issue is that only the manufacturer has the simulation tools to figure out if a disengagement was necessary to avoid a collision. Tesla is going to have the same issue once FSD gets a few orders of magnitude better. How can an FSD beta user determine whether or not a collision would have occurred had they not disengaged?

Once systems are actually deployed and driving billions of miles I agree that the DMV can just track accident data and miles driven and determine safety. We won’t need to rely on the analysis done by the manufacturer.

That's not true from my memory. I remember responding to one of the threads where you were talking about the Audi system and you pretty much did the same thing (counting eggs before they hatched). Plenty were saying the timeline for release was significantly farther back than you suggested.

I was one of the first ones who called out Audi and BMW years ago when it was clear they were just in it for the PR. I even made a thread about it.

Usually people that are unbiased don't have to call themselves unbiased.

Audi Pilot Driving actually had a good system but management gobbled it up like they did the 4 other development projects that came after Piloted Drive was shut down.

Most people think I'm biased, but I'm not. I have ripped into just about every traditional automaker out there.

Update on BMW's INEXT Level 3 Highway System in 2021 (not happening)

UPDATE: A few updates are in order. Looks like Mobileye is no longer joint with BMW on driving policy although they have given BMW the blueprints to move forward. This change most likely came from the new Mercedes/BMW autonomous deal in FEB 2019. Not only that, as a result of this Amnon no... teslamotorsclub.com

That's not an accurate take on Huawei's move at all. In 2018 there was already a government ban on Huawei (along with ZTE), signaling Huawei needed to find a replacement. In March 2019, they announced they were working on an in-house replacement of Android (called Hongmeng or Harmony) and that they had been developing it since 2012.

About the Huawei phone OS, they were forced to make a phone in a couple of months without Google Android, so they forked the Android Open Source Project (which a lot of companies do) and rebranded it, with plans to improve and differentiate as time goes on. This is actually what makes them a tech company. An automaker wouldn't do that. They would take 5 years and then come out with absolutely nothing. Tesla did the same thing by utilizing GoogleNet. Anyway, this is different and meaningless to the discussion. There is no good open source SDC software to fork.

The quoted statement from your own thread suggests L4. "Complete autonomous driving for all driving situation on the highway." L3 is not complete autonomous driving.

I'm talking about Elon claiming level 5 in two years for the past 6 years and others either claiming Level 5 is impossible or that it will take a long long time, some mentioning 2030+.

But Elon kept claiming he will do it in 2018.

Neither Nissan nor I ever promoted ProPilot 2.0 as L4; it was always L3 in every related piece of marketing. Even then, the system as it was shown was never released. I'm not even talking about whether it's L2 or L3. I mean the original system had 4 lidars and 12 surround cameras. The release hardware had 0 lidars and 3 forward cameras.

So now your egg counting is based on startups? For myself, my criteria remain the same as they were in 2017: it's not here until it is actually released and consumers are using the software at the actual level claimed (whether we are talking about end-to-end L2, L3, L4, or L5).

It definitely was disappointing. But it's par for the course for traditional automakers. I made this post over 2 years ago and it's still accurate. There's a reason there's still no automaker with a reliable OTA update system after years and years. Yet startups perfect it from day one.

Note that Mercedes actually ended up giving up and going completely Nvidia for hardware, software, ADAS and AV.

VW is still stuck creating teams, fumbling the development, nuking everything and creating another team. Rinse and repeat.


It's not even just because of safety risk. They have absolutely no ambition. You can hand them an L5 self-driving system today for free and they will find a way to fumble the rollout and spend 3 years just deciding what to do with it. Then they will spend another three years rolling out 100 cars in a city. Safe to say they are hopeless. It's not the engineers, it's the 80-year-old business suits calling the shots. The only hope is start-ups. These two articles are good reads...

The long deployment time is due to the traditional automakers. Mobileye already came out and said it takes traditional automakers and tier 1s 3-4 years to integrate. EyeQ4 went to production in Q4 2017 and it took NIO until the first half of 2018 to integrate and deploy it. So less than a year. Yet it takes the likes of Ford and GM 4 and 3 years respectively to deploy EyeQ4, and Ford with a crap driving policy. For EyeQ5, Geely's new Zeekr brand is integrating and deploying less than 1 year after its production date.

So blame the traditional automakers. However, the ProPilot 2.0 that was eventually released in Japan is coming to the US this year.

Anyway, if you want the latest and greatest Mobileye system, you will only find it on EV startup cars, not traditional automakers.

I mean you will literally see the latest and greatest supervision on the Zeekr this year.

Just my opinion of course but I think we are probably 3-5 years away from large scale deployment of L4 (geofenced) robotaxis in the US.

I wasn't wrong. I quoted a direct statement from Audi after their announcement in 2017.

That's not true from my memory. I remember responding to one of the threads where you were talking about the Audi system and you pretty much did the same thing (counting eggs before they hatched). Plenty were saying the timeline for release was significantly farther back than you suggested.

First L3 Self Driving Car - Audi A8 world premieres in Barcelona

I have absolutely no favorites. I have reported and done research on almost every single SDC company. You, on the other hand, have one and only one favorite: Tesla.

Usually people that are unbiased don't have to call themselves unbiased. Other people are the proper judge of that, not oneself. From that thread alone, I think very few people will agree that you are unbiased. And ripping into other automakers does not make one unbiased; unbiased would be to show no preference at all for any company, but there are obviously companies you favor (there is nothing wrong with that BTW).

Elon/Tesla lies literally every day and you never once, at least from what I recall, called any of his lies "lies", let alone a "bald faced lie".

That's not an accurate take on Huawei's move at all. In 2018 there was already a government ban on Huawei (along with ZTE), signaling Huawei needed to find a replacement. In March 2019, they announced they were working on an in-house replacement of Android (called Hongmeng or Harmony) and that they had been developing it since 2012.

Criticism of Huawei - Wikipedia

That turned out to be a bald faced lie, according to the article linked. All they apparently did was take AOSP and do a find and replace of any references to Android. So not only is it not their in-house effort as they claim, in the two years they had since announcing development of a new in-house OS, they did almost nothing significant. If instead they had straight up said they were going to fork it (like Amazon's Fire OS) and their efforts showed in the fork, that would be a whole different story. Instead they tried to hide their tracks in the most low-brow way possible (anyone who has worked in software development can see what I mean).

If that doesn't ring any alarm bells on claims by Huawei on software prowess, and also any forward looking claims they make going forward, I don't know what does.

The word "Complete" doesn't have any meaning here. We know that an L3 car is a self-driving car and L3 is autonomous driving.

The quoted statement from your own thread suggests L4. "Complete autonomous driving for all driving situation on the highway." L3 is not complete autonomous driving.

No, your criteria have always been to be pro-Tesla and to disparage any other development that others are working on.

So now your egg counting is based on startups? For myself, my criteria remain the same as they were in 2017: it's not here until it is actually released and consumers are using the software at the actual level claimed (whether we are talking about end-to-end L2, L3, L4, or L5).

BTW, I looked up NIO last time when you discussed it. They are far less impressive than you put it. Although they launched the vehicle in June 2018, they didn't even have ACC in the vehicle until April 2019.

MASTER THREAD: FSD Subscription Available 16 Jul 2021

I would also be careful on any PR announcements from them. They may announce they "released" a feature, like for example NOP (their version of Tesla's NOA) below in April 2020, when they haven't yet actually.

NIO Introduces Navigation On Pilot And Revamped Parking Assist

NOP wasn't actually released until October 2020 (and judging from articles discussing previous releases, the initial release is not necessarily equivalent to industry leaders).

NIO OS 2.7.0 released, brings NOP driver assistance feature - CnTechPost

This is an interesting issue. The two systems are independently analyzing the situation and therefore probabilities of failure are assumed to be random in each and therefore statistically uncorrelated. If that were clearly true the combined probability calculation would be defensible.

However, this kind of statistical predictive calculation is fraught with major possibilities for error. It would be correct if the possibility of failure in each had nothing to do with the external input, but only with some kind of random noise-generated failure within the systems themselves. IMO, however, it is quite likely that each has a chance of perceptual error that is highly influenced by challenging external scenarios.

Infact we can even move forward until that is clearly understood. For example Lidar sees in total pitch darkness

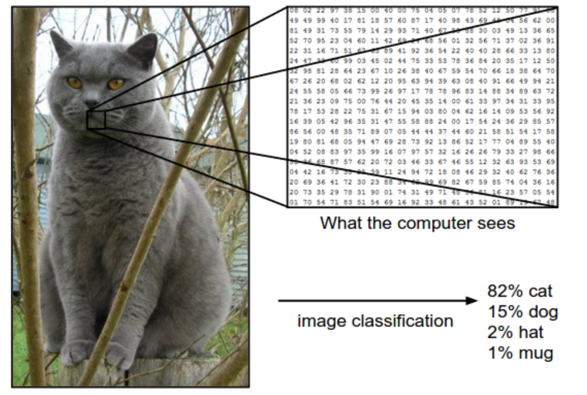

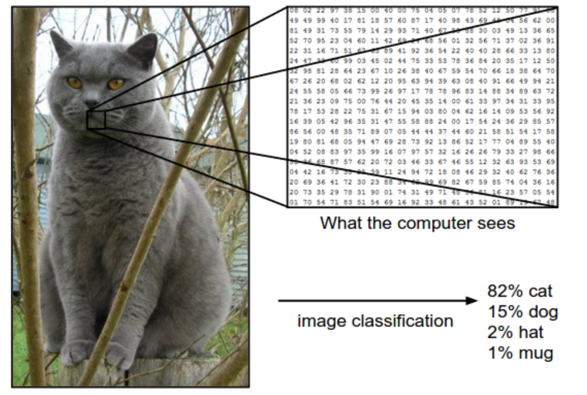

. Secondly as you can see in the video, lidar shoots out lasers, its an active sensor so it actually sees the accurate dimension and shape and distance of an object without the need of ML. While for example a camera is completely blind without ML.

If your headlights are not functional you probably shouldn't be driving at night.

Headlights don't let you see everything.

This is not correct. A camera is, obviously, not "blind" without ML. Otherwise pictures would always be blank.

It is. Camera (vision) output is just a bunch of numbers from 0-255, and without ML you can't make sense of it. Your vision system is completely blind.

ML is what lets the computer understand what the camera sees. Which is the same thing as with LIDAR.

No it isn't. You don't need ML to understand lidar. Again, lidar gives you precise measurement of the objects in your path and their distance.

In both cases it's simple for the computer to know there's "something" seen by LIDAR or camera.

This is simply false, as I have proven.

In both cases it requires ML to make an estimation of what the something is, and then further decision making regarding what, if anything, the vehicle needs to do about it.

Again false. Most SDC companies have a system that uses the raw/processed lidar input to determine if there's an obstacle in the way that they need to stop for or drive around, in case their ML models on lidar inputs fail. If you did robotics as a kid in school, you would know this.

The primary thing lidar provides, that previously was not being done with vision, is providing accurate distance to the objects.

This is blatantly false. A computer can't tell there's an object in a picture without ML. It's literally called object detection. Lidar provides accurate detection, shape and distance.

Tesla is now doing that with vision. If they can obtain accuracy needed for safe driving then LIDAR no longer adds any value at all.

Find me any ML system with 99.999999% accuracy. Newsflash: It doesn't exist!

The point is that with LIDAR you don't need to use ML to detect objects. You can write regular old procedural code to prevent you from running into another car. Name a car-sized object that you might want to run into. You don't always need to know what you're looking at.

In both cases you get a bunch of data from a sensor, but need ML to know what you're "looking" at.

The primary thing lidar provides, that previously was not being done with vision, is providing accurate distance to the objects.

Tesla is now doing that with vision.

If they can obtain accuracy needed for safe driving then LIDAR no longer adds any value at all.

FirstLight Lidar

To travel fast, you need to see far. Our FirstLight Lidar is engineered to see more than twice the distance of even the most advanced conventional lidar systems, making it the only lidar that allows trucks to travel safely at high speeds. FirstLight eliminates virtually all interference from sunlight and other sensors, and using the Doppler effect, it can track the velocity of moving objects and people at distances and with levels of accuracy unmatched in the industry.

The Aurora Driver | Self-Driving Technology

The point is that with LIDAR you don't need to use ML to detect objects. You can write regular old procedural code to prevent you from running into another car. Name a car sized object that you might want to run into.
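As a rough illustration of what "regular old procedural code" on lidar returns could look like, here is a minimal sketch. Everything in it is an assumption made for the example: the function names, the vehicle-frame convention (x forward, y left, z up from the road surface, in metres), the corridor width, the height band and the braking numbers. Real systems also need ground removal, noise filtering and ego-motion handling, none of which is shown.

```python
import math


def nearest_obstacle_ahead(points, half_width=1.2, min_height=0.3, max_height=2.5):
    """Distance (m) to the closest lidar return inside the lane corridor ahead.

    points: iterable of (x, y, z) in an assumed vehicle frame.
    Returns math.inf when nothing is in the corridor.
    """
    nearest = math.inf
    for x, y, z in points:
        in_corridor = x > 0.0 and abs(y) <= half_width
        # Ignore returns from the road surface and from overhead structures.
        above_road = min_height <= z <= max_height
        if in_corridor and above_road:
            nearest = min(nearest, x)
    return nearest


def must_brake(points, speed_mps, reaction_time_s=0.5, max_decel_mps2=6.0):
    """True if the nearest return is inside the assumed stopping distance."""
    stopping_distance = (
        speed_mps * reaction_time_s + speed_mps ** 2 / (2.0 * max_decel_mps2)
    )
    return nearest_obstacle_ahead(points) <= stopping_distance


# Hypothetical scan: one return in the lane, one off to the side, one road-level.
scan = [(40.0, 0.2, 0.8), (15.0, 5.0, 1.0), (60.0, -0.5, 0.05)]
print(nearest_obstacle_ahead(scan))
print(must_brake(scan, speed_mps=20.0), must_brake(scan, speed_mps=10.0))
```

No classifier is involved: the check only asks whether something solid occupies the space the car is about to drive through. It treats every return as stationary, which is a simplification.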

You are forgetting velocity. It is also important to accurately calculate the velocity of objects.
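A minimal sketch of the velocity point, assuming the simplest possible method: differencing two successive range measurements to the same object. The function names and numbers are hypothetical, and a Doppler lidar like the one described above would measure radial velocity directly instead of estimating it this way.

```python
def closing_speed(range_prev_m, range_now_m, dt_s):
    """Closing speed (m/s) from two ranges to the same object, dt_s apart.

    Positive means the gap is shrinking.
    """
    if dt_s <= 0.0:
        raise ValueError("dt_s must be positive")
    return (range_prev_m - range_now_m) / dt_s


def time_to_collision(range_now_m, closing_mps):
    """Seconds until the gap closes at constant closing speed."""
    if closing_mps <= 0.0:
        return float("inf")  # gap is steady or growing
    return range_now_m / closing_mps


# Hypothetical readings: 50.0 m, then 49.0 m one 0.1 s frame later.
speed = closing_speed(50.0, 49.0, 0.1)
ttc = time_to_collision(49.0, speed)
print(speed, ttc)
```

Small range errors are amplified when divided by a short frame interval, which is one reason accurate per-point distance (or direct Doppler measurement) matters for velocity estimates.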

Camera vision can do this too, of course, but it requires complex ML.

Lidar can provide other advantages too, like detecting drivable space and localizing precisely on a map.
