Daniel in SD

(supervised)

Waymo vehicles also crash and are incapable of repairing themselves. Not autonomous.

Read the 100 pages of posts. And so when it fails, it's no longer autonomous. Like L2, but with fewer interventions. And should there even be "severe" cases in a carefully circumscribed L4 geofence? Shouldn't it be able to handle construction zones flawlessly?

Again still not answering the question. What does "not autonomous" mean? Is anyone physically, remotely or otherwise controlling the car when it is in autonomous mode?

The traffic cone incident illustrates that autonomous vehicles in their current state can still fail, and when they do, a robotaxi company needs remote assistance systems, plus physical assistance in severe cases.

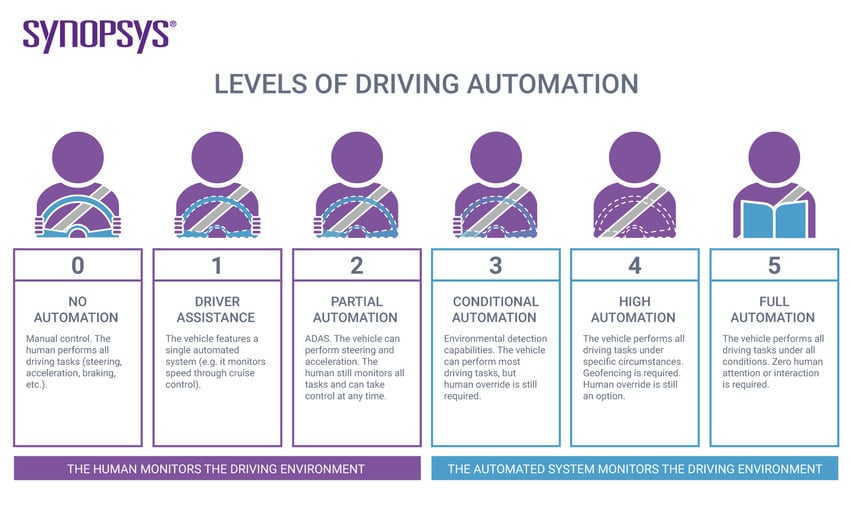

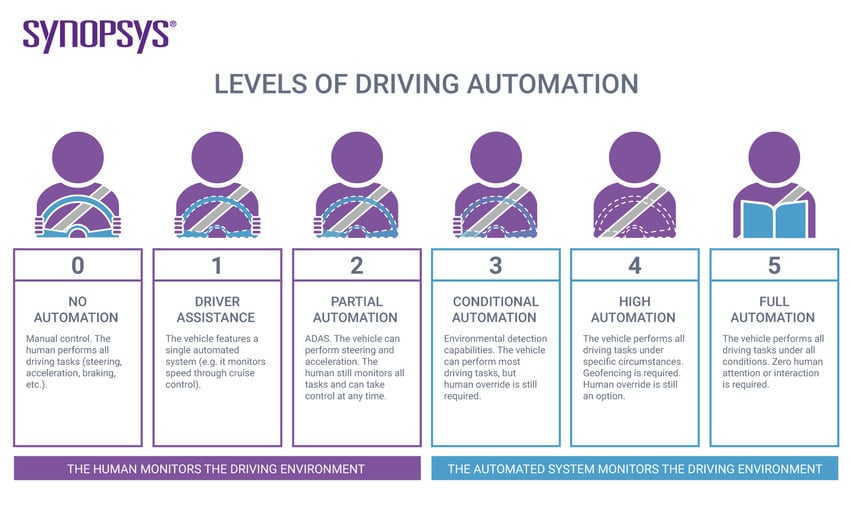

I have been reading these forums for a long time. No post in 100 pages defines what constitutes an ADS. SAE J3016 defines that. When an autonomous system fails, it just means an autonomous system has failed. It does not define its level.

Paying passengers will not be amused to be late to their destination because the Waymo refuses to proceed when it gets confused.

SAE J3016 is one definition, but many on the internet (including many journalists) completely ignore it and use their own definitions. For some people, any sort of remote assistance disqualifies it from being autonomous (even though it doesn't for SAE's purposes). Roadside assistance for mechanical breakdowns is a different case, as humans call for that too.

Unfortunately, we don't live in a fantasy world. In the real world, autonomous driving is a difficult technology to design and solve. That is why most have decided to use multimodal sensor fusion, a lot of data, and real-world driving to help solve the problem, but we are still far away from solving it completely.

Ok? That is what remote assist/customer support and roadside assist are for. Logistically speaking, robotaxi companies will need that because it just makes sense to have a way to remotely diagnose a problem and get the car moving. If the car gets a flat tire, you need roadside assist to change the tire; in a case where there is total failure of the system, you dispatch a backup driver to complete the trip. Companies spend billions on having proper customer support for situations where customers need assistance.

Again how does any of those make the car not autonomous?

SAE J3016 is the only internationally recognized definition for what constitutes an ADS. That document is what laws are currently being crafted around and is what most on the internet and journalists cite when they talk about autonomous driving levels. I'm aware some people bemoan SAE levels, but I don't really care much for what "many" on the internet and journalists come up with for arbitrary reasons; for "many" people an L2 ADAS is an ADS. I don't listen to what those people on the internet have to say. As for alternate definitions of ADS, I have read some academic alternate definitions, and they are not sufficiently different from J3016; they are just rephrasings of the same concepts covered by J3016.

Basically a human does not call for remote assistance or roadside assistance to navigate around a traffic cone, so it makes sense some people feel it disqualifies a vehicle from being considered autonomous (even if it's not directly remote control of the steering wheel or accelerator/brake).

It would be easier if people, specifically enthusiasts interested in discussing this topic, read the document so we can all have a common base to discuss from, as a lot of these technicalities are covered in it. I do agree that a human giving high-level input, like instructing the robotaxi to reroute, means it is not "fully autonomous", but it is still highly automated as long as the car extricates itself from that situation without any lateral and longitudinal control from a person. It is a far cry from an L2 partially automated system that requires a human fallback for control.

I think it's easier to say what autonomous is, rather than what not autonomous means. Autonomous (to my mind) means that the car drives itself, start to finish, with no input whatsoever from a human other than to give it a destination. If the car needs intervention at any time, including requesting routing help, or help extricating itself from a situation that a normal human driver would be expected to be able to deal with, then it's not fully autonomous. I would make an exception for charging.

This is a good starting point to show why J3016 does not use "autonomous" to describe the levels, but "automated" with a modifier for each level, ranging from no automation to full automation. It also specifically describes how even an L5 ADS failing in a given scenario does not make it not fully automated. What you are describing is a binary system where it is either perfect or it is not "fully automated". The people creating these systems and setting these standards are under no illusions that we will get there in the next decade or two, which is specifically why, for the purposes of development and testing, safety personnel can be required in the driver's seat. Furthermore, an L2 partially automated system needing supervision is not the same as an L4 highly automated system needing monitoring, as J3016 describes: "The term monitor driving automation system performance should not be used in lieu of supervise which includes both monitoring and responding as needed to perform the DDT and is therefore more comprehensive". It is much more technically nuanced than the binary terms we use to discuss it in these forums.

Perhaps one problem is that the word "autonomous" needs a modifier: "partially" or "fully" for example. My L2 car with EAP is partially autonomous, as it drives itself while I supervise, under suitable conditions.

I think that you are squeezing the stick too tight.

You're both trying waaaaayyyy too hard here on TESLAMotorsClub.com. All of your FUD and whining hasn't and won't stop Tesla for a second.

This isn't anywhere near the end of Tesla's FSD development roadmap. FSD being officially deployed on Tesla's fleet is the beginning so Tesla+FSD owners are near the start of the FSD era.

Due to a shortage of semiconductor chips, not an abandonment of the feature. Once this temporary shortage is resolved, I expect GM will once again offer SuperCruise as an option.

We've all been hearing the "competition is coming" for Tesla for a decade. That implied both EVs and also ADAS. Well, it's late 2021 and one of the big OEMs that has been struggling with recalling ~every EV they've produced is going to be late with their ADAS.

Tesla Autopilot loses its strongest rival as GM halts Super Cruise rollout

Tesla Autopilot’s most formidable rival from legacy auto is on a break, with General Motors confirming that its flagship driver-assist system, Super Cruise, is unavailable for now. The culprit behind this is, quite unsurprisingly, the ongoing semiconductor shortage. The halt of Super Cruise’s...

www.teslarati.com

"Yet in a statement on Wednesday to CNET, a Cadillac spokesperson confirmed that Super Cruise is not being released to any of the company’s vehicles for now. It’s not just the Escalade, either, as the Cadillac spokesperson also confirmed that the CT4 and CT5 — the next two cars expected to receive the advanced driver-assist system — would also not be released with Super Cruise for the current model year. "

@daniel put it better than me on the same points, and you seem to agree with the response post to him. Again, SAE J3016 is all well and good, but it's not necessarily accepted in colloquial use (I doubt many people know about it), and it's reasonable to see why people may have a different take on what they qualify as "autonomous". Even when not talking about "autonomous" but rather the different levels, I see plenty of journalists get it wrong (and I try to push for corrections when I see that). For example, Jason Torchinsky in Jalopnik (who even wrote a whole book on the subject) gets it badly wrong lots of times.

Why is roadside assist for a mechanical breakdown different because humans call for that too? Why can't software call for roadside assist, when said software is supposed to not only recognize when it is about to fail and fail safely, but also know when it needs assistance in a given situation? Shouldn't a robotaxi be able to call for emergency services if it gets into a crash?

A computer is not a human. Computers do not possess the reasoning capability that we do. That is what is fascinating about neural networks and mimicking human "cognitive capability". And it makes sense that software in its nascent stage can fail in stupid and spectacular ways, and that does not make it not autonomous. Is an autonomous vehicle that gets into an accident not an autonomous vehicle because its perception system or planning system failed in a particular instance? Can anyone guarantee that their ADS will never get confused or fail? Will that make it not autonomous? Basically, a robotaxi or autonomous vehicle should be able to call for assistance when it gets stuck, so it makes sense to have remote assistance in those situations.

Here is another example using Waymo: it gets to a road on its planned route, discovers it is actually blocked, and doesn't know what to do, so it calls remote assist, which then draws a line across the road, and the car backs up and reroutes. To me that is pretty impressive: an autonomous car knew it was stuck, called remote assist, which instructed it to find another route, and it did that by itself. There was a safety driver there who could have taken over, but they didn't. To me it just makes sense to be able to remotely diagnose an issue and assist. Compare that to an L2 ADAS, which gets to a road closed sign and tries to plow through it. That is the difference between an L2 ADAS and an ADS.

I think they still need CPUC approval.

Big news! CA DMV authorizes both Cruise and Waymo for commercial autonomous ride-hailing in SF. Both companies can now charge a fee for their autonomous ride-hailing.

Autonomous means that it is capable of driving within a circumscribed area (or in the case of L5, anywhere).

Why should anyone care if a vehicle is truly "autonomous" or not if they can legally sleep in the backseat or watch a movie?

So, let's do a thought experiment: at what point would a "L4" autonomous vehicle no longer be L4 (i.e., "autonomous") if it goes into safety default, oh, say once every day on its robotaxi route and requires remote or in-person correction by the robotaxi provider?

All this BS about true autonomy is great, but until autonomous vehicles can predict what inherently unpredictable biological drivers will do, they all require intervention by humans; just how often is the differentiator. This is why the incremental approach by Tesla will, IMO, surpass the current "autonomous" technology. Maybe more hardware, maybe more CPU power is needed. But they are on the right track, bitching and moaning notwithstanding.

7.1.1 Autonomous

This term has been used for a long time in the robotics and artificial intelligence research communities to signify systems that have the ability and authority to make decisions independently and self-sufficiently. Over time, this usage was casually broadened to not only encompass decision making, but to represent the entire system functionality, thereby becoming synonymous with automated. This usage obscures the question of whether a so-called “autonomous vehicle” depends on communication and/or cooperation with outside entities for important functionality (such as data acquisition and collection). Some driving automation systems may indeed be autonomous if they perform all of their functions independently and self-sufficiently, but if they depend on communication and/or cooperation with outside entities, they should be considered cooperative rather than autonomous. Some vernacular usages associate autonomous specifically with full driving automation (level 5), while other usages apply it to all levels of driving automation, and some state legislation has defined it to correspond approximately to any ADS at or above level 3 (or to any vehicle equipped with such an ADS).

Additionally, in jurisprudence, autonomy refers to the capacity for self-governance. In this sense, also, “autonomous” is a misnomer as applied to automated driving technology, because even the most advanced ADSs are not “self-governing.” Rather, ADSs operate based on algorithms and otherwise obey the commands of users.

For these reasons, this document does not use the popular term “autonomous” to describe driving automation.
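What J3016 uses instead is a set of levels based on what a feature is designed to do, not on how often it fails. A minimal Python sketch of that idea, assuming a simplified reading of the level definitions: who performs sustained steering and speed control, who performs object and event detection and response (OEDR), who performs the fallback, and whether the operational design domain (ODD) is limited. The `FeatureDesign` fields and the `classify` function are hypothetical illustrations, not anything defined in J3016, and the real document has far more nuance.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Simplified SAE J3016 level labels (0-5)."""
    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_DRIVING_AUTOMATION = 2
    CONDITIONAL_DRIVING_AUTOMATION = 3
    HIGH_DRIVING_AUTOMATION = 4
    FULL_DRIVING_AUTOMATION = 5


@dataclass(frozen=True)
class FeatureDesign:
    """Hypothetical description of a feature's design intent (not a J3016 structure)."""
    lateral: bool            # system performs sustained steering
    longitudinal: bool       # system performs sustained acceleration/braking
    performs_oedr: bool      # system, not a human, detects and responds to objects/events
    performs_fallback: bool  # system, not a human, reaches a minimal risk condition
    unlimited_odd: bool      # no operational design domain limit


def classify(d: FeatureDesign) -> Level:
    """Map a design to a level. Failure rate and remote-assist calls are not inputs."""
    if d.lateral and d.longitudinal and d.performs_oedr:
        # The system performs the entire DDT, so it is an ADS (Level 3 and up).
        if not d.performs_fallback:
            return Level.CONDITIONAL_DRIVING_AUTOMATION  # fallback-ready user required
        if d.unlimited_odd:
            return Level.FULL_DRIVING_AUTOMATION
        return Level.HIGH_DRIVING_AUTOMATION  # e.g. a geofenced robotaxi
    if d.lateral and d.longitudinal:
        return Level.PARTIAL_DRIVING_AUTOMATION  # human supervises and performs OEDR
    if d.lateral or d.longitudinal:
        return Level.DRIVER_ASSISTANCE
    return Level.NO_DRIVING_AUTOMATION


if __name__ == "__main__":
    geofenced_robotaxi = FeatureDesign(True, True, True, True, False)
    l2_adas = FeatureDesign(True, True, False, False, False)
    print(classify(geofenced_robotaxi).name)  # HIGH_DRIVING_AUTOMATION
    print(classify(l2_adas).name)             # PARTIAL_DRIVING_AUTOMATION
```

Nothing in `FeatureDesign` records how often the car gets stuck or calls remote assistance, so in this simplified model a geofenced robotaxi that needs remote help once a day still comes out as Level 4. That mirrors the argument made earlier in the thread that a failure does not change the level.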

Small comment: Waymo applied for their permit to include the safety driver; it's not been forced on them.

It seems Waymo's approval still requires safety drivers. Cruise's does not, but their ODD is much more limited.

Well, in the given example, because people would rather not be stuck waiting to be rescued when the car encounters a traffic cone. All this discussion about terminology is all well and good, but in the end people only care about practicality. While things are still in the experimental stage, this is all swept under the rug for now, but when the services finally need to be profitable, the companies will have to consider how many remote assistants are sustainable, how many support vehicles they can keep around, etc.

Again, the SAE doesn't use the word "autonomous" anyway.

Of course the quality of service matters. None of us know how often Waymo rides fail to complete or how many vehicles can be serviced by a single remote assistant. Hopefully we'll get a better picture soon when these services deploy in San Francisco. I'm guessing there will be much more customer and media scrutiny there.

There are also other operating models (self-owned autonomous vehicles as opposed to fleets) where there would be zero dedicated remote assistance or support vehicles to handle things when the car can't figure things out. For those to be practical, these kinds of things would have to be ironed out first.