Daniel in SD

(supervised)

Aren’t they claiming this year now for public driverless ride service?

They are always two years away

I guess that’s only in Bolts; the purpose-built Origin robotaxi is coming in 2023.




What I want to figure out is how everyone else is doing the planning; they are not as transparent as Tesla.

Yeah, the Cruise Under the Hood is excellent too.


5) Level 5.0000 is unattainable in the next few decades and not even worth pursuing.

Autonomous progress is still quite a challenge, as shown by another report of an autonomous vehicle accident in Whitby, near Toronto, Canada, that landed the backup driver in the hospital in critical condition:

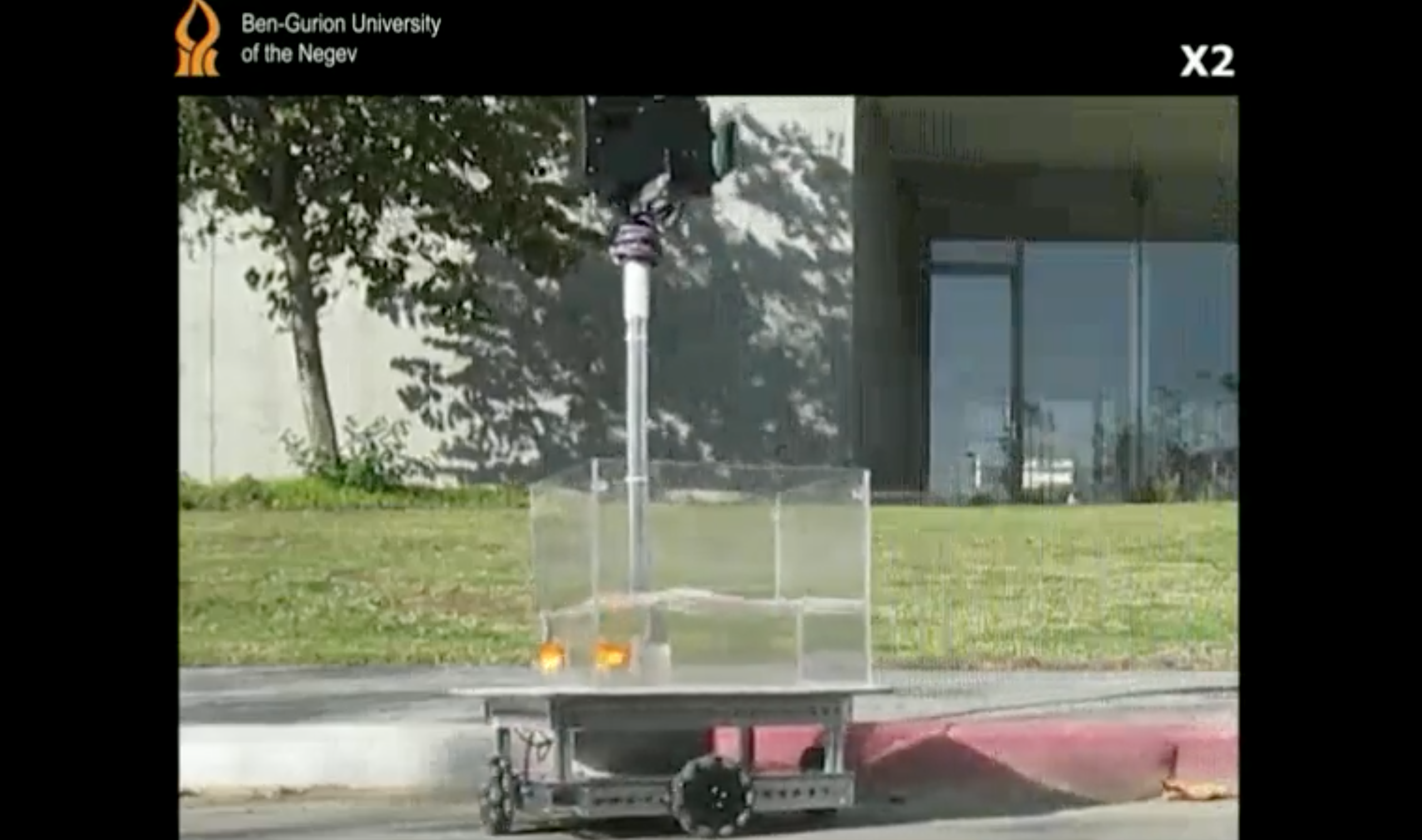

It's an L3+ Olli Autonomous Shuttle. The website says it relies on "Computer Vision and Analytics", but the picture seems to show an electronic (non-mechanically-rotating) LIDAR on the front top and another one above the front grille. It's operated by the Whitby Autonomous Vehicle Electric Shuttle Project.

"The resulting investigation has found that the vehicle was being operated in manual mode prior to, and at the time the vehicle left the roadway. Therefore, the hazard mitigation safety systems designed for the vehicle while in autonomous mode were disabled at the time of the collision."

...another case of either human error or poor design of the human controls...

sevehicle.medium.com


What if you decide to have only full autonomy outside the city, out on the open road?

And Level 2-3 or manual operation within the built environment?...

Can ‘outer markers’ make self-driving cars happen?

I am not sure what the author is trying to say.

L5 on freeways and L2-3/manual within the city built-environment?

The article compares the city's built environment to an airplane using "autoland". The problem is that autoland is very complex, and most pilots don't use the automatic landing procedure unless they have to, such as in poor visibility.

L5 on freeways is L4.

Nevertheless, using transmitters/sensors for intersections, traffic lights, road markers, obstacles, even pedestrians and other cars (Vehicle-to-Vehicle, V2V), i.e. Vehicle-to-Everything (V2X), is a great idea until the car's collision avoidance system is perfected. The problem is that it's an infrastructure project that costs money. And the current Build Back Better bill is still having a hard time passing, so who would have the courage to bring this issue to the 50 Republican senators plus 1 Democratic senator?

Cruise has a bunch of unedited videos. Obviously the proof will be when they open to the public, which is supposed to happen this year.

Haven't seen any AV developers navigate complicated inner-city traffic in, let's say, SF at adequate speed.

The simulations I have seen so far ooze cherry-picked footage.

Which reminds me of the old Silicon Valley motto: fake it till you make it.

Or: simulate it till you can make it truly stick.

There are even some FSD beta videos of that.

There may be multiple limitations, including:

2) The AVs will work everywhere but will have some other ODD limitation, like a speed or weather restriction, and thus will be L4.
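A rough way to see the ODD point: in the SAE J3016 taxonomy, a system that needs no human fallback is L4 if its operational design domain (ODD) is limited in any way (freeway-only, a speed cap, a weather restriction) and L5 only if the ODD is unlimited. Here is a deliberately simplified toy sketch of that logic; the function `sae_level` and its parameters are hypothetical, and it lumps L0 to L2 together.

```python
def sae_level(driver_supervises: bool, needs_fallback_driver: bool, unlimited_odd: bool) -> int:
    """Toy classifier for SAE J3016 levels (hypothetical helper, heavily simplified).

    driver_supervises: a human must watch the road at all times (driver assistance).
    needs_fallback_driver: the system drives, but a human must take over on request.
    unlimited_odd: the system works on all roads, at all speeds, in all weather.
    """
    if driver_supervises:
        # L0 to L2 are not distinguished here; report the top of that range.
        return 2
    if needs_fallback_driver:
        # Conditional automation: the car drives, the human is the fallback.
        return 3
    # No human fallback: the ODD alone separates L4 from L5.
    return 5 if unlimited_odd else 4


# Driverless, but only on freeways or only in good weather: restricted ODD.
print(sae_level(False, False, unlimited_odd=False))  # 4
# Driverless everywhere, in all conditions: unrestricted ODD.
print(sae_level(False, False, unlimited_odd=True))  # 5
```

This is why "L5 on freeways" is really L4: any restriction on where or when the car can drive on its own keeps it out of L5.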