By "legally," I took you to mean, in context, that it was not, for example, stolen. The person in question legally borrowed the vehicle, even though they may drive it in an illegal manner.

Actually, no, because I specifically said you let them borrow it legally.

Lending it to a drunk driver would be illegal. That's why I specifically said that.

And I called that out again, specifically, in my second post when JB seemed unclear on this, which was over an hour before your reply above.

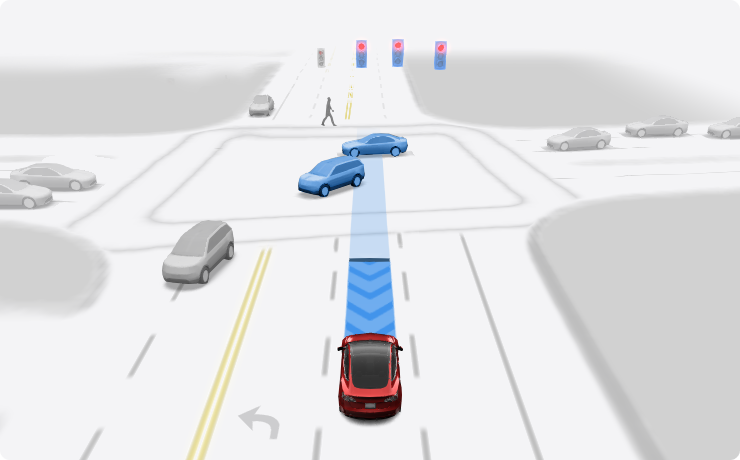

I can give a related example. If the owner knows the car drives like a drunk driver, or has other serious flaws, and lets it operate anyway, the owner can be held criminally liable. That's probably the most relevant point to what you were responding to:

The VW/Mobileye L4 example will be more instructive. The operator, owner, and vehicle manufacturer are all VW, but the software and processors come from Mobileye.

So if I own a Tesla robotaxi for personal use, I'm responsible if it does anything wrong.

There will probably be future combinations in which three or more parties may be responsible.
