Pedestrian detection systems don’t work very well, AAA finds

How did the cars do on the easy test?

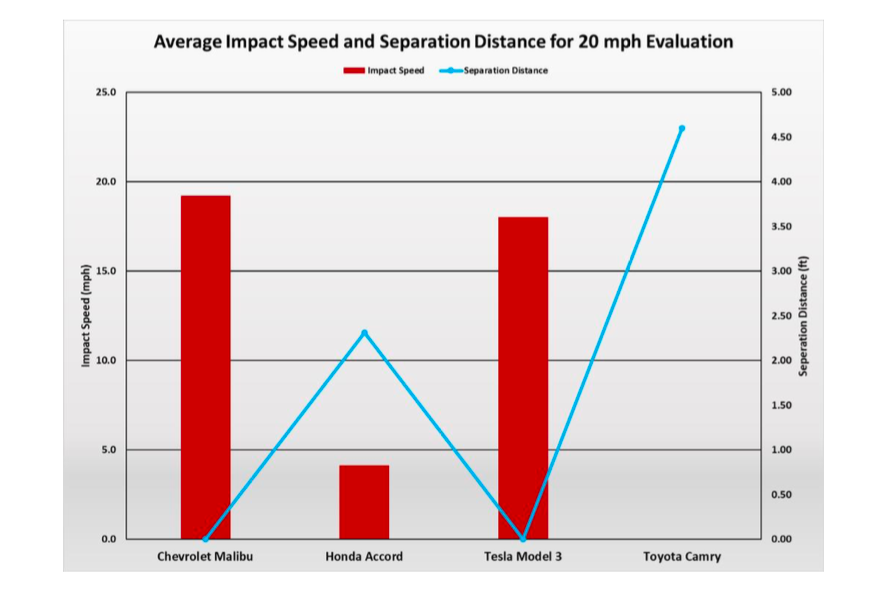

Unfortunately, the results of the tests were very much a mixed bag. The Chevy Malibu detected the adult pedestrian at 20mph (32km/h) an average of 2.1 seconds and 63 feet (19.2m) before impact, yet in each of five tests it failed to apply the brakes hard enough to reduce its speed significantly before the collision.

The Tesla Model 3 managed little better; it also hit the pedestrian dummy in each of five runs.

On average, the Tesla slowed by 2.8mph (4.5km/h) and alerted the driver 1.4 seconds and 41.7 feet (12.7m) before impact. In two runs, it did not brake at all, even though the system had detected the pedestrian dummy.

The Honda Accord performed better. Although it alerted the driver much closer to the pedestrian (a time-to-collision of 0.7 seconds, at a distance of 32 feet/9.7m), it avoided the impact entirely in three of five runs and slowed the car to 0.6mph (1km/h) in a fourth.

Best of all was the Toyota Camry. It gave a visual notification 1.2 seconds and 35.5 feet (10.8m) before impact, and it stopped completely before reaching the dummy in each of five runs.