Yes, there were a bunch of reviews. It was all discussed at length in the autonomy investor day thread at the time of the event.

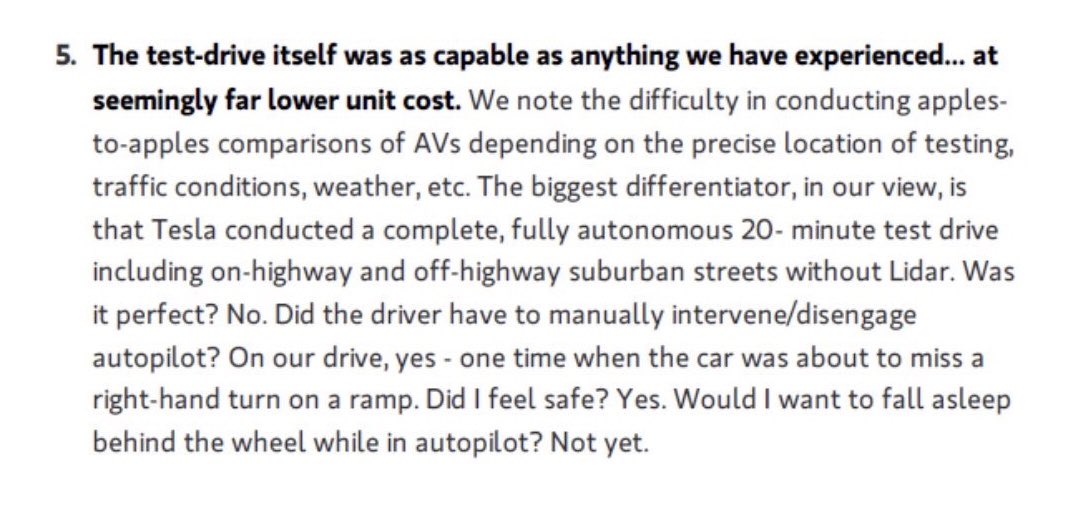

Here is a review by Morgan Stanley.

It is interesting to go back and revisit that thread. There was so much optimism among Tesla fans, including me, at the time that Tesla was really going to deliver FSD very soon. According to the Morgan Stanley quote above, Tesla showed them a decent 20-minute autonomous ride on the highway and some off-highway streets. Yet 10 months after autonomy investor day and that demo, Tesla still has not delivered any part of "City NOA", not even any meaningful "traffic light response". It is especially striking when compared to Cruise, which has demonstrated real autonomous driving.

So what happened? Now, I don't dismiss the demo ride. I think it really happened just as Morgan Stanley describes, although I do think Tesla picked a super easy route that would put their FSD in the best possible light. But I think it is clear now that Elon is grossly underestimating the work required to make autonomous driving happen and/or overestimating the ability of AI and machine learning to solve the problems. He sees what Tesla has and thinks Tesla can finish the features in 10 months when it actually requires a lot more time. He thinks lidar is doomed because he genuinely seems to think that machine learning will solve autonomous driving in a matter of months. And maybe Tesla's camera vision approach will eventually work in the long term, but it is obvious now that it will take a lot longer than Elon thinks. Based on what we see from Waymo, Cruise and others, it is clear that in the short term lidar is still very helpful, even essential, for safe, reliable autonomous driving.