....but that is just it. You can't fully describe an unknown instinctual action in a language. We and animals deal on the fly with unknown unknowns without thinking. AI and all software will have limits if they have no predefined information about an unknown-unknown situation, since they can't do accurate open improvisation based on missing info and make "I have a feeling that this will work" changes on the fly.

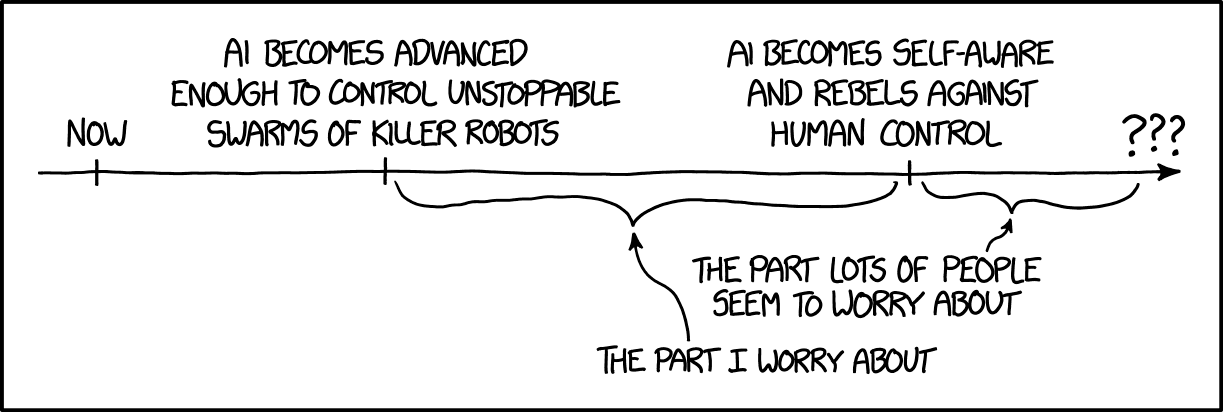

Again, I don't believe AI will (or could) become sentient and "rise up to destroy us," but even playing that game I still get a different outcome. Take the "rise up to destroy us" scenario (which I don't believe), in which we are like rats to the AIs. Again, there will be many, MANY, MANY, MANY AIs, so the AIs will look like competitors to each other. In that scenario all the AIs will be too busy trying to out-deal the other AIs to worry about the rats scurrying around their "feet".

Also, I believe in the power method. No power supply, no working AI. So pull the plug.

Of course, it is all an exercise in futility, since we as individuals don't have any real control. Speaking of control, just to be on the safe side I'm giving my TV remote control a deep massage and a hot mineral oil bath.