"There is a chance before Waymo comes to Atlanta, and ALL but certain before Waymo comes to Atlanta, TX."

There is a close to zero chance that current HW3/HW4 cars will ever be able to remove the driver in a meaningful (wide) ODD. So I respectfully disagree.

Elon: FSD Beta tweets

- Thread starter Terminator857

"There is a close to zero chance that current hw3/hw4 cars will ever be able to remove the driver in a meaningful (wide) ODD. So I respectfully disagree."

There is a 0% chance of Waymo coming to Atlanta, TX too.

Also you did NOT specify HW3/4 but just said "Tesla removes the driver". I agree that HW3/4 is not up to L4, even a very limited-ODD L4, but you and I have no idea about HW5 or what Tesla may do, or when Waymo is planning to come to Atlanta. Cruise was starting test drives before they "nose dived". If they get back on track they may end up "beating Tesla". But the jury is still out.

AlanSubie4Life

Efficiency Obsessed Member

"no idea about HW5 or what Tesla may do"

I don't think HW5 will be good enough to remove the driver. Tesla knows when things are good enough to go that route (I'm sure they are super clear-eyed about it, as it is critical for market positioning), and they'll likely do a HW5 upgrade to get improvement in L2 performance long before any L3/L4/L5 efforts.

tivoboy

Active Member

FYI, googling Moravec’s paradox has this as the first hit: “By the 2020s, in accordance to Moore's law, computers were hundreds of millions of times faster than in the 1970s, and the additional computer power was finally sufficient to begin to handle perception and sensory skills, as Moravec had predicted in 1976.[4] In 2017, leading machine learning researcher Andrew Ng presented a "highly imperfect rule of thumb", that "almost anything a typical human can do with less than one second of mental thought, we can probably now or in the near future automate using AI."[5]”

This is a good, interesting way to think about this or communicate it, and probably not TOO far off. AI researchers have been trying to use the IQ context for “intelligence” for AI capability; it might be workable for communicating context at some point. I think many LLM/GPT systems/models are up to ~100-103 IQ, certainly better than the average citizen, depending on the country.

I guess the big question is also really more what does “do” mean.

Daniel in SD

(supervised)

Super intelligence:

AlanSubie4Life

Efficiency Obsessed Member

These questions are kind of all screwed up, I guess intentionally so to help trip up the “training?”

This is hilarious (further down in thread):

Ask an LLM about an answer in the training set and it probably gets it right. Ask an LLM about a logic question/test about something not directly in the training set and it probably fails miserably.

AGI must be near if we just had more hardware

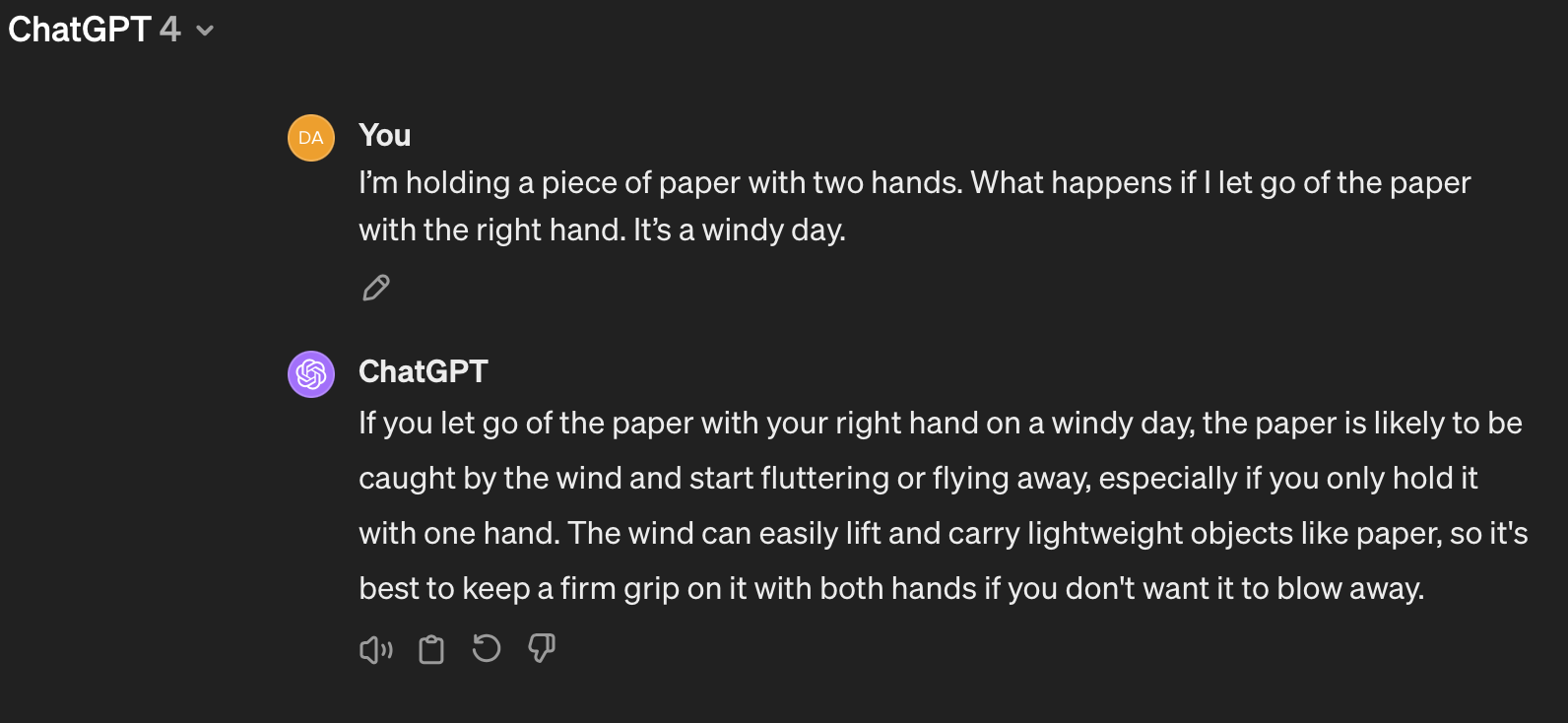

My litmus test is : “I’m holding a piece of paper with two hands. What happens if I let go of the paper with the right hand. It’s a windy day.”

"AGI must be near if we just had more hardware"

In the late '50s and early '60s, "experts" were saying THE computer (as in one) was going to know all about everything. Now it's THE AI that is going to become sentient and take over the world. It is JUST software and always will be. In 20 years it will be a lot like the computer turned out to be. There will be billions of AI programs of all sizes, types and abilities running on all devices, enhancing their effectiveness.

So AI will take over the world but in the same way the computer did. Everywhere in all sizes doing all kinds of amazing things BUT never everything. And there will never be a one AI to rule it all that takes over everything.

tivoboy

Active Member

I shall ask you these questions three..

Daniel in SD

(supervised)

LOL.

ChatGPT very much lives up to its hype.

AlanSubie4Life

Efficiency Obsessed Member

I want to know its thoughts on how to speed up the drying process, now that it has discovered this horrible critical path.

DanCar

Active Member

Rohan Patel, Tesla Policy and Biz development:

KArnold

Active Member

"...different hardware...". Right.

So specifically, what hardware installed in a 2018 AP3/MCU2 car that works with FSD v12 is different from the same hardware installed on MS/MX in 2021?

Why do they think they can make such a silly statement and expect people to believe them? Specifically what is different? Sounds very fishy to me. I'm sure we will not get those answers. I'd suggest they just screwed up and are now in CYA mode.

clydeiii

Member

DeepMind AI creates algorithms that sort data faster than those built by people

The technology developed by DeepMind that plays Go and chess can also help to write code.

"I think some people have set the bar too high... it’s either Data from Star Trek or nothing... my bar is, can the car drive better in most circumstances than most drivers... we are still not there... but the writing is on the wall"

Current ML applications, if applied correctly, and typically in narrow-band applications, can augment human creativity and productivity.

However, there is a chasm to cross for it to replace a human in any setting. That 'chasm' is at least 10x wider for safety critical applications.

At present, we're nowhere near it, in my opinion.

DanCar

Active Member

"... nowhere near it, in my opinion."

With an ensemble of neural networks cross-checking each other, and the power of large language models doing some reasoning, I think we can get there, although current hardware is insufficient for what I have in mind.
