Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.


Tesla.com - "Transitioning to Tesla Vision"

- Thread starter run-the-joules


qdeathstar

Completely Serious

That video is a whole lot of nothing... the car works fine. The fact that you can't see the lead car is offset by the larger following distance.

gearchruncher

Well-Known Member

It's about co-ordinating the validation/certification with manufacturing. Not easy, to say the least.

Literally all they are doing is leaving radar modules off some cars. They're leaving them on others, so clearly they support this variation on their line. There is no difficulty in coordinating, and Tesla builds variants all the time. The moment you are loading the new, verified, fully functional SW on the line, you stop installing the radar. You know, exactly like they did before the SW was ready, because they didn't need to actually coordinate SW and HW being aligned.

The difficult coordination here is that they wanted to remove radar before the SW was ready, and they hoped that while the cars were in transit they would get the SW done and could OTA it. But, like every other AP SW project at Tesla, that took much longer than they expected, and they needed to roll out a reduced-functionality version so they could actually sell the cars to customers this quarter. This is so clearly about either money or supply chain issues that it's hilarious to hear people argue, as the more likely explanation, that Tesla is simultaneously amazingly agile and awful at it.

rxlawdude

Active Member

You'd think. But I'll be happy when FSD for city streets is available, beta or not.

Why not?

They just removed something. They didn't add anything new.

Removing radar shows Elon's influence in a manner similar to SpaceX; there's a push to improve a non-redundant system to the point that it's more effective, efficient, and safer than an alternate redundant system. I believe Elon's approach and maximization of efficiency are meant to lead to faster innovation overall.

powertoold

Active Member

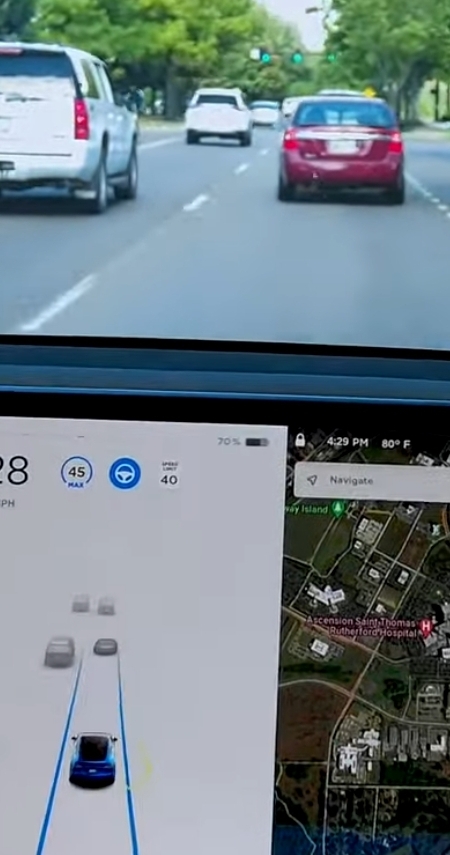

Tesla Vision is still showing the 2nd front car if only a small sliver of it is visible. This is very impressive IMO, and it bodes well for V9. I also find that cars on the visualization are much more stable. With my radar car at a stop, adjacent cars will float when there's movement near them:


gearchruncher

Well-Known Member

Tesla Vision is still showing the 2nd front car if only a small sliver of it is visible.

Just to point out, the cameras high up on the windshield can see much more of that car than you can from the interior camera angle. Yes, this is a good thing, and not a corner case, but it doesn't mean Tesla Vision (tm) can identify a vehicle from the "sliver" we see. We'd need to see the actual video feed to understand just how little of a car the vision system can understand as a car.

The real future is when the system can remember and intuit that a car is there even when it cannot be seen based on seeing it in the past. That seems like it's needed to be "superhuman."

gearchruncher

Well-Known Member

Removing radar shows Elon's influence in a manner similar to SpaceX; there's a push to improve a non-redundant system to the point that it's more effective, efficient, and safer than an alternate redundant system.

Elon has been saying since 2018 that vision only is the correct way to do autonomy. If this is his modus operandi, why did he wait until 2021 to remove the radar, and only from certain cars? Why has he let the team spend years on v8 and earlier FSD builds that used radar? Why did he have to ask the AP team whether AEB was still present in this release? The whole "Elon is so committed to first principles that he doesn't even consider other solutions" narrative doesn't work here. They had a whole blog post up for 5+ years explaining just how world-leading they were in processing radar...

What redundancy did he remove at SpaceX that other programs use? I have co-workers who came from the GNC team at SpaceX, and they use pretty traditional, redundant aerospace sensing to control those systems. But not multi-domain, because that hasn't traditionally been used either.


qdeathstar

Completely Serious

Waiting until now isn’t the issue... it’s the fact that they're removing it from some cars before vision is equal to the existing tech.

I bet pure vision autosteer will never work in the rain.....


Yoliber

Member

Tesla Vision is still showing the 2nd front car if only a small sliver of it is visible. This is very impressive IMO, and it bodes well for V9. I also find that cars on the visualization are much more stable. With my radar car at a stop, adjacent cars will float when there's movement near them:

Tbf it didn't recognize the SUV until the SUV started accelerating before the sedan. At the end of the clip you can see that it can't look past the other SUV. Does that matter? Personally I think it does. If that black SUV is distracted and rear-ends a vehicle, or dodges an obstacle at the last minute, I hope the Tesla can react fast enough.

qdeathstar

Completely Serious

Tbf it didn't recognize the SUV until the SUV started accelerating before the sedan. At the end of the clip you could see that it can't look past the other SUV. Does that matter? Personally I think it does. If that black SUV is distracted and rear ends a vehicle, or last minute dodges an obstacle, I hope the Tesla can react fast enough.

I’d say if the SUV and car are moving at the same speed, then it isn’t important to show them both on the screen or keep track of them separately...

EVNow

Well-Known Member

Tesla Vision is still showing the 2nd front car if only a small sliver of it is visible. This is very impressive IMO, and it bodes well for V9. I also find that cars on the visualization are much more stable. With my radar car at a stop, adjacent cars will float when there's movement near them:


Does anyone know what's happening to Autopark? IIRC that used radar.

CyberGus

Not Just a Member

Does anyone know what's happening to Autopark? IIRC that used radar.

The parking sensors are short-range sonar, not radar.

stopcrazypp

Well-Known Member

The parking sensors are short-range sonar, not radar.

Yep. My impression also. The "pings" that show up during parking are the short-range ultrasonic sensors. Radar is for long range.

gearchruncher

Well-Known Member

Yep. My impression also. The "pings" that show up during parking are the short range ultrasonic sensors. Radar is for long range.

For some definition of "long range." They did have to turn off smart summon, so this was using radar.

Tam

Well-Known Member

For some definition of "long range." They did have to turn off smart summon, so this was using radar.

Smart Summon's range is 200 feet, which rules out the sonars. What's left that can work at that range is the cameras and the radar.

Radar is very good and fast at detecting moving obstacles, so I guess it was used as well.

Now that radar is out of the picture, it's just a matter of time before the cameras catch up with the radar's job.


Just to point out, the cameras high up on the windshield can see much more of that car than you can from the interior camera angle. Yes, this is a good thing, and not a corner case, but it doesn't mean Tesla Vision (tm) can identify a vehicle from the "sliver" we see. We'd need to see the actual video feed to understand just how little of a car the vision system can understand as a car.

The real future is when the system can remember and intuit that a car is there even when it cannot be seen based on seeing it in the past. That seems like it's needed to be "superhuman."

I believe that the remembering and predicting part, i.e. "temporal continuity and extrapolation," is exactly the most exciting benefit of v9, and by extension of all the similar, though possibly hastily constructed, updates to various other AP features.

The improved stability seen in the visualization is probably evidence of this. There are ways to achieve a more stable display without really reflecting a fundamental improvement in NN confidence, but I don't believe they're just playing display games with us; it makes perfect sense that the temporal analysis would have this stabilizing effect.
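A rough sketch of what "temporal continuity and extrapolation" can look like, assuming a simple per-object tracker. This is not Tesla's implementation; the `Track` class, the `step` function, the alpha-beta constants, and the coast limit are all hypothetical. While a car is visible, its estimate is blended toward each new measurement (which also damps the jitter that makes cars "float" on screen); while it is hidden, the tracker keeps predicting it forward at its last estimated speed for a limited number of frames before dropping it.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning values, not Tesla's.
ALPHA = 0.5            # how strongly a new measurement corrects the position estimate
BETA = 0.1             # how strongly the same error corrects the speed estimate
MAX_COAST_FRAMES = 15  # frames a hidden car is kept alive (~0.5 s at an assumed 30 fps)


@dataclass
class Track:
    distance_m: float     # distance ahead of the ego car
    rel_speed_mps: float  # relative speed; negative means the gap is closing
    frames_unseen: int = 0


def step(track: Track, measured_m: Optional[float], dt: float) -> Optional[Track]:
    """Advance one frame. measured_m is None when the car is hidden."""
    # Predict: assume the car keeps its last estimated relative speed.
    predicted = track.distance_m + track.rel_speed_mps * dt
    if measured_m is None:
        # Hidden: coast on the prediction, but give up after too long unseen.
        if track.frames_unseen >= MAX_COAST_FRAMES:
            return None
        return Track(predicted, track.rel_speed_mps, track.frames_unseen + 1)
    # Visible: alpha-beta update blending the prediction with the measurement.
    residual = measured_m - predicted
    return Track(
        distance_m=predicted + ALPHA * residual,
        rel_speed_mps=track.rel_speed_mps + BETA * residual / dt,
        frames_unseen=0,
    )


if __name__ == "__main__":
    t = Track(distance_m=30.0, rel_speed_mps=-1.0)
    for _ in range(10):  # car hidden behind a truck for 10 frames
        t = step(t, None, dt=1 / 30)
    print(round(t.distance_m, 2), t.frames_unseen)  # 29.67 10
```

The display-stability point falls out of the same update: a single noisy measurement only moves the estimate by `ALPHA` times the error instead of snapping the car to the new position.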

Regarding the radar-sees-past discussion, I again want to caution everyone against over-hyping that radar capability and, by extension, mourning its removal too much. The radar cannot "see," identify, classify, and track a lead vehicle just because some microwaves scooched under/around the in-between car and the reflection scooched back again.

Yes, it's wonderful to be alerted that "there's something up there and it's suddenly stopping" - but that's about all the info you get. I'd be cautious about the idea that it would draw a box and track it reliably; that is probably done more effectively by the advanced camera NN catching glimpses, as a human driver would. And if the system is aware, from glimpses, that the immediately-in-front vehicle is following a lead vehicle too closely, then it should adjust its own following distance in defense against a possible pile-up - the same way a smart and experienced human would. I'm not denying that the radar could help out here, just saying that its output is far less detailed than people are implying.
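The defensive-following idea above can be sketched as a rule on time headway (gap divided by speed). Everything here is an illustrative assumption: the function names, the 2 s default, the 1 s "too close" threshold, and the 1 s extra buffer are hypothetical, and nothing in this thread says Tesla does it this way. If the car ahead is seen tailgating its own lead, the ego car widens its gap; if the second car isn't seen at all, it falls back to the default.

```python
from typing import Optional

# Hypothetical thresholds, in seconds of time headway.
BASE_HEADWAY_S = 2.0        # default gap to the car directly ahead
TAILGATE_THRESHOLD_S = 1.0  # the car ahead counts as "too close" below this
EXTRA_HEADWAY_S = 1.0       # extra buffer when a pile-up risk is spotted


def time_headway_s(gap_m: float, speed_mps: float) -> float:
    """Seconds for a follower at speed_mps to cover gap_m."""
    if speed_mps <= 0:
        return float("inf")  # stopped follower: never counts as tailgating
    return gap_m / speed_mps


def target_gap_m(ego_speed_mps: float, lead_speed_mps: float,
                 lead_to_second_gap_m: Optional[float]) -> float:
    """Gap the ego car should keep to the vehicle directly ahead."""
    headway = BASE_HEADWAY_S
    if lead_to_second_gap_m is not None:  # second car glimpsed
        lead_headway = time_headway_s(lead_to_second_gap_m, lead_speed_mps)
        if lead_headway < TAILGATE_THRESHOLD_S:
            # The car ahead is tailgating: leave room for a possible pile-up.
            headway += EXTRA_HEADWAY_S
    return headway * ego_speed_mps
```

With everyone at 30 m/s, a 20 m gap between the two cars ahead (about 0.67 s) pushes the ego car's target gap from 60 m to 90 m in this sketch.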

YieldFarmer

Analytic.eth

For some definition of "long range." They did have to turn off smart summon, so this was using radar.

According to a Tesla internal manual circa 2018, radar is used for:

- Auto Lane Change
- AEB
- Autosteer
- Forward Collision Warning
- Lane Departure Assist
- Overtake Assist
- Traffic Aware Cruise Control

Tesla Vision is still showing the 2nd front car if only a small sliver of it is visible. This is very impressive IMO, and it bodes well for V9. I also find that cars on the visualization are much more stable. With my radar car at a stop, adjacent cars will float when there's movement near them:

At 0:41, when the car is stopped, it sees a long line of cars in the left lane but just the single car in front. I don't think vision is doing anything special here. In the snapshots you posted, as others have mentioned, the higher vantage point of the cameras just lets them detect the car in front.


powertoold

Active Member

At 0:41, when the car is stopped, it sees a long line of cars in the left lane but just the single car in front. I don't think vision is doing anything special here. In the snapshots you posted, as others have mentioned, the higher vantage point of the cameras just lets them detect the car in front.

When stopped, the 2nd front car isn't relevant. The 2nd front car tends to show up when the car is in motion.
